Authors: Tianlin Liu, Anadi Chaman, David Belius, and Ivan Dokmanić

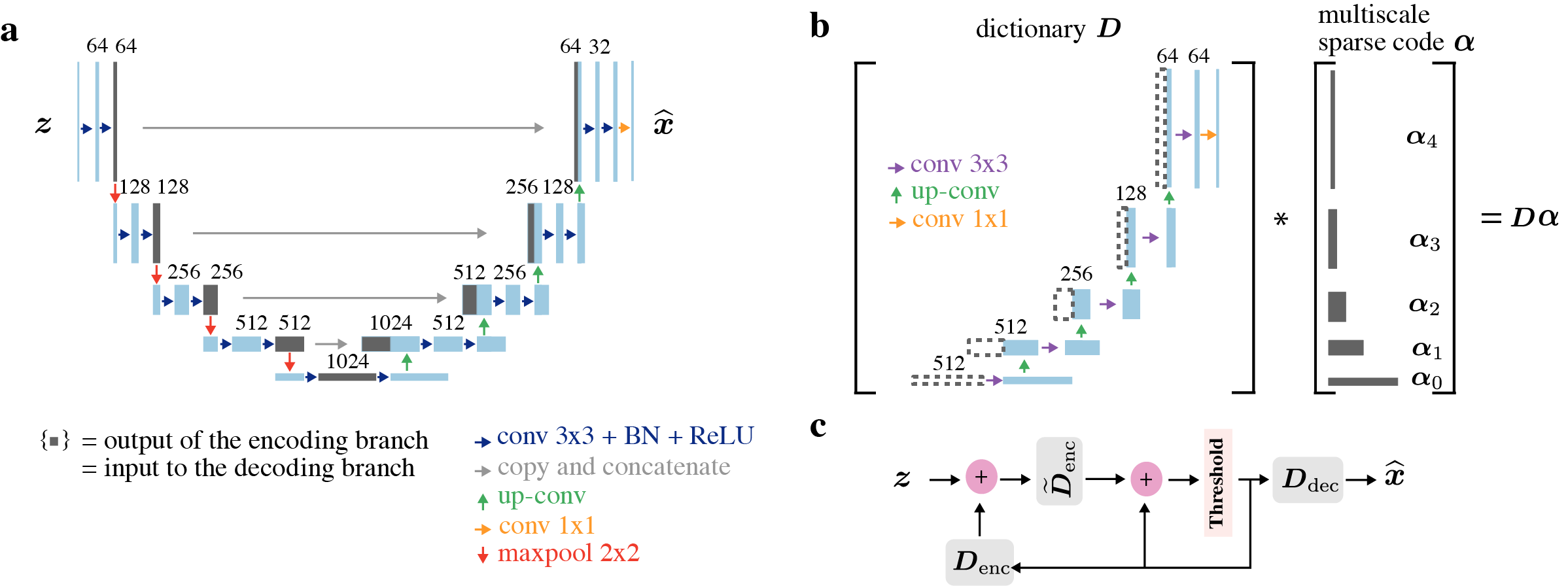

We present a multiscale dictionary model that is both simple and mathematically tractable. When trained using a traditional sparse-coding approach, our model performs comparably to the highly regarded U-Net. The figure above illustrates the U-Net (panel a), our multiscale dictionary model (panel b), and the forward pass of our model, computed by unrolled task-driven sparse coding (panel c).
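To give a rough feel for what "unrolled sparse coding" means, here is a minimal NumPy sketch of a fixed number of ISTA iterations. It is an illustration under simplifying assumptions, not the MUSC implementation: it uses a small dense random dictionary `D` instead of learned multiscale convolutional dictionaries, and the names `soft_threshold`, `unrolled_ista`, and `objective`, as well as the values of `lam` and `num_iters`, are hypothetical choices made for this example.

```python
import numpy as np


def soft_threshold(z, lam):
    """Elementwise soft-thresholding: the proximal operator of lam * ||.||_1."""
    return np.sign(z) * np.maximum(np.abs(z) - lam, 0.0)


def unrolled_ista(y, D, lam=0.1, num_iters=50):
    """Run a fixed number of ISTA steps for
    min_a 0.5 * ||y - D a||^2 + lam * ||a||_1.

    Each loop iteration plays the role of one "layer" of the unrolled network.
    """
    # Step size 1/L, where L is the squared spectral norm of D.
    step = 1.0 / np.linalg.norm(D, ord=2) ** 2
    a = np.zeros(D.shape[1])
    for _ in range(num_iters):
        grad = D.T @ (D @ a - y)  # gradient of the data-fidelity term
        a = soft_threshold(a - step * grad, step * lam)
    return a


def objective(y, D, a, lam):
    """Sparse-coding objective evaluated at the code a."""
    return 0.5 * np.sum((y - D @ a) ** 2) + lam * np.sum(np.abs(a))


# Toy example (all values below are made up for illustration).
rng = np.random.default_rng(0)
D = rng.standard_normal((20, 50))
D /= np.linalg.norm(D, axis=0)  # normalize dictionary atoms to unit norm
a_true = np.zeros(50)
a_true[[3, 17, 42]] = [1.0, -2.0, 1.5]
y = D @ a_true

lam = 0.05
a_hat = unrolled_ista(y, D, lam=lam, num_iters=200)
x_hat = D @ a_hat  # signal synthesized from the sparse code
```

In a task-driven setting, the dictionary would be treated as a trainable parameter and updated by backpropagating a reconstruction loss through these unrolled iterations; the sketch above covers only the forward pass.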

If you're interested in experimenting with MUSC, we've prepared two Google Colab notebooks that are very easy to use. There's no need to install anything, and you can run them directly in your browser:

- Evaluate and visualize a trained MUSC model on the LoDoPaB-CT dataset (Leuschner et al., 2021)
- Train a small MUSC model from scratch on the ellipses dataset (Jin et al., 2017)

If you want to train the model on your own machine, you can use the provided conda environment.

Create the environment from the `environment.yml` file:

    conda env create -f environment.yml

To train the MUSC model, use the Python scripts in the `train_src` folder.

The checkpoints for a MUSC model trained on the LoDoPaB-CT dataset are available for download on Google Drive. You can find them here.

The `notebooks/evaluate_musc_ct.ipynb` notebook can be used to evaluate the model on the test set.

@ARTICLE{Liu2022learning,
  author={Liu, Tianlin and Chaman, Anadi and Belius, David and Dokmanić, Ivan},
  journal={IEEE Transactions on Computational Imaging},
  title={Learning Multiscale Convolutional Dictionaries for Image Reconstruction},
  year={2022},
  volume={8},
  pages={425-437}}