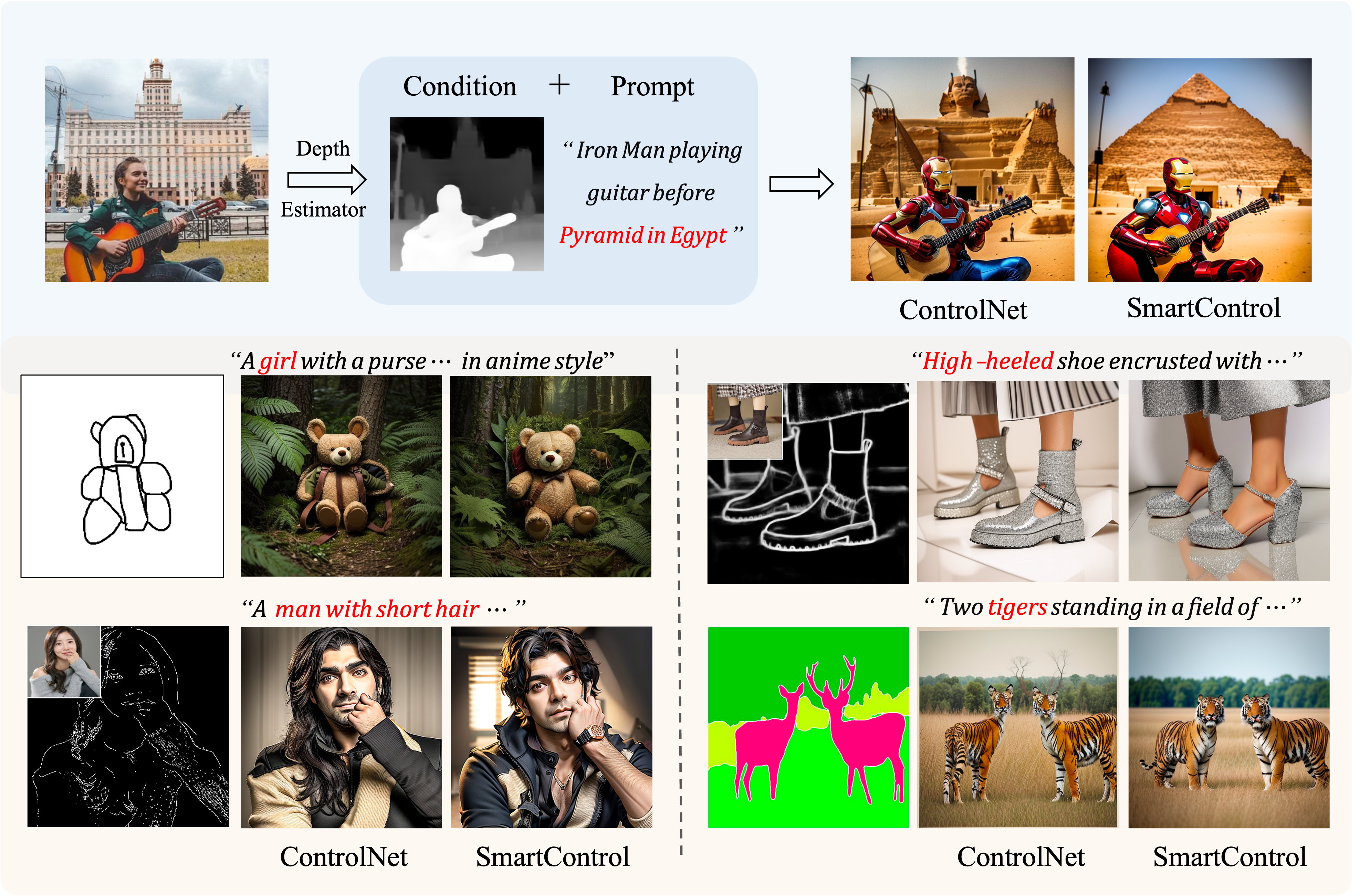

To handle disagreements between text prompts and rough visual conditions, we propose SmartControl, a text-to-image generation method designed to align with the text prompt while adaptively keeping useful information from the visual condition. Specifically, we introduce a control scale predictor that identifies regions where the text prompt and the visual condition conflict and predicts a spatially adaptive scale based on the degree of conflict. The predicted control scale is then used to adaptively integrate information from the rough condition and the text prompt, enabling flexible generation.
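The fusion step described above can be pictured with a small sketch. It assumes the predicted scale is a per-pixel map with values in [0, 1] that multiplies the ControlNet residual before it is added to the backbone feature. The function name `fuse_with_control_scale` and the nested-list feature maps are illustrative only and are not taken from the released code.

```python
from typing import List

Grid = List[List[float]]


def fuse_with_control_scale(backbone: Grid, control: Grid, alpha: Grid) -> Grid:
    """Blend a control residual into backbone features with a per-pixel scale.

    Assumption: alpha[i][j] lies in [0, 1]. A value of 1 keeps the visual
    condition at full strength; 0 ignores it where it conflicts with the prompt.
    """
    if not (len(backbone) == len(control) == len(alpha)):
        raise ValueError("all maps must have the same height")
    fused = []
    for b_row, c_row, a_row in zip(backbone, control, alpha):
        if not (len(b_row) == len(c_row) == len(a_row)):
            raise ValueError("all maps must have the same width")
        # Element-wise: feature + scale * control residual
        fused.append([b + a * c for b, c, a in zip(b_row, c_row, a_row)])
    return fused
```

With a uniform scale of 1 this reduces to plain residual addition, so the spatial map only changes the result in regions where the predictor lowers the scale.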

- [2024/7/7] 🔥 Added the training datasets and training code.

- [2024/3/31] 🔥 We release the code and models for the depth condition.

pip install -r requirements.txt

# please install diffusers==0.25.1 to match our forward implementation
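Because the code depends on one specific diffusers release, it can help to confirm the installed version before running anything. The following is a minimal sketch using only the standard library; the helper names `installed_version` and `version_matches` are hypothetical and not part of this repository.

```python
from importlib import metadata
from typing import Optional

REQUIRED_DIFFUSERS = "0.25.1"  # version named in the install note above


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of a package, or None if it is absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def version_matches(found: Optional[str], required: str = REQUIRED_DIFFUSERS) -> bool:
    """True only when the found version string equals the required one exactly."""
    return found is not None and found.strip() == required


if __name__ == "__main__":
    found = installed_version("diffusers")
    status = "OK" if version_matches(found) else "mismatch"
    print(f"diffusers: found={found}, required={REQUIRED_DIFFUSERS} ({status})")
```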

If you want to train, you can visit this page to download our dataset. Otherwise, you can directly refer to the testing section.

The data is structured as follows:

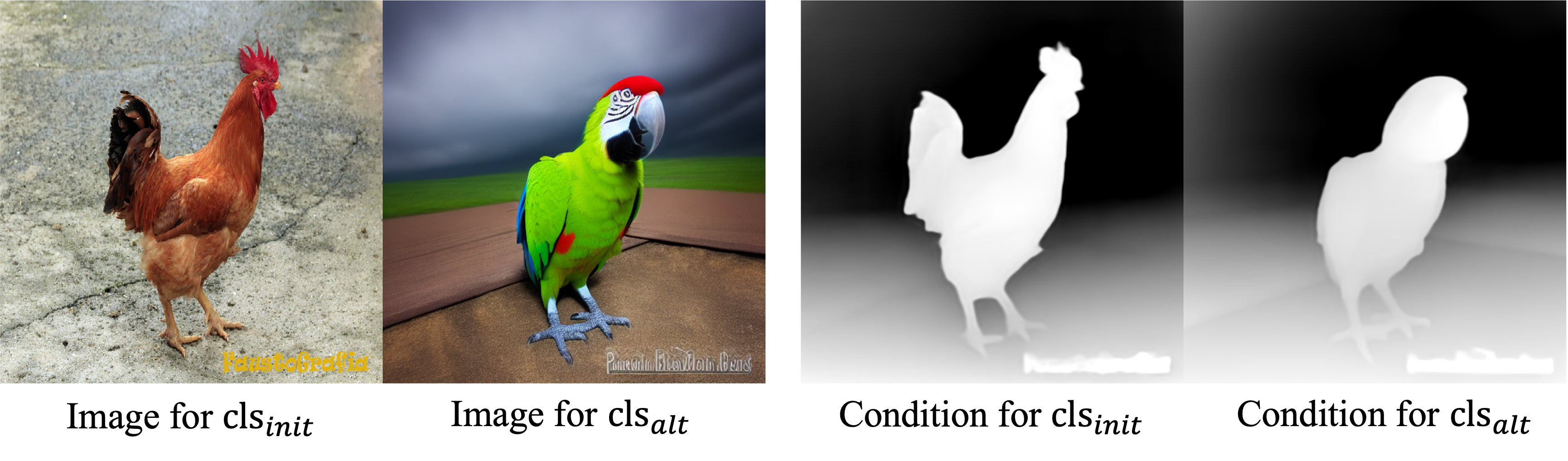

- Image for <$\texttt{cls}_{init}$> vs. Image for <$\texttt{cls}_{alt}$>

- Condition for <$\texttt{cls}_{init}$> vs. Condition for <$\texttt{cls}_{alt}$>
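One way to represent a record with these four items in code is sketched below. The class and field names are hypothetical and simply mirror the list above; the actual file layout of the released dataset may differ.

```python
from dataclasses import dataclass
from pathlib import Path
from typing import List


@dataclass(frozen=True)
class PairedSample:
    """One training record: an image and a condition for each of two classes.

    Field names are hypothetical; they mirror the four items listed above.
    """
    image_init: Path       # image for cls_init
    image_alt: Path        # image for cls_alt
    condition_init: Path   # condition (for example a depth map) for cls_init
    condition_alt: Path    # condition for cls_alt

    def missing_files(self) -> List[Path]:
        """Return the paths in this record that do not exist on disk."""
        paths = [self.image_init, self.image_alt,
                 self.condition_init, self.condition_alt]
        return [p for p in paths if not p.is_file()]
```

Calling `missing_files()` on each record before training gives an early, readable error instead of a failure deep inside the data loader.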

You can download our control scale predictor models from here. To run the demo, you should also download the following models:

- stabilityai/sd-vae-ft-mse

- SG161222/Realistic_Vision_V5.1_noVAE

- ControlNet models

- realisticvision-negative-embedding
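Before launching the demo, a quick check that every downloaded model is where the code will look for it can save a failed run. The sketch below assumes a hypothetical local layout under `models/`; the directory names and the helper `find_missing` are illustrative and should be adjusted to wherever the checkpoints were actually placed.

```python
from pathlib import Path
from typing import Dict, List

# Hypothetical local layout; adjust to wherever the checkpoints were downloaded.
EXPECTED_MODELS: Dict[str, str] = {
    "vae": "models/sd-vae-ft-mse",
    "base": "models/Realistic_Vision_V5.1_noVAE",
    "controlnet": "models/controlnet",
    "negative_embedding": "models/realisticvision-negative-embedding",
}


def find_missing(root: Path, expected: Dict[str, str] = EXPECTED_MODELS) -> List[str]:
    """Return the labels whose expected path does not exist under root."""
    return [label for label, rel in expected.items() if not (root / rel).exists()]


if __name__ == "__main__":
    missing = find_missing(Path("."))
    print("all models found" if not missing else f"missing: {', '.join(missing)}")
```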

- If you are interested in SmartControl, you can refer to smartcontrol_demo.

- To integrate SmartControl with IP-Adapter, please download the IP-Adapter models and refer to smartcontrol_ipadapter_demo.

# download IP-Adapter models

cd SmartControl

git lfs install

git clone https://huggingface.co/h94/IP-Adapter

mv IP-Adapter/models models

Our training code is based on the official ControlNet code. To train on your datasets:

- Download the training datasets and place them in the `train\data` directory.

- Download the pre-trained models and place them in the `train\models` directory.

To start the training, run the following commands:

cd train

python tutorial_train.py

Our code is built upon ControlNet and IP-Adapter.

If you find SmartControl useful for your research and applications, please cite using this BibTeX:

@article{liu2024smartcontrol,

title={SmartControl: Enhancing ControlNet for Handling Rough Visual Conditions},

author={Liu, Xiaoyu and Wei, Yuxiang and Liu, Ming and Lin, Xianhui and Ren, Peiran and Xie, Xuansong and Zuo, Wangmeng},

journal={arXiv preprint arXiv:2404.06451},

year={2024}

}