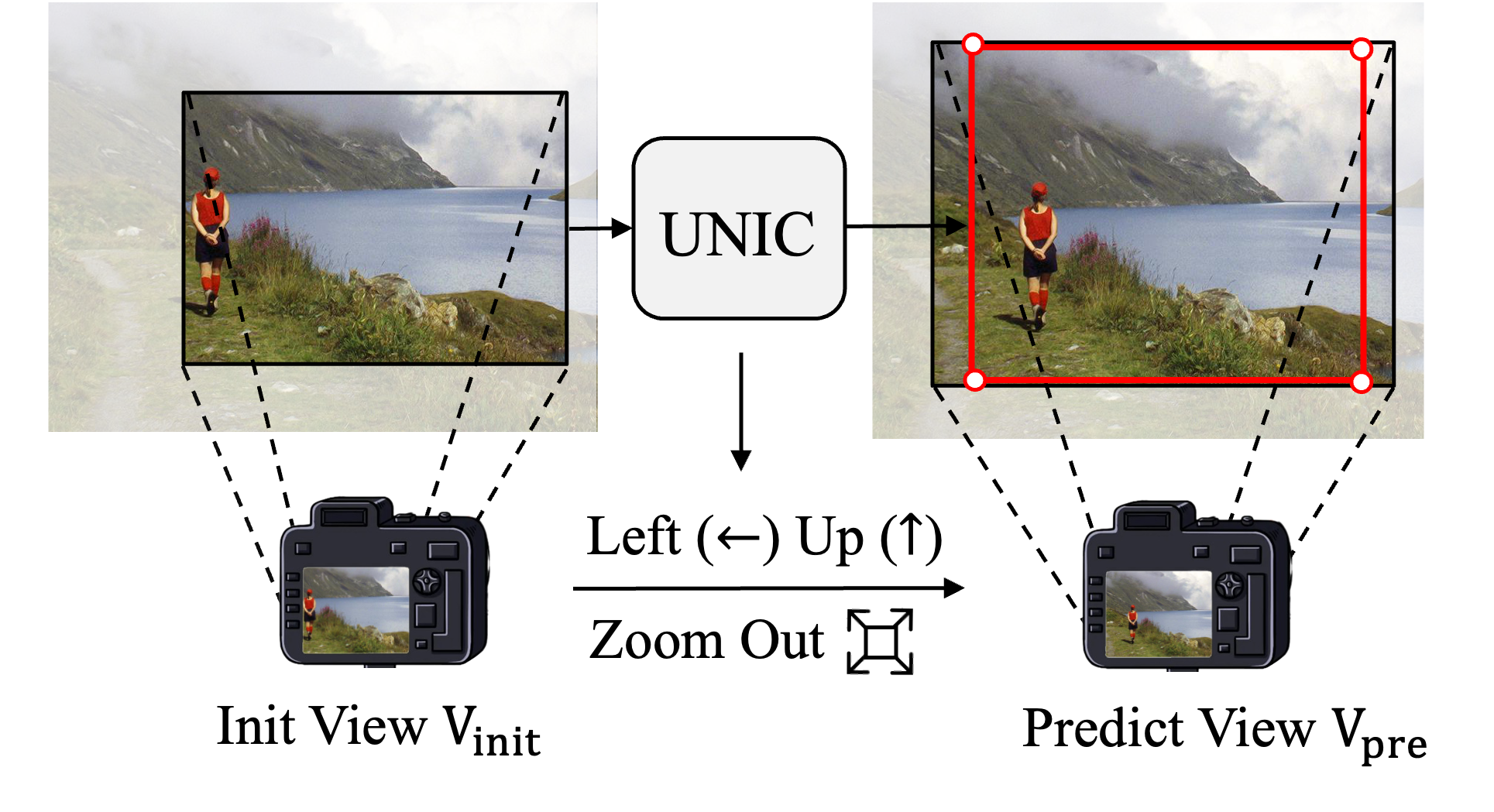

We present a joint framework for both unbounded recommendation of camera view and image composition (i.e., UNIC). In this way, the cropped image is a sub-image of the image acquired by the predicted camera view, and thus can be guaranteed to be real and consistent in image quality. Specifically, our framework takes the current camera preview frame as input and recommends a view adjustment, which may include operations not limited by the image borders, such as zooming in or out and moving the camera. To improve the accuracy of view adjustment prediction, we further extend the field of view by feature extrapolation. After one or more view adjustments, our method converges and outputs both a camera view and a bounding box giving the recommended image composition. Extensive experiments on datasets constructed from existing image cropping datasets show the effectiveness of UNIC in unbounded recommendation of camera view and image composition.
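The iterative procedure described above can be illustrated with a small loop. This is a minimal sketch under our own assumptions, not the UNIC implementation: the `View` rectangle, the `(dx, dy, scale)` adjustment encoding, the tolerance `tol`, and the `predict` callable (a stand-in for the trained network) are all hypothetical. It also shows why the recommended crop is always a sub-image of the final view: the box is expressed in coordinates normalised to that view.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

# Hypothetical encodings chosen for this sketch (not taken from UNIC):
# an adjustment is (dx, dy, scale), where dx/dy shift the view centre by a
# fraction of the current view size, and scale > 1 zooms out, < 1 zooms in.
Adjustment = Tuple[float, float, float]
# A crop box (x, y, w, h) normalised to the current view, so it lies inside it.
Box = Tuple[float, float, float, float]


@dataclass(frozen=True)
class View:
    """Hypothetical camera view: a rectangle in a fixed scene coordinate frame."""
    cx: float
    cy: float
    w: float
    h: float


def apply_adjustment(view: View, adj: Adjustment) -> View:
    """Move and zoom the view; nothing restricts it to the current frame borders."""
    dx, dy, scale = adj
    return View(view.cx + dx * view.w, view.cy + dy * view.h,
                view.w * scale, view.h * scale)


def box_to_scene(view: View, box: Box) -> Box:
    """Map a view-normalised crop box into scene coordinates."""
    x, y, w, h = box
    left = view.cx - view.w / 2
    top = view.cy - view.h / 2
    return (left + x * view.w, top + y * view.h, w * view.w, h * view.h)


def recommend(view: View,
              predict: Callable[[View], Tuple[Adjustment, Box]],
              max_steps: int = 10,
              tol: float = 1e-3) -> Tuple[View, Box, int]:
    """Apply predicted adjustments until the predictor asks for (almost) none.

    Returns the final view, the crop box normalised to that view, and the
    number of adjustments that were applied.
    """
    for step in range(max_steps):
        adj, box = predict(view)
        dx, dy, scale = adj
        if abs(dx) < tol and abs(dy) < tol and abs(scale - 1.0) < tol:
            return view, box, step  # converged: keep the current view
        view = apply_adjustment(view, adj)
    _, box = predict(view)  # step budget exhausted: crop inside the last view
    return view, box, max_steps


# Toy predictor for illustration only (hypothetical): it always points straight
# at a fixed target view and proposes the same crop inside any view it sees.
TARGET = View(0.5, 0.5, 0.6, 0.6)


def toy_predict(view: View) -> Tuple[Adjustment, Box]:
    adj = ((TARGET.cx - view.cx) / view.w,
           (TARGET.cy - view.cy) / view.h,
           TARGET.w / view.w)
    return adj, (0.1, 0.2, 0.5, 0.5)


final_view, crop, steps = recommend(View(0.3, 0.4, 0.8, 0.8), toy_predict)
print(steps, box_to_scene(final_view, crop))
```

With this toy predictor the loop applies a single move-and-zoom-in adjustment and then stops, because the next prediction is (almost) the identity adjustment. A real model would see the new preview frame after each adjustment, which is why several iterations may be needed.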

We construct an unbounded image composition dataset based on GAICD and adopt a cDETR-like encoder-decoder to train UNIC. Before training or testing, you need to download the GAIC dataset and obtain annotations in the COCO format.

- GAIC Dataset: Visit this page to download the GAIC Dataset.

- Annotations in COCO format: Visit this page to download `instances_train.json` and `instances_test.json` and place them in the `GAIC/annotations` directory.

- init_view: The file for initial views has been placed in the `init_view` directory.
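Since the annotation files follow the COCO format, a quick sanity check after placing them is to load them and count the boxes per image. The sketch below relies only on the standard COCO keys (`images`, `annotations`, `bbox`, `image_id`, `file_name`); how UNIC encodes views or crops within those boxes is not described here, so the helpers `parse_coco_boxes` and `load_coco_boxes` are hypothetical, and the repository's own data loader remains the authoritative reader.

```python
import json
from collections import defaultdict
from pathlib import Path
from typing import Dict, List, Tuple

XYXY = Tuple[float, float, float, float]


def parse_coco_boxes(coco: dict) -> Dict[str, List[XYXY]]:
    """Group COCO `bbox` entries by image file name (hypothetical helper).

    COCO stores boxes as [x, y, width, height]; here they are converted to
    (x1, y1, x2, y2) corners. Images without annotations are omitted.
    """
    file_names = {img["id"]: img["file_name"] for img in coco["images"]}
    boxes: Dict[str, List[XYXY]] = defaultdict(list)
    for ann in coco["annotations"]:
        x, y, w, h = ann["bbox"]
        boxes[file_names[ann["image_id"]]].append((x, y, x + w, y + h))
    return dict(boxes)


def load_coco_boxes(path: Path) -> Dict[str, List[XYXY]]:
    """Read a COCO-style JSON file and group its boxes by image."""
    with open(path, encoding="utf-8") as f:
        return parse_coco_boxes(json.load(f))


if __name__ == "__main__":
    # Directory layout taken from the instructions above.
    for split in ("train", "test"):
        ann_file = Path("GAIC/annotations") / f"instances_{split}.json"
        if not ann_file.exists():
            print(f"missing {ann_file}")
            continue
        boxes = load_coco_boxes(ann_file)
        n_boxes = sum(len(b) for b in boxes.values())
        print(f"{ann_file}: {len(boxes)} images, {n_boxes} boxes")
```

Running it from the repository root reports any missing annotation file instead of failing, which makes a misplaced `GAIC/annotations` directory easy to spot before launching training.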

To train on your datasets, run:

```bash
bash train.sh
```

We also provide a pretrained model here so that you can skip training and test our method directly.

To evaluate on your datasets, run:

```bash
bash test.sh
```

Our code is built upon pytorch-ema and ConditionalDETR.