
SUOD (Scalable Unsupervised Outlier Detection) is an acceleration framework for large-scale unsupervised outlier detector training and prediction. Anomaly detection is often formulated as an unsupervised problem because ground truth is expensive to acquire. To compensate for the unstable nature of unsupervised algorithms, practitioners often build a large number of models for further combination and analysis, e.g., taking the average or a majority vote. However, this poses scalability challenges on large, high-dimensional datasets, especially for proximity-based models operating in Euclidean space.
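As a minimal sketch of the combination step described above, the snippet below averages outlier scores and takes a majority vote over binary labels from several detectors. The function names and the toy numbers are illustrative assumptions, not part of SUOD's API; in practice, scores from different detectors usually need to be standardized before they are averaged.

```python
from statistics import mean


def average_scores(score_lists):
    """Average per-sample outlier scores across detectors.

    score_lists: one list of scores per detector, all of the same length.
    """
    return [mean(sample_scores) for sample_scores in zip(*score_lists)]


def majority_vote(label_lists):
    """Flag a sample as an outlier (1) if more than half of the detectors do."""
    n_detectors = len(label_lists)
    return [int(sum(votes) * 2 > n_detectors) for votes in zip(*label_lists)]


# Toy outputs from three hypothetical detectors on four samples
scores = [
    [0.1, 0.9, 0.2, 0.8],
    [0.2, 0.7, 0.1, 0.3],
    [0.0, 0.8, 0.3, 0.4],
]
labels = [
    [0, 1, 0, 1],
    [0, 1, 0, 0],
    [0, 1, 0, 0],
]

combined_scores = average_scores(scores)
combined_labels = majority_vote(labels)
print(combined_scores, combined_labels)
```

Every additional detector adds one more row to these lists, which is why training and scoring cost grows quickly as the ensemble gets larger.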

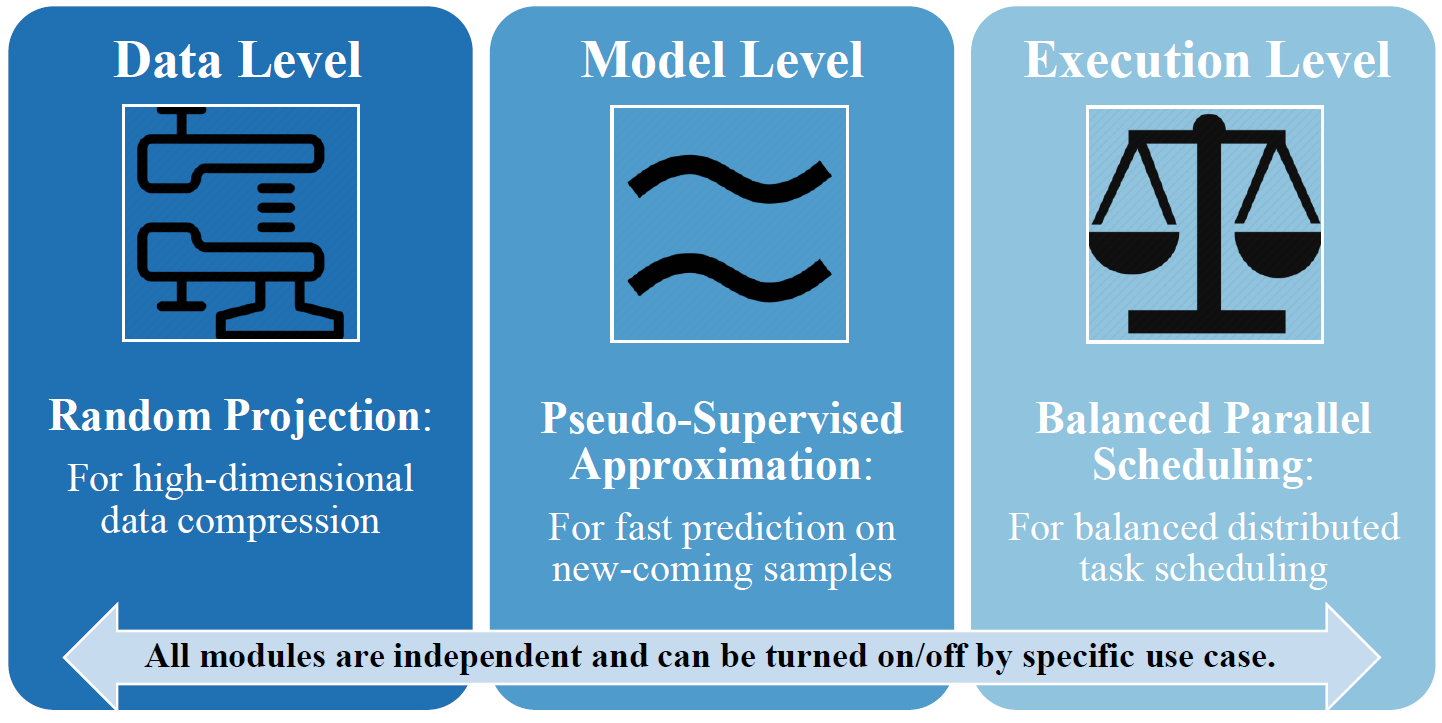

SUOD is therefore proposed to address this challenge at three complementary levels: random projection (data level), pseudo-supervised approximation (model level), and balanced parallel scheduling (system level). The key focus is to accelerate training and prediction when a large number of anomaly detectors are present, while preserving prediction capacity. Since its inception in January 2019, SUOD has been used in various academic research projects and industry applications, including PyOD [2] and IQVIA medical claim analysis. It can be especially useful for outlier ensembles that rely on a large number of base estimators.
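To illustrate the data-level idea, here is a minimal NumPy sketch of a Gaussian random projection in the spirit of the Johnson-Lindenstrauss lemma [1]. The helper `random_project` and the toy data are assumptions made for this example; SUOD's own projection code may differ in both the projection type and the scaling.

```python
import numpy as np


def random_project(X, n_components, seed=0):
    """Project X (n_samples x n_features) onto n_components dimensions
    using a Gaussian random matrix."""
    rng = np.random.default_rng(seed)
    n_features = X.shape[1]
    # Entries are drawn from N(0, 1/n_components) so that squared
    # distances are preserved in expectation.
    W = rng.normal(0.0, 1.0 / np.sqrt(n_components),
                   size=(n_features, n_components))
    return X @ W


rng = np.random.default_rng(42)
X = rng.normal(size=(200, 100))  # toy data: 200 samples, 100 features
X_low = random_project(X, n_components=50)

# Compare one pairwise distance before and after projection
d_before = np.linalg.norm(X[0] - X[1])
d_after = np.linalg.norm(X_low[0] - X_low[1])
print(X_low.shape, d_before, d_after)
```

The two distances should be close but not identical. Proximity-based detectors then operate on the lower-dimensional `X_low`, which is where the speed-up would come from.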

SUOD is featured for:

- Unified APIs, detailed documentation, and examples for ease of use.

- Optimized performance with JIT and parallelization when possible, using numba and joblib.

- Fully compatible with the models in PyOD.

- Customizable modules and flexible design: each module may be turned on/off or totally replaced by custom functions.

API Demo:

    from pyod.models.hbos import HBOS
    from pyod.models.knn import KNN
    from pyod.models.lof import LOF
    from pyod.models.ocsvm import OCSVM
    from pyod.models.pca import PCA
    from suod.models.base import SUOD

    # X_train, X_test, and contamination (the expected outlier fraction)
    # are assumed to be defined beforehand

    # initialize a set of base outlier detectors to train and predict on
    base_estimators = [
        LOF(n_neighbors=5, contamination=contamination),
        LOF(n_neighbors=15, contamination=contamination),
        LOF(n_neighbors=25, contamination=contamination),
        HBOS(contamination=contamination),
        PCA(contamination=contamination),
        OCSVM(contamination=contamination),
        KNN(n_neighbors=5, contamination=contamination),
        KNN(n_neighbors=15, contamination=contamination),
        KNN(n_neighbors=25, contamination=contamination)]

    # initialize a SUOD model; random projection and balanced parallel
    # scheduling are on, model approximation is off
    model = SUOD(base_estimators=base_estimators, n_jobs=6,  # number of workers
                 rp_flag_global=True,  # global flag for random projection
                 bps_flag=True,  # global flag for balanced parallel scheduling
                 approx_flag_global=False,  # global flag for model approximation
                 contamination=contamination)

    model.fit(X_train)  # fit all models with X
    model.approximate(X_train)  # conduct model approximation if it is enabled
    predicted_labels = model.predict(X_test)  # predict labels
    predicted_scores = model.decision_function(X_test)  # predict scores
    predicted_probs = model.predict_proba(X_test)  # predict outlying probability
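The demo above assumes that `X_train`, `X_test`, and `contamination` already exist. One way to obtain them for a quick experiment is sketched below with NumPy only. The helper `make_toy_data` is hypothetical and not part of SUOD or PyOD; the labels it returns are only useful for evaluating the detectors afterwards.

```python
import numpy as np


def make_toy_data(n_samples, n_features, contamination, seed=0):
    """Return (X, y): Gaussian inliers plus uniformly scattered outliers.

    y is 0 for inliers and 1 for outliers.
    """
    rng = np.random.default_rng(seed)
    n_outliers = int(round(n_samples * contamination))
    n_inliers = n_samples - n_outliers
    inliers = rng.normal(0.0, 1.0, size=(n_inliers, n_features))
    outliers = rng.uniform(-6.0, 6.0, size=(n_outliers, n_features))
    X = np.vstack([inliers, outliers])
    y = np.concatenate([np.zeros(n_inliers, dtype=int),
                        np.ones(n_outliers, dtype=int)])
    order = rng.permutation(n_samples)  # shuffle so outliers are not at the end
    return X[order], y[order]


contamination = 0.1  # assumed fraction of outliers
X_train, y_train = make_toy_data(1000, 20, contamination, seed=0)
X_test, y_test = make_toy_data(500, 20, contamination, seed=1)
print(X_train.shape, X_test.shape, int(y_train.sum()))
```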

A preliminary version (accepted at the AAAI-20 Security Workshop) can be accessed on arXiv. The extended version (under submission at a major ML systems conference) is also available.

If you use SUOD in a scientific publication, we would appreciate citations to the following paper:

    @inproceedings{zhao2020suod,
      author    = {Zhao, Yue and Ding, Xueying and Yang, Jianing and Bai, Haoping},
      title     = {{SUOD}: Toward Scalable Unsupervised Outlier Detection},
      booktitle = {Workshops at the Thirty-Fourth AAAI Conference on Artificial Intelligence},
      year      = {2020}
    }

Yue Zhao, Xueying Ding, Jianing Yang, Haoping Bai, "SUOD: Toward Scalable Unsupervised Outlier Detection". Workshops at the Thirty-Fourth AAAI Conference on Artificial Intelligence, 2020.


It is recommended to use pip for installation. Please make sure the latest version is installed, as suod is updated frequently:

    pip install suod            # normal install
    pip install --upgrade suod  # or update if needed
    pip install --pre suod      # or include pre-release version for new features

Alternatively, you could clone the repository and install it with pip:

    git clone https://github.com/yzhao062/suod.git
    cd suod
    pip install .

Required Dependencies:

- Python 3.5, 3.6, or 3.7

- joblib

- numpy>=1.13

- pandas (optional for building the cost forecast model)

- pyod

- scipy>=0.19.1

- scikit_learn>=0.19.1
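As a quick sanity check after installation, the following sketch reports which of the required packages cannot be found in the current environment. The helper `missing_packages` is an illustrative name, and the check only looks at whether a package is importable, not at its version.

```python
from importlib.util import find_spec


def missing_packages(import_names):
    """Return the names from import_names that cannot be found for import."""
    return [name for name in import_names if find_spec(name) is None]


# Import names of the required dependencies; scikit-learn is imported as "sklearn"
required = ["joblib", "numpy", "pyod", "scipy", "sklearn"]
missing = missing_packages(required)
print("Missing packages:", missing if missing else "none")
```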

Note on Python 2: Maintenance of Python 2.7 ended on January 1, 2020 (see official announcement). To stay consistent with this change and with SUOD's dependencies, e.g., scikit-learn, SUOD only supports Python 3.5+. We encourage you to use Python 3.5 or newer for the latest functions and bug fixes. More information can be found at Moving to require Python 3.

Full API Reference: (https://suod.readthedocs.io/en/latest/api.html).

- fit(X, y): Fit estimator. y is optional for unsupervised methods.

- approximate(X): Use supervised models to approximate unsupervised base detectors. Fit should be invoked first.

- predict(X): Predict on a particular sample once the estimator is fitted.

- predict_proba(X): Predict the probability of a sample belonging to each class once the estimator is fitted.
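The idea behind `approximate(X)` can be sketched with plain scikit-learn: fit an unsupervised detector, then train a supervised regressor to reproduce its scores so that new samples can be scored by the regressor. This is an illustration under assumptions (LOF as the detector, a random forest as the regressor, synthetic data) and does not reproduce SUOD's internal code.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.neighbors import LocalOutlierFactor

rng = np.random.default_rng(0)
X_train = rng.normal(size=(300, 5))  # synthetic training data
X_test = rng.normal(size=(50, 5))    # synthetic test data

# 1. Fit an unsupervised detector and collect its training scores
#    (negated so that larger values mean "more outlying").
lof = LocalOutlierFactor(n_neighbors=20)
lof.fit(X_train)
train_scores = -lof.negative_outlier_factor_

# 2. Fit a supervised regressor that maps features to those scores.
approximator = RandomForestRegressor(n_estimators=50, random_state=0)
approximator.fit(X_train, train_scores)

# 3. Score new samples with the regressor instead of the detector.
approx_test_scores = approximator.predict(X_test)
print(approx_test_scores[:5])
```

Whether the regressor is actually faster or as accurate as the original detector depends on the detector and the data, which is presumably why the approximation can be switched on or off.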

All three modules can be executed separately, and the demo code is in /examples/module_examples/{M1_RP, M2_BPS, and M3_PSA}. For instance, you could navigate to /M1_RP/demo_random_projection.py. All demo scripts are named "demo_*.py".

The examples for the full framework can be found under the /examples folder; run "demo_base.py" for a simplified example and "demo_full.py" for a full example.

Note that the best performance is usually achieved when multiple cores are available.
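With several cores, the way models are distributed across workers matters: if one worker receives all the expensive detectors, the others sit idle. The sketch below shows a generic greedy heuristic (assign the most expensive remaining task to the least-loaded worker). It is not SUOD's actual scheduler, and the cost values are hypothetical stand-ins for forecast training times.

```python
import heapq


def balanced_assign(costs, n_workers):
    """Greedily assign tasks to workers so that total cost per worker is even.

    costs: estimated cost of each task (e.g., forecast training time).
    Returns one list of task indices per worker.
    """
    # Min-heap of (current load, worker id); the least-loaded worker is on top.
    heap = [(0.0, w) for w in range(n_workers)]
    heapq.heapify(heap)
    groups = [[] for _ in range(n_workers)]
    # Place expensive tasks first (longest-processing-time heuristic).
    for task in sorted(range(len(costs)), key=lambda i: costs[i], reverse=True):
        load, worker = heapq.heappop(heap)
        groups[worker].append(task)
        heapq.heappush(heap, (load + costs[task], worker))
    return groups


# Hypothetical cost forecasts for six detectors, split across two workers
costs = [9.0, 1.0, 8.0, 2.0, 3.0, 1.0]
groups = balanced_assign(costs, n_workers=2)
loads = [sum(costs[i] for i in g) for g in groups]
print(groups, loads)
```

Splitting the same six tasks in their original order, three per worker, would give loads of 18 and 6, so the slower worker would dominate the total runtime.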

More to come... Last updated on April 27th, 2020.

Feel free to star the repository to follow future updates :)

[1] Johnson, W.B. and Lindenstrauss, J., 1984. Extensions of Lipschitz mappings into a Hilbert space. Contemporary Mathematics, 26, pp.189-206.

[2] Zhao, Y., Nasrullah, Z. and Li, Z., 2019. PyOD: A Python Toolbox for Scalable Outlier Detection. Journal of Machine Learning Research, 20, pp.1-7.