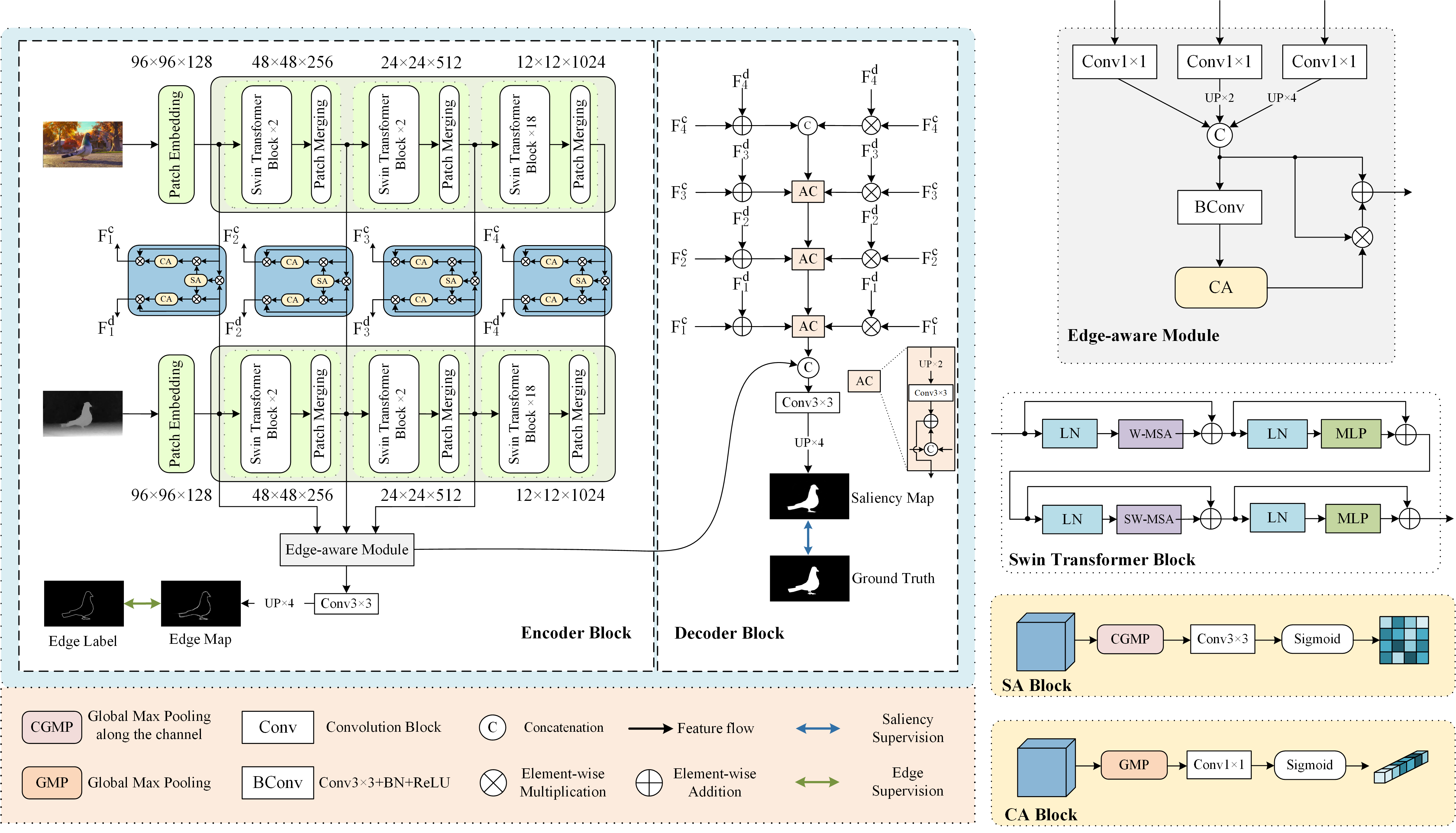

PyTorch implementation of the paper *SwinNet: Swin Transformer drives edge-aware RGB-D and RGB-T salient object detection*, accepted by IEEE Transactions on Circuits and Systems for Video Technology. Method details are given in the paper; for more information, please visit https://github.com/liuzywen/SwinNet.

Authors: Zhengyi Liu, Yacheng Tan, Qian He, Yun Xiao

Put the raw data in the following directory structure:

- `datasets\`
  - `RGB-D\`
    - `test\`
      - ···
    - `train\`
      - ···
    - `validation\`
      - ···
  - `RGB-T\`
    - `Train\`
      - ···
    - `test\`
      - ···
    - `validation\`
      - ···
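Before training, it can help to confirm that the folders are where the scripts expect them. The following is a minimal sketch, assuming the split names listed above (including the capitalised `Train` under `RGB-T`) are the exact folder names the scripts read. The helper `missing_dirs` and the `EXPECTED_LAYOUT` table are hypothetical and not part of the repository.

```python
from pathlib import Path

# Hypothetical table of expected split folders, copied from the layout above.
# The capitalised "Train" under RGB-T is kept exactly as listed.
EXPECTED_LAYOUT = {
    "RGB-D": ["test", "train", "validation"],
    "RGB-T": ["Train", "test", "validation"],
}


def missing_dirs(root):
    """Return the expected dataset folders that do not exist under `root`."""
    root = Path(root)
    missing = []
    for modality, splits in EXPECTED_LAYOUT.items():
        for split in splits:
            path = root / modality / split
            if not path.is_dir():
                missing.append(str(path))
    return missing


if __name__ == "__main__":
    problems = missing_dirs("datasets")
    if problems:
        print("Missing folders:")
        for p in problems:
            print("  " + p)
    else:
        print("Dataset layout looks complete.")
```

Running it from the repository root prints any split folder that is still missing under `datasets`.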

- Download the necessary pretrained weights: `swin_base_patch4_window12_384_22k.pth`.

- Put the pretrained models in the `Pre_train\` directory.

- After downloading the training dataset, run `SwinNet_train.py` to train the model.

- After downloading all the pretrained models and the testing dataset, run `SwinNet_test.py` to generate the final prediction maps.

- The evaluation tools are adapted from the Saliency-Evaluation-Toolbox.
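The result tables report MAE and adaptive F-measure among other metrics. As a rough illustration of what those two numbers measure, here is a minimal NumPy sketch, assuming each saliency map and ground truth is a float array scaled to [0, 1]. It follows the common convention of binarising the prediction at twice its mean value (capped at 1) with β² = 0.3; the function names are hypothetical, and the Saliency-Evaluation-Toolbox may differ in details.

```python
import numpy as np


def mae(pred, gt):
    """Mean absolute error between a saliency map and its ground truth, both in [0, 1]."""
    return float(np.mean(np.abs(pred - gt)))


def adaptive_fmeasure(pred, gt, beta2=0.3):
    """F-measure after binarising `pred` at twice its mean value (capped at 1)."""
    threshold = min(2.0 * float(pred.mean()), 1.0)
    binary = pred >= threshold          # predicted foreground
    target = gt > 0.5                   # ground-truth foreground
    tp = np.logical_and(binary, target).sum()
    if tp == 0:
        return 0.0                      # no overlap: precision and recall are both 0
    precision = tp / binary.sum()
    recall = tp / target.sum()
    return float((1 + beta2) * precision * recall / (beta2 * precision + recall))
```

For a dataset-level score, these per-image values would typically be averaged over all images in the test set.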

| RGB-D dataset | S-measure ↑ | Adaptive F-measure ↑ | E-measure ↑ | MAE ↓ |
|---|---|---|---|---|
| NLPR | 0.941 | 0.908 | 0.967 | 0.018 |
| NJU2K | 0.935 | 0.922 | 0.934 | 0.027 |
| STERE | 0.919 | 0.893 | 0.929 | 0.033 |
| DES | 0.945 | 0.926 | 0.980 | 0.016 |
| SIP | 0.911 | 0.912 | 0.943 | 0.035 |
| DUT | 0.949 | 0.944 | 0.968 | 0.020 |

| RGB-T dataset | S-measure ↑ | Adaptive F-measure ↑ | E-measure ↑ | MAE ↓ |
|---|---|---|---|---|
| VT821 | 0.904 | 0.847 | 0.926 | 0.030 |
| VT1000 | 0.938 | 0.896 | 0.947 | 0.018 |
| VT5000 | 0.912 | 0.865 | 0.924 | 0.026 |
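The E-measure (enhanced-alignment measure) compares a binarised prediction with the ground truth using both pixel-level values and image-level means. A minimal NumPy sketch follows, assuming the prediction is binarised with an adaptive threshold of twice its mean (capped at 1). Whether the reported numbers use this adaptive variant or a mean or maximum over many thresholds is not stated here, and the function names are hypothetical.

```python
import numpy as np


def enhanced_alignment(binary_map, gt, eps=1e-8):
    """E-measure of a binary foreground map against a binary ground truth."""
    fm = binary_map.astype(np.float64)
    g = gt.astype(np.float64)
    if g.sum() == 0:            # ground truth has no foreground
        enhanced = 1.0 - fm
    elif g.sum() == g.size:     # ground truth is entirely foreground
        enhanced = fm
    else:
        # Bias matrices: each map minus its own global mean.
        phi_fm = fm - fm.mean()
        phi_gt = g - g.mean()
        # Alignment matrix in [-1, 1]; eps only guards against 0 / 0.
        align = 2.0 * phi_gt * phi_fm / (phi_gt ** 2 + phi_fm ** 2 + eps)
        # Enhanced alignment maps [-1, 1] to [0, 1].
        enhanced = (align + 1.0) ** 2 / 4.0
    return float(enhanced.mean())


def adaptive_emeasure(pred, gt):
    """Binarise `pred` at twice its mean (capped at 1), then score it."""
    threshold = min(2.0 * float(pred.mean()), 1.0)
    return enhanced_alignment(pred >= threshold, gt > 0.5)
```

A perfect prediction scores close to 1, and a fully inverted one scores close to 0.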

All the saliency maps reported in the paper are available on Google Drive, OneDrive, BaiduPan (code: ) and AliyunDrive.

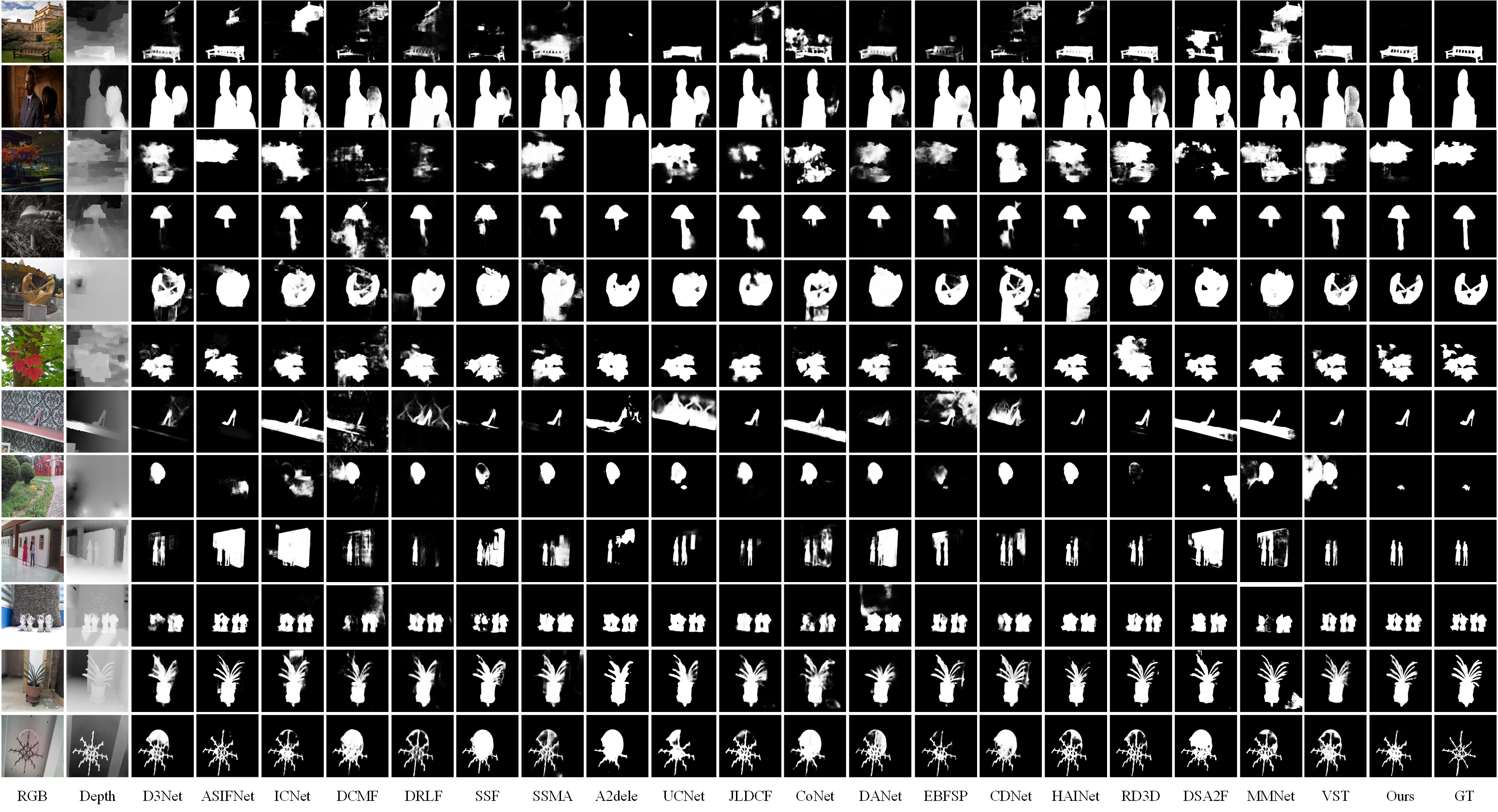

RGB-D Visual comparison

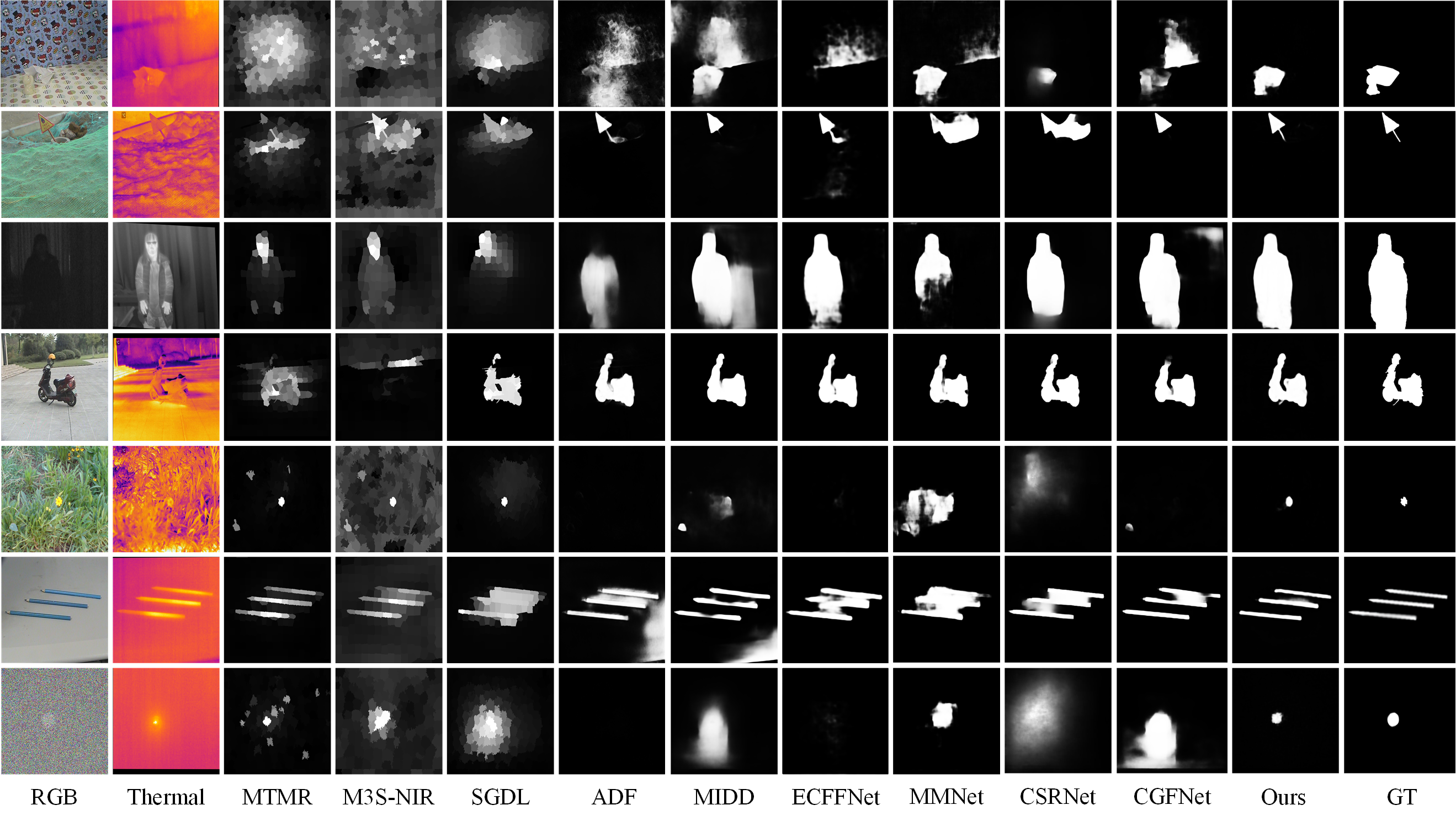

RGB-T Visual comparison

If you find this work or code useful, please cite:

    @ARTICLE{9611276,
      author={Liu, Zhengyi and Tan, Yacheng and He, Qian and Xiao, Yun},
      journal={IEEE Transactions on Circuits and Systems for Video Technology},
      title={SwinNet: Swin Transformer drives edge-aware RGB-D and RGB-T salient object detection},
      year={2021},
      volume={},
      number={},
      pages={1-1},
      doi={10.1109/TCSVT.2021.3127149}}