This repository provides the code for the paper: Adversarial Attacks on GMM i-vector based Speaker Verification Systems.

- Audio samples are available on the webpage: https://lixucuhk.github.io/adversarial-samples-attacking-ASV-systems

- FAR (%) of the GMM i-vector systems under white box attack with different perturbation degrees (P).

| System | P=0 | P=0.3 | P=1.0 | P=5.0 | P=10.0 |
|---|---|---|---|---|---|
| MFCC-ivec | 7.20 | 82.91 | 96.87 | 18.14 | 16.65 |
| LPMS-ivec | 10.24 | 96.78 | 99.99 | 99.64 | 69.95 |

- EER (%) of the GMM i-vector systems under white box attack with different perturbation degrees (P).

| System | P=0 | P=0.3 | P=1.0 | P=5.0 | P=10.0 |
|---|---|---|---|---|---|
| MFCC-ivec | 7.20 | 81.78 | 97.64 | 50.25 | 50.72 |
| LPMS-ivec | 10.24 | 94.04 | 99.95 | 99.77 | 88.60 |

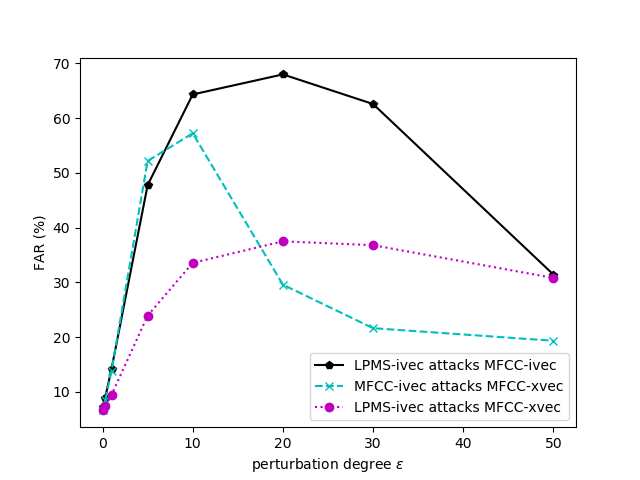

- EER (%) of the target systems under black box attack with different perturbation degrees (P).

| Attack setting | P=0 | P=0.3 | P=1.0 | P=5.0 | P=10.0 | P=20.0 | P=30.0 | P=50.0 |
|---|---|---|---|---|---|---|---|---|
| LPMS-ivec attacks MFCC-ivec | 7.20 | 8.83 | 13.82 | 50.02 | 69.04 | 74.62 | 74.59 | 63.24 |
| MFCC-ivec attacks MFCC-xvec | 6.62 | 8.52 | 14.06 | 57.43 | 74.32 | 60.85 | 54.07 | 51.34 |
| LPMS-ivec attacks MFCC-xvec | 6.62 | 7.42 | 9.49 | 25.47 | 37.51 | 43.89 | 48.48 | 48.39 |
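For reference, FAR is the percentage of non-target (impostor) trials that are accepted, and EER is the operating point where FAR equals the false rejection rate (FRR). The minimal NumPy sketch below computes both from lists of trial scores; `far_frr` and `eer` are hypothetical helpers (not part of this repository), and the EER is approximated by trying every observed score as a threshold rather than interpolating. In the tables above, the FAR at P=0 equals the clean EER, which appears consistent with measuring FAR at a threshold fixed at the clean system's EER operating point; that reading is our assumption.

```python
import numpy as np


def far_frr(target_scores, nontarget_scores, threshold):
    """Return (FAR %, FRR %) when trials scoring >= threshold are accepted."""
    target_scores = np.asarray(target_scores, dtype=float)
    nontarget_scores = np.asarray(nontarget_scores, dtype=float)
    far = 100.0 * np.mean(nontarget_scores >= threshold)  # impostor trials accepted
    frr = 100.0 * np.mean(target_scores < threshold)      # genuine trials rejected
    return far, frr


def eer(target_scores, nontarget_scores):
    """Approximate EER (%): the threshold where FAR and FRR are closest.

    Every observed score is tried as a threshold and the mean of FAR and FRR
    at the best one is returned (no interpolation between thresholds).
    """
    target_scores = np.asarray(target_scores, dtype=float)
    nontarget_scores = np.asarray(nontarget_scores, dtype=float)
    best_gap, best_eer = np.inf, None
    for threshold in np.unique(np.concatenate([target_scores, nontarget_scores])):
        far, frr = far_frr(target_scores, nontarget_scores, threshold)
        if abs(far - frr) < best_gap:
            best_gap, best_eer = abs(far - frr), (far + frr) / 2.0
    return best_eer


# Toy scores (not from the paper): higher means "same speaker".
target = [2.0, 1.5, 0.4]
nontarget = [-1.0, 0.5, -0.3]
print(eer(target, nontarget))
```

Under an attack, `far_frr` would be evaluated on the perturbed non-target scores while keeping the clean threshold, so any scores pushed above it show up directly as a higher FAR.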

- Python and packages

  This code was tested with Python 3.7.1 and PyTorch 1.0.1. The other required packages can be installed with `pip install -r requirements.txt`.

- Kaldi-io-for-python

  kaldi-io-for-python is used to read Kaldi data in `ark` and `scp` formats from Python. See `README.md` in kaldi-io-for-python for installation instructions.

- Download the VoxCeleb1 dataset

  To replicate the paper's experiments, download the VoxCeleb1 dataset from http://www.robots.ox.ac.uk/~vgg/data/voxceleb/vox1.html. It consists of short clips of human speech, 148,642 utterances from 1,251 speakers in total. Following Nagrani et al., 4,874 utterances from 40 speakers are reserved for testing, and the remaining utterances are used to train our SV models.
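Because whole speakers are held out, the VoxCeleb1 split is speaker-disjoint, leaving 148,642 - 4,874 = 143,768 utterances from 1,251 - 40 = 1,211 speakers for training. A minimal sketch of such a split, assuming the utterance list is available as (speaker ID, utterance ID) pairs and that the 40 test speaker IDs are taken from the official VoxCeleb1 test list; `split_by_speaker` and the toy IDs are hypothetical and not part of this repository:

```python
def split_by_speaker(utterances, test_speakers):
    """Partition (speaker_id, utterance_id) pairs into speaker-disjoint train/test lists."""
    test_speakers = set(test_speakers)
    train, test = [], []
    for speaker_id, utt_id in utterances:
        # every utterance of a held-out speaker goes to the test side
        (test if speaker_id in test_speakers else train).append((speaker_id, utt_id))
    return train, test


# Sizes implied by the VoxCeleb1 figures quoted above
TOTAL_UTTS, TOTAL_SPKS = 148_642, 1_251
TEST_UTTS, TEST_SPKS = 4_874, 40
TRAIN_UTTS = TOTAL_UTTS - TEST_UTTS   # 143,768
TRAIN_SPKS = TOTAL_SPKS - TEST_SPKS   # 1,211

# Toy usage with made-up (hypothetical) IDs
utts = [("spk_a", "u1"), ("spk_b", "u2"), ("spk_a", "u3"), ("spk_c", "u4")]
train, test = split_by_speaker(utts, {"spk_b"})
```

Splitting by speaker rather than by utterance keeps every test trial free of speakers seen during training, which is what a speaker verification evaluation needs.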

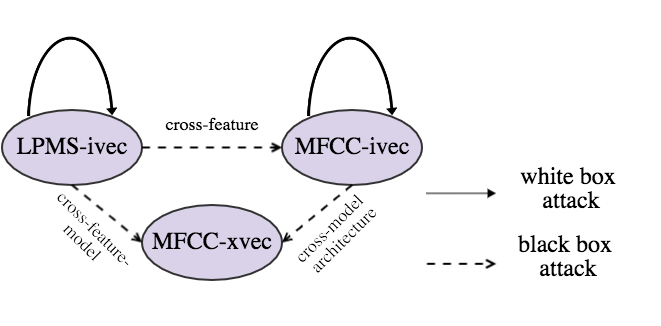

- In our experiments, three ASV models are trained: a Mel-frequency cepstral coefficient (MFCC) based GMM i-vector system (`MFCC-ivec`), a log power magnitude spectrum (LPMS) based GMM i-vector system (`LPMS-ivec`) and an MFCC based x-vector system (`MFCC-xvec`).

- To train `MFCC-ivec`, `LPMS-ivec` and `MFCC-xvec`, run the `run.sh` script in the `i-vector-mfcc`, `i-vector-lpms` and `x-vector-mfcc` directory, respectively. These scripts were adapted from Kaldi scripts. Go into the corresponding directory and run `run.sh`, e.g. `cd i-vector-mfcc && ./run.sh`

- According to the attack configuration, two white box attacks are performed, one on `MFCC-ivec` and one on `LPMS-ivec`. The three black box attack settings are: `LPMS-ivec` attacks `MFCC-ivec`, `MFCC-ivec` attacks `MFCC-xvec`, and `LPMS-ivec` attacks `MFCC-xvec`.

- To let PyTorch generate the adversarial gradients automatically, we rebuild the forward process of the ASV systems in PyTorch, using the models trained with the Kaldi scripts. Before running the code, copy `i-vector-mfcc/copy-ivectorextractor.cc` to `/your_path/kaldi/src/ivectorbin/copy-ivectorextractor.cc` and compile it. This program converts the i-vector extractor parameters into `txt` format.

- To generate adversarial samples from `LPMS-ivec` and perform the white box attack on it, run: `cd i-vector-lpms && ./perform_adv_attacks.sh`

- To generate adversarial samples from `MFCC-ivec`, and perform both the white box attack on it and the black box attack `LPMS-ivec` attacks `MFCC-ivec`, run: `cd i-vector-mfcc && ./perform_adv_attacks.sh`

- To perform the black box attacks `MFCC-ivec` attacks `MFCC-xvec` and `LPMS-ivec` attacks `MFCC-xvec`, run: `cd x-vector-mfcc && ./spoofing_MFCC_xvec.sh`
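Rebuilding the GMM i-vector forward process in PyTorch works because i-vector extraction is a smooth function of the input frames: UBM frame posteriors, zeroth-order statistics N_c, centred first-order statistics F_c, and the point estimate w = (I + sum_c N_c T_c' S_c^-1 T_c)^-1 sum_c T_c' S_c^-1 F_c, where T_c is the per-component block of the total variability matrix and S_c the diagonal UBM covariance. The NumPy sketch below shows this textbook computation for illustration only; the function names and array layouts are hypothetical, and Kaldi's i-vector extractor differs in implementation details, so this is not the repository's PyTorch code.

```python
import numpy as np


def ubm_posteriors(frames, weights, means, variances):
    """Posteriors gamma[t, c] of a diagonal-covariance UBM.

    frames: (n_frames, D); weights: (C,); means, variances: (C, D).
    """
    diff = frames[:, None, :] - means[None, :, :]                    # (n_frames, C, D)
    log_det = np.sum(np.log(2.0 * np.pi * variances), axis=1)        # (C,)
    mahal = np.sum(diff ** 2 / variances[None, :, :], axis=2)        # (n_frames, C)
    log_lik = np.log(weights)[None, :] - 0.5 * (log_det[None, :] + mahal)
    log_lik -= log_lik.max(axis=1, keepdims=True)                    # stabilise exp()
    post = np.exp(log_lik)
    return post / post.sum(axis=1, keepdims=True)


def extract_ivector(frames, weights, means, variances, tv_matrix):
    """Textbook i-vector point estimate (hypothetical helper, not Kaldi's code).

    tv_matrix: (C, D, R), the total variability matrix split per component.
    w = (I + sum_c N_c T_c' S_c^-1 T_c)^-1 sum_c T_c' S_c^-1 F_c
    """
    gamma = ubm_posteriors(frames, weights, means, variances)       # (n_frames, C)
    n_stats = gamma.sum(axis=0)                                      # N_c, shape (C,)
    f_stats = gamma.T @ frames - n_stats[:, None] * means            # centred F_c, (C, D)
    rank = tv_matrix.shape[2]
    precision = np.eye(rank)
    linear = np.zeros(rank)
    for c in range(tv_matrix.shape[0]):
        t_c = tv_matrix[c]                                           # (D, R)
        s_inv_t = t_c / variances[c][:, None]                        # S_c^-1 T_c
        precision += n_stats[c] * (t_c.T @ s_inv_t)
        linear += s_inv_t.T @ f_stats[c]
    return np.linalg.solve(precision, linear)


# Toy usage with random parameters (not a trained model)
rng = np.random.RandomState(0)
C, D, R = 4, 3, 2
weights = np.full(C, 1.0 / C)
means = rng.randn(C, D)
variances = np.ones((C, D))
tv_matrix = 0.1 * rng.randn(C, D, R)
frames = rng.randn(50, D)
w = extract_ivector(frames, weights, means, variances, tv_matrix)
print(w.shape)  # (2,)
```

Written with PyTorch tensors instead of NumPy arrays, the same operations let autograd return the gradient of a verification score with respect to `frames`, which is the quantity an adversarial attack needs.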
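The white box and black box settings differ mainly in whose gradients are used to craft the perturbation. The paper's attacks are based on the fast gradient sign method (FGSM); the toy sketch below assumes an FGSM-style update that moves the test input by epsilon times the sign of the score gradient, with the perturbation degree P acting as epsilon, and uses hypothetical linear scorers in place of the real ASV systems. The white box step uses the attacked system's own gradient, while the black box step reuses the gradient of a different (source) system. Pushing non-target trials up and target trials down is one natural choice of direction, assumed here. In the repository the gradients come from PyTorch autograd on the rebuilt forward process; here they are analytic because the scorers are linear.

```python
import numpy as np


def fgsm_step(x, grad, epsilon, is_target_trial):
    """One fast gradient sign method (FGSM) step on the test-side input x.

    Non-target trials are pushed towards acceptance (score up), target
    trials towards rejection (score down); epsilon bounds each element's change.
    """
    direction = -1.0 if is_target_trial else 1.0
    return x + direction * epsilon * np.sign(grad)


class LinearScorer:
    """Hypothetical stand-in for an ASV scoring function S(enrollment, test).

    The enrollment side is folded into a fixed weight vector w, so the
    score is w . x and its gradient with respect to x is simply w.
    """

    def __init__(self, w):
        self.w = np.asarray(w, dtype=float)

    def score(self, x):
        return float(self.w @ x)

    def grad(self, x):
        return self.w


source = LinearScorer([1.0, -2.0, 0.5])   # system whose gradients the attacker uses
target = LinearScorer([0.8, -1.0, -0.1])  # system under attack
x = np.zeros(3)                           # toy features of a non-target test utterance
eps = 0.1                                 # assumed to play the role of P

white = fgsm_step(x, target.grad(x), eps, is_target_trial=False)  # white box
black = fgsm_step(x, source.grad(x), eps, is_target_trial=False)  # black box (transfer)
print(target.score(x), target.score(white), target.score(black))
```

In this toy, the transferred step still raises the target score (about 0.17 versus 0.19 for the white box step) because the two gradients agree in sign on two of the three dimensions. The real systems are non-linear, and `LPMS-ivec` and `MFCC-ivec` do not even share an input feature space, so this only conveys the intuition behind why transferred samples work but are generally weaker than white box ones.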

If you use this code in your research, please star our repo and cite our paper:

```bibtex
@article{li2019adversarial,
  title={Adversarial attacks on GMM i-vector based speaker verification systems},
  author={Li, Xu and Zhong, Jinghua and Wu, Xixin and Yu, Jianwei and Liu, Xunying and Meng, Helen},
  journal={arXiv preprint arXiv:1911.03078},
  year={2019}
}
```

- Xu Li at the Chinese University of Hong Kong (xuli@se.cuhk.edu.hk, xuliustc1306@gmail.com)

- If you have any questions or suggestions, please feel free to contact Xu Li via xuli@se.cuhk.edu.hk or xuliustc1306@gmail.com.