IDI scoping repo - see Project Brief for more detailed information on background, goals, etc.

At the DataDive, data scientists will develop a simple scraper tool that takes "search terms" as input and returns links to the projects found when those terms are searched. The tool will be composed of several subtools, each searching a specific DFI website. We will prioritize which scrapers to build according to IDI's priorities. Scrapers will be written in Python, likely using some combination of Selenium, Beautiful Soup, requests, etc.

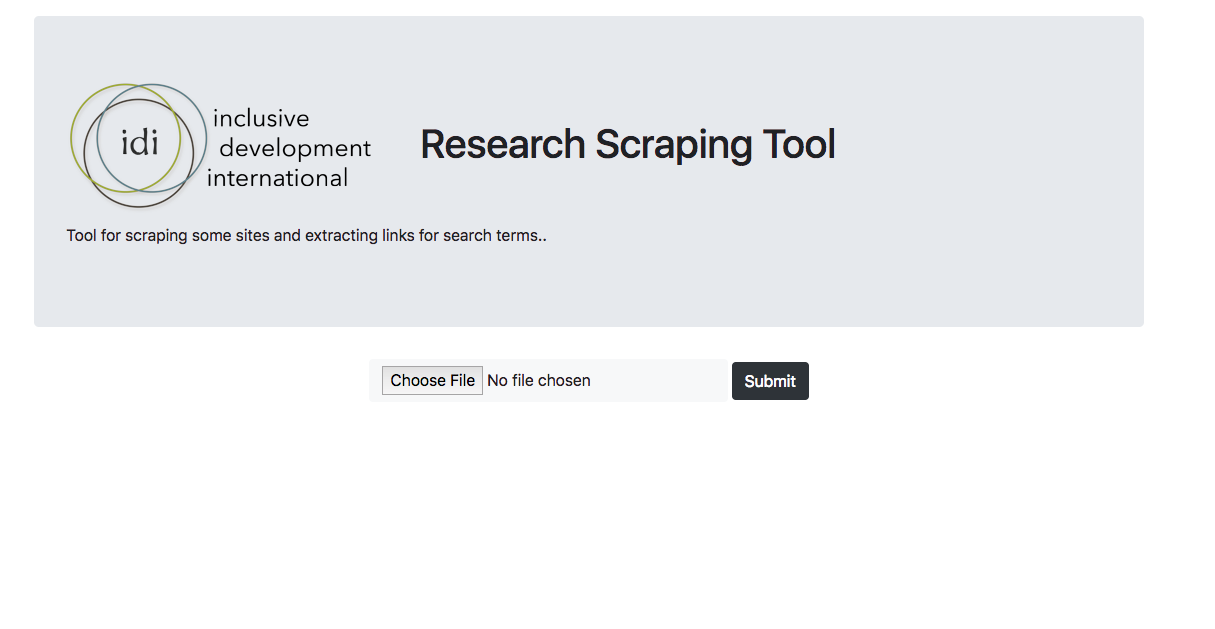

- Tool(s) take a csv/excel list of search terms as input

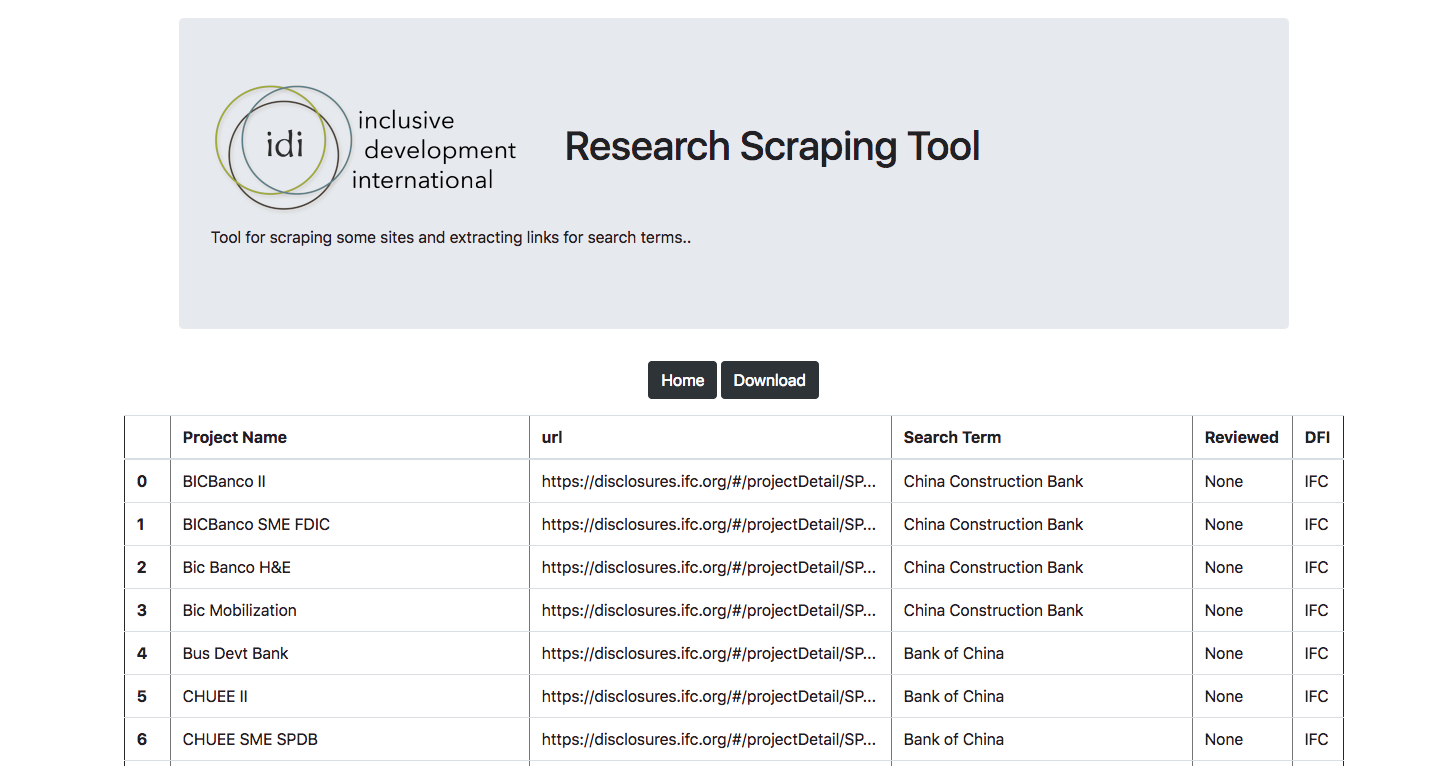

- Tool(s) return a csv/excel file with a list of links that were found for a specific search term. Each row should include (where available):

  - Project Name

  - Search Term Used

  - DFI site the result was scraped from (which bank is being scraped)

- Tool(s) are easy to execute and have straightforward instructions for use.

- Tool(s) cover high priority DFI sites

- All scrapers return data in an identical format - meaning the output has the same columns and meanings as all other scrapers. If some data is only available for a subset of DFIs then that column will be present but empty for the DFIs that do not have that information.

- Tool(s) deduplicate projects within each DFI site; they should not deduplicate across DFI sites.
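The output rules above (identical columns for every scraper, empty cells where a DFI lacks a field, deduplication within a DFI only) can be sketched roughly as follows. This is a minimal illustration, not the repository's actual code: the column names, the `normalize`, `dedupe_within_site` and `to_csv` helpers, and the choice of the (DFI, URL) pair as a project's identity are all assumptions made for the example.

```python
import csv
import io

# Hypothetical column names based on the fields listed above; the real
# scrapers may name or order them differently.
COLUMNS = ["Project Name", "URL", "Search Term", "DFI"]


def normalize(row):
    """Return a row with every shared column present, empty if missing."""
    return {col: row.get(col, "") for col in COLUMNS}


def dedupe_within_site(rows):
    """Drop repeated projects per DFI; never merge rows across DFIs.

    A project is identified here by its (DFI, URL) pair, which is an
    assumption made for this sketch.
    """
    seen = set()
    unique = []
    for row in rows:
        key = (row["DFI"], row["URL"])
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


def to_csv(rows):
    """Serialize rows to CSV text using the shared header."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=COLUMNS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


raw = [
    {"Project Name": "Dam A", "URL": "https://example.org/a", "Search Term": "dam", "DFI": "IFC"},
    {"Project Name": "Dam A", "URL": "https://example.org/a", "Search Term": "hydro", "DFI": "IFC"},
    # Same URL on a different DFI and no project name: kept, with an empty cell.
    {"URL": "https://example.org/a", "Search Term": "dam", "DFI": "MIGA"},
]
rows = dedupe_within_site([normalize(r) for r in raw])
print(to_csv(rows))
```

If the same project should be kept once per search term, the deduplication key could include the search term as well.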

- Python 3 (https://www.python.org/downloads/)

- Optional, but recommended: Virtualenv (https://virtualenv.pypa.io/en/stable/) / virtualenvwrapper (https://virtualenvwrapper.readthedocs.io/en/latest/)

Most people will follow this route.

- Clone the repository (click "Clone or download"; click the copy button; `git clone {copied text}`)

- Set up a virtual environment (optional, but recommended): `mkvirtualenv idi-datadive-2018`

- Navigate to `./scrapers`

- Run `pip install -r requirements.txt` to install the requirements

- If you decide you need Selenium, you may need to install ChromeDriver. Call out in Slack if you need help.

- Sign up for a scraper to work on, then use the following files in `scrapers` to get started on your own:

  - `miga_scraper.py` - blank template

  - `worldbank_scraper.py` - example of using requests and a data API

  - `ifc_scraper.py` - example of using Selenium and Beautiful Soup

- Once you're ready to test your work, update `test_scraper.py` to point to your new work. Then run `python test_scraper.py`

- Examine the outputs and the file `test.csv`

- If everything looks good, submit it for checking and merging. Congrats! Share any tips or tricks you used with the others on Slack.
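As a rough idea of what the parsing half of a scraper might look like, the sketch below turns a search-results page into rows. It is not taken from the template files. To stay runnable without extra dependencies it uses the standard library's `html.parser` as a stand-in for Beautiful Soup and parses a hard-coded sample page instead of fetching one with requests or Selenium. The `LinkCollector` class, the `parse_results` function, the column names, and the sample HTML are all hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collect (link text, href) pairs for every <a href=...> tag."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        # Only keep text that appears inside a link.
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append(("".join(self._text).strip(), self._href))
            self._href = None


def parse_results(html, base_url, search_term, dfi):
    """Turn a results page into one row per link, with absolute URLs."""
    parser = LinkCollector()
    parser.feed(html)
    return [
        {
            "Project Name": text,
            "URL": urljoin(base_url, href),
            "Search Term": search_term,
            "DFI": dfi,
        }
        for text, href in parser.links
    ]


# Made-up markup; real DFI sites will need their own selectors.
SAMPLE_HTML = """
<ul>
  <li><a href="/projects/101">Solar Park</a></li>
  <li><a href="/projects/102">Wind Farm</a></li>
</ul>
"""

results = parse_results(SAMPLE_HTML, "https://example.org/search", "energy", "EXAMPLE")
for row in results:
    print(row)
```

In a real scraper the HTML would come from the site's search page, and the parser would likely need to target only the result links rather than every link on the page.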

This route is for anyone who wants to contribute directly to the Flask app.

The demo Flask app & scrapers are found in /flask_scraper_demo.

- Clone the repository (click "Clone or download"; click the copy button; `git clone {copied text}`)

- Set up a virtual environment (optional, but recommended): `mkvirtualenv idi-datadive-2018`

- Navigate to `./flask_scraper_demo`

- Run `make setup` to install the requirements

- Run `make run` to run the app

- You may need to install ChromeDriver. Call out in Slack if you need help.

- Navigate to http://localhost:5000/ to make sure the app works

- On the app, choose the

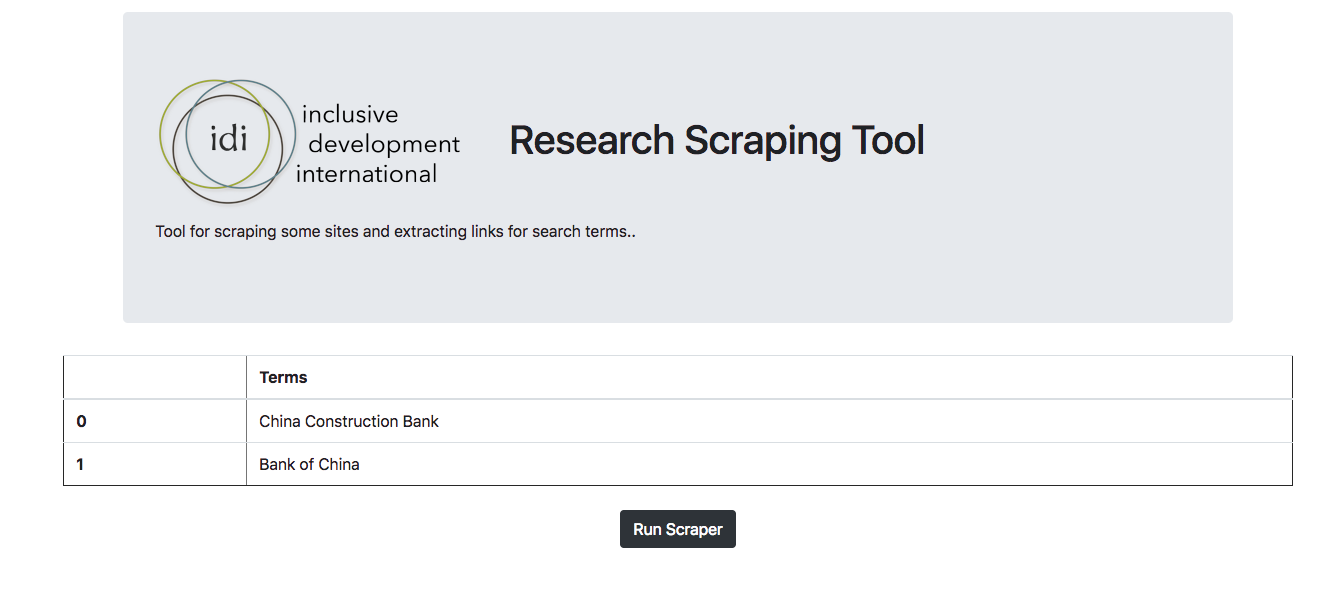

Search_Terms.txtfile & click "Submit" - It should show 2 search terms: click "Run Scraper"

- Make sure the status of the scrapers is printed to your terminal

- Make sure the table displays on the web page at the end of the run

- The main files of interest are:

  - `app/routes.py` - This file defines the routes and does some of the data munging.

  - `app/helpers.py` - This file has a TableBuilder class to help with displaying and exporting results.

  - `app/scrapers/execute_search.py` - This file is responsible for calling all of the contributed scrapers and gathering results.
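The "call every scraper and gather results" role described for `execute_search.py` could be sketched as below. This is not the file's actual contents: `run_all`, the stand-in scraper functions, and the convention that a scraper is a callable taking one search term and returning a list of row dictionaries are assumptions for illustration.

```python
def run_all(scrapers, search_terms):
    """Call every scraper for every term; gather rows and errors."""
    results, errors = [], []
    for name, scrape in scrapers.items():
        for term in search_terms:
            try:
                results.extend(scrape(term))
            except Exception as exc:
                # One failing site should not stop the whole run.
                errors.append((name, term, str(exc)))
    return results, errors


# Stand-in scrapers for illustration only.
def fake_ifc(term):
    return [{"Project Name": f"{term} project", "DFI": "IFC", "Search Term": term}]


def broken_site(term):
    raise RuntimeError("site unavailable")


results, errors = run_all({"IFC": fake_ifc, "BROKEN": broken_site}, ["dam", "road"])
print(len(results), "rows;", len(errors), "errors")
```

Collecting errors instead of raising them means a single site outage or layout change does not discard the results from the other scrapers.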

This proof-of-concept app takes a csv file of search terms as input and searches the IFC site; it currently works only for this site. If you want to run it, there is a demo search terms file at flask_scraper_demo/Search_Terms.txt.
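Reading the search terms might look something like the sketch below. The layout of `Search_Terms.txt` is not described here, so the code simply assumes one term per line (or a csv whose first column holds the term). The `read_search_terms` helper and the sample terms are hypothetical.

```python
import csv
import io


def read_search_terms(text):
    """Return non-empty, de-duplicated terms from the first column, in order."""
    terms = []
    for row in csv.reader(io.StringIO(text)):
        if not row:  # skip blank lines
            continue
        term = row[0].strip()
        if term and term not in terms:
            terms.append(term)
    return terms


# Made-up input; assumes one search term per line.
sample = "hydropower dam\n\n  coal plant \nhydropower dam\n"
terms = read_search_terms(sample)
print(terms)
```

For a file on disk, the same function can be applied to the text returned by reading the file.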