This repository contains the code and experiments for the IJCAI 2022 paper *Adapt to Adaptation: Learning Personalization for Cross-Silo Federated Learning*, by Jun Luo and Shandong Wu at the ICCI lab, University of Pittsburgh.

Conventional federated learning (FL) trains one global model for a federation of clients with decentralized data, reducing the privacy risk of centralized training. However, the distribution shift across non-IID datasets often poses a challenge to this one-model-fits-all solution. Personalized FL aims to mitigate this issue systematically. In this work, we propose APPLE, a personalized cross-silo FL framework that adaptively learns how much each client can benefit from other clients' models. We also introduce a method to flexibly control the focus of training APPLE between global and local objectives. We empirically evaluate our method's convergence and generalization behaviors, and perform extensive experiments on two benchmark datasets and two medical imaging datasets under two non-IID settings. The results show that the proposed personalized FL framework, APPLE, achieves state-of-the-art performance compared to several other personalized FL approaches in the literature.
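To make "how much each client can benefit from other clients' models" concrete, here is a minimal sketch, assuming a client's personalized model is a weighted sum of all clients' core models, with the weights given by that client's learnable Directed Relationship (DR) vector. The function names and the flattened-list parameter representation are hypothetical and are not taken from `train_APPLE.py`. Under this assumption, the chain rule gives each DR weight's gradient as the inner product of the loss gradient (with respect to the personalized parameters) and the corresponding core model.

```python
from typing import List

Vector = List[float]  # flattened model parameters (illustrative representation)


def personalize(core_models: List[Vector], dr: Vector) -> Vector:
    """Assumed form: personalized params = sum_j dr[j] * core_models[j]."""
    if len(core_models) != len(dr):
        raise ValueError("need one DR weight per core model")
    dim = len(core_models[0])
    return [sum(w * theta[k] for w, theta in zip(dr, core_models)) for k in range(dim)]


def dr_gradient(core_models: List[Vector], grad_personal: Vector) -> Vector:
    """Chain rule under the same assumption: dLoss/d dr[j] = <grad_personal, core_models[j]>."""
    return [sum(g * t for g, t in zip(grad_personal, theta)) for theta in core_models]


# Toy example: 3 clients with 2-parameter "models", DR vector initialised uniformly.
cores = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
dr = [1 / 3] * 3
print(personalize(cores, dr))           # approximately [0.667, 0.667]
print(dr_gradient(cores, [1.0, -1.0]))  # [1.0, -1.0, 0.0]
```

Because the DR weights enter the model linearly in this sketch, they can be updated with their own step size, separately from the core-model parameters.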

To install the dependent packages in a Python 3.6.8 environment, run:

```
pip install -r requirements.txt
```

Four datasets are used in our experiments: MNIST, CIFAR10, and two medical imaging datasets from the MedMNIST collection.

- The two benchmark datasets, MNIST and CIFAR10, will be downloaded automatically when running `train_APPLE.py`.
- For the two medical imaging datasets from MedMNIST, OrganMNIST (axial) and PathMNIST, download them from the MedMNIST website, save them as `./data/organmnist_axial.npz` and `./data/pathmnist.npz`, and run `python split_medmnist.py` inside the `./data/` directory to create a stratified train-test split.
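The internals of `split_medmnist.py` are not described here, so the following is only a minimal sketch of what a stratified train-test split means, written with the standard library: each class is split separately so that class proportions are roughly preserved in both parts. The function name and the 20% test fraction are assumptions for illustration.

```python
import random
from collections import defaultdict
from typing import List, Sequence, Tuple


def stratified_split(labels: Sequence[int], test_frac: float = 0.2,
                     seed: int = 0) -> Tuple[List[int], List[int]]:
    """Return (train_idx, test_idx), splitting each class separately."""
    by_class = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)
    rng = random.Random(seed)  # fixed seed for a reproducible split
    train_idx, test_idx = [], []
    for y in sorted(by_class):
        idxs = by_class[y]
        rng.shuffle(idxs)
        n_test = round(len(idxs) * test_frac)  # same fraction from every class
        test_idx.extend(idxs[:n_test])
        train_idx.extend(idxs[n_test:])
    return sorted(train_idx), sorted(test_idx)


labels = [0] * 10 + [1] * 5
train, test = stratified_split(labels, test_frac=0.2)
print(len(train), len(test))  # 12 3
```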

To run the experiments on APPLE, simply run :

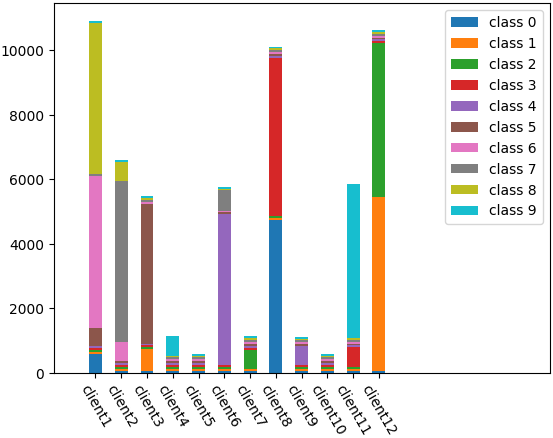

python train_APPLE.pyTwo figures of the data distribution of the federated datasets (similar to the following figure) will be saved in ./data_dist/ at the beginning of the training.
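The README does not specify how the `non-iid-practical` setting is generated. The sketch below instead uses Dirichlet label partitioning, a common technique in the FL literature, to show how a non-IID federated split and its per-client class histogram (the kind of information a data-distribution figure displays) can be produced. This technique is a substitution for illustration and may differ from the repository's own partitioning; `alpha`, the helper names, and the toy labels are assumptions.

```python
import random
from collections import Counter
from typing import Dict, List, Sequence


def dirichlet_partition(labels: Sequence[int], num_clients: int,
                        alpha: float = 0.5, seed: int = 0) -> List[List[int]]:
    """Split sample indices across clients using per-class Dirichlet(alpha) proportions."""
    rng = random.Random(seed)
    clients: List[List[int]] = [[] for _ in range(num_clients)]
    for cls in sorted(set(labels)):
        idxs = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(idxs)
        # Dirichlet sample via normalised Gamma(alpha, 1) draws (stdlib only).
        g = [rng.gammavariate(alpha, 1.0) for _ in range(num_clients)]
        total = sum(g)
        props = [x / total for x in g]
        # Convert the proportions into cut points over this class's indices.
        cuts, acc = [], 0.0
        for p in props[:-1]:
            acc += p
            cuts.append(int(acc * len(idxs)))
        bounds = [0] + cuts + [len(idxs)]
        for c in range(num_clients):
            clients[c].extend(idxs[bounds[c]:bounds[c + 1]])
    return clients


def class_counts(labels: Sequence[int], clients: List[List[int]]) -> List[Dict[int, int]]:
    """Per-client label histogram."""
    return [dict(Counter(labels[i] for i in idxs)) for idxs in clients]


labels = [i % 10 for i in range(1000)]  # toy labels: 100 samples per class
parts = dirichlet_partition(labels, num_clients=4, alpha=0.5)
for cid, counts in enumerate(class_counts(labels, parts)):
    print(cid, dict(sorted(counts.items())))
```

Smaller `alpha` values concentrate each class on fewer clients, which makes the split more heterogeneous.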

Check out the optional training arguments by running:

```
python train_APPLE.py --help
```

For instance, if we want to train APPLE for 200 rounds on CIFAR10 under the practical non-IID setting, with the learning rate for the core models set to 0.01 and the learning rate for the Directed Relationships set to 0.001, we can run:

```
python train_APPLE.py --num_rounds 200 \
    --data 'cifar10' \
    --distribution 'non-iid-practical' \
    --lr_net 0.01 \
    --lr_coef 0.001
```

By default, the results will be saved in `./results/`. To collect the test results in a table and plot the training curves in one figure, run:

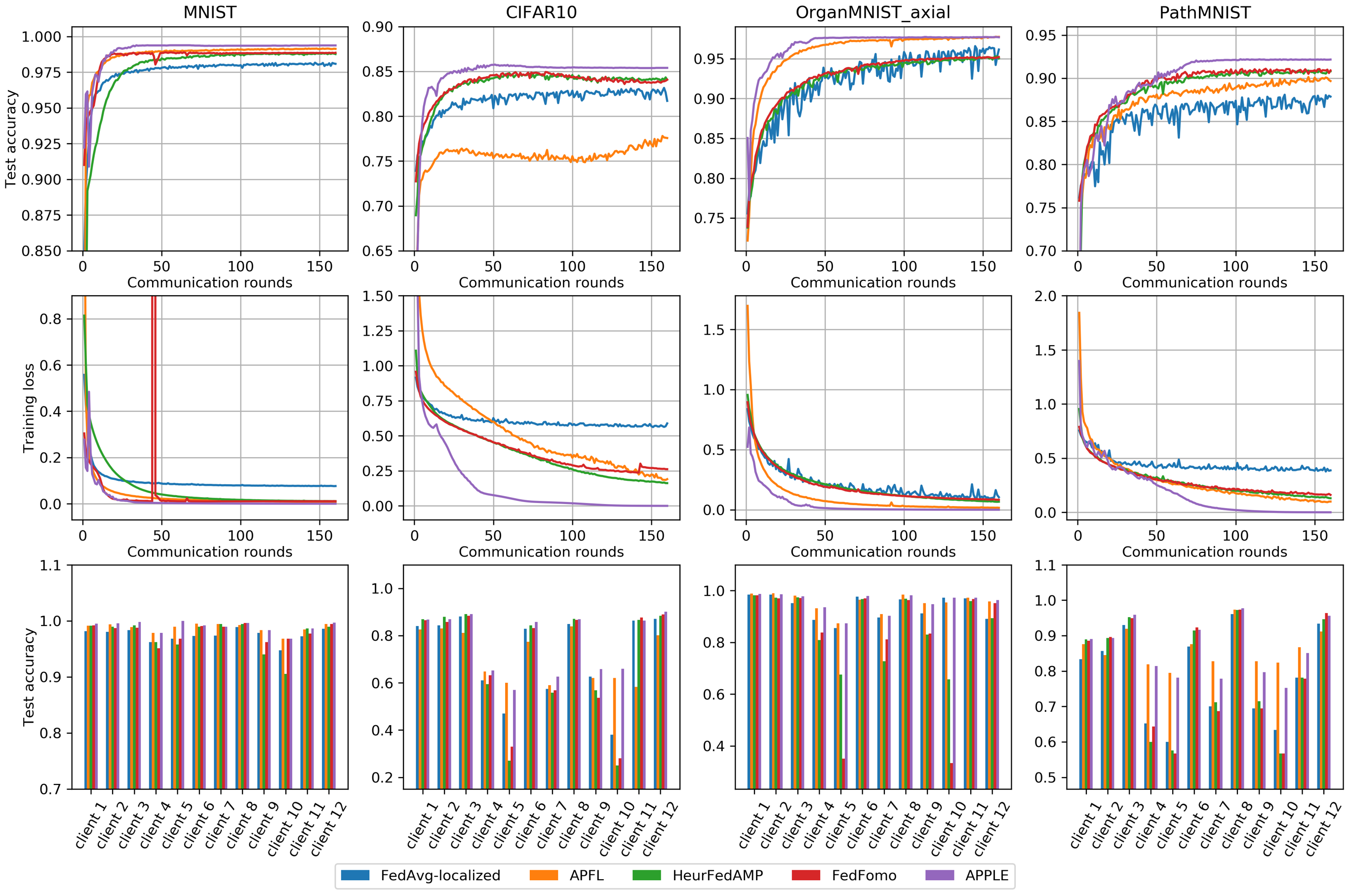

python plot_results.pyThe figure will be in the following format:

To include results of other methods in the same format in the comparison, to add new datasets or exclude existing ones, or to adjust the DPI of the output figures, modify the first block of the main function in `plot_results.py` accordingly. To visualize the trajectory of the Directed Relationship vectors in APPLE, uncomment the last block in the main function.
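Several training runs, e.g. a small learning-rate sweep, can be scripted with the `train_APPLE.py` flags documented in this README (`--num_rounds`, `--data`, `--distribution`, `--lr_net`, `--lr_coef`). This is a hypothetical helper, not part of the repository. It only prints the commands by default; since it is not documented whether separate runs write to distinct files under `./results/`, check that before setting `dry_run=False`.

```python
import itertools
import shlex
import subprocess
import sys
from typing import List


def build_commands(lr_nets: List[float], lr_coefs: List[float],
                   num_rounds: int = 200, data: str = "cifar10",
                   distribution: str = "non-iid-practical") -> List[List[str]]:
    """Return one argv list per (lr_net, lr_coef) pair, using only the README's documented flags."""
    cmds = []
    for lr_net, lr_coef in itertools.product(lr_nets, lr_coefs):
        cmds.append([
            sys.executable, "train_APPLE.py",  # current interpreter instead of bare `python`
            "--num_rounds", str(num_rounds),
            "--data", data,
            "--distribution", distribution,
            "--lr_net", str(lr_net),
            "--lr_coef", str(lr_coef),
        ])
    return cmds


def run_all(cmds: List[List[str]], dry_run: bool = True) -> None:
    """Print each command; execute them one after another only when dry_run is False."""
    for cmd in cmds:
        # shlex.quote works on Python 3.6 (shlex.join would need 3.8+).
        print(" ".join(shlex.quote(part) for part in cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical sweep over the core-model learning rate; dry run by default.
    run_all(build_commands(lr_nets=[0.01, 0.005], lr_coefs=[0.001]))
```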

If you find our code useful, please consider citing:

```bibtex
@article{luo2021adapt,
  title={Adapt to Adaptation: Learning Personalization for Cross-Silo Federated Learning},
  author={Luo, Jun and Wu, Shandong},
  journal={arXiv preprint arXiv:2110.08394},
  year={2021}
}
```