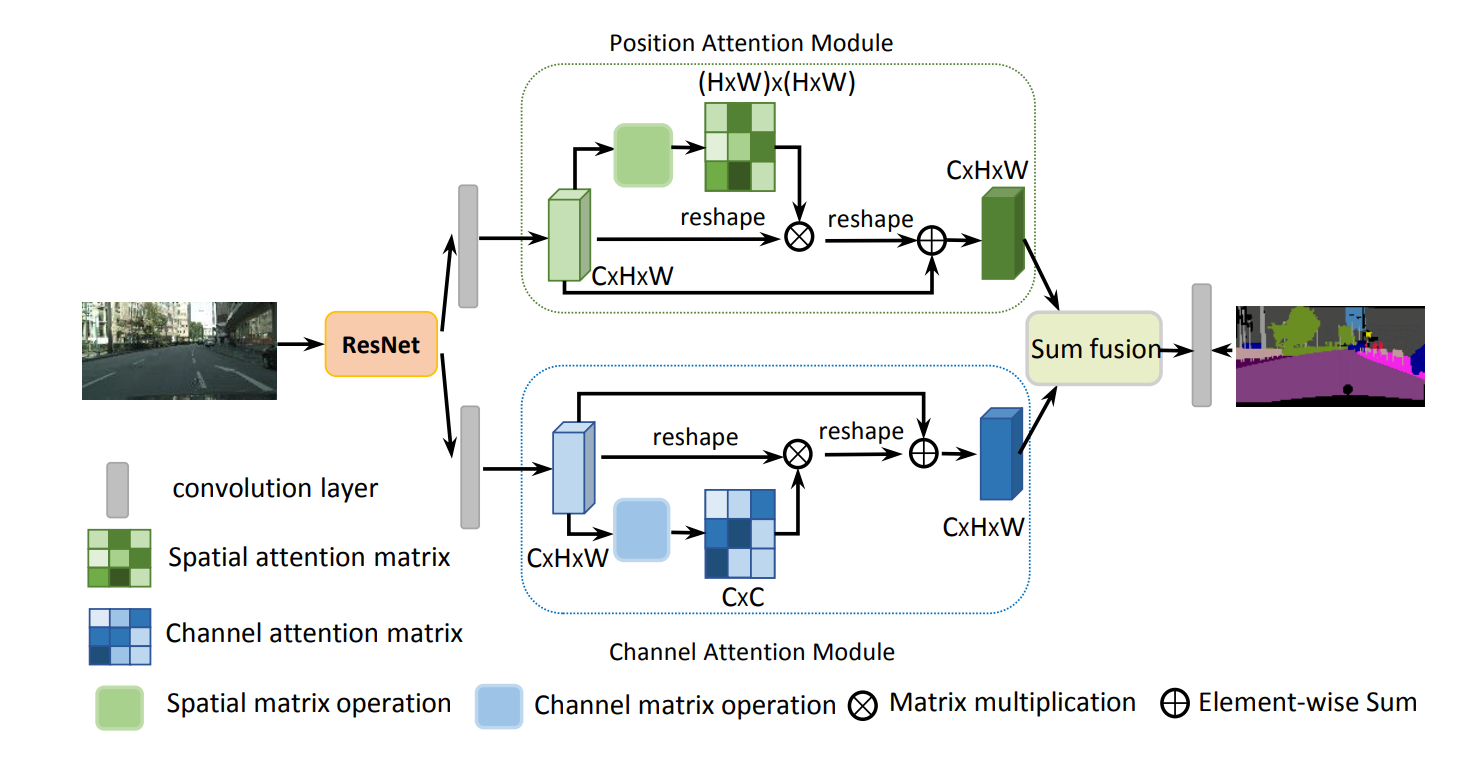

Dual Attention Network for Scene Segmentation (CVPR 2019)

Jun Fu, Jing Liu, Haijie Tian, Yong Li, Yongjun Bao, Zhiwei Fang, and Hanqing Lu

Introduction

We propose a Dual Attention Network (DANet) that adaptively integrates local features with their global dependencies using the self-attention mechanism. DANet achieves new state-of-the-art segmentation performance on three challenging scene segmentation datasets: Cityscapes, PASCAL Context, and COCO Stuff-10k.
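To make the idea of integrating local features with global dependencies concrete, here is a minimal pure-Python sketch of self-attention over spatial positions. It is only an illustration, not the DANet module itself: it omits the learned projections a real attention module would use, and the function names and the `gamma` scaling factor are assumptions introduced for this example.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def position_attention(features, gamma=1.0):
    """Illustrative self-attention over positions (hypothetical helper).

    features: list of N position vectors, each a list of C floats.
    gamma:    assumed scalar weight on the global context term.
    """
    n = len(features)
    # Pairwise similarity between positions (dot product of feature vectors).
    energy = [
        [sum(a * b for a, b in zip(features[i], features[j])) for j in range(n)]
        for i in range(n)
    ]
    # Each row becomes a distribution over all positions.
    attention = [softmax(row) for row in energy]

    out = []
    for i in range(n):
        channels = len(features[i])
        # Global context: attention-weighted sum of the features at every position.
        context = [
            sum(attention[i][j] * features[j][c] for j in range(n))
            for c in range(channels)
        ]
        # Residual connection: local feature plus scaled global context.
        out.append([x + gamma * g for x, g in zip(features[i], context)])
    return out
```

With `gamma=0.0` the output equals the input, so the global context acts as an additive refinement of each local feature.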

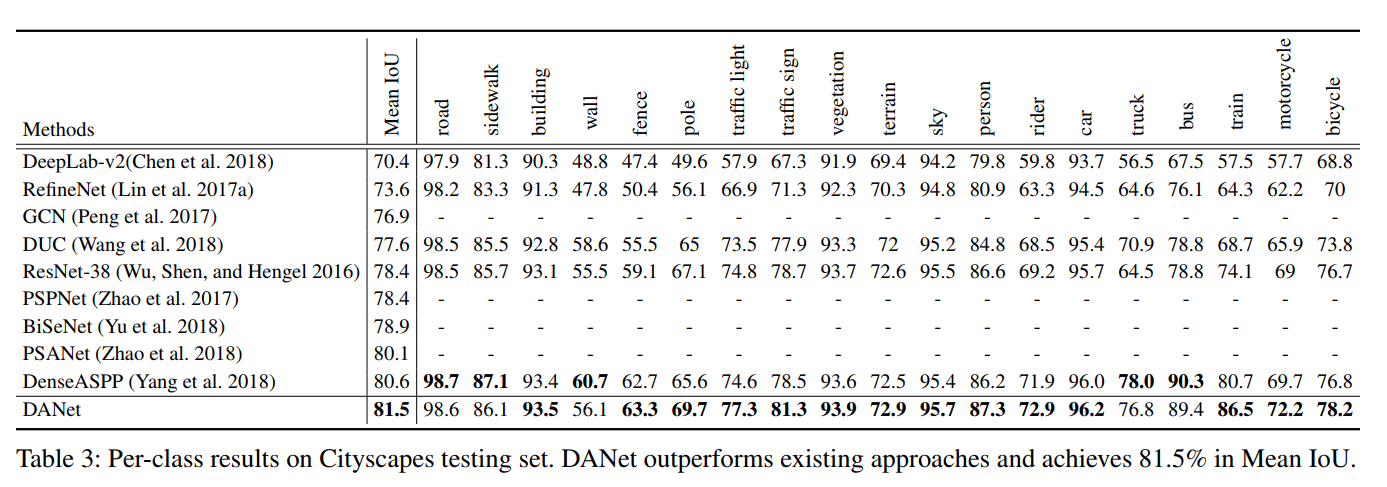

Cityscapes testing set result

We train our DANet-101 with only finely annotated data and submit our test results to the official evaluation server.

Usage

1. Install PyTorch

- The code is tested on Python 3.6 and the official PyTorch at commit fd25a2a; please install PyTorch from source.

- The code is modified from PyTorch-Encoding.

2. Clone the repository:

git clone https://github.com/junfu1115/DANet.git

cd DANet

python setup.py install

3. Dataset

- Download the Cityscapes dataset and convert it to 19 categories.

- Put the dataset in the folder ./datasets
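The conversion to 19 categories amounts to remapping each raw Cityscapes label ID to a training ID and marking everything else as ignored. The sketch below shows that remapping on a small nested list. The mapping table and the ignore value 255 are assumed to follow the standard Cityscapes label definitions, so verify them against the official Cityscapes scripts before relying on them; `to_train_ids` is a hypothetical helper name.

```python
# Assumed mapping from raw Cityscapes label IDs to the 19 training IDs.
# Check against the official Cityscapes label definitions before use.
IGNORE_LABEL = 255
CITYSCAPES_ID_TO_TRAIN_ID = {
    7: 0,    # road
    8: 1,    # sidewalk
    11: 2,   # building
    12: 3,   # wall
    13: 4,   # fence
    17: 5,   # pole
    19: 6,   # traffic light
    20: 7,   # traffic sign
    21: 8,   # vegetation
    22: 9,   # terrain
    23: 10,  # sky
    24: 11,  # person
    25: 12,  # rider
    26: 13,  # car
    27: 14,  # truck
    28: 15,  # bus
    31: 16,  # train
    32: 17,  # motorcycle
    33: 18,  # bicycle
}


def to_train_ids(label_rows):
    """Remap a 2D list of raw label IDs; unknown IDs become IGNORE_LABEL."""
    return [
        [CITYSCAPES_ID_TO_TRAIN_ID.get(pixel, IGNORE_LABEL) for pixel in row]
        for row in label_rows
    ]
```

For real label images, the same lookup would typically be applied with an array library rather than nested lists.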

4. Evaluation

- Download the trained model DANet101 and put it in the folder ./danet/cityscapes/model

- The evaluation code is in the folder ./danet/cityscapes

- Change into the danet directory: cd danet

- For single-scale testing, run:

CUDA_VISIBLE_DEVICES=0,1,2,3 python test.py --dataset cityscapes --model danet --resume-dir cityscapes/model --base-size 2048 --crop-size 768 --workers 1 --backbone resnet101 --multi-grid --multi-dilation 4 8 16 --eval

- For multi-scale testing, run:

CUDA_VISIBLE_DEVICES=0,1,2,3 python test.py --dataset cityscapes --model danet --resume-dir cityscapes/model --base-size 2048 --crop-size 1024 --workers 1 --backbone resnet101 --multi-grid --multi-dilation 4 8 16 --eval --multi-scales

- To visualize the results of DANet-101, run:

CUDA_VISIBLE_DEVICES=0,1,2,3 python test.py --dataset cityscapes --model danet --resume-dir cityscapes/model --base-size 2048 --crop-size 768 --workers 1 --backbone resnet101 --multi-grid --multi-dilation 4 8 16

Evaluation Result:

The expected scores are as follows:

('ss' denotes single-scale testing and 'ms' denotes multi-scale testing)

DANet101 on the Cityscapes val set (mIoU/pAcc): 79.93/95.97 (ss) and 81.49/96.41 (ms)
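For readers unfamiliar with the two reported metrics, the following sketch shows one common way to compute pixel accuracy (pAcc) and mean intersection-over-union (mIoU) from a confusion matrix. It is an illustration under assumptions: the function names are hypothetical, pixels labeled 255 are assumed to be ignored, and classes absent from both prediction and ground truth are skipped, which may differ from the repository's evaluation code.

```python
def confusion_matrix(pred, target, num_classes, ignore_label=255):
    """Count (target, prediction) pairs over flat lists of class IDs."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for p, t in zip(pred, target):
        if t == ignore_label:
            continue  # pixels without a valid label are not scored
        matrix[t][p] += 1
    return matrix


def pixel_accuracy(matrix):
    """Fraction of scored pixels whose prediction matches the target."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total if total else 0.0


def mean_iou(matrix):
    """Average of per-class TP / (TP + FP + FN) over classes that occur."""
    n = len(matrix)
    ious = []
    for c in range(n):
        tp = matrix[c][c]
        fn = sum(matrix[c]) - tp
        fp = sum(matrix[r][c] for r in range(n)) - tp
        union = tp + fp + fn
        if union > 0:
            ious.append(tp / union)
    return sum(ious) / len(ious) if ious else 0.0
```

The confusion matrix is accumulated over the whole validation set before the metrics are computed, so mIoU is not an average of per-image scores in this sketch.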


5. Training:

- The training code is in the folder ./danet/cityscapes

- Change into the danet directory: cd danet

- You can reproduce our result by running:

CUDA_VISIBLE_DEVICES=0,1,2,3 python train.py --dataset cityscapes --model danet --backbone resnet101 --checkname danet101 --base-size 1024 --crop-size 768 --epochs 240 --batch-size 8 --lr 0.003 --workers 2 --multi-grid --multi-dilation 4 8 16

Note: We adopt multiple losses at the end of the network for better training.
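As a rough illustration of what adopting multiple losses at the end of the network can look like, the sketch below sums a cross-entropy term over several output heads for a single pixel. The number of heads, the equal default weights, and the function names are assumptions made for this example and are not taken from the training code.

```python
import math


def cross_entropy(logits, target):
    """Cross-entropy for one pixel: -log(softmax(logits)[target])."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(v - m) for v in logits))
    return log_sum_exp - logits[target]


def multi_output_loss(outputs, target, weights=None):
    """Weighted sum of cross-entropy losses over several output heads.

    outputs: list of logit vectors, one per head (hypothetical layout).
    weights: optional per-head weights; equal weighting is an assumption.
    """
    if weights is None:
        weights = [1.0] * len(outputs)
    return sum(w * cross_entropy(o, target) for w, o in zip(weights, outputs))
```

Each head receives its own gradient signal from the shared target, which is the usual motivation for supervising intermediate or branch outputs.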

Citation

If DANet is useful for your research, please consider citing:

@inproceedings{fu2018dual,

title={Dual Attention Network for Scene Segmentation},

author={Jun Fu and Jing Liu and Haijie Tian and Yong Li and Yongjun Bao and Zhiwei Fang and Hanqing Lu},

booktitle={The IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

year={2019}

}

Acknowledgement

Thanks to PyTorch-Encoding, especially for the Synchronized BN!