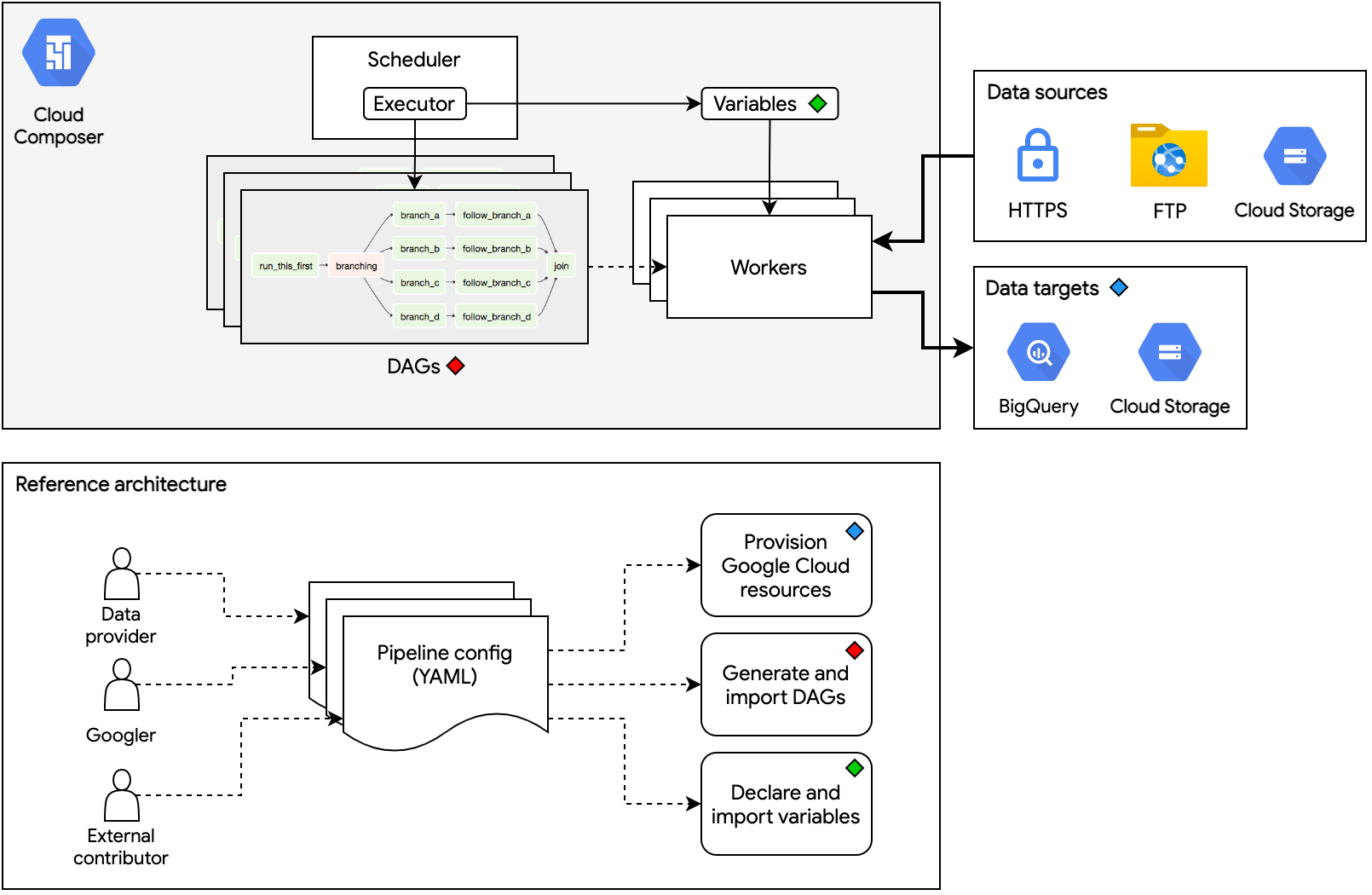

We're using the BQ Public Datasets Pipeline to deploy a cloud-native data pipeline architecture for onboarding datasets within Argolis.

- Access to Cloudtop Env
- Access to Argolis Env
  - Ensure Argolis Env is set up to allow for creation of Composer 2 env. More context found here
- Python `>=3.6.10,<3.9`. We currently use `3.8`. For more info, see the Cloud Composer version list.
- Familiarity with Apache Airflow (`>=v2.1.0`)
- pipenv for creating similar Python environments via `Pipfile.lock`
- gcloud command-line tool with Google Cloud Platform credentials configured. Instructions can be found here.
- Terraform `>=v0.15.1`
- Google Cloud Composer environment running Apache Airflow `>=2.1.0` and Cloud Composer `>=2.0.0`. To create a new Cloud Composer environment, see this guide.
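As a quick sanity check on the Python requirement above, here is a minimal sketch that tests whether an interpreter version falls inside the `>=3.6.10,<3.9` range. The function name `python_version_supported` is hypothetical and not part of the repo.

```python
import sys

# Bounds taken from the requirement above: >=3.6.10,<3.9
MIN_VERSION = (3, 6, 10)
MAX_VERSION_EXCLUSIVE = (3, 9)


def python_version_supported(version=None):
    """Return True if `version` (a tuple such as (3, 8, 12)) is in range.

    Defaults to the version of the running interpreter.
    """
    if version is None:
        version = sys.version_info[:3]
    version = tuple(version)
    # Tuples compare element by element, so (3, 9, 0) is not below (3, 9).
    return MIN_VERSION <= version < MAX_VERSION_EXCLUSIVE


if __name__ == "__main__":
    print("supported" if python_version_supported() else "unsupported")
```

Running the file on Cloudtop reports whether the active interpreter is one the pipeline tooling expects.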

- SSH into your Cloudtop instance.
- Clone this repo with `git clone https://github.com/llooker/bq_dataset_pipeline`, then `cd bq_dataset_pipeline`.
- Authenticate to Argolis via gcloud by running `gcloud auth login` followed by `gcloud auth application-default login`.

We use Pipenv to make environment setup more deterministic and uniform across different machines. If you haven't done so, install Pipenv using these instructions.

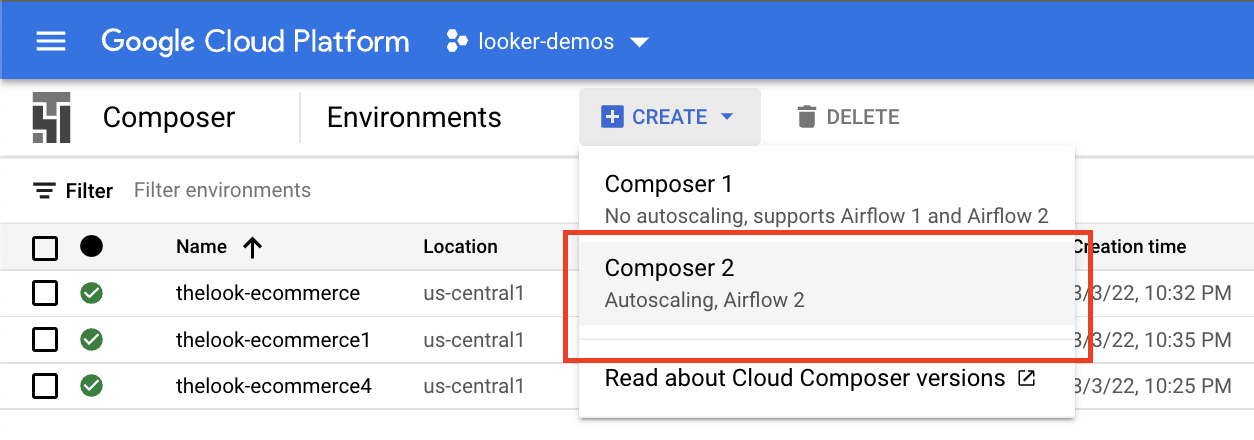

Install the project dependencies:

    pipenv install --ignore-pipfile --dev

- Within your Argolis project, create your Composer 2 environment (we named ours `thelook-ecommerce`, hosted in `us-central1`, if you want to follow along).
  - If there's an issue spinning up a Composer environment, your Argolis Env most likely isn't set up properly (more context here).
- Once your Composer 2 environment is up and running (takes ~25 min), click into it and set your environment variables.

| NAME | VALUE |
|---|---|
| AIRFLOW_VAR_GCP_PROJECT | Your GCP Argolis project name |
| GCS bucket of the Composer environment | Your Composer environment's bucket name |
| AIRFLOW_VAR_AIRFLOW_HOME | /home/airflow/gcs |

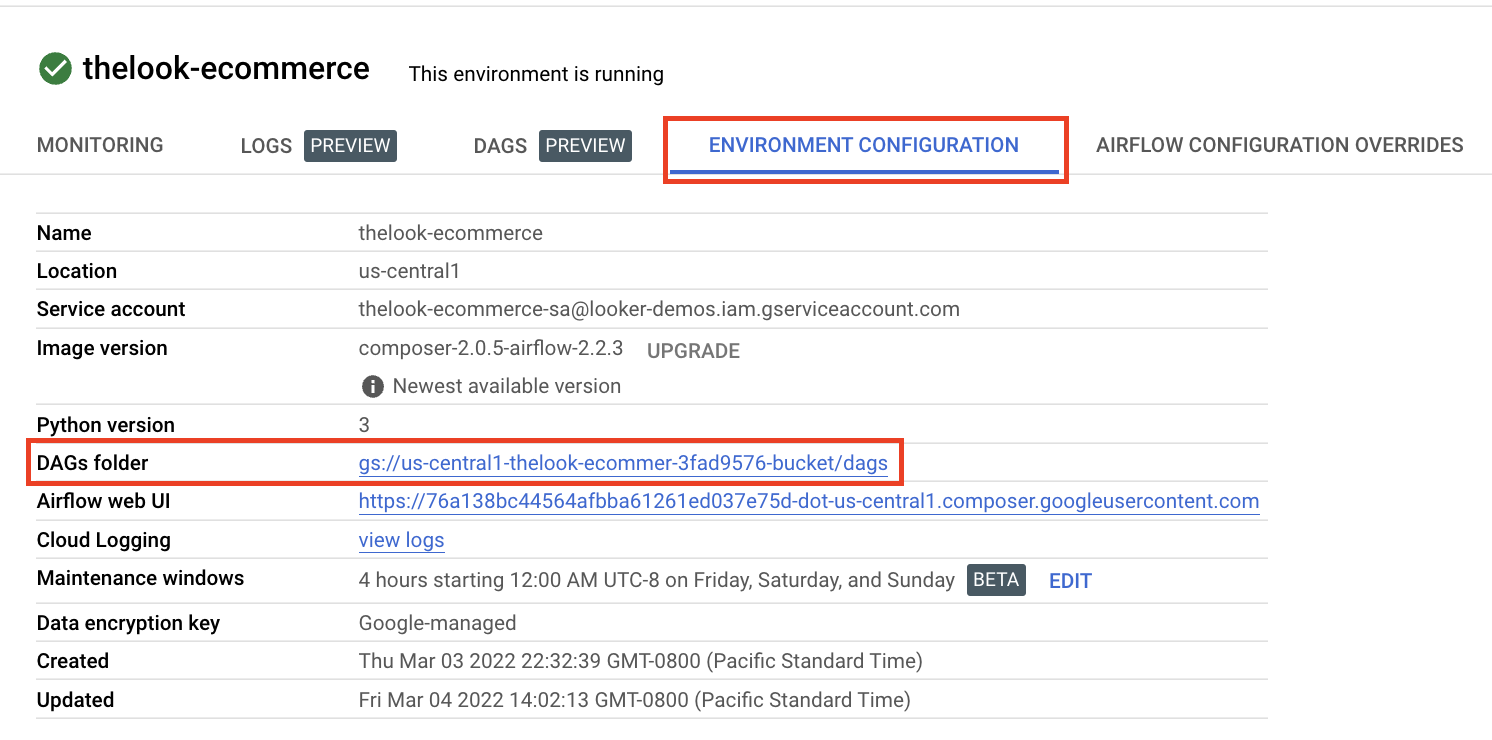

- Note that the GCS bucket for your Composer environment can be found by clicking into the environment > Environment Configuration tab > DAGs Folder (copy only the bucket name; don't include any folders).
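To illustrate "copy only the bucket name", here is a minimal sketch that strips the scheme and any folders from a DAGs folder value. It assumes the value looks like `gs://<bucket>/dags`; the helper name `bucket_name_from_dags_folder` and the example bucket are hypothetical.

```python
def bucket_name_from_dags_folder(dags_folder):
    """Return only the bucket name from a value such as gs://<bucket>/dags.

    Assumption: the DAGs Folder field holds a gs:// URI. A bare bucket
    name (no scheme, no folders) is returned unchanged.
    """
    prefix = "gs://"
    path = dags_folder.strip()
    if path.startswith(prefix):
        path = path[len(prefix):]
    # The bucket name is everything before the first "/".
    return path.split("/", 1)[0]


if __name__ == "__main__":
    # Hypothetical example value, not a real bucket.
    print(bucket_name_from_dags_folder("gs://us-central1-example-bucket/dags"))
```

The returned value is what goes into the bucket fields used in the rest of this walkthrough.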

On Cloudtop, run the following command from the project root (be sure to replace the placeholders with your environment's values):

    $ pipenv run python scripts/generate_terraform.py \
        --dataset thelook_ecommerce \
        --gcp-project-id $GCP_PROJECT_ID \
        --region $REGION \
        --bucket-name-prefix $UNIQUE_BUCKET_PREFIX \
        --tf-apply

| PLACEHOLDER | Description |
|---|---|
| $GCP_PROJECT_ID | Your Argolis project name |
| $REGION | Region where your Composer 2 environment is deployed |
| $UNIQUE_BUCKET_PREFIX | Your Composer Env bucket name |

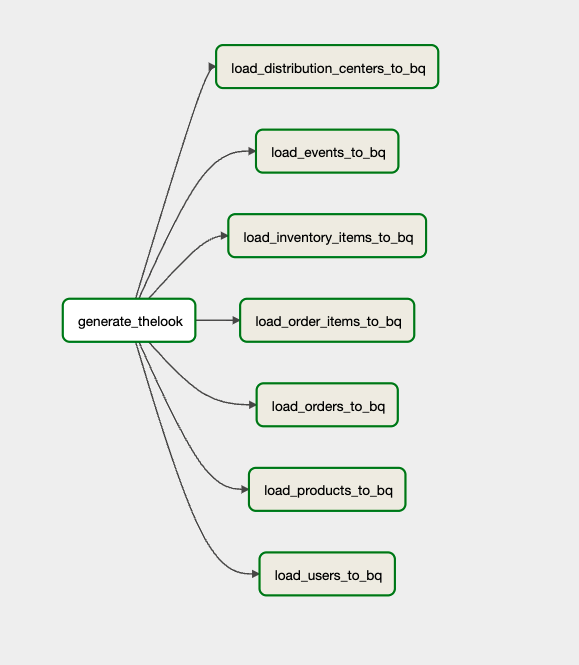

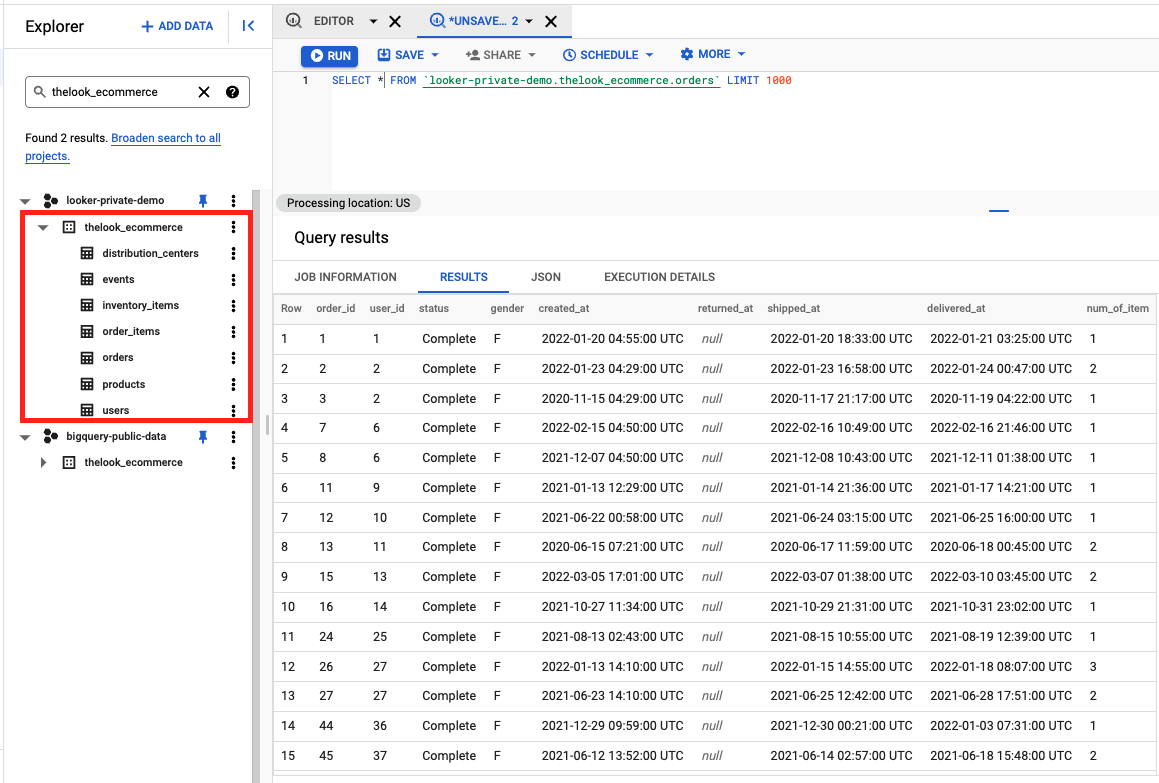

- This generates the Terraform files and creates the infrastructure within your GCP project (e.g. you should now see a dataset named `thelook_ecommerce` with multiple tables defined).
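If you would rather assemble the `generate_terraform.py` invocation programmatically than substitute placeholders by hand, a minimal sketch might look like the following. The flags are the ones shown in the command above; the helper name `build_generate_terraform_cmd` and the example values are hypothetical.

```python
import shlex


def build_generate_terraform_cmd(gcp_project_id, region, bucket_name_prefix,
                                 dataset="thelook_ecommerce"):
    """Build the argument list for the generate_terraform.py step."""
    return [
        "pipenv", "run", "python", "scripts/generate_terraform.py",
        "--dataset", dataset,
        "--gcp-project-id", gcp_project_id,
        "--region", region,
        "--bucket-name-prefix", bucket_name_prefix,
        "--tf-apply",
    ]


if __name__ == "__main__":
    # Placeholder values for illustration only.
    cmd = build_generate_terraform_cmd(
        "my-argolis-project", "us-central1", "my-composer-bucket"
    )
    # Print a shell-safe version of the command for review before running it.
    print(" ".join(shlex.quote(arg) for arg in cmd))
```

Passing the list to `subprocess.run(cmd, check=True)` from the project root would execute it; printing it first makes it easy to confirm the values before Terraform applies anything.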

Run the following command from the project root:

    $ pipenv run python scripts/generate_dag.py \
        --dataset thelook_ecommerce \
        --pipeline $PIPELINE

| PLACEHOLDER | Description |
|---|---|
| $PIPELINE | Name of your Composer 2 Env - in our example it's called thelook-ecommerce |

- This builds our Docker image, uploads it to Container Registry, and generates our DAG file using the `pipeline.yaml` file.
- Within our Argolis project, navigate to `Images - Container Registry` and copy the repo name containing our Docker image (e.g. `gcr.io/looker-demos/thelook_ecommerce__run_thelook_kub`).
- On Cloudtop, navigate to your `.dev/datasets/thelook_ecommerce/pipelines` folder.
- Create a new file called `thelook_ecommerce_variables.json`.
- Paste in the JSON below, replacing the `docker_image` value with your environment's Docker image repository link if needed:

      {
        "thelook_ecommerce": {
          "docker_image": "gcr.io/looker-demos/thelook_ecommerce__run_thelook_kub"
        }
      }

Back in the project root directory, run the following command:

    $ pipenv run python scripts/deploy_dag.py \
        --dataset thelook_ecommerce \
        --composer-env $CLOUD_COMPOSER_ENVIRONMENT_NAME \
        --composer-bucket $CLOUD_COMPOSER_BUCKET \
        --composer-region $CLOUD_COMPOSER_REGION

| PLACEHOLDER | Description |
|---|---|
| $CLOUD_COMPOSER_ENVIRONMENT_NAME | Name of your Cloud Composer environment - in our example it's called thelook-ecommerce |
| $CLOUD_COMPOSER_BUCKET | Your Composer Env bucket name |
| $CLOUD_COMPOSER_REGION | Region your Composer Env is deployed in |
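Before running the deploy command above, it can help to confirm that the variables file created earlier parses and actually contains a `docker_image` entry. The following is a minimal sketch, assuming the file has the JSON shape shown earlier; the helper name `load_docker_image` is hypothetical and not part of the repo.

```python
import json
from pathlib import Path


def load_docker_image(variables_path, dataset="thelook_ecommerce"):
    """Read the variables JSON and return the docker_image for `dataset`.

    Raises ValueError if the key is missing or empty, so a typo in the
    file is caught before the DAG is deployed.
    """
    data = json.loads(Path(variables_path).read_text())
    image = data.get(dataset, {}).get("docker_image")
    if not image:
        raise ValueError(
            "no docker_image set for {!r} in {}".format(dataset, variables_path)
        )
    return image
```

For example, calling `load_docker_image(".dev/datasets/thelook_ecommerce/pipelines/thelook_ecommerce_variables.json")` from the project root returns the image string, or raises if the file is malformed or incomplete.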

- Go to your Airflow Composer Env and view the DAG to make sure it's running (it takes ~15-25 minutes for the data to be generated and uploaded into BQ).

- Query your BQ table and data!