This repository contains the implementation of our paper:

PSNet: Fast Data Structuring for Hierarchical Deep Learning on Point Cloud

Published in: IEEE Transactions on Circuits and Systems for Video Technology

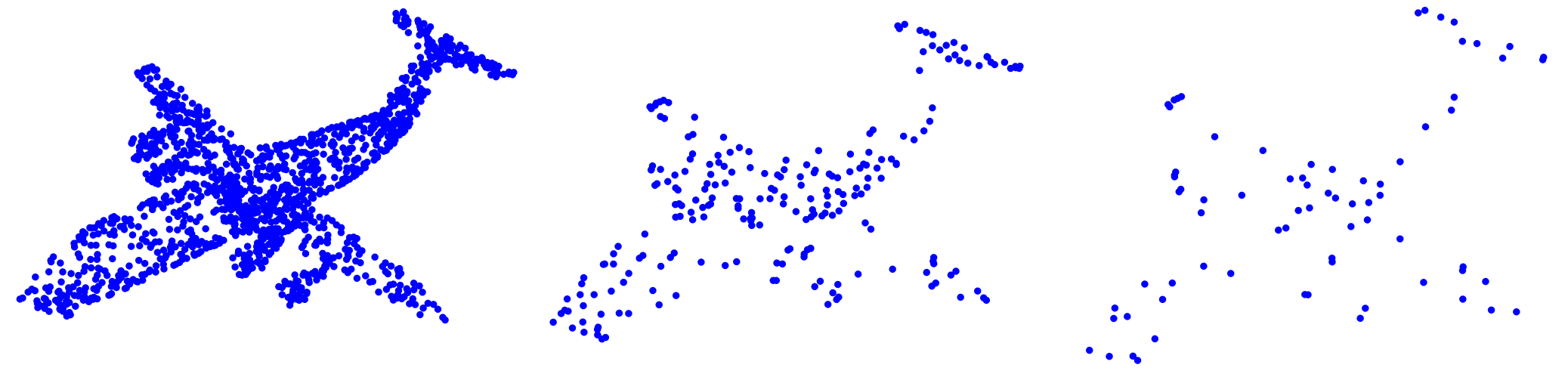

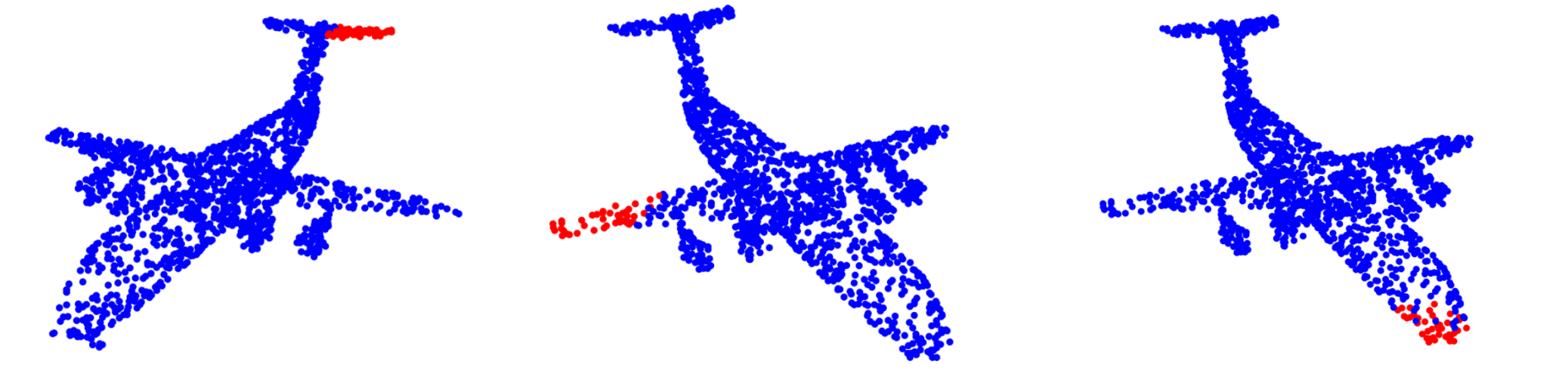

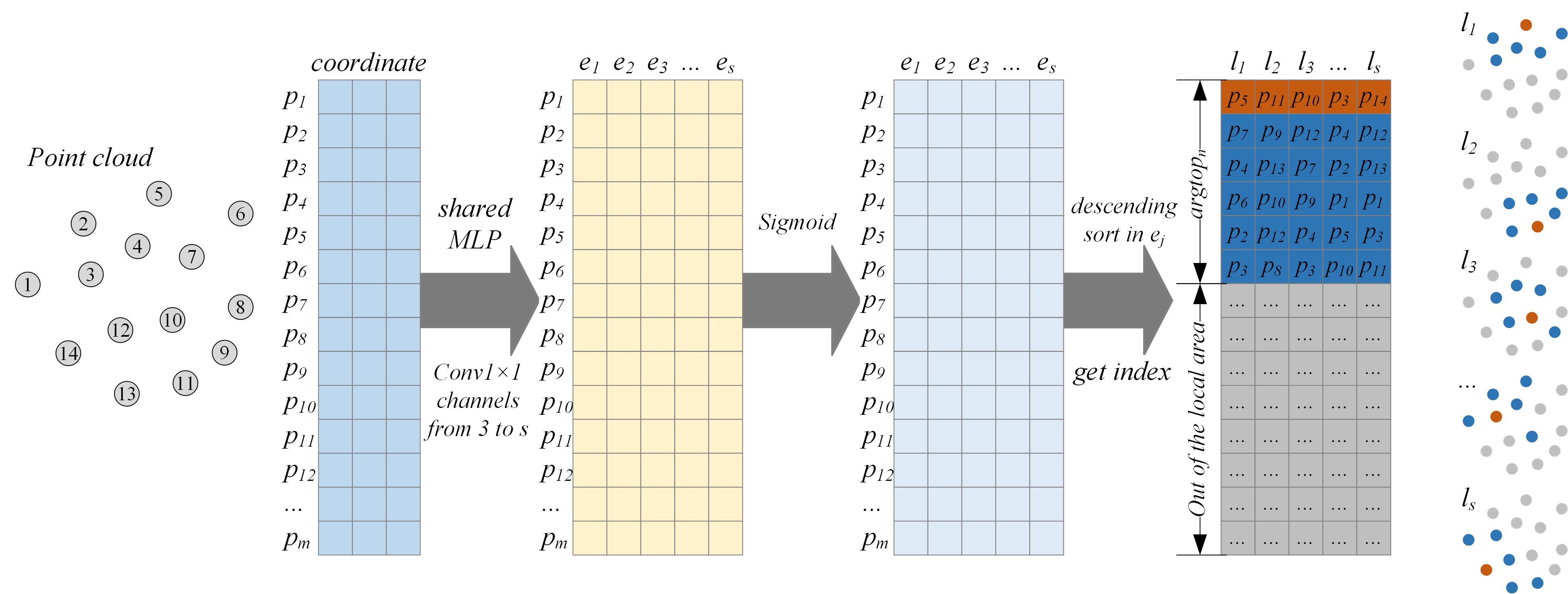

Point Structuring Net (PSN) is a differentiable, fast grouping and sampling method for deep learning on point clouds that can be applied to mainstream point cloud deep learning models. PSN performs grouping and sampling at the same time. Because it does not use relationships between points as the grouping reference, its inference speed is independent of the number of points and it is friendly to parallel implementation, which effectively reduces the time spent on sampling and grouping.
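The idea of sampling and grouping without point-to-point relationships can be illustrated with a small, hypothetical PyTorch sketch. It is not the code in models/PSN.py: the class name `ScoreGroupingSketch`, the use of `nn.Linear` layers, the per-region top-k selection and the softmax-weighted sampled point are all assumptions made for illustration. Each point's coordinates are scored independently for every output region, so no N×N distance matrix is ever built.

```python
from typing import List, Tuple

import torch
import torch.nn as nn


class ScoreGroupingSketch(nn.Module):
    """Hypothetical illustration of score-based sampling and grouping.

    Assumptions (not taken from models/PSN.py): a shared per-point MLP maps
    xyz (3 channels) to one score per region (num_to_sample channels), each
    region keeps its max_local_num highest-scoring points, and the sampled
    point is a softmax-weighted mean of that group.
    """

    def __init__(self, num_to_sample: int, max_local_num: int, mlp: List[int]):
        super().__init__()
        channels = [3] + list(mlp) + [num_to_sample]
        layers: List[nn.Module] = []
        for c_in, c_out in zip(channels[:-1], channels[1:]):
            layers += [nn.Linear(c_in, c_out), nn.ReLU()]
        # Drop the final ReLU so the region scores are unconstrained.
        self.score_mlp = nn.Sequential(*layers[:-1])
        self.max_local_num = max_local_num

    def forward(self, xyz: torch.Tensor) -> Tuple[torch.Tensor, torch.Tensor]:
        # xyz: [B, N, 3]; every point is scored without looking at other points.
        scores = self.score_mlp(xyz).transpose(1, 2)            # [B, S, N]
        top = scores.topk(self.max_local_num, dim=-1)           # top-k points per region
        idx = top.indices                                       # [B, S, K]
        batch = torch.arange(xyz.shape[0], device=xyz.device).view(-1, 1, 1)
        grouped = xyz[batch, idx]                               # [B, S, K, 3]
        # Softmax over the selected scores keeps a gradient path to the MLP.
        weights = torch.softmax(top.values, dim=-1)             # [B, S, K]
        sampled = (weights.unsqueeze(-1) * grouped).sum(dim=2)  # [B, S, 3]
        return sampled, grouped
```

For example, `ScoreGroupingSketch(512, 32, [32, 256])` applied to a [B, 1024, 3] tensor returns tensors of shape [B, 512, 3] and [B, 512, 32, 3]. Since top-k indices are not differentiable, only the softmax weights carry gradients back to the scoring MLP in this sketch.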

Point Structuring Net has been tested with PointNet++ [1], PointConv [2], RS-CNN [3] and GAC [4]. On classification, part segmentation and scene segmentation tasks it has no obvious adverse effect on the performance of these models, while their training and inference speed improves significantly.
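To check speed differences like these on your own hardware, a small timing helper is enough. The sketch below is framework-agnostic; `time_call` is a hypothetical helper, and for GPU code you should pass a synchronisation function such as `torch.cuda.synchronize`, because CUDA kernels run asynchronously and the clock would otherwise stop before the work finishes.

```python
import statistics
import time
from typing import Callable, Optional


def time_call(
    fn: Callable[[], object],
    repeats: int = 20,
    warmup: int = 5,
    sync: Optional[Callable[[], None]] = None,
) -> float:
    """Return the median wall-clock time of fn() in seconds (hypothetical helper)."""
    # Warm-up runs absorb one-off costs such as lazy initialisation and memory allocation.
    for _ in range(warmup):
        fn()
    if sync is not None:
        sync()
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        if sync is not None:
            sync()  # wait for queued GPU work before stopping the clock
        samples.append(time.perf_counter() - start)
    return statistics.median(samples)
```

For example, inside `torch.no_grad()`, time `lambda: psn_layer(coordinate=xyz, feature=feats)` with `sync=torch.cuda.synchronize`, where `xyz` and `feats` are your own input tensors. The median is reported because it is less sensitive to occasional slow runs than the mean.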

The core file of Point Structuring Net is `models/PSN.py`.

Requirements:

- Python 3.7 or newer
- PyTorch 1.5 or newer
- NVIDIA® CUDA® Toolkit 9.2 or newer
- NVIDIA® CUDA® Deep Neural Network library (cuDNN) 7.2 or newer
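To check an existing environment against these minimums, the sketch below compares version strings numerically, so that 1.14 counts as newer than 1.5 (a plain string comparison would get this wrong). `parse_version`, `meets_minimum` and `report` are hypothetical helpers; the PyTorch attributes they read (`torch.__version__`, `torch.version.cuda`, `torch.backends.cudnn.version()`) are standard.

```python
import re
import sys
from typing import Tuple


def parse_version(text: str) -> Tuple[int, ...]:
    """Leading numeric part of a version string: '1.7.0+cu102' -> (1, 7, 0)."""
    match = re.match(r"\d+(?:\.\d+)*", text)
    if match is None:
        raise ValueError(f"unrecognised version string: {text!r}")
    return tuple(int(part) for part in match.group(0).split("."))


def meets_minimum(found: str, required: str) -> bool:
    """Compare versions numerically, padding with zeros so '1.5' equals '1.5.0'."""
    a, b = parse_version(found), parse_version(required)
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


def report() -> None:
    print("Python", sys.version.split()[0], "OK" if sys.version_info >= (3, 7) else "too old")
    try:
        import torch
    except ImportError:
        print("PyTorch is not installed")
        return
    print("PyTorch", torch.__version__, "OK" if meets_minimum(torch.__version__, "1.5") else "too old")
    cuda = torch.version.cuda  # None for CPU-only builds
    if cuda is None:
        print("CUDA: not available in this PyTorch build")
    else:
        print("CUDA", cuda, "OK" if meets_minimum(cuda, "9.2") else "too old")
    # cuDNN is reported as an integer, e.g. 7605 for 7.6.5, or None if unavailable.
    print("cuDNN", torch.backends.cudnn.version())


if __name__ == "__main__":
    report()
```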

You can easily install the software dependencies with conda:

```shell
conda install pytorch cudatoolkit cudnn -c pytorch
```

You may import the PSNet PyTorch module by:

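```python
import PSN as psn

psn_layer = psn.PSN(num_to_sample=512, max_local_num=32, mlp=[32, 256])
```

The `mlp` argument lists only the middle channel widths of PSN, because the first and last layers are fixed: the first layer must have 3 channels and the last must have as many channels as the sampling number (`num_to_sample`).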
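Because the first and last widths are fixed, the full channel list is `[3] + mlp + [num_to_sample]`. The hypothetical helpers below build that list and, assuming each layer is a fully connected (or 1×1 convolution) layer with a bias and no normalisation, count its parameters. Under that assumption the count depends only on the channel widths, not on the number of input points.

```python
from typing import List


def psn_channels(num_to_sample: int, mlp: List[int]) -> List[int]:
    """Full channel list: 3 input coordinates, the middle widths, one output per sample."""
    return [3] + list(mlp) + [num_to_sample]


def dense_param_count(channels: List[int]) -> int:
    """Weights plus biases of a stack of dense layers (assumed layer type)."""
    return sum(c_in * c_out + c_out for c_in, c_out in zip(channels[:-1], channels[1:]))


channels = psn_channels(num_to_sample=512, mlp=[32, 256])
print(channels)                     # [3, 32, 256, 512]
print(dense_param_count(channels))  # 140160
```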

```python
sampled_points, grouped_points, sampled_feature, grouped_feature = psn_layer(
    coordinate={coordinates of point cloud}, feature={feature of point cloud})
```

`sampled_points` are the sampled points and `grouped_points` are the grouped points.

`sampled_feature` is the sampled feature and `grouped_feature` is the grouped feature.

`{coordinates of point cloud}` is a `torch.Tensor` of shape [batch size, number of points, 3].

`{feature of point cloud}` is a `torch.Tensor` of shape [batch size, number of points, D], where D is the feature dimension.
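A quick shape check before calling the layer catches a common mistake: passing channels-first tensors of shape [batch size, channels, number of points], a layout used by some PointNet++ code, instead of the [batch size, number of points, channels] layout described above. `check_psn_inputs` is a hypothetical helper that takes plain shapes, so it also accepts `torch.Size` objects.

```python
from typing import Sequence


def check_psn_inputs(coordinate_shape: Sequence[int], feature_shape: Sequence[int]) -> None:
    """Raise ValueError unless the shapes are [B, N, 3] and [B, N, D]."""
    if len(coordinate_shape) != 3 or coordinate_shape[2] != 3:
        raise ValueError(f"coordinate must be [B, N, 3], got {list(coordinate_shape)}")
    if len(feature_shape) != 3:
        raise ValueError(f"feature must be [B, N, D], got {list(feature_shape)}")
    if tuple(feature_shape[:2]) != tuple(coordinate_shape[:2]):
        raise ValueError(
            f"feature {list(feature_shape)} must share batch size and point count "
            f"with coordinate {list(coordinate_shape)}"
        )


check_psn_inputs((8, 1024, 3), (8, 1024, 6))  # passes silently
```

With real tensors, call `check_psn_inputs(xyz.shape, feats.shape)`; if they are channels-first, `x.transpose(1, 2)` converts [B, C, N] to [B, N, C].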

The multi-scale variant, `PSNMSG`, is used in the same way:

```python
psn_msg_layer = psn.PSNMSG(num_to_sample=512, msg_n=[32, 64], mlp=[32, 256])
```

The `msg_n` argument is the list of n values, one per scale.

```python
sampled_points, grouped_points_msg, sampled_feature, grouped_feature_msg = psn_msg_layer(
    coordinate={coordinates of point cloud}, feature={feature of point cloud})
```

`sampled_points` are the sampled points and `grouped_points_msg` is the list of multi-scale grouped points.

`sampled_feature` is the sampled feature and `grouped_feature_msg` is the list of multi-scale grouped features.
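A common way to consume multi-scale groups, following the PointNet++ MSG design, is to reduce each scale over its local points and concatenate the results along the channel axis. The sketch below assumes each entry of `grouped_feature_msg` has shape [batch size, number of samples, n_i, D_i], with n_i taken from `msg_n`; check the actual layout in models/PSN.py before relying on it. `pool_multiscale` is a hypothetical helper, and it omits the per-scale MLPs that PointNet++ applies before pooling.

```python
from typing import List

import torch


def pool_multiscale(grouped_feature_msg: List[torch.Tensor]) -> torch.Tensor:
    """Max-pool each scale over its local points, then concatenate the scales.

    Assumed input: one tensor per scale with shape [B, S, n_i, D_i].
    Output: [B, S, sum(D_i)].
    """
    pooled = [group.max(dim=2).values for group in grouped_feature_msg]  # each [B, S, D_i]
    return torch.cat(pooled, dim=-1)


# Two scales with n = 32 and n = 64 local points and D = 6 features each.
scales = [torch.rand(4, 512, 32, 6), torch.rand(4, 512, 64, 6)]
print(pool_multiscale(scales).shape)  # torch.Size([4, 512, 12])
```

Max pooling is used here because it is invariant to the order of points inside each group, so the result does not depend on how the grouping step orders its output.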

The repository includes an experiment on PointNet++.

This experiment has been tested in the following environments:

Environment 1:

- Canonical Ubuntu 20.04.1 LTS
- Python 3.8.5
- PyTorch 1.7.0
- NVIDIA® CUDA® Toolkit 10.2.89
- NVIDIA® CUDA® Deep Neural Network library (cuDNN) 7.6.5
- Intel® Core™ i9-9900K Processor (16M Cache, up to 5.00 GHz)
- 64GB DDR4 RAM
- NVIDIA® TITAN RTX™

Environment 2:

- Microsoft Windows 11 Pro 22H2 22621.675
- Python 3.10.6
- PyTorch 1.14.0-nightly
- NVIDIA® CUDA® Toolkit 11.7.1
- AMD Ryzen™ 9 5950X Desktop Processors
- 32GB DDR4 3600 RAM
- NVIDIA® GeForce RTX 3090

Download the aligned ModelNet dataset here and save it in `data/modelnet40_normal_resampled/`. Then train the classification model:

```shell
python train_cls.py --log_dir [your log dir]
```

Download the aligned ShapeNet dataset here and save it in `data/shapenetcore_partanno_segmentation_benchmark_v0_normal/`. Then train the part segmentation model:

```shell
python train_partseg.py --normal --log_dir [your log dir]
```

Download the 3D indoor parsing dataset (S3DIS) here and save it in `data/Stanford3dDataset_v1.2_Aligned_Version/`. Then preprocess it:

```shell
cd data_utils
python collect_indoor3d_data.py
```

The processed data will be saved in `data/stanford_indoor3d/`.
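Before training, you can confirm that the datasets sit where the scripts expect them. The sketch below checks the directories named in this README, relative to the repository root; `EXPECTED_DIRS` and `missing_datasets` are hypothetical names, and the list reflects only the paths given here.

```python
from pathlib import Path
from typing import Dict, List

# Paths named in this README, relative to the repository root.
EXPECTED_DIRS: Dict[str, str] = {
    "ModelNet40 (classification)": "data/modelnet40_normal_resampled",
    "ShapeNet (part segmentation)": "data/shapenetcore_partanno_segmentation_benchmark_v0_normal",
    "S3DIS raw data": "data/Stanford3dDataset_v1.2_Aligned_Version",
    "S3DIS processed by collect_indoor3d_data.py": "data/stanford_indoor3d",
}


def missing_datasets(repo_root: str = ".") -> List[str]:
    """Return the names of expected dataset directories that do not exist."""
    root = Path(repo_root)
    return [name for name, rel in EXPECTED_DIRS.items() if not (root / rel).is_dir()]


if __name__ == "__main__":
    for name in missing_datasets():
        print("missing:", name, "->", EXPECTED_DIRS[name])
```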

Then train and test the semantic segmentation model:

```shell
python train_semseg.py --log_dir [your log dir]
python test_semseg.py --log_dir [your log dir] --test_area 5 --visual
```

This implementation of the experiment is heavily based on yanx27/Pointnet_Pointnet2_pytorch.

Thanks very much!

```bibtex
@ARTICLE{li-psnet-tcsvt,
  author={Li, Luyang and He, Ligang and Gao, Jinjin and Han, Xie},
  journal={IEEE Transactions on Circuits and Systems for Video Technology},
  title={PSNet: Fast Data Structuring for Hierarchical Deep Learning on Point Cloud},
  year={2022},
  volume={32},
  number={10},
  pages={6835-6849},
  doi={10.1109/TCSVT.2022.3171968}}
```

[1] Qi, Charles Ruizhongtai, et al. “PointNet++: Deep Hierarchical Feature Learning on Point Sets in a Metric Space.” Advances in Neural Information Processing Systems, 2017, pp. 5099–5108.

[2] Wu, Wenxuan, et al. “PointConv: Deep Convolutional Networks on 3D Point Clouds.” 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2019, pp. 9621–9630.

[3] Liu, Yongcheng, et al. “Relation-Shape Convolutional Neural Network for Point Cloud Analysis.” 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2019, pp. 8895–8904.

[4] Wang, Lei, et al. “Graph Attention Convolution for Point Cloud Semantic Segmentation.” 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2019, pp. 10296–10305.