Search for messages in a Kafka topic.

    $ samsa --broker localhost:9091 --topic test-samsa --from 2021-06-16T14:00:00Z --to 2021-07-06T14:00:00Z --cond object.id=20995 --type json

- Go >1.13

- Docker + docker-compose

- Clone the code locally:

      $ git clone https://github.com/lnquy/samsa
      $ cd samsa

- Start a Kafka cluster in Docker:

      $ cd deployment
      $ docker-compose up

- Open http://localhost:9000 and create your test topic; let's call it test-samsa, with partition=3 and replicationFactor=1.

- Edit the cmd/samsa/main.go file to run the writeTestData function only.

  You can use that function to publish messages (3,000,000 plaintext and 100,000 JSON messages) into the Kafka topic created above.

  After it finishes running, check http://localhost:9000 and you should see that the test-samsa topic has 3 partitions, each with ~1,030,000 messages.

      # Example: make sure you have already edited cmd/samsa/main.go to run the writeTestData function
      $ cd cmd/samsa
      $ go build
      $ ./samsa --broker localhost:9091 --topic test-samsa

-

Remove the

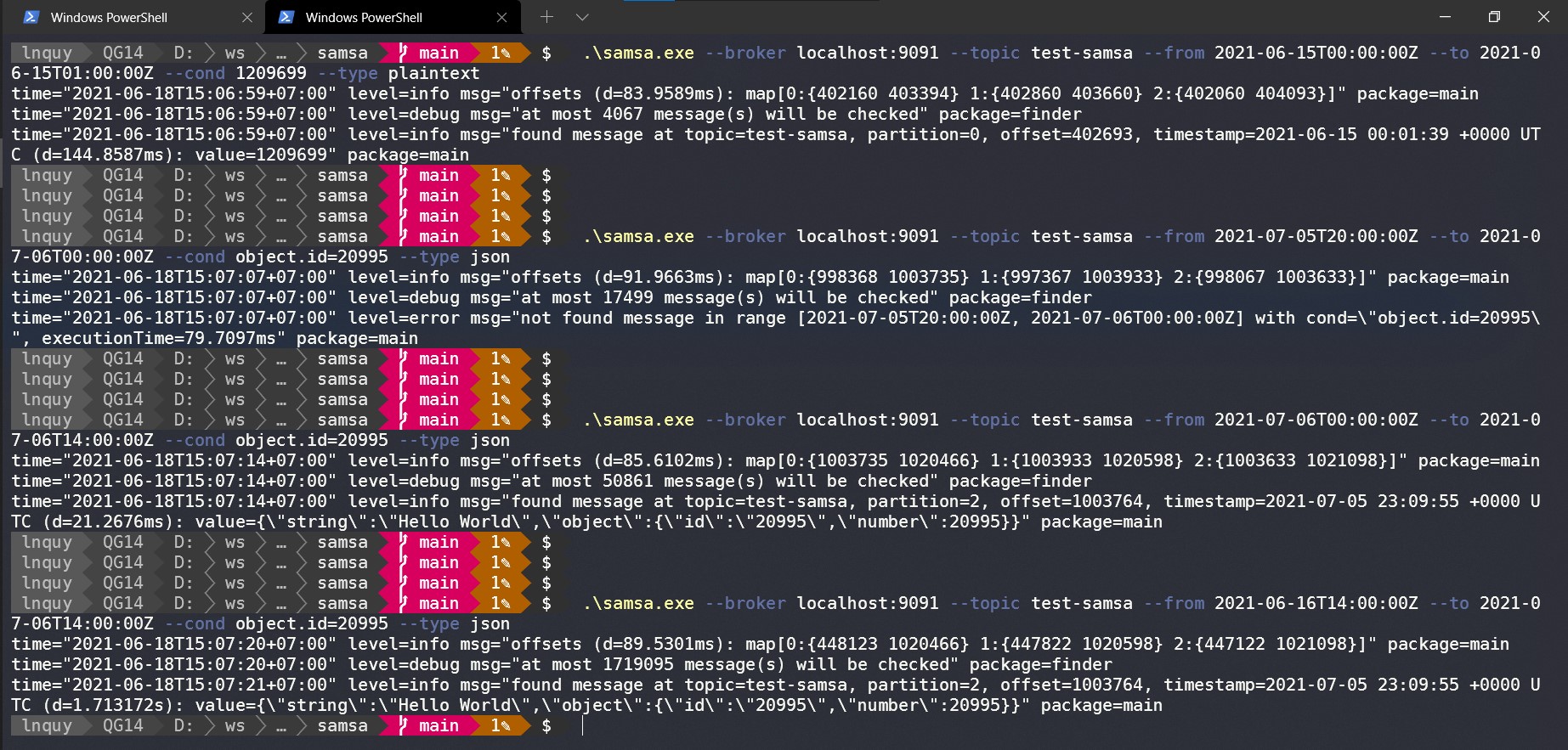

writeTestDatafunction fromcmd/samsa/main.goand we're ready to test searching message in Kafka topic now.$ go build # Plaintext exact match on message value/data. $ ./samsa --broker localhost:9091 --topic test-samsa --from 2021-06-15T00:00:00Z --to 2021-06-15T01:00:00Z --cond 1209699 --type plaintext # JSON not found in search range. $ ./samsa --broker localhost:9091 --topic test-samsa --from 2021-07-05T20:00:00Z --to 2021-07-06T00:00:00Z --cond object.id=20995 --type json # JSON found. $ ./samsa --broker localhost:9091 --topic test-samsa --from 2021-07-06T00:00:00Z --to 2021-07-06T14:00:00Z --cond object.id=20995 --type json # JSON found with bigger time range (~1,700,000 messages need to be checked). $ ./samsa --broker localhost:9091 --topic test-samsa --from 2021-06-16T14:00:00Z --to 2021-07-06T14:00:00Z --cond object.id=20995 --type json