The official code for "Towards Balanced Alignment: Modal-Enhanced Semantic Modeling for Video Moment Retrieval" (AAAI 2024).

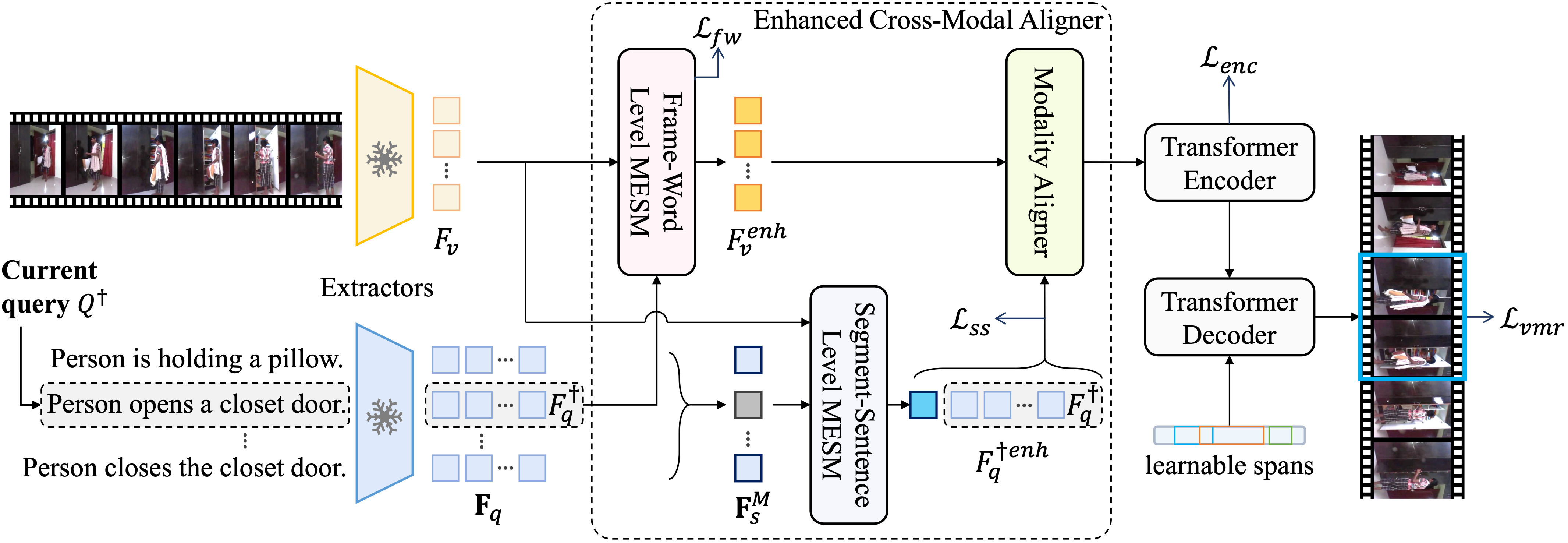

MESM focuses on the modality imbalance problem in video moment retrieval (VMR): the semantic richness inherent in a video far exceeds that of a given limited-length sentence. The problem exists at both the frame-word level and the segment-sentence level.

MESM addresses this problem by applying modal-enhanced semantic modeling at both levels.

This work was tested with Python 3.8.12, CUDA 11.3, and Ubuntu 18.04. You can use the provided Docker environment or install the environment manually.

Assuming you are now at path /.

git clone https://github.com/lntzm/MESM.git

docker pull lntzm/pytorch1.11.0-cuda11.3-cudnn8-devel:v1.0

docker run -it --gpus=all --shm-size=64g --init -v /MESM/:/MESM/ lntzm/pytorch1.11.0-cuda11.3-cudnn8-devel:v1.0 /bin/bash

# You should also download nltk_data in the container.

python -c "import nltk; nltk.download('all')"

To install the environment manually instead:

conda create -n MESM python=3.8

conda activate MESM

conda install pytorch==1.11.0 torchvision==0.12.0 cudatoolkit=11.3 -c pytorch

pip install -r requirements.txt

# You should also download nltk_data.

python -c "import nltk; nltk.download('all')"

The structure of the data folder is as follows:

data

├── charades

│ ├── annotations

│ │ ├── charades_sta_test.txt

│ │ ├── charades_sta_train.txt

│ │ ├── Charades_v1_test.csv

│ │ ├── Charades_v1_train.csv

│ │ ├── CLIP_tokenized_count.txt

│ │ ├── GloVe_tokenized_count.txt

│ │ └── glove.pkl

│ ├── clip_image.hdf5

│ ├── i3d.hdf5

│ ├── slowfast.hdf5

│ └── vgg.hdf5

├── Charades-CD

│ ├── charades_test_iid.json

│ ├── charades_test_ood.json

│ ├── charades_train.json

│ ├── charades_val.json

│ ├── CLIP_tokenized_count.txt -> ../charades/annotations/CLIP_tokenized_count.txt

│ └── glove.pkl -> ../charades/annotations/glove.pkl

├── Charades-CG

│ ├── novel_composition.json

│ ├── novel_word.json

│ ├── test_trivial.json

│ ├── train.json

│ ├── CLIP_tokenized_count.txt -> ../charades/annotations/CLIP_tokenized_count.txt

│ └── glove.pkl -> ../charades/annotations/glove.pkl

├── qvhighlights

│ ├── annotations

│ │ ├── CLIP_tokenized_count.txt

│ │ ├── highlight_test_release.jsonl

│ │ ├── highlight_train_release.jsonl

│ │ ├── highlight_val_object.jsonl

│ │ └── highlight_val_release.jsonl

│ ├── clip_image.hdf5

│ └── slowfast.hdf5

├── TACoS

│ ├── annotations

│ │ ├── CLIP_tokenized_count.txt

│ │ ├── GloVe_tokenized_count.txt

│ │ ├── test.json

│ │ ├── train.json

│ │ └── val.json

│ └── c3d.hdf5

All extracted features are converted to hdf5 files for better storage. You can use the provided Python script ./data/npy2hdf5.py to convert *.npy or *.npz files to an hdf5 file.
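Before training, it can help to confirm that the files from the folder tree above are in place. The following is a minimal sketch using only the standard library. The helper name `find_missing` and the particular subset of files listed (Charades-STA with VGG and GloVe features) are assumptions for illustration, not part of this repository.

```python
from pathlib import Path

# Relative paths copied from the folder tree above.
# This subset (Charades-STA with VGG + GloVe) is an assumption;
# adjust the list for the dataset and extractors you actually use.
CHARADES_VGG_GLOVE = [
    "charades/annotations/charades_sta_train.txt",
    "charades/annotations/charades_sta_test.txt",
    "charades/annotations/GloVe_tokenized_count.txt",
    "charades/annotations/glove.pkl",
    "charades/vgg.hdf5",
]


def find_missing(data_root, expected=CHARADES_VGG_GLOVE):
    """Return the expected relative paths that do not exist under data_root."""
    root = Path(data_root)
    return [rel for rel in expected if not (root / rel).exists()]


if __name__ == "__main__":
    missing = find_missing("./data")
    if missing:
        print("Missing files:")
        for rel in missing:
            print("  " + rel)
    else:
        print("All expected files found.")
```

Symbolic links such as those shown for Charades-CD and Charades-CG are followed by `Path.exists()`, so a broken link is reported as missing.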

The CLIP_tokenized_count.txt and GloVe_tokenized_count.txt files are built for masked language modeling in FW-MESM. They can be generated by running

python -m data.tokenized_count

- CLIP_tokenized_count.txt: column 1 is the word_id produced by the CLIP tokenizer, and column 2 is the number of times that word_id appears in the whole dataset.

- GloVe_tokenized_count.txt: column 1 is a word split from a sentence, column 2 is its tokenized id for GloVe, and column 3 is the number of times the word appears in the whole dataset.
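To make the three-column GloVe layout concrete, here is a minimal sketch of how such counts could be produced. It is not the repository's `data.tokenized_count` module: the function names, the lowercase whitespace tokenization, the toy vocabulary, and the space-separated row format are all assumptions for illustration.

```python
from collections import Counter


def count_tokens(sentences, wtoi):
    """Count how often each in-vocabulary word appears across all sentences.

    Tokenization is lowercase whitespace splitting, a stand-in for the real
    tokenizer; `wtoi` maps word -> integer id (hypothetical vocabulary).
    """
    counts = Counter()
    for sentence in sentences:
        for word in sentence.lower().split():
            if word in wtoi:
                counts[word] += 1
    return counts


def format_rows(counts, wtoi):
    """Format rows as 'word id count', most frequent first (assumed layout)."""
    return [f"{word} {wtoi[word]} {n}" for word, n in counts.most_common()]


if __name__ == "__main__":
    toy_wtoi = {"person": 0, "opens": 1, "door": 2, "the": 3}
    toy_queries = ["Person opens the door", "the person closes the door"]
    for row in format_rows(count_tokens(toy_queries, toy_wtoi), toy_wtoi):
        print(row)
```

Words outside the vocabulary ("closes" in the toy queries) are skipped rather than counted, which is one possible design choice; the actual script may handle them differently.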

We provide the merged hdf5 files of CLIP and SlowFast features here. However, the VGG and I3D features are too large for our network drive storage space. We followed QD-DETR to obtain the video features for all extractors; they describe in detail how to obtain the features, see this link.

glove.pkl records the necessary vocabulary for the dataset. Specifically, it contains the most common words for MLM, the wtoi dictionary, and the id2vec dictionary. We use the glove.pkl from CPL, which can also be built from the standard glove.6B.300d.
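As a rough illustration of building such a vocabulary from GloVe text vectors, the sketch below parses lines of the form `word v1 v2 ... vd` into a `wtoi` dictionary and an `id2vec` dictionary and round-trips them through `pickle`. The function name `build_vocab`, the `keep_words` filter, and the exact dictionary layout are assumptions; the real glove.pkl from CPL may be organized differently.

```python
import io
import pickle


def build_vocab(glove_lines, keep_words):
    """Build word->id and id->vector dictionaries from GloVe-format text lines.

    Each line is 'word v1 v2 ... vd'. Only words in `keep_words` are kept,
    and ids are assigned in order of first appearance.
    """
    wtoi, id2vec = {}, {}
    for line in glove_lines:
        parts = line.rstrip().split(" ")
        word, values = parts[0], parts[1:]
        if word in keep_words and word not in wtoi:
            idx = len(wtoi)
            wtoi[word] = idx
            id2vec[idx] = [float(v) for v in values]
    return {"wtoi": wtoi, "id2vec": id2vec}


if __name__ == "__main__":
    # Toy 3-dimensional stand-in for the 300-dimensional GloVe text file.
    toy = io.StringIO("the 0.1 0.2 0.3\ndoor 0.4 0.5 0.6\ncat 0.7 0.8 0.9\n")
    vocab = build_vocab(toy, keep_words={"the", "door"})
    blob = pickle.dumps(vocab)  # bytes that would be written to a .pkl file
    print(pickle.loads(blob)["wtoi"])
```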

As in QD-DETR, we use the official feature files for the QVHighlights dataset from Moment-DETR, which can be downloaded here, and merge them into clip_image.hdf5 and slowfast.hdf5.

We follow VSLNet to get the C3D features for TACoS. Specifically, we run prepare/extract_tacos_org.py with sample_rate set to 128 to extract the pretrained C3D visual features from TALL, and then convert them to an hdf5 file. We provide the converted file here.

| Dataset | Extractors | Download Link |
|---|---|---|
| Charades-STA | VGG, GloVe | OneDrive |
| Charades-STA | C+SF, C | OneDrive |
| Charades-CG | C+SF, C | OneDrive |
| TACoS | C3D, GloVe | OneDrive |
| QVHighlights | C+SF, C | OneDrive |

You can run train.py with command-line arguments:

CUDA_VISIBLE_DEVICES=0 python train.py {--args}

Or run it with a config file as input:

CUDA_VISIBLE_DEVICES=0 python train.py --config_file ./config/charades/VGG_GloVe.json

You can run eval.py with command-line arguments:

CUDA_VISIBLE_DEVICES=0 python eval.py {--args}

Or run it with a config file as input:

CUDA_VISIBLE_DEVICES=0 python eval.py --config_file ./config/charades/VGG_GloVe_eval.json

If you find this repository useful, please use the following entry for citation.

@inproceedings{liu2024towards,

title={Towards Balanced Alignment: Modal-Enhanced Semantic Modeling for Video Moment Retrieval},

author={Liu, Zhihang and Li, Jun and Xie, Hongtao and Li, Pandeng and Ge, Jiannan and Liu, Sun-Ao and Jin, Guoqing},

booktitle={Proceedings of the AAAI Conference on Artificial Intelligence},

volume={38},

number={4},

pages={3855--3863},

year={2024}

}

This implementation is based on these repositories: