CBAS is a suite of tools for phenotyping complex animal behaviors. It is designed to automate classification of behaviors from active live streams of video data and provide a simple interface for visualizing and analyzing the results.

Written and maintained by Logan Perry, a post-bac in the Jones Lab at Texas A&M University.

CBAS was designed with circadian behavior monitoring in mind. Here's a visualization of what CBAS can do! Note that the behaviors are not set in stone, and can be easily changed to fit the user's needs.

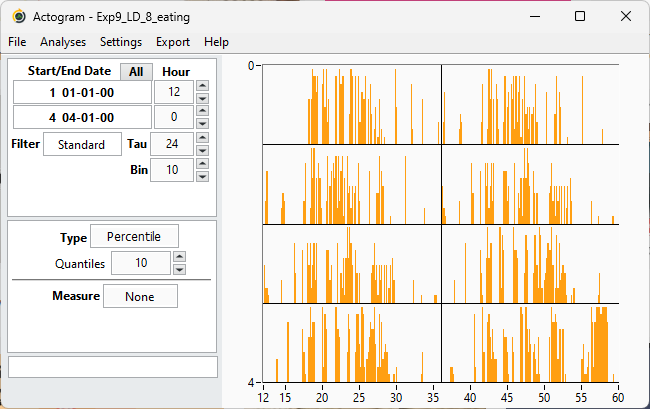

(Left) Real-time video recording of an individual mouse over two weeks in a 12h:12h light:dark cycle and in constant darkness. (Right) real-time actogram generation of nine distinct home cage behaviors.

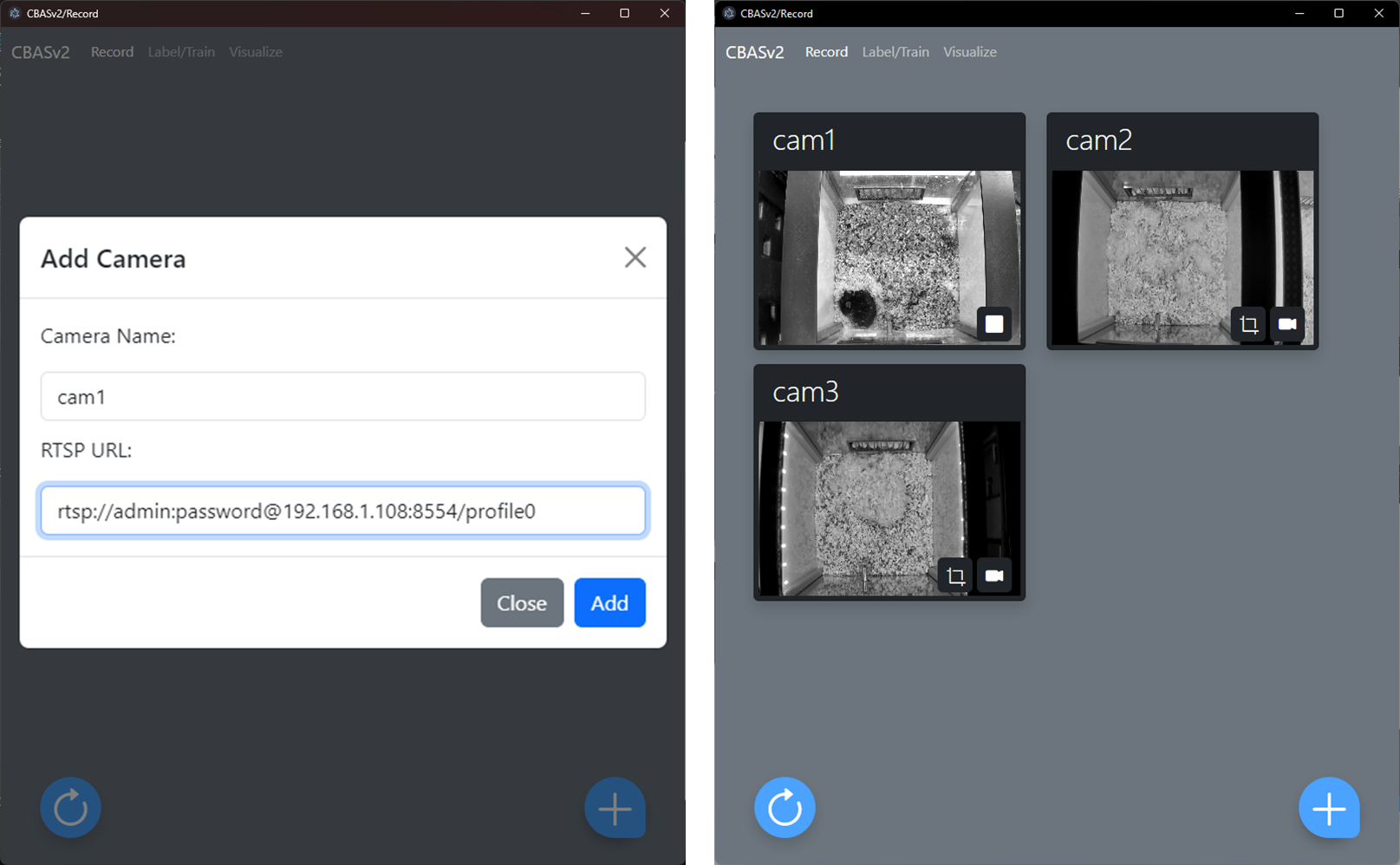

CBAS is a user-friendly, GUI-enabled Python package that allows for the automated acquisition, classification, and visualization of behaviors over time. It consists of three modules:

The acquisition module is capable of batch processing streaming video data from any number of network-configured real-time streaming protocol (RTSP) IP cameras.

CBAS performs real-time classification using a frozen feature extractor "backbone," the state-of-the-art DINOv2 vision transformer. DINOv2 has been pretrained using a self-supervised approach that is highly generalizable and can be used for a wide variety of classification tasks. We have added a joint long short-term memory and linear layer classification model 'head' onto this backbone to classify our behaviors of interest (see below) but, importantly, users can quickly and easily train their own model heads onto the static DINOv2 visual backbone to accommodate their specific behavior classification needs.

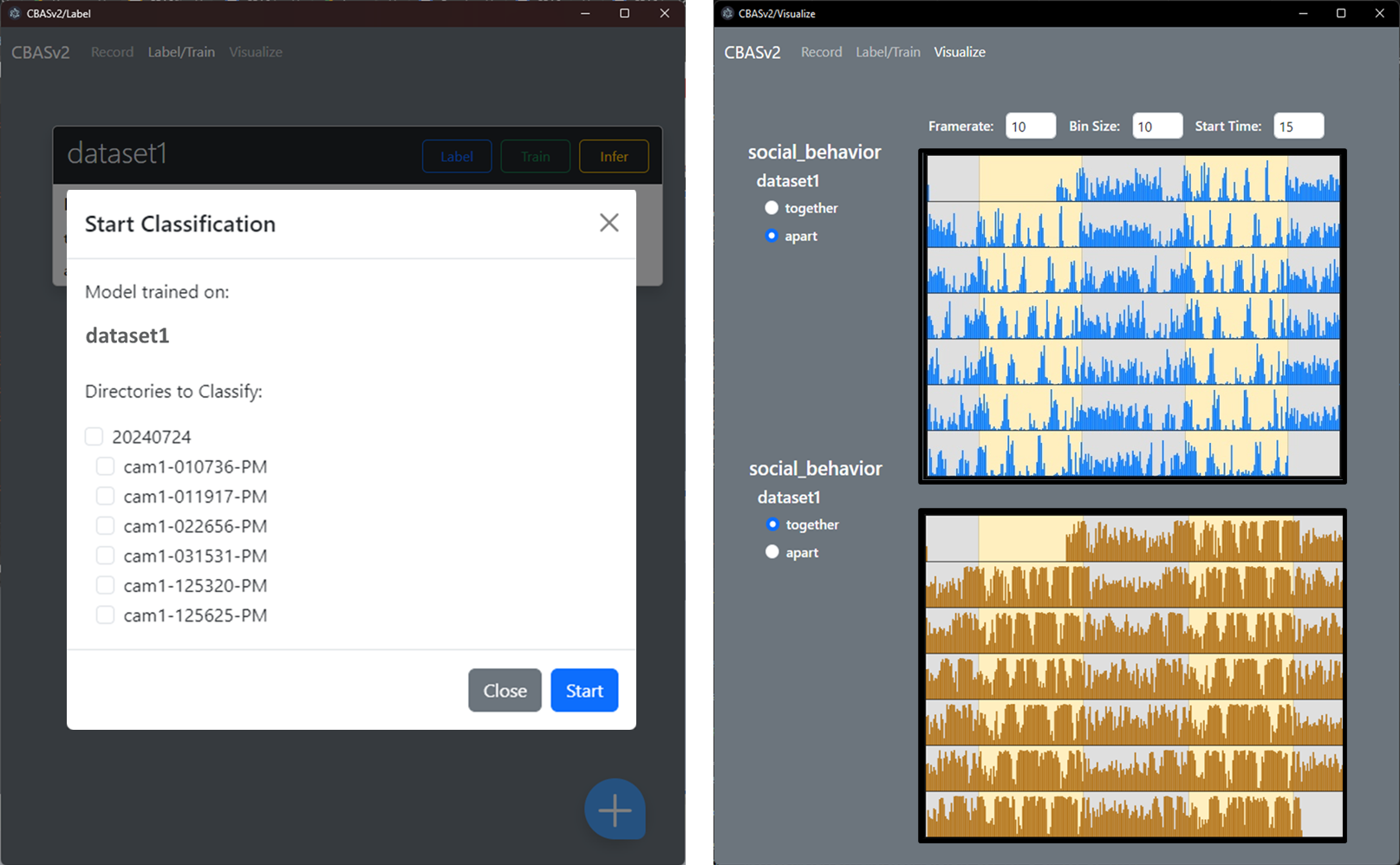

The classification and visualization module enables real-time inference on streaming video and displays acquired behavior time series data in real time as actograms that can be readily exported for offline analysis in a file format compatible with ClockLab Analysis, a widely-used circadian analysis software.

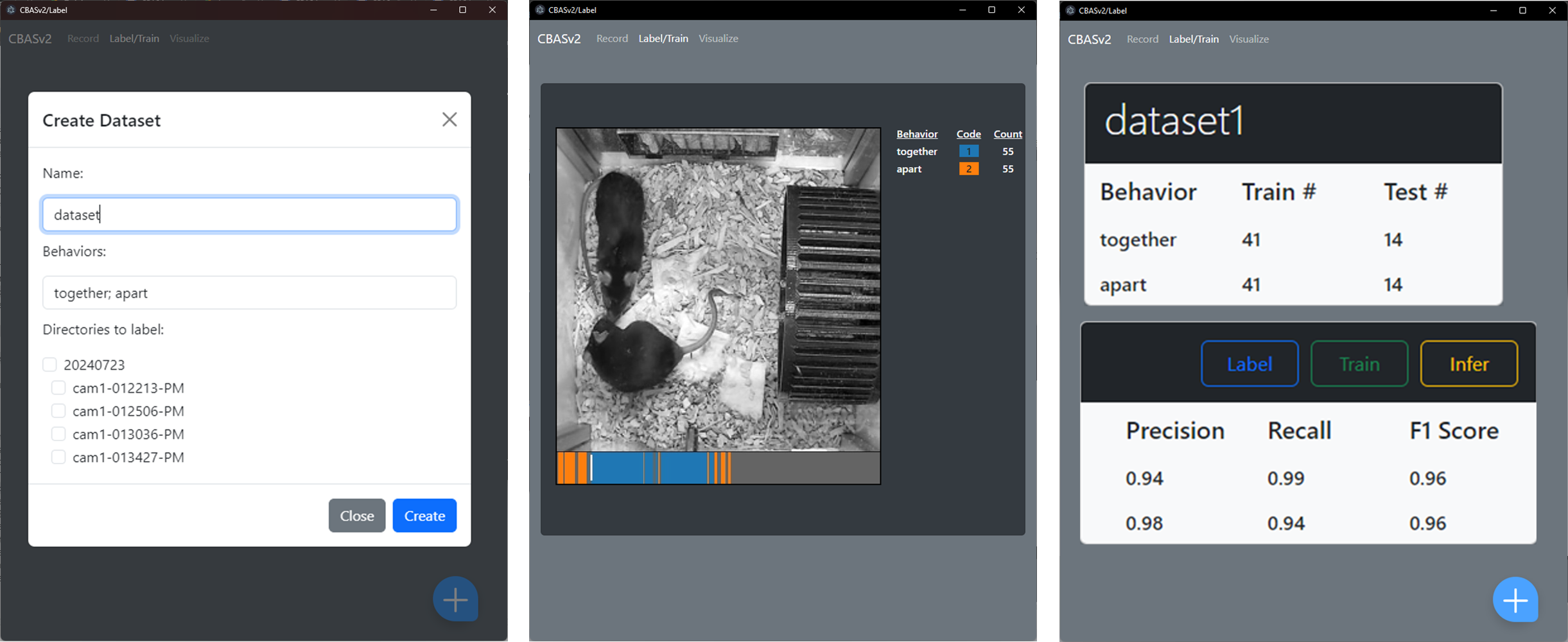

The training module allows the user to create balanced training sets of behaviors of interest, train joint LSTM and linear layer model heads, and validate model performance on a naive test set of behavior instances. Because of the frozen DINOv2 feature extractor backbone, training a custom model head is simple and relatively fast.

We have tested the installation instructions to be as straightforward and user-friendly as possible, even for users with limited programming experience.

Click here for step-by-step instructions on how to install CBAS from source (for Windows users.)

CBAS will work with any real-time streaming protocol (RTSP) IP camera, but we have provided instructions on how to install power-over-ethernet (PoE) cameras and network switches to allow for the parallel, indefinite recording of up to 24 cameras per network switch.

CBAS comes packaged with the Jones Lab's DINOv2+ joint long short-term memory (LSTM) and linear layer model head to allow users to immediately begin classification of our nine behaviors of interest (eating, drinking, rearing, climbing, grooming, exploring, nesting, digging, and resting). If you want to record these behaviors, you will need to first replicate our recording setup using these instructions and parts list.

Since the DINOv2 visual backbone remains static in our training model, users can quickly and easily adapt CBAS for various classification tasks, animal species, and video environments. The training module enables users to create balanced training sets for specific behaviors, train joint LSTM and linear layer models, and validate model performance on a naive test set of behavior instances.

To learn how to create a dataset and train a classification model, click here.

While not required, we strongly recommend using a NVIDIA GPU with high VRAM to allow for GPU optimization with CUDA.

Our PC specs are:

- CPU: 12-core AMD Ryzen 9 5900X (more recent installations use a 7900X)

- RAM: 32 GB DDR5

- SSD: 1TB+ NVMe SSD

- GPU: NVIDIA GeForce RTX 3090 24GB (more recent installations use a 4090)