- Introduction

- Identifying Conversations

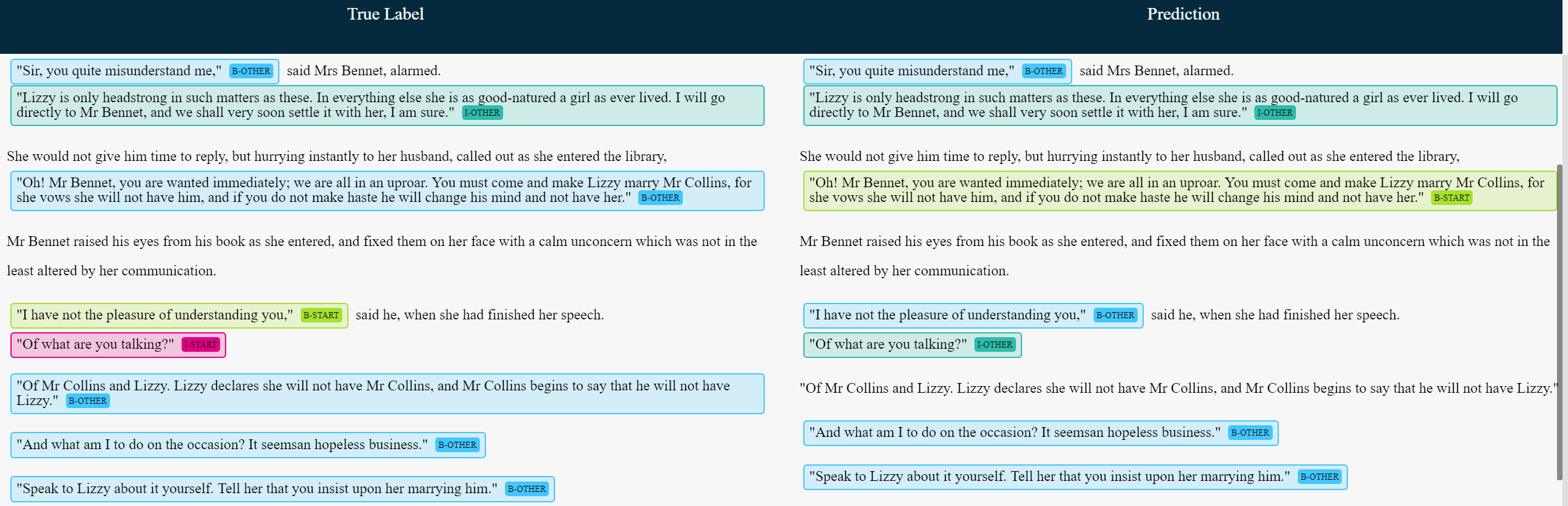

- Results

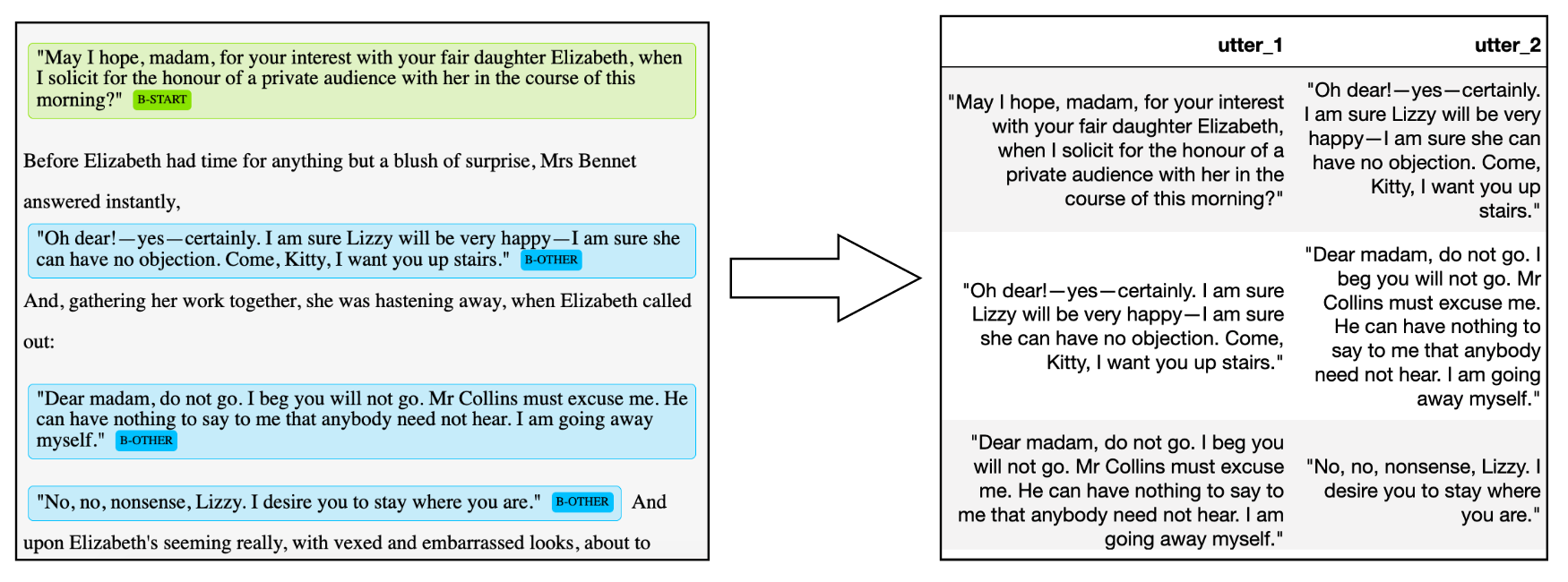

- Constructing Utterance Pairs

- Conclusion

- Quick Start

- Resources

- Contact

'Begin at the beginning,' the King said gravely, 'and go on till you come to the end: then stop.'

- King of Hearts, Alice in Wonderland (1865)

The objective of this project is to compare methods for mining conversations from narrative fiction.

Firstly, dialogue systems need natural language data. A lot of it, and the richer the better. Exciting advances in dialogue systems such as Google Duplex and Microsoft XiaoIce have been powered by deep learning models trained on rich and diverse types of conversations. For instance, XiaoIce can switch between 230 conversational modes or 'skills', ranging from comforting and storytelling to recommending movies, after being trained on examples of conversations from each category.

Such data sources are hard to come by. Existing methods include mining Reddit and Twitter for conversational pairs and sequences. These methods face limitations because of the linguistic and content differences between online communication and regular human conversation, not to mention the negativity bias of internet content, seen in the infamous Microsoft "Tay" bot. Some teams have turned to collecting human-generated conversational data through crowd-sourcing tools such as Amazon Mechanical Turk. Unfortunately, these methods are expensive, slow, and do not scale well.

There is another way.

A treasure trove of varied and life-like conversational data lies within the pages of narrative fiction. Conversation in narrative fiction is rich and varied in ways that existing corpora are not. Research has found that many of the linguistic and paralinguistic features of dialogue in fiction are similar to natural spoken language. Fictional conversations also involve different actors with different intentions and relationships to one another, which could potentially allow a data-driven dialogue system to learn to personalize itself to different users by making use of different interaction patterns. Additionally, real-life dialogue is a role-playing 'language game' of sorts between turn-taking strategic agents, and we would like data that can capture this.

This project is also valuable for digital humanities researchers who want to conduct large-scale studies of dialogue in fiction.

Identifying conversations in narrative fiction is tricky. Where does one conversation end and another begin? Stylistic and lexical features vary greatly across literary works and time periods. For instance, in some works, speaker attribution is clear, e.g. "The car is red," she said. In others, it is not, e.g. "The car is red". "Indeed it is".

Simply picking out consecutive words enclosed in quotation marks "…", "…" will not work, because some conversations are interspersed with additional narration.

Finally, and most importantly, a lot of the information about conversation in fiction is contained not in dialogue text itself, but in the exposition. Narrative exposition may add context to the ongoing conversation. It may also signal a change in conversational or situational context and thus the beginning of a new narrative sequence. Thus, any method that looks purely at the conversational utterances is likely to fall short.

Our data consists of the full text of Pride and Prejudice by Jane Austen. We chose this novel because it has several appealing qualities.

Firstly, it is a novel that is particularly rich in the relationship between dialogue and plot. A leading Austen scholar characterises her novels as "Conversational Machines", in which words are traded in a "complex role-playing game" (Morini 2009).

Secondly, it comes from a period in the history of the English language novel in which authors attempted to recreate dialogue as realistically as possible instead of the more abstract, experimental means used in later periods.

Thirdly, it is easily and legally accessible as HTML from the open-source website Project Gutenberg, as its copyright has expired. This also means that our entire development pipeline will be directly applicable to the other ~58,000 texts hosted on Project Gutenberg.

- Utterance: Text that begins with an opening quotation mark and ends with a closing quotation mark. It must be spoken by 1 speaker.
  - E.g. “You used us abominably ill,” answered Mrs. Hurst, “running away without telling us that you were coming out.” contains 2 utterances.

- Response: An utterance that is a response to a previous utterance.

- Speaker: The person or character who voiced the utterance.

- Narrative/Exposition: Text that is considered non-utterance.

- Conversation/Dialogue: A series of utterances that are made in response to each other.

We input HTML files containing the complete text of narrative fiction hosted on Project Gutenberg.

Then, a Python parser extracts the text within <p> and <h2> tags and outputs a CSV file with each paragraph as a row. Utterances and non-utterances are also tagged as such using a collection of simple rules.
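The parsing step described above could be sketched roughly as follows. This is a minimal illustration using only the Python standard library, not the project's actual `parse.py`: the class `ParagraphExtractor`, the functions `is_utterance` and `html_to_csv`, the column names, and the "contains a quotation mark" rule are all assumptions made for the example.

```python
import csv
import io
from html.parser import HTMLParser


class ParagraphExtractor(HTMLParser):
    """Collects the text found inside <p> and <h2> elements (hypothetical helper)."""

    def __init__(self):
        super().__init__(convert_charrefs=True)
        self.rows = []      # (tag, text) tuples in document order
        self._tag = None    # tag currently being captured, if any
        self._chunks = []   # text fragments of the current element

    def handle_starttag(self, tag, attrs):
        if tag in ("p", "h2") and self._tag is None:
            self._tag = tag
            self._chunks = []

    def handle_data(self, data):
        if self._tag is not None:
            self._chunks.append(data)

    def handle_endtag(self, tag):
        if tag == self._tag:
            # Collapse line breaks and repeated whitespace into single spaces.
            text = " ".join("".join(self._chunks).split())
            if text:
                self.rows.append((tag, text))
            self._tag = None


def is_utterance(text):
    """Assumed rule: a paragraph is an utterance if it contains a quotation mark."""
    return any(quote in text for quote in ('"', "\u201c"))


def html_to_csv(html):
    """Return CSV text with one row per extracted paragraph or heading."""
    parser = ParagraphExtractor()
    parser.feed(html)
    parser.close()
    out = io.StringIO()
    writer = csv.writer(out)
    writer.writerow(["tag", "is_utterance", "text"])
    for tag, text in parser.rows:
        writer.writerow([tag, int(tag == "p" and is_utterance(text)), text])
    return out.getvalue()
```

Keeping the `<h2>` rows in the output preserves chapter boundaries, which a later step can use to detect the first utterance of a chapter.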

Inspired by the sequence-labelling scheme typically used in Named-Entity-Recognition, we use the following schema to manually assign labels to our text paragraphs:

For each utterance, we assign

- B-START to the first utterance in the conversation
- I-START if the utterance following B-START is by the same speaker
- B-OTHER if a speaker other than the one making the preceding utterance enters the conversation
- I-OTHER if the utterance following one assigned a B-OTHER tag belongs to the very same speaker

Note that our approach does not track the identities of speakers, only changes of speaker.

If, on the other hand, a paragraph is not an utterance and is instead exposition, we assign:

O, which stands for "Outside"

For example, the first few paragraphs of our text will be tagged as such:

It is a truth universally acknowledged, that a single man in possession of a good fortune, must be in want of a wife. {O}

However little known the feelings or views of such a man may be on his first entering a neighbourhood, this truth is so well fixed in the minds of the surrounding families, that he is considered the rightful property of some one or other of their daughters. {O}

“My dear Mr. Bennet,” {B-START}

said his lady to him one day, {O}

“have you heard that Netherfield Park is let at last?” {I-START}

Mr. Bennet replied that he had not. {O}

“But it is,” returned she; “for Mrs. Long has just been here, and she told me all about it.” {B-OTHER}

“Do you not want to know who has taken it?” cried his wife impatiently. {I-OTHER}

It is clear that there isn't a simple set of rules one can use to extract conversations. We propose that solving this task would require a model that can detect very subtle and complex correlations between the narrative text and dialogue. It would also need to readily identify sequences of text.

Thus, we decided to model this problem as an NER-inspired sequence-labelling task. We trained several sequence-labelling models implemented in TensorFlow 2.0 and compared them against a heuristic.

We evaluate our models using two types of metrics.

- Direct comparison of sequence labels such as B-START.

- Comparison of utterance pairs built from the models' predictions.

We designed a heuristic to identify conversations in an unsupervised way, using the following assumptions:

- All utterances in every paragraph are from the same speaker (this one-speaker-per-paragraph property is rarely violated in novels).

- We mark an utterance as the start of a new conversation based on two conditions:

  - There are 3 sentences of narrative text between the previous utterance and the candidate utterance.

  - The candidate utterance is the first utterance of a chapter.
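The two conditions above could be sketched as follows. This is an illustration rather than the code in `heuristic_rb.ipynb`: the row format, the function names, and the naive sentence counter are hypothetical. It also assumes that either condition alone is enough to start a new conversation and that "3 sentences" means at least 3, neither of which the description states explicitly.

```python
import re


def count_sentences(text):
    """Naive counter: runs of '.', '!' or '?' followed by whitespace or end of text.

    Abbreviations such as 'Mr.' are miscounted; a real sentence splitter
    would be needed in practice.
    """
    return len(re.findall(r"[.!?]+(?=\s|$)", text))


def mark_conversation_starts(rows, min_narrative_sentences=3):
    """Flag the utterances that start a new conversation.

    rows: list of (kind, text) with kind in {"chapter", "narrative", "utterance"}.
    Returns a list of booleans aligned with rows.
    """
    starts = []
    narrative_since_utterance = 0
    first_in_chapter = True
    for kind, text in rows:
        is_start = False
        if kind == "chapter":
            first_in_chapter = True
        elif kind == "narrative":
            narrative_since_utterance += count_sentences(text)
        elif kind == "utterance":
            is_start = (
                first_in_chapter
                or narrative_since_utterance >= min_narrative_sentences
            )
            first_in_chapter = False
            narrative_since_utterance = 0
        starts.append(is_start)
    return starts
```

The threshold is exposed as a parameter so that it can be tuned for texts with denser or sparser narration.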

We hope to design a usable heuristic that can reliably parse any work of fiction from Project Gutenberg and identify its conversations. To evaluate our attempt, we review the reliability and accuracy of this heuristic in identifying conversations and constructing utterance pairs.

Our main approach uses a BERT embedding + BiLSTM architecture to perform sequence labelling at the sentence level. The model takes 4 paragraphs as input and labels the conversation entities (as listed above) at the sentence level.

BERT, a recent state-of-the-art deep learning architecture for generating language representations, trains a language model by applying a bidirectional Transformer to the training data. It provides some of the best pre-trained embeddings available across many NLP tasks. We used pre-trained BERT embeddings with an averaging operator to obtain sentence-level embeddings for the extracted paragraphs.

Besides experimenting with different input sizes, we also explored LDA, TF-IDF, and doc2vec representations; BERT embeddings outperformed all of them on the NER-style labelling task.
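To illustrate the averaging step, here is a minimal sketch of mean pooling, assuming the average is taken over the token vectors of one sentence. The function name `mean_pool` is hypothetical, and the token vectors are plain lists of floats standing in for real BERT outputs.

```python
def mean_pool(token_vectors):
    """Average equal-length token vectors into a single sentence vector."""
    if not token_vectors:
        raise ValueError("need at least one token vector")
    dim = len(token_vectors[0])
    if any(len(vector) != dim for vector in token_vectors):
        raise ValueError("all token vectors must have the same length")
    count = len(token_vectors)
    # Average each dimension independently across all tokens.
    return [sum(vector[i] for vector in token_vectors) / count for i in range(dim)]
```

For example, `mean_pool([[1.0, 2.0], [3.0, 4.0]])` gives `[2.0, 3.0]`. The resulting vector has the same dimensionality as a single token embedding regardless of sentence length.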

| Model | Recall (B-START) | Precision (Utterance-Pair) | Directory |
|---|---|---|---|
| Convo miner heuristic | 0.50 | 0.89 | fiction_convo_miner/heuristic/ |
| BERT-BiLSTM-NER | 0.70 | 0.93 | fiction_convo_miner/seq_labeling_ner/ |

The table above compares the results of the 2 types of solutions we built. The sequence-labelling model with the BERT + BiLSTM architecture is the best-performing one in absolute terms on both metrics.

The recall of B-START is the proportion of true B-START labels, i.e. conversation starters, that the algorithm predicts correctly. The heuristic correctly identified 50% of all true conversation starters, while our sequence-labelling model correctly identified 70% of them.

The precision of the utterance pairs is the proportion of predicted pairs that are relevant. For the heuristic, 89% of the predicted pairs are meaningful utterance pairs, and the remaining 11% are falsely paired utterances. The precision of the sequence-labelling model sits at 93%, beating the precision of the heuristic.
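The two metrics can be computed along these lines. This is a sketch under stated assumptions, not the project's evaluation code: the function names are hypothetical, labels are assumed to be aligned lists of tag strings, and utterance pairs are assumed to be compared by exact match.

```python
def label_recall(gold, pred, label="B-START"):
    """Share of gold positions carrying `label` that are also predicted as `label`."""
    if len(gold) != len(pred):
        raise ValueError("gold and pred must be aligned")
    relevant = [i for i, tag in enumerate(gold) if tag == label]
    if not relevant:
        return 0.0
    hits = sum(1 for i in relevant if pred[i] == label)
    return hits / len(relevant)


def pair_precision(gold_pairs, pred_pairs):
    """Share of distinct predicted (context, response) pairs found in the gold pairs."""
    predicted = set(pred_pairs)
    if not predicted:
        return 0.0
    return len(predicted & set(gold_pairs)) / len(predicted)
```

Recall is reported for conversation starters because a missed B-START merges two conversations, while precision is reported for pairs because a falsely paired utterance would become noisy training data.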

In conclusion, our theoretical hunches were validated by the results. Our sequence-labelling model was far better at mining conversations from text. We suggest that it could do so because it can take into account the sequential nature of conversations in fiction as well as the highly complex correlations between narration and dialogue in the text.

While the sequence-labelling approach is better than a well-thought-out heuristic, a precision of 93% also means that 7% of the generated pairs are false. We must continue to question the implications of using conversations from literary fiction. It may be a treasure trove, but our experiment was conveniently limited to one novel. We hope to address the bigger question of applying transfer learning across different types of fiction.

Utterance pairs, also called context-response pairs or dialogue pairs, are often used as training data when building dialogue systems. With this in mind, we proceed to construct utterance pairs based on the conversations identified by our models.

The box on the left shows a sample of our predictions, and on the right shows a sample of generated utterance pairs.

We construct utterance pairs by taking a B-START utterance and pairing it with the next utterance in the sequence, ignoring all O paragraphs. We continue in the same way for each following utterance and stop the pairing at the utterance just before the next B-START.

Identifying conversations from narrative fiction seemed like a difficult problem. However, our model was surprisingly successful: it identifies 70% of the beginnings of conversations.

Our experiment provides evidence that sequence-labelling performed by the latest deep-learning methods outperforms heuristics for extracting conversations from narrative fiction. The combination of the bi-LSTM and Transformer architectures allows the model to capture both the highly complex syntactic and morphological aspects of speech and their sequential nature.
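The pairing rule can be sketched as below. The function name and the `(text, tag)` input format are hypothetical. The sketch follows the description literally, so a same-speaker continuation (I-START or I-OTHER) is paired with the utterance before it; whether such continuations should be merged instead is not specified.

```python
def build_utterance_pairs(tagged):
    """Build (context, response) pairs from a tagged sequence.

    tagged: list of (text, tag), with tag in
    {"O", "B-START", "I-START", "B-OTHER", "I-OTHER"}.
    """
    pairs = []
    previous = None
    for text, tag in tagged:
        if tag == "O":
            continue  # narration never takes part in a pair
        if tag == "B-START":
            previous = text  # new conversation: do not pair across the boundary
            continue
        if previous is not None:
            pairs.append((previous, text))
        previous = text
    return pairs
```

Utterances that appear before the first B-START are skipped, since they do not belong to any identified conversation.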

There remain challenges and limitations to our approach. Our data, Pride and Prejudice, presents dialogue in a rather straightforward and regular fashion, with utterances enclosed in quotation marks and separated out into distinct paragraphs. To create a conversation miner that generalizes properly to more types of fiction, we will have to train the model on more diverse types of text.

In sum, it is an exciting time to be in dialogue systems. We can now engineer systems to be empathetic (XiaoIce), speak with human-like expressive range (Google Duplex), communicate with dementia patients (Endurance) and tell stories (Talk to Judy). At the core of these advances are deep learning architectures trained on large and highly curated samples of conversational data. We hope that our model will help support further advances by introducing a scalable means of extracting more of such data from literary fiction.

- Write tests for the parser and heuristic scripts.

- Label more examples of different types of narrative fiction and train the model on them for better generalization results.

- Evaluate possible ways to apply DL approaches across different types of fiction in order to generate conversations that contain specific properties (theme, mood, personality etc.)

- Apply our model to more of the ~58,000 texts in Project Gutenberg to generate large-scale conversational corpora from fiction. We will then open-source the data for future researchers and engineers.

pip install -r requirements.txt

Pride and Prejudice Labeled data

We built a simple visualization tool for displaying our model predictions and heuristic predictions. Load it up!

python seq_label_visualizer/app.py

First parse an HTML book by running ./fiction_convo_miner/parse.py. Then run ./fiction_convo_miner/heuristic/heuristic_rb.ipynb

Feel free to re-run our notebook in Google Colab, or use it as starter code!

Run ./fiction_convo_miner/sequence_labeling_ner/fiction_bert_lstm_train_v4_4bs_final.ipynb


This is a non-exhaustive list of the main sources of information we used in this project.

GloVe: Global Vectors for Word Representation

A large annotated corpus for learning natural language inference

A Survey of Available Corpora for Building Data-Driven Dialogue Systems

The Design and Implementation of XiaoIce, an Empathetic Social Chatbot

The Ubuntu Dialogue Corpus: A Large Dataset for Research in Unstructured Multi-Turn Dialogue Systems

Personalizing Dialogue Agents: I have a dog, do you have pets too?

Training Millions of Personalized Dialogue Agents

I Know the Feeling: Learning to Converse with Empathy

Creating an Emotion Responsive Dialogue System

Learning Personas from Dialogue with Attentive Memory Networks