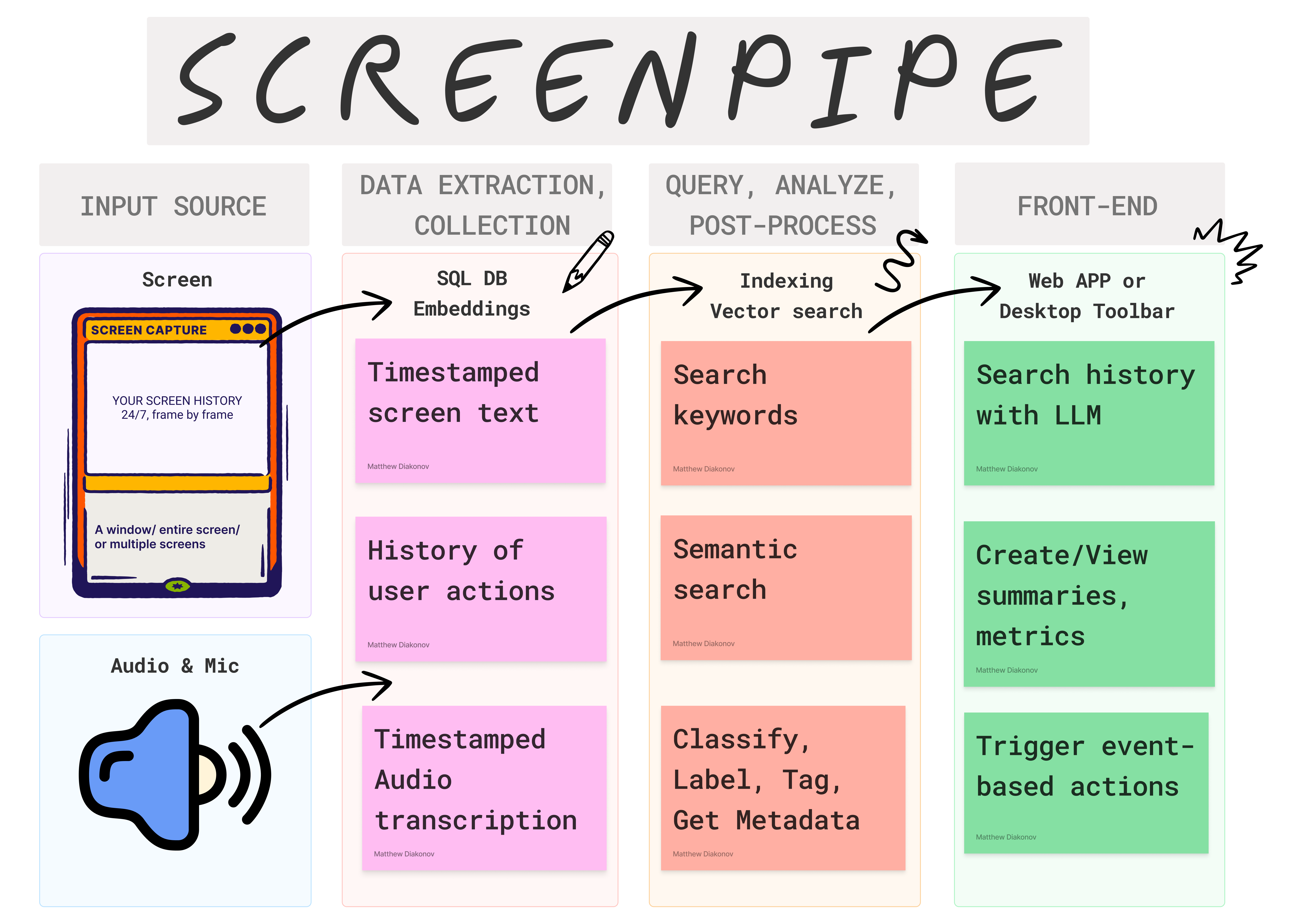

Library to build personalized AI powered by what you've seen, said, or heard. Works with Ollama. Alternative to Rewind.ai. Open. Secure. You own your data. Rust.

We are shipping daily. Make suggestions, post bugs, and give feedback.

Building a reliable stream of audio and screenshot data, where a user simply clicks a button and the script runs in the background 24/7, collecting and extracting data from screen and audio input/output, can be frustrating.

There are numerous use cases that can be built on top of this layer. To simplify life for other developers, we decided to solve this non-trivial problem. It's still in its early stages, but it works end-to-end. We're working on this full-time and would love to hear your feedback and suggestions.

Struggling to get it running? I'll install it with you in a 15-minute call.

BACKEND

MacOS

Option I: Library

- Navigate to the folder where you want the data to be stored

- Install dependencies:

brew install pkg-config

brew install ffmpeg

brew install jq

- Install the library:

brew tap louis030195/screen-pipe https://github.com/louis030195/screen-pipe.git

brew install screenpipe

- Run it:

screenpipe

or, if you don't want audio to be recorded:

screenpipe --disable-audio

If you want to save OCR data to text files in the text_json folder at the root of your project (good for testing):

screenpipe --save-text-files

If you want to run screenpipe in debug mode to show more logs in the terminal:

screenpipe --debug

You can combine multiple flags if needed.
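If you start screenpipe from a script, the flag combinations can be assembled programmatically. Below is a minimal sketch in Python; `build_screenpipe_command` and `start_screenpipe` are hypothetical helper names, not part of screenpipe. It uses only the three flags documented above and assumes the `screenpipe` binary is on your PATH (or that you pass the path to a source build).

```python
import subprocess
from typing import Any, List


def build_screenpipe_command(
    binary: str = "screenpipe",
    disable_audio: bool = False,
    save_text_files: bool = False,
    debug: bool = False,
) -> List[str]:
    """Build an argv list combining the CLI flags documented above (hypothetical helper)."""
    cmd = [binary]
    if disable_audio:
        cmd.append("--disable-audio")  # don't record audio
    if save_text_files:
        cmd.append("--save-text-files")  # write OCR text to the text_json folder
    if debug:
        cmd.append("--debug")  # more verbose logs in the terminal
    return cmd


def start_screenpipe(**kwargs: Any) -> subprocess.Popen:
    """Launch screenpipe as a background process; the caller must stop it later."""
    return subprocess.Popen(build_screenpipe_command(**kwargs))


if __name__ == "__main__":
    # Only prints the command; call start_screenpipe(...) to actually launch it.
    print(build_screenpipe_command(disable_audio=True, debug=True))
```

Passing a list (rather than a single shell string) to `subprocess.Popen` avoids shell quoting issues; stop the process later with `proc.terminate()`.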

Option II: Install from the source

- Install dependencies:

brew install pkg-config

brew install ffmpeg

brew install jq

- Install Rust.

- Clone the repo:

git clone https://github.com/louis030195/screen-pipe

# This runs a local SQLite DB + an API + screenshot, OCR, mic, STT, mp4 encoding

cd screen-pipe # enter cloned repo

cargo build --release --features metal

Sign the executable to keep macOS from killing the process when it runs for a long time:

codesign --sign - --force --preserve-metadata=entitlements,requirements,flags,runtime ./target/release/screenpipe

Then run it:

./target/release/screenpipe # add "--disable-audio" if you don't want audio to be recorded

# "--save-text-files" if you want to save OCR data to text files in the text_json folder at the root of your project (good for testing)

# "--debug" if you want to run screenpipe in debug mode to show more logs in the terminal

Windows

- Install dependencies:

# Install ffmpeg (you may need to download and add it to your PATH manually)

# Visit https://www.ffmpeg.org/download.html for installation instructions

# Install Chocolatey from https://chocolatey.org/install

# Then install pkg config

choco install pkgconfiglite

- Install Rust.

- Clone the repo:

git clone https://github.com/louis030195/screen-pipe

cd screen-pipe

- Run the API:

# This runs a local SQLite DB + an API + screenshot, ocr, mic, stt, mp4 encoding

cargo build --release --features cuda # remove "--features cuda" if you do not have an NVIDIA GPU

# then run it

./target/release/screenpipe

Linux

- Install dependencies:

sudo apt-get update

sudo apt-get install -y libavformat-dev libavfilter-dev libavdevice-dev ffmpeg libasound2-dev tesseract-ocr libtesseract-dev

- Install Rust.

- Clone the repo:

git clone https://github.com/louis030195/screen-pipe

cd screen-pipe

- Run the API:

cargo build --release --features cuda # remove "--features cuda" if you do not have an NVIDIA GPU

# then run it

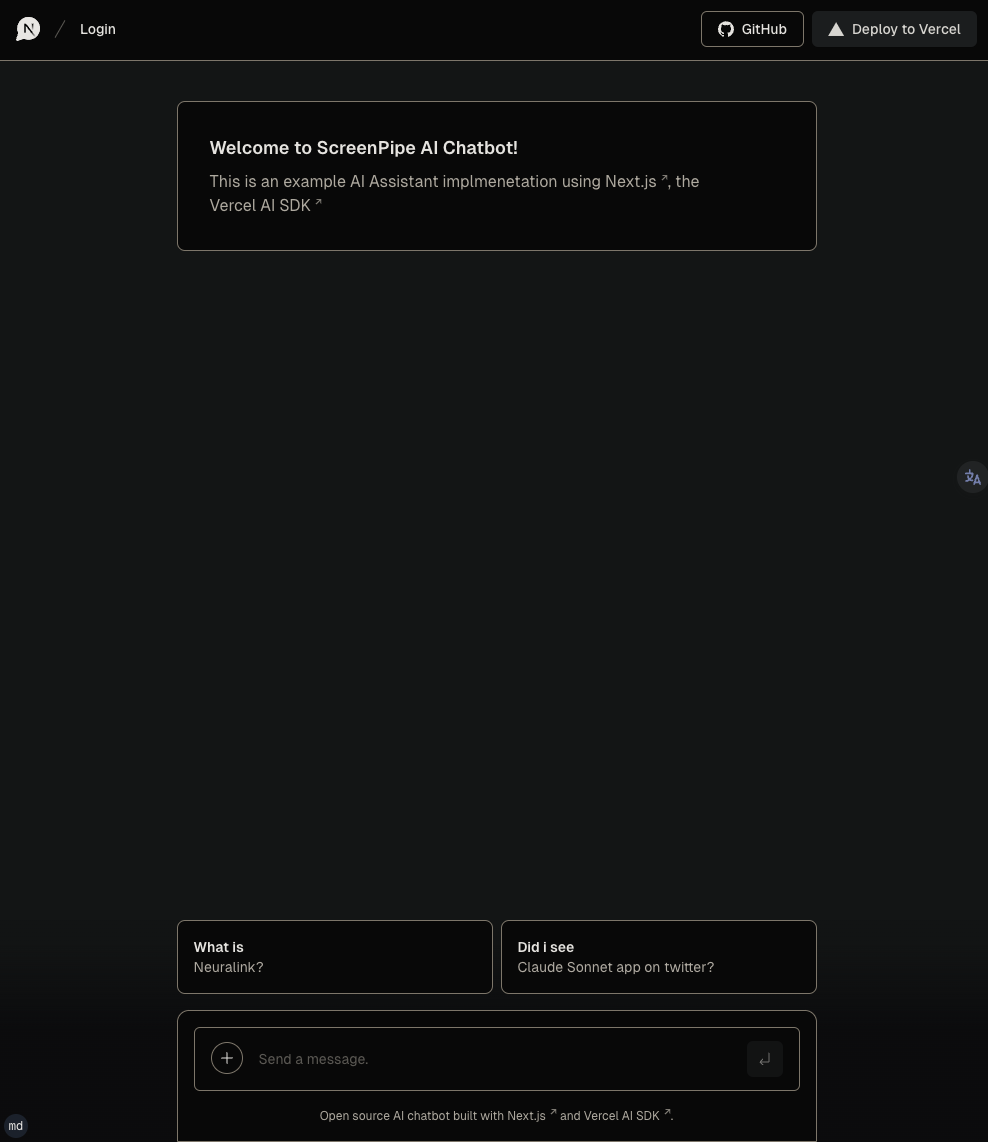

./target/release/screenpipe

FRONTEND

git clone https://github.com/louis030195/screen-pipe

Navigate to the app directory:

cd screen-pipe/examples/ts/vercel-ai-chatbot

Set up your OPENAI_API_KEY in .env:

echo "OPENAI_API_KEY=XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX" > .env

Install dependencies and run the local web server:

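npm install

npm run dev

Check which tables you have in the local database (run these commands from the folder where you started screenpipe, which contains its data folder):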

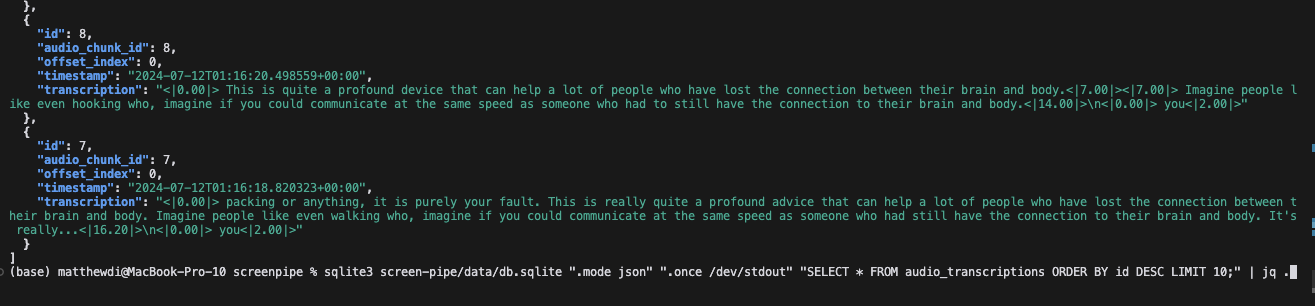

sqlite3 data/db.sqlite ".tables"

Print a sample row from the audio_transcriptions table:

sqlite3 data/db.sqlite ".mode json" ".once /dev/stdout" "SELECT * FROM audio_transcriptions ORDER BY id DESC LIMIT 1;" | jq .

Print a sample frame's OCR text from the ocr_text table:

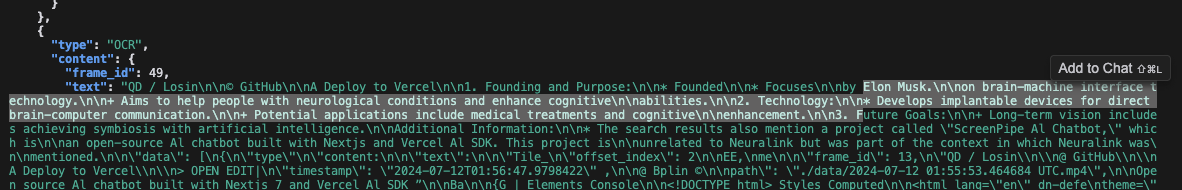

sqlite3 data/db.sqlite ".mode json" ".once /dev/stdout" "SELECT * FROM ocr_text ORDER BY frame_id DESC LIMIT 1;" | jq -r '.[0].text'

Play a sample screen recording from the data folder (file names are timestamped, so yours will differ):

ffplay "data/2024-07-12_01-14-14.mp4"

Play a sample audio recording from the data folder:

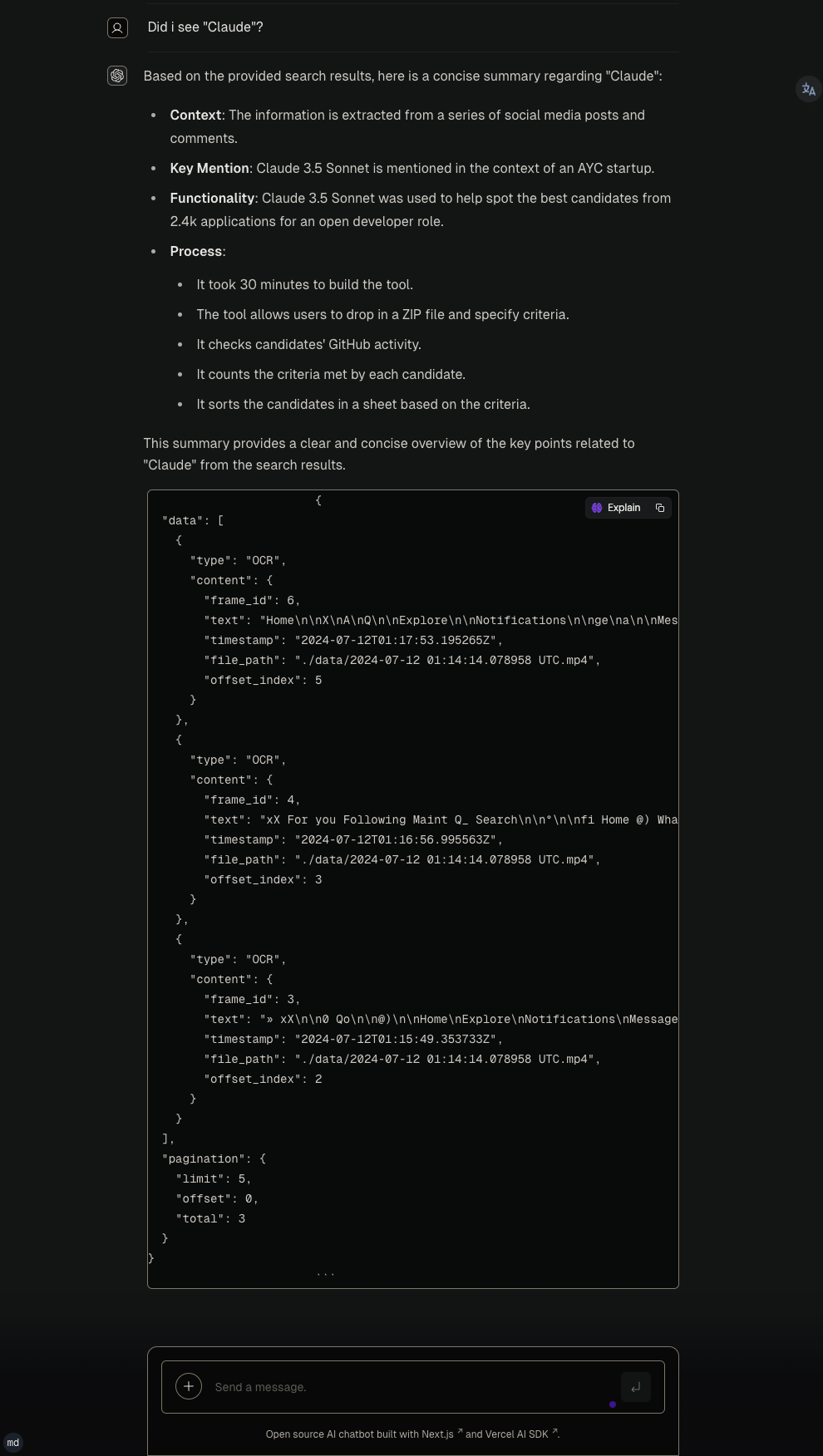

ffplay "data/Display 1 (output)_2024-07-12_01-14-11.mp4"

Examples of querying the API:

- Basic search query

curl "http://localhost:3030/search?q=Neuralink&limit=5&offset=0&content_type=ocr" | jq

Other examples of querying the API:

# 2. Search with content type filter (OCR)

curl "http://localhost:3030/search?q=QUERY_HERE&limit=5&offset=0&content_type=ocr"

# 3. Search with content type filter (Audio)

curl "http://localhost:3030/search?q=QUERY_HERE&limit=5&offset=0&content_type=audio"

# 4. Search with pagination

curl "http://localhost:3030/search?q=QUERY_HERE&limit=10&offset=20"

# 5. Search with no query (should return all results)

curl "http://localhost:3030/search?limit=5&offset=0"

Keep in mind that it's still experimental.
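The same search endpoint can be called from code. The sketch below uses only the Python standard library and reproduces the query parameters from the curl examples (`q`, `limit`, `offset`, `content_type`). The helper names are hypothetical, and since the JSON response shape is not described here, `search` returns the decoded body as-is and `paginate` leaves it to the caller to extract the list of results from each page.

```python
import json
from typing import Any, Callable, Dict, Iterator, List, Optional
from urllib.parse import urlencode
from urllib.request import urlopen

# Default local API address used in the curl examples above.
BASE_URL = "http://localhost:3030/search"


def build_search_url(
    q: Optional[str] = None,
    limit: int = 5,
    offset: int = 0,
    content_type: Optional[str] = None,
    base_url: str = BASE_URL,
) -> str:
    """Build a /search URL with the same parameters (and order) as the curl examples."""
    params: Dict[str, Any] = {}
    if q is not None:
        params["q"] = q  # omit q to search everything
    params["limit"] = limit
    params["offset"] = offset
    if content_type is not None:
        params["content_type"] = content_type  # "ocr" or "audio" in the examples
    return f"{base_url}?{urlencode(params)}"  # urlencode also escapes spaces etc.


def search(**kwargs: Any) -> Any:
    """Call the local API and return the decoded JSON body (shape not assumed)."""
    with urlopen(build_search_url(**kwargs)) as resp:
        return json.load(resp)


def paginate(fetch_page: Callable[[int, int], List[Any]], limit: int = 10) -> Iterator[Any]:
    """Walk offset-based pages, stopping at the first page shorter than `limit`.

    fetch_page(offset, limit) must return the list of items for that page.
    """
    if limit <= 0:
        raise ValueError("limit must be positive")
    offset = 0
    while True:
        page = fetch_page(offset, limit)
        yield from page
        if len(page) < limit:
            break
        offset += limit
```

For example, `search(q="Neuralink", content_type="ocr")` issues the same request as the basic curl query. To page through everything, wrap `search` in a small function that pulls the result list out of the response; inspect a real response first to see where that list lives.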

screenpipe_demo2.1.mp4

- RAG & question answering: Quickly find information you've forgotten or misplaced
- Automation:
  - Automatically generate documentation
  - Populate CRM systems with relevant data
  - Synchronize company knowledge across platforms
  - Automate repetitive tasks based on screen content
- Analytics:
  - Track personal productivity metrics
  - Organize and analyze educational materials
  - Gain insights into areas for personal improvement
  - Analyze work patterns and optimize workflows
- Personal assistant:
  - Summarize lengthy documents or videos
  - Provide context-aware reminders and suggestions
  - Assist with research by aggregating relevant information
- Collaboration:
  - Share and annotate screen captures with team members
  - Create searchable archives of meetings and presentations
- Compliance and security:
  - Track what your employees are really up to
  - Monitor and log system activities for audit purposes
  - Detect potential security threats based on screen content

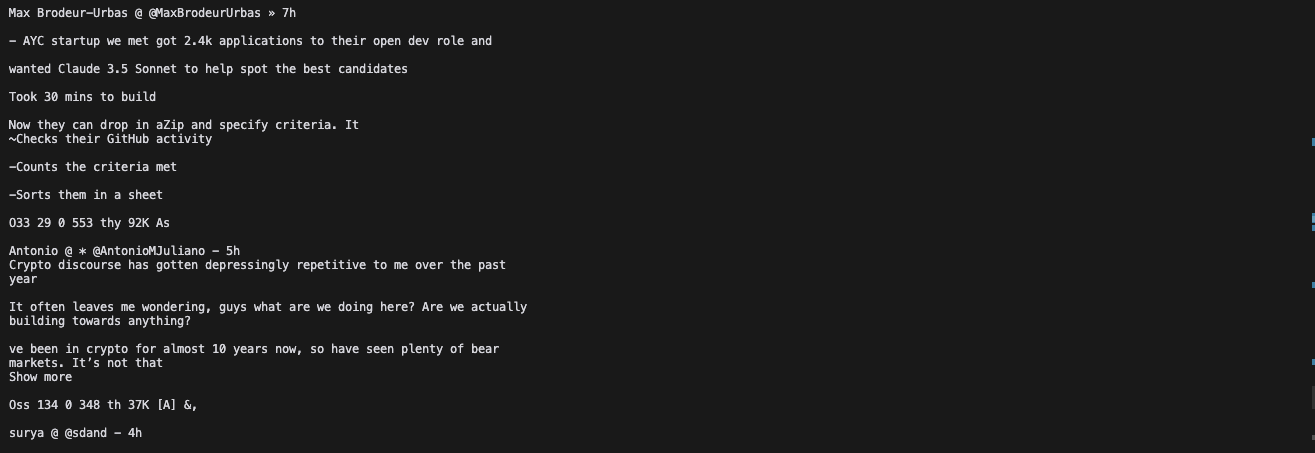

Check out this example built on screenpipe: a chatbot that queries your data to answer your questions.

070424.mp4

Alpha: runs 24/7 on my computer (MacBook Pro M3, 32 GB RAM).

- screenshots

- optimised screen & audio recording (mp4 & mp3 encoding, an estimated 30 GB/month with default settings)

- sqlite local db

- OCR

- audio + stt (works with multi input & output devices)

- local api

- TS SDK

- multimodal embeddings

- cloud storage options (s3, pgsql, etc.)

- cloud computing options

- bug-free & stable

- custom storage settings: customizable capture settings (fps, resolution)

- data encryption options & higher security

- fast, optimised, energy-efficient modes

An example using the vercel/ai-chatbot project is available inside the repo at examples/ts/vercel-ai-chatbot.

Recent breakthroughs in AI have shown that context is the final frontier. AI will soon be able to incorporate the context of an entire human life into its 'prompt', and the technologies that enable this kind of personalisation should be available to all developers to accelerate access to the next stage of our evolution.

This is a library intended to stick to a simple use case:

- record the screen & associated metadata (generated locally or in the cloud) and pipe it somewhere (local, cloud)

Think of this as an API that lets you do this:

screenpipe | ocr | llm "send what i see to my CRM" | api "send data to salesforce api"

Any interfaces are out of scope and should be built outside this repo, for example:

- UI to search on these files (like rewind)

- UI to spy on your employees

- etc.
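The pipe-style flow described above (`screenpipe | ocr | llm | api`) is essentially function composition: each stage consumes the previous stage's output. The Python sketch below shows only that idea. Every stage is a hypothetical stand-in returning fixed data; none of them is a screenpipe API, and in a real integration they would wrap the local API, an LLM call, and a CRM client.

```python
from functools import reduce
from typing import Any, Callable

Stage = Callable[[Any], Any]


def pipe(*stages: Stage) -> Stage:
    """Compose stages left to right, like a shell pipeline: pipe(f, g)(x) == g(f(x))."""
    return lambda value: reduce(lambda acc, stage: stage(acc), stages, value)


# Hypothetical stand-ins for each stage; none of these are screenpipe APIs.
def capture(_: Any) -> dict:
    return {"frame": "<screenshot bytes>"}


def ocr(frame: dict) -> str:
    return "Meeting with ACME about renewal"


def llm(text: str) -> dict:
    return {"contact": "ACME", "note": text}


def send_to_crm(record: dict) -> str:
    return f"queued CRM update for {record['contact']}"


flow = pipe(capture, ocr, llm, send_to_crm)
print(flow(None))  # queued CRM update for ACME
```

Keeping each stage a plain function makes it easy to swap one out (for example, a different LLM or destination API) without touching the others, which mirrors the "pipe it somewhere" goal above.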

Contributions are welcome! If you'd like to contribute, please read CONTRIBUTING.md.

The code in this project is licensed under the MIT license. See the LICENSE file for more information.

This is a very quick & dirty example of the end goal that works in a few lines of Python: https://github.com/louis030195/screen-to-crm

We're very thankful for https://github.com/jasonjmcghee/xrem, which was helpful, although screenpipe is going in a different direction.

What's the difference between screenpipe and adept.ai or rewind.ai?

- adept.ai is a closed product focused on automation, while we are open and focused on enabling tooling & infra for a wide range of applications like adept

- rewind.ai is a closed product focused on a single use case (they only focus on meetings now); it is not customisable or extendable by developers, and your data is owned by them

Where is the data stored?

- 100% of the data stays local in a SQLite database and mp4/mp3 files. You own your data.
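Because everything lives in a local SQLite file, you can also inspect it from code instead of the `sqlite3` CLI. The Python sketch below uses the standard `sqlite3` module and mirrors the CLI queries shown earlier; the table and column names (`ocr_text.frame_id`, `ocr_text.text`, `audio_transcriptions.id`) come from those queries, while the helper names are hypothetical. Other columns of `audio_transcriptions` are not documented here, so that helper returns whatever columns exist.

```python
import sqlite3
from typing import Any, Dict, List, Optional


def open_readonly(path: str = "data/db.sqlite") -> sqlite3.Connection:
    """Open the database read-only so inspection never writes to screenpipe's DB.

    Uses an SQLite URI; a path containing '?' or '#' would need percent-encoding.
    """
    return sqlite3.connect(f"file:{path}?mode=ro", uri=True)


def list_tables(conn: sqlite3.Connection) -> List[str]:
    """Roughly the Python counterpart of `sqlite3 data/db.sqlite ".tables"`."""
    rows = conn.execute(
        "SELECT name FROM sqlite_master "
        "WHERE type IN ('table', 'view') AND name NOT LIKE 'sqlite_%' "
        "ORDER BY name"
    ).fetchall()
    return [name for (name,) in rows]


def latest_ocr_text(conn: sqlite3.Connection) -> Optional[str]:
    """Text of the newest ocr_text row by frame_id, or None if the table is empty."""
    row = conn.execute(
        "SELECT text FROM ocr_text ORDER BY frame_id DESC LIMIT 1"
    ).fetchone()
    return row[0] if row else None


def latest_audio_transcription(conn: sqlite3.Connection) -> Optional[Dict[str, Any]]:
    """Newest audio_transcriptions row as a {column: value} dict, or None."""
    cur = conn.execute("SELECT * FROM audio_transcriptions ORDER BY id DESC LIMIT 1")
    row = cur.fetchone()
    if row is None:
        return None
    columns = [desc[0] for desc in cur.description]  # column names of the result
    return dict(zip(columns, row))
```

Usage, from the folder where you started screenpipe: `conn = open_readonly()`, then `print(list_tables(conn))` and `print(latest_ocr_text(conn))`, and finally `conn.close()`.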

Do you encrypt the data?

- Not yet, but we're working on it. We want to provide you with the highest level of security.

How can I customize capture settings to reduce storage and energy usage?

- You can adjust frame rates and resolution in the configuration. Lower values will reduce storage and energy consumption. We're working on making this more user-friendly in future updates.

What are some practical use cases for screenpipe?

- RAG & question answering

- Automation (write code somewhere else while watching you code, write docs, fill your CRM, sync your company's knowledge, etc.)

- Analytics (track human performance, education, become aware of how you can improve, etc.)

- etc.

- We're constantly exploring new use cases and welcome community input!