HttpInference is an example HTTP Android client and HTTP server for deep learning offloading.

- git clone https://github.com/lovgrammer/HttpInference.git

- Import the project into Android Studio

- Build and install the app on your Android device

- Install python3

- ./install.sh

- cd infserver

- python3 manage.py migrate

- python3 manage.py runserver ip:port

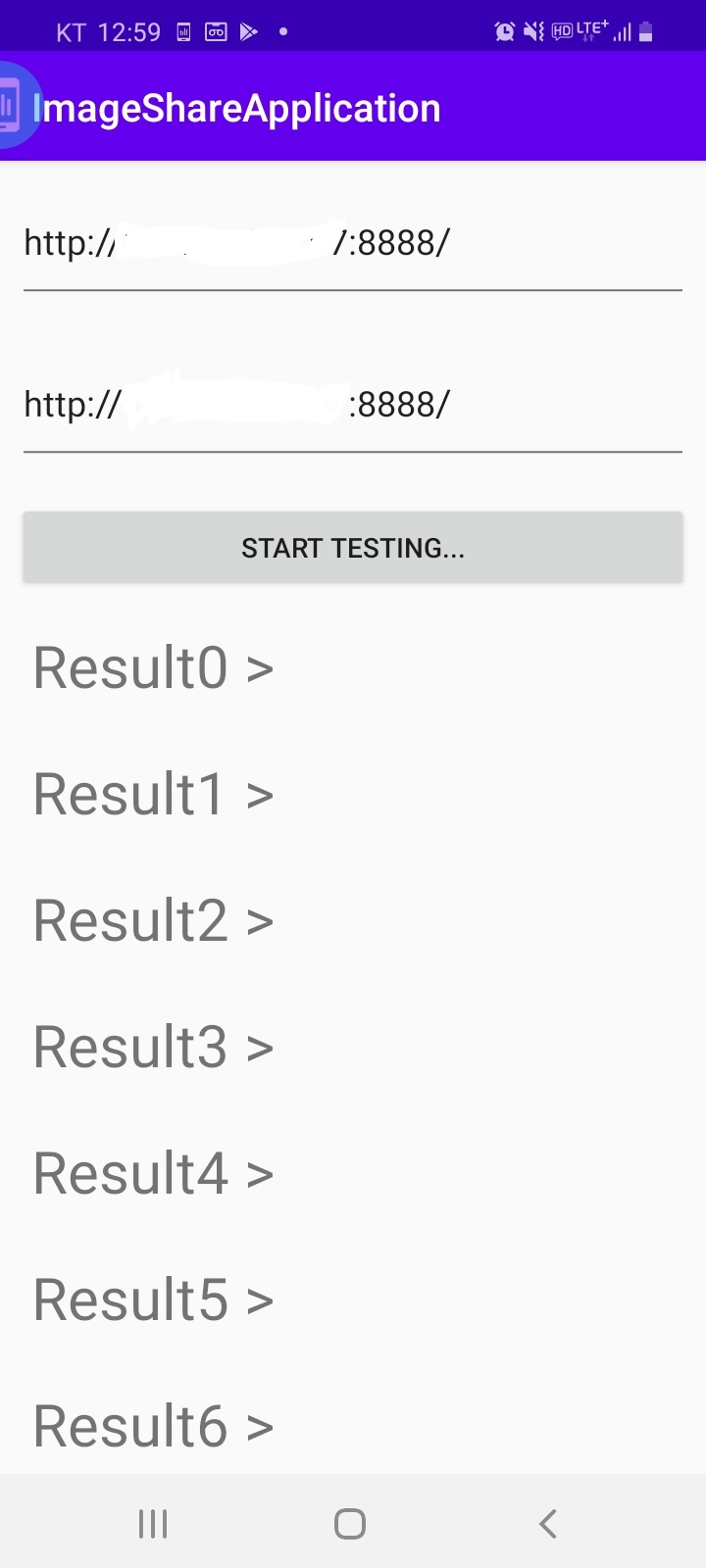

- Enter the remote1 and remote2 server addresses in the form "http://yourip:yourport/" (the trailing / is required). For example, remote1 can be an edge server and remote2 a cloud server.

- Click the start button
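
Because the app expects each server address to end with `/`, it can help to check the string before typing it in. The following is a minimal sketch, not part of HttpInference: the function name `normalize_server_url` is hypothetical, and `192.0.2.10:8000` is only a placeholder address.

```python
from urllib.parse import urlparse


def normalize_server_url(raw: str) -> str:
    """Return the address with exactly one trailing slash.

    Hypothetical helper: raises ValueError when the scheme or host is missing.
    """
    url = raw.strip()
    parsed = urlparse(url)
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        raise ValueError(f"expected http://yourip:yourport/, got {raw!r}")
    # Drop any existing trailing slashes, then add exactly one back.
    return url.rstrip("/") + "/"


if __name__ == "__main__":
    # Placeholder address from the documentation-only 192.0.2.0/24 range.
    print(normalize_server_url("http://192.0.2.10:8000"))
```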

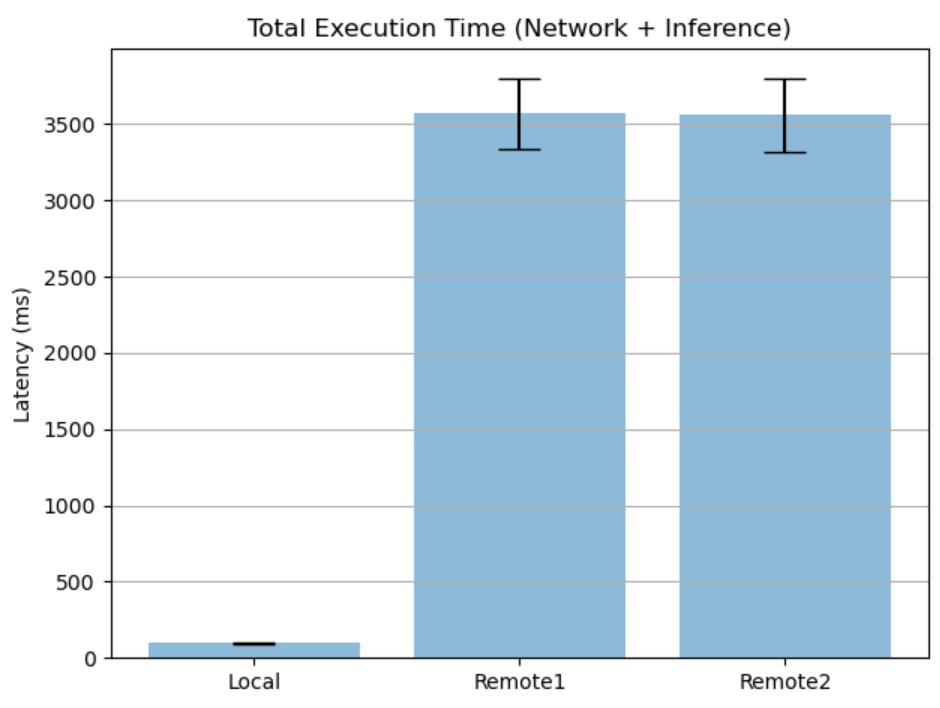

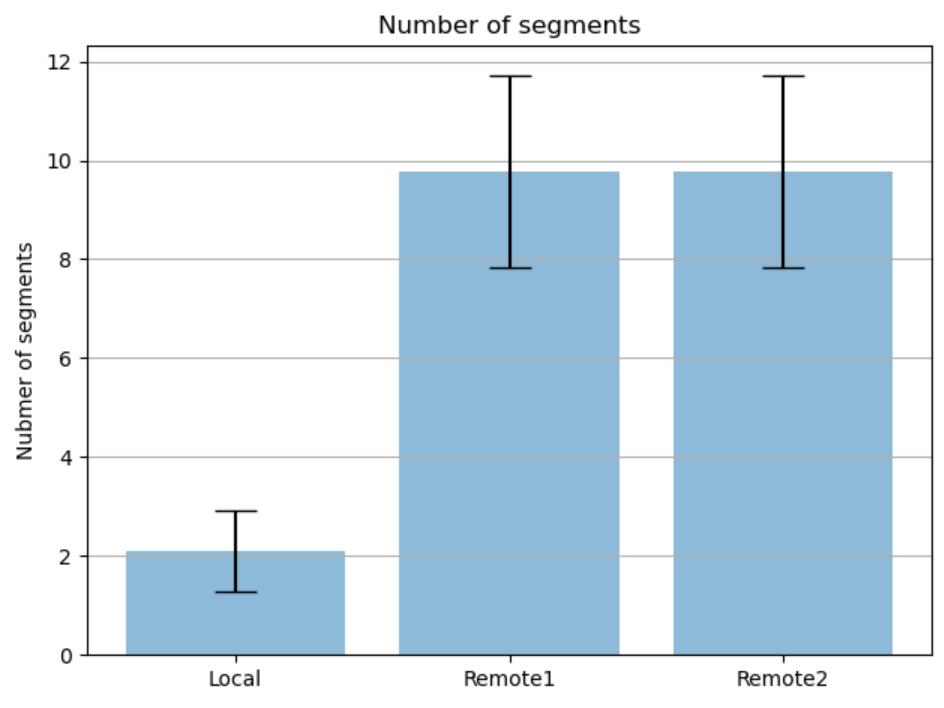

- The HttpInference app then runs three processes on 100 images:

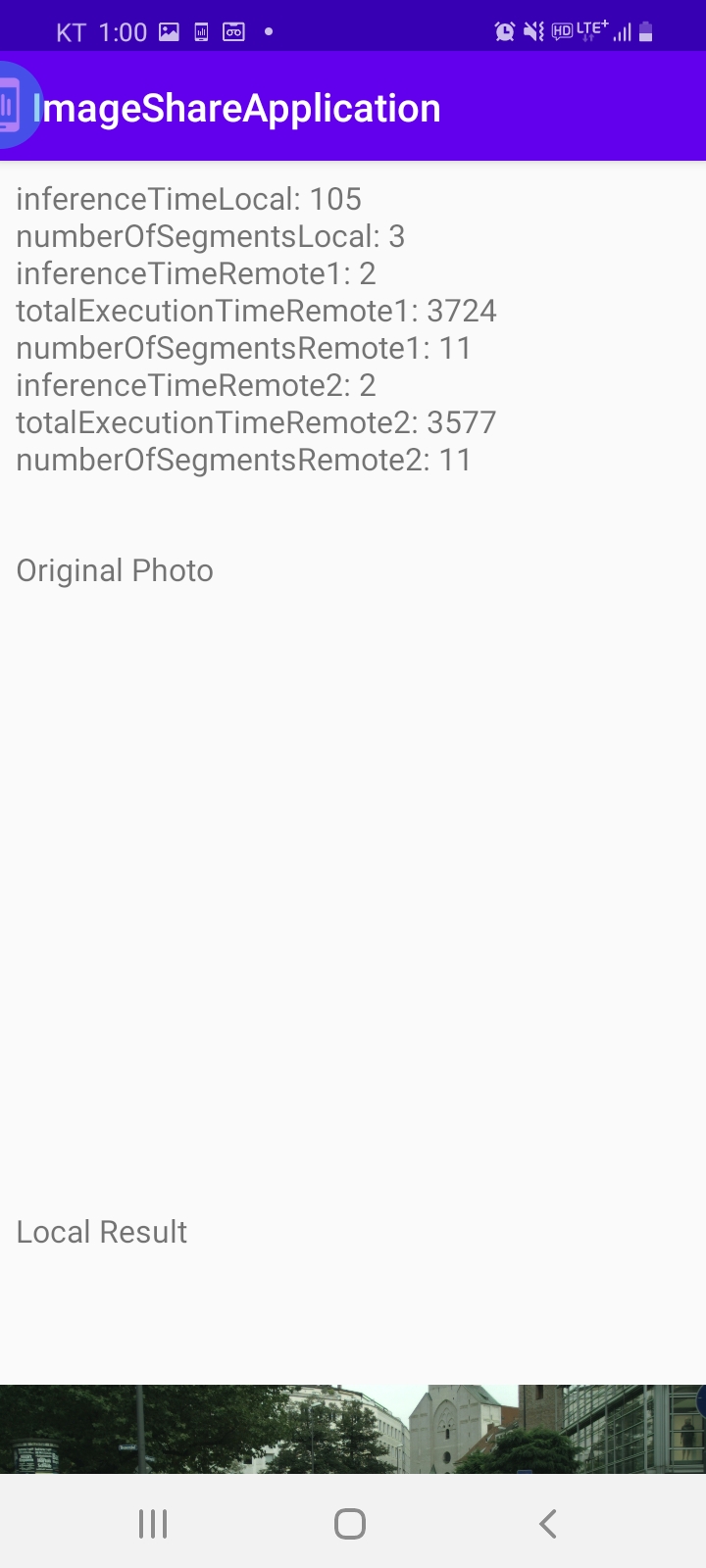

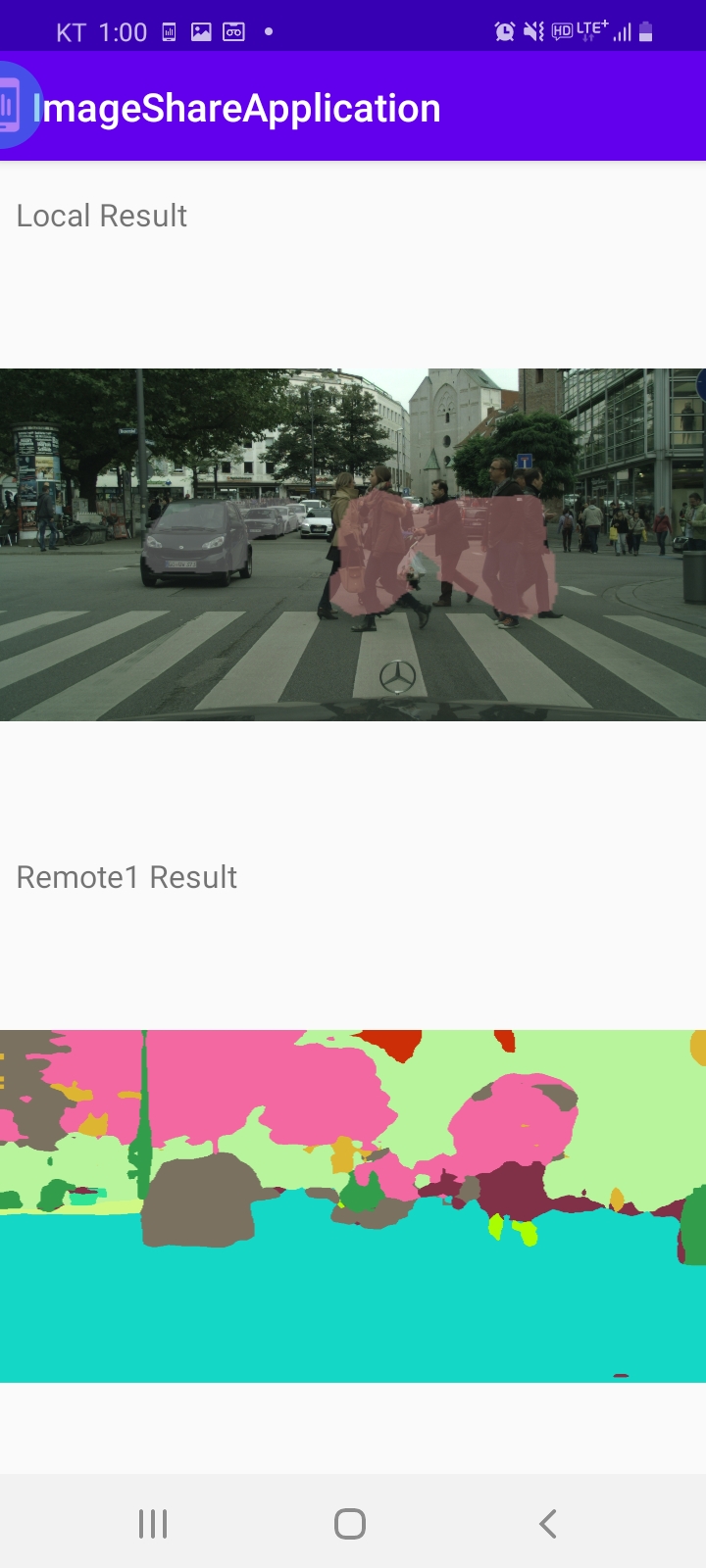

- Run segmentation inference on the local device

- Send the file to the remote1 inference server and receive the result image from the server

- Send the file to the remote2 inference server and receive the result image from the server
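
The two remote steps above amount to uploading an image over HTTP and timing the round trip. The sketch below shows one way to measure that from Python using only the standard library. It is an illustration under assumptions, not the app's actual code: `timed` and `post_image` are hypothetical names, and sending the image as a raw POST body is a guess, since the real endpoint path and upload format are defined by the `infserver` project.

```python
import time
import urllib.request


def timed(fn, *args):
    """Call fn(*args) and return (result, elapsed milliseconds)."""
    start = time.perf_counter()
    result = fn(*args)
    elapsed_ms = (time.perf_counter() - start) * 1000.0
    return result, elapsed_ms


def post_image(url: str, image_bytes: bytes, timeout: float = 30.0) -> bytes:
    """POST raw image bytes and return the response body.

    Assumption: the server accepts a raw request body; the real server
    may expect a different format (for example multipart form data).
    """
    request = urllib.request.Request(
        url,
        data=image_bytes,
        headers={"Content-Type": "application/octet-stream"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read()
```

With a running server, a call such as `timed(post_image, "http://yourip:yourport/", image_bytes)` returns the response body together with the round-trip latency in milliseconds.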

- The latency measurements and segmentation results are stored in /sdcard/result.csv

- adb pull /sdcard/result.csv .

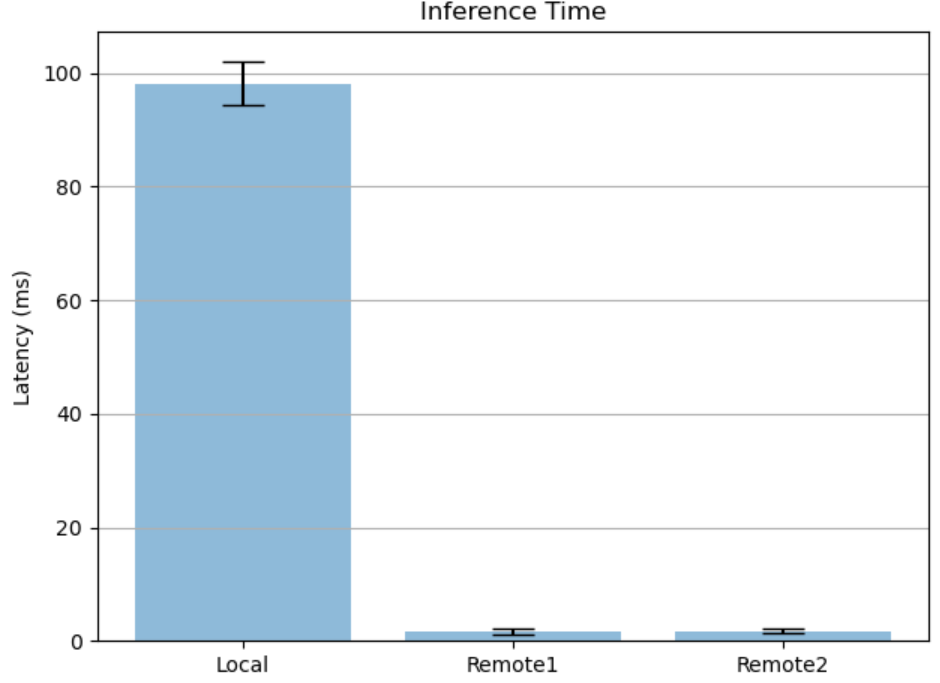

- python analysis_inftime.py

- python analysis_segnum.py

- python analysis_totaltime.py
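
The analysis scripts are not reproduced here. As a rough sketch of the kind of summary one could compute from the pulled file, the helper below averages one numeric column. It assumes `result.csv` has a header row, which this README does not confirm; `column_mean` and any column name passed to it are hypothetical.

```python
import csv
import io
import statistics


def column_mean(csv_text: str, column: str) -> float:
    """Return the mean of a numeric column in CSV text that has a header row."""
    reader = csv.DictReader(io.StringIO(csv_text))
    # Skip rows where the column is missing or empty.
    values = [float(row[column]) for row in reader if row.get(column)]
    if not values:
        raise ValueError(f"no values found for column {column!r}")
    return statistics.fmean(values)


def column_mean_from_file(path: str, column: str) -> float:
    """Same as column_mean, but reads the CSV from a file path."""
    with open(path, newline="") as handle:
        return column_mean(handle.read(), column)
```

For example, `column_mean_from_file("result.csv", "local_ms")` would average a column named `local_ms`, if the file has one.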