1). Create a virtual env with Python 2.7

virtualenv -p python2 venv

2). Add the root directory of this project to PYTHONPATH

For example, if you cloned this project into ~/mygit, add ~/mygit/dev_virt to PYTHONPATH:

export PYTHONPATH=$PYTHONPATH:~/mygit/dev_virt

3). Install the pip requirements

source venv/bin/activate && pip install -r requirements.txt

4). Set DB_HOST to the database address, e.g. example.com, or 127.0.0.1:8000 for a local test

export DB_HOST=127.0.0.1:8000

If you don't have a CMDB yet, leave DB_HOST unset. The tools will then skip all database operations.
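The "unset means no database" rule can be illustrated with a small sketch. The helper names `get_db_host` and `sync_enabled` are hypothetical and not taken from this project. Treating an empty value the same as an unset one is an assumption.

```python
import os


def get_db_host(environ=None):
    """Return DB_HOST, or None when it is unset or empty (hypothetical helper)."""
    environ = os.environ if environ is None else environ
    # Assumption: an empty DB_HOST is treated the same as an unset one.
    host = environ.get("DB_HOST", "").strip()
    return host or None


def sync_enabled(environ=None):
    """Database operations are skipped entirely when no DB_HOST is configured."""
    return get_db_host(environ) is not None
```

With `export DB_HOST=127.0.0.1:8000` in the shell, `sync_enabled()` would return True; without it, callers can skip every database step.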

5). Set the platform env: Xen for XenServer or KVM for the KVM platform. The default is Xen.

export PLATFORM=KVM
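As a rough illustration of the default, the sketch below reads PLATFORM and falls back to Xen. The function name `get_platform` is hypothetical. Rejecting values other than Xen and KVM is an assumption about how such a check might behave, not a description of this project's code.

```python
import os

SUPPORTED_PLATFORMS = ("Xen", "KVM")


def get_platform(environ=None):
    """Return the selected platform, defaulting to Xen (hypothetical helper)."""
    environ = os.environ if environ is None else environ
    platform = environ.get("PLATFORM", "Xen")
    # Assumption: any value other than Xen or KVM is rejected.
    if platform not in SUPPORTED_PLATFORMS:
        raise ValueError("Unsupported PLATFORM: %r" % platform)
    return platform
```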

6). A log server is available that writes debug and exception information to /var/log/virt.log. Start the log server with sudo if your user has no write permission to the directory /var/log:

sudo nohup python ~/dev_virt/lib/Log/logging_server.py &

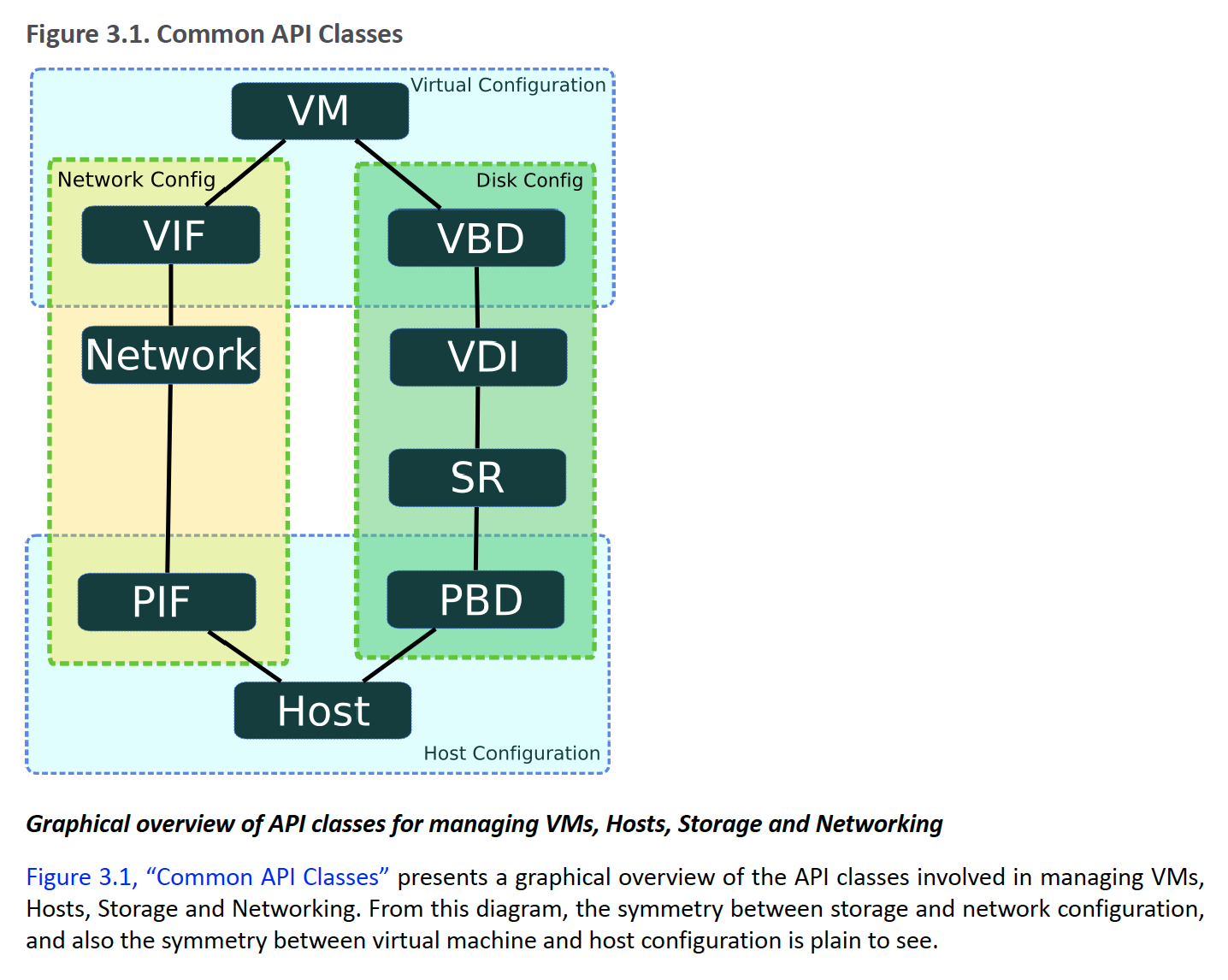

- PIF: physical interface, the eth on server

- VIF: virtual interface, the eth on VM

- SR: storage repository, the storage pool on server

Note: schedule_node.py can automatically schedule the requested VM to an available server. You can list the supported roles with --list-roles. If the role you want does not exist, you can define a new one in etc/Nodeconfig.json. Each role is a dict that defines the cpu, memory, etc. You can define k8s-master, k8s-node, or etcd templates as you want.

For example:

"jenkins": {

"cpu": 8,

"memory": 32,

"template": "template",

"disk_size": 100,

"add_disk_num": 2

}
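A new role can be checked before use with a sketch like the one below. It assumes the config is a JSON object that maps role names to dicts, and it treats all five keys from the jenkins example as required, which is an assumption. The names `load_role` and `REQUIRED_KEYS` are hypothetical.

```python
import json

# Assumption: every role needs the same keys as the "jenkins" example.
REQUIRED_KEYS = ("cpu", "memory", "template", "disk_size", "add_disk_num")

EXAMPLE_CONFIG = """
{
  "jenkins": {
    "cpu": 8,
    "memory": 32,
    "template": "template",
    "disk_size": 100,
    "add_disk_num": 2
  }
}
"""


def load_role(config_text, role):
    """Parse the config text and return the dict for one role."""
    roles = json.loads(config_text)
    if role not in roles:
        raise KeyError("role %r is not defined" % role)
    spec = roles[role]
    missing = [key for key in REQUIRED_KEYS if key not in spec]
    if missing:
        raise ValueError("role %r is missing keys: %s" % (role, ", ".join(missing)))
    return spec
```

Calling `load_role(EXAMPLE_CONFIG, "jenkins")` returns the dict shown above, while an undefined role raises `KeyError`.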

schedule_node.py --role=rolename --cluster=<dev|test|prod>

Note: users can define the VM naming rule in the function generate_vmname_key(role, cluster) in the file lib/Utils/schedule.py

schedule_node.py --role=rolename --name=new_vm_name --host=hostip --ip=vm_ip

- setup_vms.py --validate xmlFile: Validate the given xml file

- setup_vms.py --create xmlFile: Create the VMs according to the xml file

To create VMs from XML, write an XML config that follows the example below. Before actually creating the VMs, run setup_vms.py --validate to check your XML.

<servers xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:noNamespaceSchemaLocation="">

<SERVER serverIp="192.168.1.17" user="root" passwd="xxxxxx">

<!--VM element: vmname and template are required, the others are optional. To keep an optional parameter the same as in the template, don't set it here -->

<VM vmname="createViaXml" cpucores="2" cpumax="4" memory="2" maxMemory="4" template="NewTemplate">

<!--IP element: vifIndex and ip are required, device/network/bridge are optional. If none of device/network/bridge is given, the management network is chosen by default -->

<IP vifIndex="0" ip="192.168.1.240" network="xenbr1" />

<!--add multiple IPs here -->

<!--DISK element: size is required, storage is optional. If no storage is given, the storage with the largest free size is chosen -->

<DISK size="2" storage="Local storage" />

<!-- add multiple DISK here -->

</VM>

<!-- add multiple VMs here in the same server -->

</SERVER>

<!--here you can add another server -->

<SERVER serverIp="192.168.1.15" user="root" passwd="xxxxxx">

<!--VM element: vmname and template are required, the others are optional -->

<VM vmname="createViaXml2" cpucores="1" cpumax="2" minMemory="1" memory="2" maxMemory="3" template="NewTemplate">

<!--IP element: vifIndex and ip are required, device/network/bridge are optional. If none of device/network/bridge is given, the management network is chosen by default -->

<IP vifIndex="0" ip="192.168.1.241" device="eth1" />

<IP vifIndex="1" ip="192.168.1.241" network="xenbr1" />

<IP vifIndex="2" ip="192.168.1.242" bridge="xenbr1" />

<!--DISK element: size is required, storage is optional. If no storage is given, the storage with the largest free size is chosen -->

<DISK size="2" />

</VM>

</SERVER>

</servers>

- sync_server_info.py: Sync both server and VM information to the DB, and create a record in the DB if it does not exist

- sync_server_info.py --update: Just update information in the DB
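For the XML config format used by setup_vms.py, the required attributes named in the example's comments (vmname and template on VM, vifIndex and ip on IP, size on DISK) can be pre-checked with a short sketch. This is not the logic behind setup_vms.py --validate; the function name `find_missing_attributes` is hypothetical and the check covers only those required attributes.

```python
import xml.etree.ElementTree as ET

# Required attributes per element, as stated in the example's comments.
REQUIRED = {
    "VM": ("vmname", "template"),
    "IP": ("vifIndex", "ip"),
    "DISK": ("size",),
}


def find_missing_attributes(xml_text):
    """Return a list of (tag, attribute) pairs for each missing required attribute."""
    root = ET.fromstring(xml_text)
    problems = []
    for element in root.iter():
        for attr in REQUIRED.get(element.tag, ()):
            if attr not in element.attrib:
                problems.append((element.tag, attr))
    return problems
```

An empty result means every VM, IP, and DISK element carries its required attributes; it says nothing about whether the values are valid on the target server.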

- --list-vm: List all the vms in server.

- --list-templ: List all the templates in the server.

- --list-network: List the bridge/switch network in the host

- --list-SR: List the storage repository information in the host

- -c VM_NAME, --create=VM_NAME: Create a new VM with a template.

- -t TEMPLATE, --templ=TEMPLATE: Template used to create a new VM.

create_vm.py -c "test_vm" -t "CentOS 7.2 Template"

- --vif=VIF_INDEX: The index of virtual interface on which configure will be

- --device=DEVICE: The target physical NIC name with an associated network that the VIF is attached to

- --network=NETWORK: The target bridge/switch network that the VIF is connected to

- --ip=VIF_IP: The ip assigned to the virtual interface

A VIF index (--vif) is required, together with either a PIF name (--device) or a bridge name (--network).

create_vm.py -c "test2" -t "CentOS 7.2 template" --ip=192.168.1.100 --vif=0 --device=eth0

create_vm.py -c "test2" -t "CentOS 7.2 template" --ip=192.168.1.100 --vif=0 --network="xapi0"

If neither --device nor --network is given, the default management network is used:

create_vm.py -c "test2" -t "CentOS 7.2 template" --ip=192.168.1.100 --vif=0
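The option rules above can be summarized in a short sketch: --ip needs a VIF index, and the network target falls back to the default management network when neither --device nor --network is given. The function name `resolve_vif_target` is hypothetical. Letting --device win when both options are passed is an assumption, since the text does not cover that case.

```python
def resolve_vif_target(ip=None, vif=None, device=None, network=None):
    """Return a (kind, name) pair describing where the VIF should attach."""
    if ip is not None and vif is None:
        raise ValueError("--ip requires a VIF index (--vif)")
    # Assumption: --device takes precedence if both options are given.
    if device is not None:
        return ("device", device)
    if network is not None:
        return ("network", network)
    # Neither option given: fall back to the default management network.
    return ("default", None)
```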

- --cpu-max=MAX_CORES: Config the max VCPU cores.

- --cpu-cores=CPU_CORES: Config the number of startup VCPUs for the newly created VM

create_vm.py -c "test2" -t "CentOS 7.2 template" --cpu-cores=2 --cpu-max=4

The max cpu cores can be configured only while the VM is powered off, and it sets the upper limit for changing the cpu cores while the VM is running.
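The two CPU rules (the max can change only while the VM is powered off, and the core count cannot exceed the max) can be expressed as a small check. The function `check_cpu_request` and its parameters are hypothetical and only illustrate the constraints stated above.

```python
def check_cpu_request(vm_running, current_max, cpu_cores=None, cpu_max=None):
    """Return a list of problems; an empty list means the request looks valid."""
    problems = []
    if cpu_max is not None and vm_running:
        problems.append("--cpu-max can only be changed while the VM is powered off")
    # The limit that applies: a new max only takes effect on a halted VM.
    if cpu_max is not None and not vm_running:
        effective_max = cpu_max
    else:
        effective_max = current_max
    if cpu_cores is not None and cpu_cores > effective_max:
        problems.append("--cpu-cores=%d exceeds the max of %d" % (cpu_cores, effective_max))
    return problems
```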

- --memory=MEMORY_SIZE: Config the target memory size in GB.

- --min-mem=MIN_MEMORY: Config the min static memory size in GB.

- --max-mem=MAX_MEMORY: Config the max static memory size in GB.

There are two kinds of memory settings: static and dynamic. Static memory can be set only while the VM is powered off; dynamic memory can be set whether the VM is running or stopped. The memory limits must satisfy: min_static_memory <= min_dynamic_memory <= max_dynamic_memory <= max_static_memory. max_static_memory is the upper limit for dynamic memory changes made while the VM is running.

- --memory sets both the min dynamic memory and the max dynamic memory to the target value; when the VM powers on, it runs at that memory size

- --min-mem sets the min static memory

- --max-mem sets the max static memory

create_vm.py -c "test2" -t "CentOS 7.2 template" --memory=2 --max-mem=4
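The ordering constraint on the four memory limits, and the effect of --memory on the two dynamic limits, can be sketched as follows. The function names and the dict layout are hypothetical; sizes are in GB as in the options above.

```python
def memory_limits_ok(limits):
    """Check min_static <= min_dynamic <= max_dynamic <= max_static."""
    return (limits["min_static"] <= limits["min_dynamic"]
            <= limits["max_dynamic"] <= limits["max_static"])


def apply_memory_option(limits, memory_gb):
    """Mimic --memory: set both dynamic limits to the target size."""
    updated = dict(limits)
    updated["min_dynamic"] = memory_gb
    updated["max_dynamic"] = memory_gb
    return updated


template_limits = {"min_static": 1, "min_dynamic": 1, "max_dynamic": 1, "max_static": 4}
requested = apply_memory_option(template_limits, 2)
```

Here `requested` satisfies the constraint because 1 <= 2 <= 2 <= 4. Asking for 8 GB with the same static limits would violate it until max_static is raised with --max-mem.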

- --add-disk=DISK_SIZE: The size (GB) of the disk to add to the VM

- --storage=STORAGE_NAME: The storage location where the virtual disk is placed

create_vm.py "test1" --add-disk=2 --storage=data2

If --storage is not given, the storage with the largest free volume is used:

create_vm.py "test1" --add-disk=2
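The fallback to the storage with the most free space can be sketched like this. The function name `pick_storage` and the (name, free_gb) tuple layout are hypothetical; the storage names in the example are made up apart from data2 and "Local storage", which appear in this document.

```python
def pick_storage(storages, requested=None):
    """Pick a storage name from a list of (name, free_gb) tuples."""
    if not storages:
        raise ValueError("no storage available")
    if requested is not None:
        for name, _free_gb in storages:
            if name == requested:
                return name
        raise ValueError("storage %r not found" % requested)
    # No --storage given: choose the one with the largest free size.
    return max(storages, key=lambda item: item[1])[0]


available = [("Local storage", 120), ("data2", 800), ("data3", 450)]
```

With this list, `pick_storage(available)` selects data2, and `pick_storage(available, requested="data3")` honors the explicit choice.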

To delete a VM, use delete_vm.py --vm=vm_to_delete. The script asks the user to enter 'yes' or 'no' to confirm.

- delete_vm.py --vm=vm_name [--host=ip --user=user --pwd=passwd]

If --force is set, the additional disks on the VM are also deleted. This feature is currently supported only on KVM.
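A yes/no confirmation of this kind might look like the sketch below. It is not the code in delete_vm.py: the function name `confirm_delete`, the prompt text, and the choice to ask again on any other answer are all assumptions.

```python
try:
    read_line = raw_input  # Python 2
except NameError:
    read_line = input  # Python 3


def confirm_delete(vm_name, ask=read_line):
    """Ask until the user answers 'yes' or 'no'; return True only for 'yes'."""
    while True:
        answer = ask("Delete VM %s? (yes/no): " % vm_name).strip().lower()
        if answer == "yes":
            return True
        if answer == "no":
            return False
        # Assumption: any other answer triggers another prompt.
```

Passing the prompt function as a parameter keeps the check testable without a terminal.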

- --list-vif: List the virtual interface device in guest VM

- --list-pif: List the interface device in the host

- --list-network: List the bridge/switch network in the host

- --list-SR: List the storage repository information in the host

4.1). Configure a VM's interface: add a VIF, delete a VIF, or configure a VIF (the old one is deleted if it exists, otherwise a new one is created). The --ip, --device, and --network options work the same as when creating a VM.

- --add-vif=ADD_INDEX: Add a virtual interface device to guest VM

- --del-vif=DEL_INDEX: Delete a virtual interface device from guest VM

- --vif=VIF_INDEX: Configure on a virtual interface device

config_vm.py "test1" --vif=0 --ip=192.168.1.200 --device=eth0

config_vm.py "test1" --add-vif=1 --device=eth1 --ip=192.168.1.200 --netmask=255.255.255.0

- --cpu-cores=CPU_CORES: Config the VCPU cores live for a running VM, or the number of startup VCPUs for a halted VM

config_vm.py "test1" --cpu-cores=4

- --memory=MEMORY_SIZE: Config the target memory size in GB.

config_vm.py "test1" --memory=1

- --add-disk=DISK_SIZE: The size (GB) of the disk to add to the VM

- --storage=STORAGE_NAME: The storage location where the virtual disk is placed

config_vm.py "test1" --add-disk=2 --storage=data2

If --storage is not given, the storage with the largest free volume is used:

config_vm.py "test1" --add-disk=2

- power_on.py vm1 vm2 [--host=ip --user=user --pwd=passwd]

- power_off.py vm1 vm2 [--host=ip --user=user --pwd=passwd]

- --list: List the cpu and memory information

- --list-disk: List the virtual disk size

- --list-vifs: List all the VIFs information

If no option is given, all basic information is listed, including cpu, memory, os, disk, interface mac and IP.

Note: the VIF index is the one given to XenServer. It is not always the index of the eth device inside the VM guest, which depends on the order in which the virtual interfaces were created.

- --list-sr: List all the storage information

- --list-pif: List all the interface information

- --list-bond: List all the bond information

- --list-vm: List all the vms

- --list-templ: List all the templates in the server.

- --list-network: List the network in the host (in XenServer, it is the same as bridge)

- --list-bridge: List the bridge/switch names in the host

dump_host.py [--host=ip --user=user --pwd=passwd]

dump_host.py --list-sr [--host=ip --user=user --pwd=passwd]