git clone https://github.com/syamsv/Pyosint.git

cd Pyosint

pip3 install -r requirements.txt

Usage:

python3 pyosint.py [OPTIONS]

The main functionality of this program is divided into three parts:

- Find - Module to search for usernames across a list of 326 websites

- Scrap - Module to scrape a website, extract all of its links, and store them in a file

- Enum - Module to automate the search for subdomains of a given domain using different services
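The idea behind the Find module, checking one username against many sites in parallel, can be sketched roughly as follows. This is only an illustration and not the project's actual code: the `SITES` templates, the `profile_exists` check (HTTP 200 means "found") and the `find_username` helper are all assumptions made for the example.

```python
from concurrent.futures import ThreadPoolExecutor
from urllib.request import Request, urlopen

# Hypothetical URL templates; the real tool ships its own list of sites.
SITES = {
    "github": "https://github.com/{}",
    "gitlab": "https://gitlab.com/{}",
}


def profile_exists(url, timeout=5):
    """Assumed check: treat an HTTP 200 answer as 'profile found'."""
    request = Request(url, headers={"User-Agent": "Mozilla/5.0"})
    try:
        with urlopen(request, timeout=timeout) as response:
            return response.status == 200
    except OSError:
        # Covers HTTPError, URLError and socket timeouts.
        return False


def find_username(username, sites=SITES, threads=8, check=profile_exists):
    """Check every site in parallel; return the site names where the user exists."""
    urls = {name: template.format(username) for name, template in sites.items()}
    with ThreadPoolExecutor(max_workers=threads) as pool:
        # pool.map keeps the input order, so results line up with the site names.
        results = dict(zip(urls, pool.map(check, urls.values())))
    return sorted(name for name, found in results.items() if found)
```

Passing the check as a parameter keeps the threading logic separate from the network call, so it can be swapped for a stricter per-site test.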

In the Scrap module, results are automatically stored in the output/web folder, with the IP address of the website as the filename.
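As a rough illustration of what extracting links from a page involves, here is a minimal sketch using only the Python standard library. It is not the Scrap module's own implementation; `LinkCollector` and `extract_links` are hypothetical names, and the `limit` parameter simply mirrors the idea of capping the number of URLs.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag, resolved against a base URL."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                absolute = urljoin(self.base_url, value)
                # Keep first-seen order and skip duplicates.
                if absolute not in self.links:
                    self.links.append(absolute)


def extract_links(html, base_url, limit=None):
    """Return the unique links found in html, optionally capped at limit."""
    parser = LinkCollector(base_url)
    parser.feed(html)
    return parser.links if limit is None else parser.links[:limit]
```

For example, `extract_links(page_source, "http://scanme.nmap.org", limit=50)` would return at most 50 absolute URLs found in `page_source`.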

The services used by the Enum module are VirusTotal, PassiveDns, CrtSearch, and ThreatCrowd.

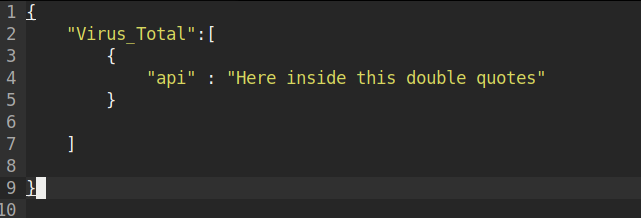

The Enum module needs a VirusTotal API key, which you can get from VirusTotal.

Paste the key inside the api.json file:

* If this step is skipped, VirusTotal may block your requests.
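If you want to confirm that the key is in place before running the Enum module, a small check like the one below may help. Note that the field name `"virustotal"` is an assumption made for this sketch; look at the api.json shipped with the project for the actual key name. `load_virustotal_key` is likewise a hypothetical helper and not part of Pyosint.

```python
import json
from pathlib import Path


def load_virustotal_key(path="api.json", field="virustotal"):
    """Return the stored API key, or None if the file or key is missing or empty.

    The default field name is an assumption; adjust it to match api.json.
    """
    file = Path(path)
    if not file.is_file():
        return None
    try:
        data = json.loads(file.read_text(encoding="utf-8"))
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None
    key = data.get(field)
    if not isinstance(key, str):
        return None
    # Treat a blank string the same as a missing key.
    return key.strip() or None
```

A return value of `None` means the key still has to be pasted into the file.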

The program accepts the following arguments:

| Arguments | Short form | Long form | Functionality |
|---|---|---|---|
| Name | -n | --name | To specify the domain name or username to use |
| Module | -m | --module | To specify which module to use |
| Output | -o | --output | To specify the output file name |
| Thread | -t | --threads | To specify the number of threads to use [ Not applicable to web crawling ] |
| Limit | -l | --limit | To specify the maximum number of web URLs to crawl [ Applicable only to web crawling ] |
| Verbose | -v | --verbose | To enable verbose mode [ Applicable only to Enumeration ] |
| Ports | -p | --ports | To specify the ports to scan [ Applicable only to Enumeration ] |
| Help | -h | --help | To show the help options |
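For readers curious how a command line like this can be wired up, here is a minimal `argparse` sketch that mirrors the flags in the table. It is not taken from the Pyosint source: which options are required, the absence of defaults, and the `build_parser` name are assumptions made for illustration. The module choices come from the three modules described earlier.

```python
import argparse


def build_parser():
    """Build a parser with the same flags as the table (-h/--help is added by argparse)."""
    parser = argparse.ArgumentParser(prog="pyosint.py")
    # Assumption: name and module are treated as required in this sketch.
    parser.add_argument("-n", "--name", required=True,
                        help="domain name or username to use")
    parser.add_argument("-m", "--module", required=True,
                        choices=["find", "scrap", "enum"],
                        help="module to use")
    parser.add_argument("-o", "--output", help="output file name")
    parser.add_argument("-t", "--threads", type=int,
                        help="number of threads (not used for web crawling)")
    parser.add_argument("-l", "--limit", type=int,
                        help="maximum number of URLs to crawl (web crawling only)")
    parser.add_argument("-v", "--verbose", action="store_true",
                        help="verbose mode (enumeration only)")
    parser.add_argument("-p", "--ports",
                        help="ports to scan (enumeration only)")
    return parser


if __name__ == "__main__":
    print(build_parser().parse_args())
```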

python3 pyosint.py -m find -n exampleuser <-- Username hunt

python3 pyosint.py -m scrap -n http://scanme.nmap.org <-- Scraping using the bot

python3 pyosint.py -m enum -n google.com <-- Subdomain enumeration

The project is still in development, and more functionality will be added.

Happy to hear suggestions for improvement.

This is only for educational and research purposes. The developers will not be held responsible for any harm caused by anyone who misuses the material.

Pyosint is licensed under the GNU GPL license. Take a look at the LICENSE for more information.

- The code has been completely rewritten

- Improved interface

- Subdomain enumeration module (enum) has been added

- The Find module code has been optimised. The number of sites to automate has grown from 14 to 147, and the connection error has been resolved.

- Program has been rewritten to work with arguments

- Threading functionality has been added to the Find module

- Output functionality has been added to every module

- More error handling has been added

- Number of sites has been increased from 147 to 326

- Cross-platform portability

- Removed unused and unwanted code

- Removed console mode