Attention Mechanism Exploits Temporal Contexts: Real-time 3D Human Pose Reconstruction (CVPR 2020 Oral)

More extensive evaluation and code can be found on our lab website: https://sites.google.com/a/udayton.edu/jshen1/cvpr2020

PyTorch code for the paper "Attention Mechanism Exploits Temporal Contexts: Real-time 3D Human Pose Reconstruction". pdf

If you find this code useful, please cite the following paper:

@inproceedings{liu2020attention,
  title={Attention Mechanism Exploits Temporal Contexts: Real-Time 3D Human Pose Reconstruction},
  author={Liu, Ruixu and Shen, Ju and Wang, He and Chen, Chen and Cheung, Sen-ching and Asari, Vijayan},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  pages={5064--5073},
  year={2020}
}

The code was developed and tested in the following environment:

- Python 3.6

- PyTorch 1.1 or higher

- CUDA 10
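The requirements above can be checked before training with a small script. This is only a sketch: `parse_version`, `meets_minimum` and `check_environment` are hypothetical helper names, not part of this repository, and the CUDA entry only reports whether PyTorch can see a CUDA device, not which CUDA version is installed.

```python
import re
import sys


def parse_version(text):
    """Return the leading numeric components of a version string as ints."""
    match = re.match(r"(\d+(?:\.\d+)*)", text)
    if match is None:
        raise ValueError(f"unrecognised version string: {text!r}")
    return tuple(int(part) for part in match.group(1).split("."))


def meets_minimum(version_text, minimum):
    """True if version_text (e.g. '1.13.1+cu117') is at least `minimum`."""
    return parse_version(version_text) >= tuple(minimum)


def check_environment():
    """Report which of the listed requirements the current interpreter meets."""
    report = {"python": sys.version_info[:2] >= (3, 6)}
    try:
        import torch
    except ImportError:
        # PyTorch is not installed, so neither check can pass.
        report["pytorch"] = False
        report["cuda"] = False
    else:
        report["pytorch"] = meets_minimum(torch.__version__, (1, 1))
        # Assumption: only device visibility is checked, not the CUDA version.
        report["cuda"] = torch.cuda.is_available()
    return report


if __name__ == "__main__":
    print(check_environment())
```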

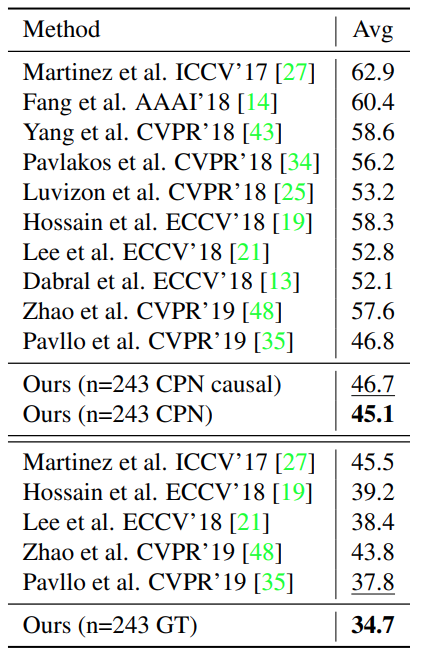

The source code is for training and evaluating on the Human3.6M dataset. Our code is compatible with the dataset setup introduced by Martinez et al. and Pavllo et al. Please refer to VideoPose3D to set up the Human3.6M dataset (./data directory). We upload the training 2D CPN data here and the 3D ground-truth data here. The 3D avatar model and code are available here.

To train a model from scratch, run:

python run.py -da -tta

-da enables data augmentation during training and -tta enables test-time data augmentation.
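To illustrate what the two flags might do, here is a dependency-free sketch of flip-based augmentation. It assumes the augmentation is horizontal flipping as in VideoPose3D, which this code base follows; that assumption has not been checked against run.py. The names `flip_pose` and `predict_with_tta`, the three-joint toy skeleton and the biased toy model are all hypothetical.

```python
def flip_pose(pose, left, right):
    """Mirror one pose: negate x and swap each left joint with its right partner."""
    flipped = [[-joint[0]] + list(joint[1:]) for joint in pose]
    for l, r in zip(left, right):
        flipped[l], flipped[r] = flipped[r], flipped[l]
    return flipped


def predict_with_tta(model, pose_2d, left, right):
    """Average the direct prediction with the un-flipped prediction on the mirrored input."""
    direct = model(pose_2d)
    mirrored = flip_pose(model(flip_pose(pose_2d, left, right)), left, right)
    return [[(a + b) / 2.0 for a, b in zip(j1, j2)]
            for j1, j2 in zip(direct, mirrored)]


# Toy skeleton (hypothetical): joint 0 is the root, 1 is left, 2 is right.
LEFT, RIGHT = [1], [2]
pose = [[0.0, 0.0], [1.0, 2.0], [-1.0, 2.0]]


def biased_model(pose_2d):
    """Stand-in for a 2D-to-3D lifter that always drifts by +1 along x."""
    return [[x + 1.0, y, 0.0] for x, y in pose_2d]


if __name__ == "__main__":
    print(predict_with_tta(biased_model, pose, LEFT, RIGHT))
```

In this toy case the left/right bias of the stand-in model cancels when the two predictions are averaged. For training-time augmentation, the same `flip_pose` could be applied to both the 2D input and the 3D target to produce an extra mirrored sample.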

For example, to train our 243-frame ground truth model or causal model in our paper, please run:

python run.py -k gt

or

python run.py -k cpn_ft_h36m_dbb --causal

Training should take 24 hours on one TITAN RTX GPU.
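The difference between the default and the --causal model can be pictured as which input frames feed the prediction for a given output frame. The sketch below assumes a 243-frame receptive field that is centred on the target frame by default and that ends at the target frame in causal mode, as in VideoPose3D-style temporal models. `window_frames` and `future_frames_needed` are hypothetical helpers, not functions from this repository.

```python
def window_frames(t, receptive_field=243, causal=False):
    """Return (first, last) input frame indices used to predict frame t."""
    if causal:
        # Causal: only the current frame and past frames are visible.
        return t - (receptive_field - 1), t
    # Non-causal (assumed symmetric): equal past and future context.
    half = (receptive_field - 1) // 2
    return t - half, t + half


def future_frames_needed(receptive_field=243, causal=False):
    """Number of frames after t that must arrive before frame t can be predicted."""
    _, last = window_frames(0, receptive_field, causal)
    return last


if __name__ == "__main__":
    print(window_frames(300))               # symmetric window around frame 300
    print(window_frames(300, causal=True))  # window ending at frame 300
    print(future_frames_needed(), future_frames_needed(causal=True))
```

Under these assumptions the symmetric model needs 121 future frames before it can output a pose, while the causal model needs none, which is what makes it suitable for real-time use.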

We provide the pre-trained cpn model here and ground truth model here. To evaluate them, put them into the ./checkpoint directory and run:

For cpn model:

python run.py -tta --evaluate cpn.bin

For ground truth model:

python run.py -k gt -tta --evaluate gt.bin

We keep our code consistent with VideoPose3D. Please refer to their project page for further information.