azure-k3s-cluster (WIP)

A (WIP) dynamically resizable k3s cluster for Azure, based on my azure-docker-swarm-cluster project.

What

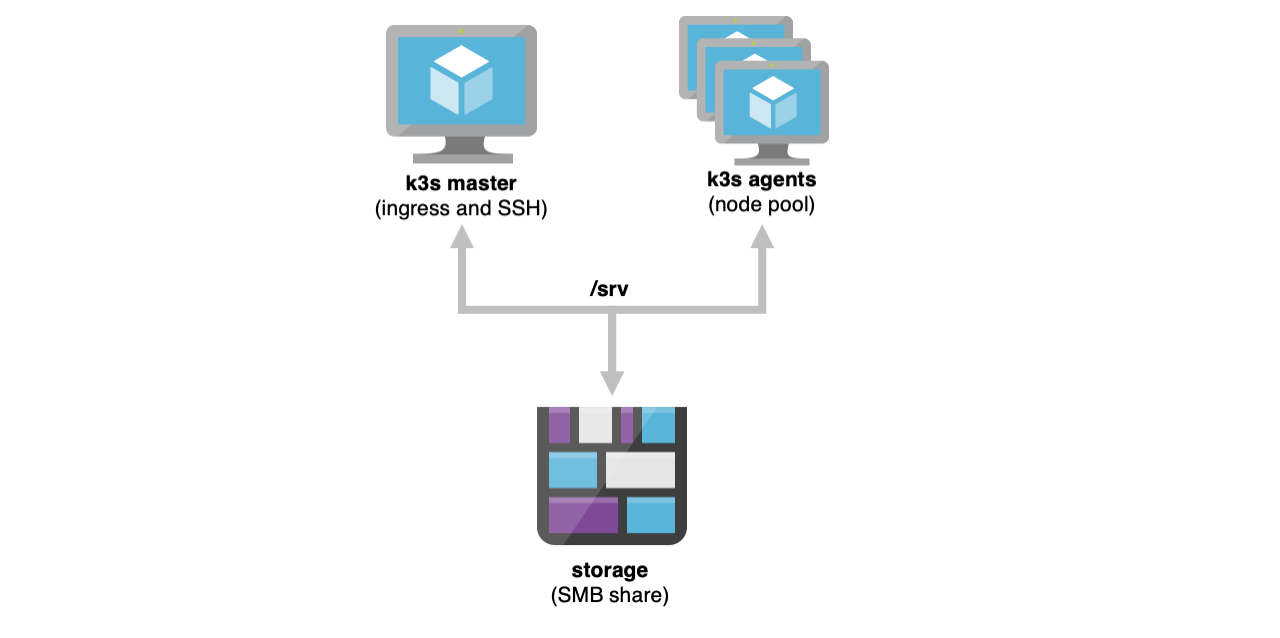

This is an Azure Resource Manager template that automatically deploys a k3s cluster atop Ubuntu 20.04. This cluster has a single master VM and a VM scaleset for workers/agents, plus required network infrastructure.

The template defaults to deploying B-Series VMs (B1ls) with the smallest possible managed disk size (S4, 32GB). It also deploys (and mounts) an Azure File Share on all machines with (very) permissive access at /srv, which makes it quite easy to run stateful services.

The key aspect of this template is that you can add and remove agents at will simply by resizing the VM scaleset, which is very handy when running the node pool as spot instances. The cluster comes with a few (very simple) helper scripts that allow nodes to join and leave the cluster as they are created/destroyed, and the k3s scheduler will redeploy pods as needed.

Why

This was originally built as a Docker Swarm template, and even though Azure has a perfectly serviceable Kubernetes managed service, I enjoy the challenge of building my own stuff and fine-tuning it.

k3s is a breath of fresh air, and an opportunity to play around with a simpler, slimmer version of Kubernetes--and break it to see what happens.

Also, a lot of the ARM templating involved (for metrics, managed identities, etc.) lacked comprehensive samples when I started the project, so this was also a way for me to provide a fully working example that other people can learn from.

Roadmap

- air-gapped (i.e., standalone) install without `curl`

- test metrics server

- document `cloud-config`

- clean `kubernetes-dashboard` deployment

- WIP: sample deployments/pods/charts

- TODO: Leverage Instance Protection and Scale-In Policies

- WIP: simple Python scale-down helper (blog post on how I'm going to do that with managed service identities and the instance metadata service)

- support an (insecure) private registry hosted on the master node (requires using `docker` instead of `containerd`, but saves a lot of hassle when doing tests)

- temporarily remove Docker support so that I can explore `k3c`

- temporarily remove advanced Azure Linux diagnostics extensions (3.0) due to incompatibility with Ubuntu 20.04 (extension looks for `python` instead of `python3`)

- update Azure templates, helper scripts and `cloud-config` for Ubuntu 20.04 and `python3`

- upgrade to `k3s` v1.19.4+k3s1

- Handle eviction notifications

- Use Spot Instances for node pool

- upgrade to `k3s` v1.17.0+k3s.1

- upgrade to `k3s` 1.0.1

- upgrade to `k3s` 1.0.0

- upgrade to `k3s` 0.8.0

- upgrade to `k3s` 0.7.0

- upgrade to `k3s` 0.6.0

- re-usable user-assigned service identity instead of system (per-machine)

- Managed Service Identity for master and role allocations to allow it to manage the scaleset (and the rest of the resource group)

- add Linux Monitoring Extension (3.x) to master and agents (visible in the "Guest (classic)" metrics namespace in Azure Portal)

- scratch folder on agents' temporary storage volume (on-hypervisor SSD), available as `/mnt/scratch`

- set timezone

- `bash` completion for `kubectl` in master node

- remove scale set load balancer (everything must go through `traefik` on the master)

- re-enable first-time reboot after OS package updates

- private registry on master node

- trivial ingress through master node (built-in)

- Set node role labels

- install `k3s` via `cloud-config`

- change `cloud-config` to expose `k3s` token to agents

- remove unused packages from `cloud-config`

- remove unnecessary commands from `Makefile`

- remove unnecessary files from repo and trim history

- fork, new `README`

Makefile commands

- `make keys` - generates an SSH key for provisioning

- `make deploy-storage` - deploys shared storage

- `make params` - generates ARM template parameters

- `make deploy-compute` - deploys cluster resources and pre-provisions Docker on all machines

- `make view-deployment` - views deployment progress

- `make list-agents` - lists all agent VMs

- `make scale-agents-<number>` - scales the agent VM scale set to `<number>` instances, e.g., `make scale-agents-10` will resize it (up or down) to 10 VMs

- `make stop-agents` - stops all agents

- `make start-agents` - starts all agents

- `make reimage-agents-parallel` - nukes and paves all agents

- `make reimage-agents-serial` - reimages all agents in sequence

- `make chaos-monkey` - restarts all agents in random order

- `make proxy` - opens an SSH session to `master0` and sets up TCP forwarding to `localhost`

- `make tail-helper` - opens an SSH session to `master0` and tails the `k3s-helper` log

- `make list-endpoints` - lists DNS aliases

- `make destroy-cluster` - destroys the entire cluster
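For reference, resizing a scale set comes down to a single Azure CLI call (`az vmss scale` with `--new-capacity`). The sketch below shows how a `scale-agents-<number>` target name could be turned into that call. It is an illustration only, not what the `Makefile` actually runs: the function names and the resource group and scale set names are hypothetical.

```python
import re


def parse_scale_target(target):
    """Extract the instance count from a target name such as 'scale-agents-10'."""
    match = re.fullmatch(r"scale-agents-(\d+)", target)
    if match is None:
        raise ValueError(f"not a scale target: {target!r}")
    return int(match.group(1))


def scale_command(resource_group, scale_set, count):
    """Build the Azure CLI argument list that resizes a VM scale set."""
    return [
        "az", "vmss", "scale",
        "--resource-group", resource_group,  # hypothetical name supplied by the caller
        "--name", scale_set,                 # hypothetical name supplied by the caller
        "--new-capacity", str(count),
    ]


if __name__ == "__main__":
    count = parse_scale_target("scale-agents-10")
    # Print the command instead of running it, so the sketch has no side effects.
    print(" ".join(scale_command("my-k3s-group", "agents", count)))
```

Passing the resulting list to `subprocess.run` would perform the resize, assuming you are logged in with `az login`.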

Recommended Sequence

    az login
    make keys
    make deploy-storage
    make params
    make deploy-compute
    make view-deployment
    # Go to the Azure portal and check the deployment progress

    # Clean up after we're done working
    make destroy-cluster

Requirements

- Python

- The Azure CLI (`pip install -U -r requirements.txt` will install it)

- GNU `make` (you can just read through the `Makefile` and type the commands yourself)

Internals

master0 runs a very simple HTTP server (only accessible inside the cluster) that provides tokens for new VMs to join the cluster and an endpoint for them to signal that they're leaving. That server also cleans up the node table once agents are gone.
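A minimal sketch of what such a helper could look like, using only the Python standard library. This is not the script shipped in this repo: the `/join` and `/leave/<node>` routes, port `1337` and all function names are assumptions made for illustration. The token path is the default location where a k3s server writes its node token.

```python
import json
import subprocess
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from pathlib import Path

# Default location of the join token on a k3s server node.
TOKEN_PATH = Path("/var/lib/rancher/k3s/server/node-token")


def read_token(path=TOKEN_PATH):
    """Return the cluster join token without trailing whitespace."""
    return Path(path).read_text().strip()


def remove_node(name):
    """Drop a departed agent from the node table."""
    # check=False: a node that is already gone should not crash the helper.
    subprocess.run(["kubectl", "delete", "node", name], check=False)


def route(path, get_token=read_token, drop_node=remove_node):
    """Map a request path to (status, payload). Both routes are hypothetical."""
    if path == "/join":
        return 200, {"token": get_token()}
    if path.startswith("/leave/"):
        node = path[len("/leave/"):]
        if not node:
            return 400, {"error": "missing node name"}
        drop_node(node)
        return 200, {"removed": node}
    return 404, {"error": "not found"}


class Handler(BaseHTTPRequestHandler):
    def do_GET(self):
        status, payload = route(self.path)
        body = json.dumps(payload).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(host="0.0.0.0", port=1337):
    """Run the helper. Restricting access to the cluster is left to network rules."""
    ThreadingHTTPServer((host, port), Handler).serve_forever()
```

Keeping the routing in a plain function makes it easy to exercise without opening a socket; calling `serve()` starts the actual listener.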

Upon provisioning, all agents try to obtain a token and join the cluster. Upon rebooting, they signal that they're leaving the cluster and then rejoin it.

This is done in the simplest possible way, by using cloud-init to bootstrap a few helper scripts that are invoked upon shutdown and (re)boot. Check the YAML files for details.
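The agent side of that flow could be sketched as follows. Again, this is an illustration rather than the actual helper: it assumes the master exposes hypothetical `/join` and `/leave/<node>` routes on port `1337`, and that agents join with `k3s agent --server ... --token ...` against the default API port `6443`.

```python
import json
import socket
import time
import urllib.request

MASTER = "master0"   # master host name, as used in this README
HELPER_PORT = 1337   # hypothetical port for the helper on the master


def helper_url(action, node=None):
    """Build the URL for a (hypothetical) helper route on the master."""
    url = f"http://{MASTER}:{HELPER_PORT}/{action}"
    return f"{url}/{node}" if node else url


def fetch_token(opener=urllib.request.urlopen, retries=5, delay=5.0):
    """Ask the master for a join token, retrying while it is still booting."""
    for attempt in range(retries):
        try:
            with opener(helper_url("join"), timeout=10) as response:
                return json.load(response)["token"]
        except OSError:  # covers connection errors and urllib's URLError
            if attempt == retries - 1:
                raise
            time.sleep(delay)


def join_command(token):
    """Argument list that starts a k3s agent against the master."""
    return ["k3s", "agent", "--server", f"https://{MASTER}:6443", "--token", token]


def signal_leave(node=None, opener=urllib.request.urlopen):
    """Tell the master this node is going away (run from a shutdown hook)."""
    node = node or socket.gethostname()
    with opener(helper_url("leave", node), timeout=10) as response:
        return response.read()
```

A boot-time script would call `fetch_token()` and run `join_command(token)`, while a shutdown hook would call `signal_leave()`.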

Provisioning Flow

To avoid using VM extensions (which are nice, but opaque to most people used to using cloud-init) and to ensure each fresh deployment runs the latest Docker version, VMs are provisioned using customData in their respective ARM templates.

cloud-init files and SSH keys are then packed into the JSON parameters file and submitted as a single provisioning transaction, and upon first boot Ubuntu takes the cloud-init file and provisions the machine accordingly.
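As a rough sketch of that packing step (not the actual `make params` implementation), the snippet below builds an ARM deployment parameters document from two cloud-init files and an SSH public key. The parameter names (`adminUsername`, `adminPublicKey`, `masterCustomData`, `agentCustomData`) and the default user name are hypothetical, and it assumes the templates expect `customData` to arrive already base64-encoded.

```python
import base64
import json

# Schema URL used by ARM deployment parameter files.
SCHEMA = "https://schema.management.azure.com/schemas/2015-01-01/deploymentParameters.json#"


def b64(text):
    """Base64-encode a string, returning text suitable for JSON."""
    return base64.b64encode(text.encode("utf-8")).decode("ascii")


def build_parameters(master_cloud_config, agent_cloud_config, ssh_public_key,
                     admin_username="cluster"):
    """Return a parameters document; every parameter name here is hypothetical."""
    values = {
        "adminUsername": admin_username,
        "adminPublicKey": ssh_public_key.strip(),
        "masterCustomData": b64(master_cloud_config),
        "agentCustomData": b64(agent_cloud_config),
    }
    return {
        "$schema": SCHEMA,
        "contentVersion": "1.0.0.0",
        # ARM expects each parameter wrapped as {"value": ...}.
        "parameters": {name: {"value": value} for name, value in values.items()},
    }


if __name__ == "__main__":
    params = build_parameters("#cloud-config\n", "#cloud-config\n", "ssh-rsa AAAA... user@host\n")
    print(json.dumps(params, indent=2))
```

The resulting JSON can then be handed to the deployment together with the template, so that the whole cluster is submitted in one transaction.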

See azure-docker-swarm-cluster for more details.

Disclaimers

Keep in mind that this was written for conciseness and ease of experimentation -- look to AKS for a production service.