📈 Stock Exchange data analysis using PySpark.

This project is my "hello world" with PySpark, and it originated from a practice activity in IGTI's Data Science Bootcamp. It applies PySpark to a simple data analysis of a Stock Exchange dataset, which was extracted from this Kaggle repository.

The easiest way to reproduce this analysis is with Google Colab. You just need to upload the quiz_colab.ipynb and all_stocks_5yr.csv files to a new Colab session and run the notebook.

I chose to create an environment with Jupyter and Spark on my local machine using a Docker Compose file, which uses the Jupyter PySpark Notebook image. Details on installing Docker Compose can be found in its official documentation.

Once you have it installed on your machine, all you need to do is run the following commands in a terminal window:

- Clone the repository:

$ git clone https://github.com/lucasfusinato/pyspark-stock-exchange-analysis

- Open the project's folder:

$ cd pyspark-stock-exchange-analysis

- Start the containers:

$ docker-compose up -d

And that's all! Now you should be able to access the notebook (and run it yourself) by clicking on this link.

The project uses the following technologies:

- Docker Compose: tool for defining and running multi-container Docker applications;

- Jupyter: notebook execution environment;

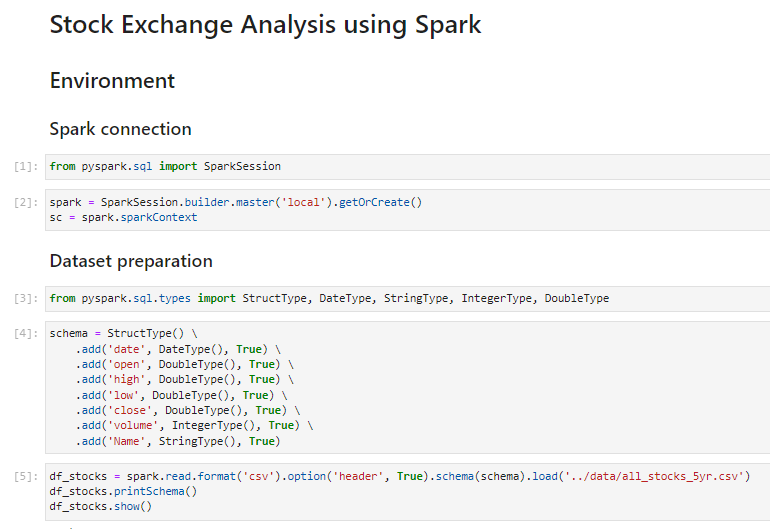

- Spark: engine for large-scale data analytics;

- PySpark: interface for Apache Spark in Python.