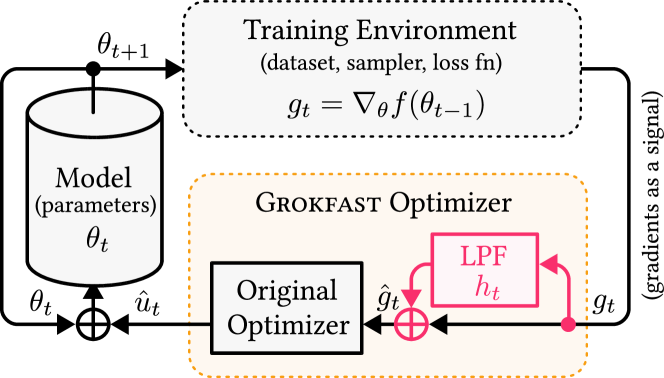

Explorations into "Grokfast: Accelerated Grokking by Amplifying Slow Gradients", out of Seoul National University in Korea. In particular, I will compare it with NAdam on modular addition as well as a few other tasks, since I am curious why those experiments were left out of the paper. If it holds up, I will polish it into a nice package for quick use.

The official repository can be found here

```bash
$ pip install grokfast-pytorch
```

```python
import torch
from torch import nn

# toy model

model = nn.Linear(10, 1)

# import GrokFastAdamW and instantiate with parameters

from grokfast_pytorch import GrokFastAdamW

opt = GrokFastAdamW(
    model.parameters(),
    lr = 1e-4,
    weight_decay = 1e-2
)

# forward and backwards

loss = model(torch.randn(10))
loss.backward()

# optimizer step

opt.step()
opt.zero_grad()
```

- run all experiments on small transformer

- modular addition

- pathfinder-x

- run against nadam and some other optimizers

- see if `exp_avg` could be repurposed for amplifying slow grads

- add the foreach version only if above experiments turn out well
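
The "amplifying slow grads" idea in the list above can be sketched without the package: keep an exponential moving average (EMA) of each parameter's gradient and add a scaled copy of it back onto the raw gradient before the optimizer step. The helper name `amplify_slow_grads` and the values `alpha = 0.98` and `lamb = 2.0` are assumptions chosen for illustration, not the API of `grokfast-pytorch` and not necessarily the settings used in the paper. A minimal sketch on top of plain `torch.optim.AdamW`:

```python
import torch
from torch import nn

def amplify_slow_grads(model, ema_grads, alpha = 0.98, lamb = 2.0):
    # hypothetical helper for illustration, not part of grokfast-pytorch
    # ema_grads maps parameter name -> running EMA of that parameter's gradient
    for name, param in model.named_parameters():
        if param.grad is None:
            continue

        grad = param.grad.detach()

        if name not in ema_grads:
            # first step: initialize the slow component with the current gradient
            ema_grads[name] = grad.clone()
        else:
            # ema <- alpha * ema + (1 - alpha) * grad
            ema_grads[name].mul_(alpha).add_(grad, alpha = 1. - alpha)

        # add the amplified slow component onto the raw gradient, in place
        param.grad.add_(ema_grads[name], alpha = lamb)

    return ema_grads

torch.manual_seed(0)

model = nn.Linear(10, 1)
opt = torch.optim.AdamW(model.parameters(), lr = 1e-4, weight_decay = 1e-2)

ema_grads = {}

for _ in range(3):
    loss = model(torch.randn(4, 10)).pow(2).mean()
    loss.backward()

    ema_grads = amplify_slow_grads(model, ema_grads)

    opt.step()
    opt.zero_grad()

# AdamW already tracks an EMA of the gradients it is given, under the `exp_avg` state key
exp_avg = opt.state[model.weight]['exp_avg']
```

Since AdamW's `exp_avg` is itself an EMA of gradients, repurposing it would amount to dropping the separate `ema_grads` buffer. Whether that behaves the same is the open question in the list above; this sketch does not settle it.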

```bibtex
@inproceedings{Lee2024GrokfastAG,
    title   = {Grokfast: Accelerated Grokking by Amplifying Slow Gradients},
    author  = {Jaerin Lee and Bong Gyun Kang and Kihoon Kim and Kyoung Mu Lee},
    year    = {2024},
    url     = {https://api.semanticscholar.org/CorpusID:270123846}
}
```

```bibtex
@misc{kumar2024maintaining,
    title   = {Maintaining Plasticity in Continual Learning via Regenerative Regularization},
    author  = {Saurabh Kumar and Henrik Marklund and Benjamin Van Roy},
    year    = {2024},
    url     = {https://openreview.net/forum?id=lyoOWX0e0O}
}
```

```bibtex
@inproceedings{anonymous2024the,
    title   = {The Complexity Dynamics of Grokking},
    author  = {Anonymous},
    booktitle = {Submitted to The Thirteenth International Conference on Learning Representations},
    year    = {2024},
    url     = {https://openreview.net/forum?id=07N9jCfIE4},
    note    = {under review}
}
```