Update on 2018/12/06. Provided a model trained on the VOC and SBD datasets.

Update on 2018/11/24. Released the newest version of the code, which fixes some previous issues and adds support for new backbones and multi-GPU training. For the previous code, please see the previous branch.

- Support different backbones

- Support VOC, SBD, Cityscapes and COCO datasets

- Multi-GPU training

| Backbone | Train/eval output stride | mIoU | Pretrained Model |
|---|---|---|---|
| ResNet | 16/16 | 78.43% | google drive |
| MobileNet | 16/16 | 70.81% | google drive |
| DRN | 16/16 | 78.87% | google drive |
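For readers unfamiliar with the metric in the table, the following is a minimal sketch of how mean intersection-over-union (mIoU) is commonly computed from a confusion matrix. It is not the evaluator used to produce the numbers above. The function names, the treatment of label 255 as an ignore label, and the choice to skip classes that never occur are all assumptions made for illustration.

```python
def confusion_matrix(gt, pred, num_classes):
    """Count pixels: rows are ground-truth classes, columns are predictions."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for g, p in zip(gt, pred):
        # Assumption: labels outside [0, num_classes) (e.g. 255) are ignored.
        if 0 <= g < num_classes:
            matrix[g][p] += 1
    return matrix


def mean_iou(matrix):
    """Average per-class IoU = TP / (TP + FP + FN) over classes that occur."""
    n = len(matrix)
    ious = []
    for c in range(n):
        tp = matrix[c][c]
        fn = sum(matrix[c]) - tp                        # missed pixels of class c
        fp = sum(matrix[r][c] for r in range(n)) - tp   # pixels wrongly labeled c
        denom = tp + fp + fn
        if denom > 0:  # assumption: skip classes absent from both gt and pred
            ious.append(tp / denom)
    return sum(ious) / len(ious) if ious else 0.0


# Toy example with flattened label maps for 3 classes.
gt = [0, 0, 1, 1, 2, 255]
pred = [0, 1, 1, 1, 2, 0]
print(f"mIoU: {mean_iou(confusion_matrix(gt, pred, 3)):.2%}")  # mIoU: 72.22%
```

In the toy example the per-class IoUs are 1/2, 2/3 and 1, so the mean is 13/18.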

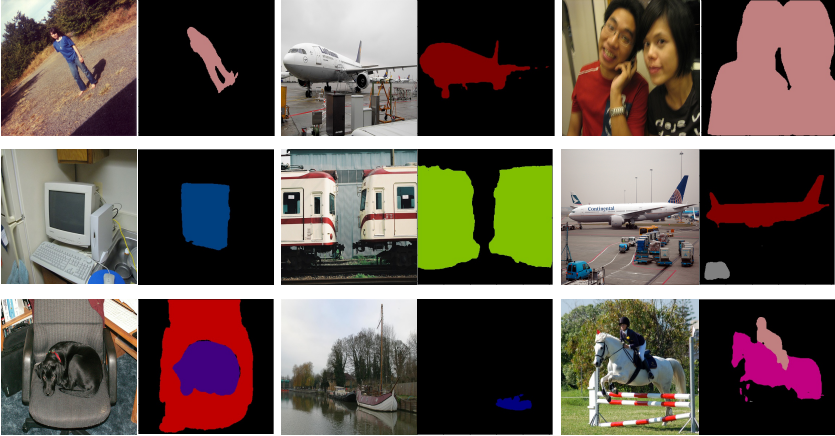

This is a PyTorch (0.4.1) implementation of DeepLab-V3-Plus. It can use Modified Aligned Xception and ResNet as backbones. Currently, we train DeepLab V3 Plus on the Pascal VOC 2012, SBD and Cityscapes datasets.

The code was tested with Anaconda and Python 3.6. After installing the Anaconda environment:

- Clone the repo:

      git clone https://github.com/jfzhang95/pytorch-deeplab-xception.git
      cd pytorch-deeplab-xception

- Install dependencies:

  For the PyTorch dependency, see pytorch.org for more details.

  For custom dependencies:

      pip install matplotlib pillow tensorboardX tqdm
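After installation, a quick check like the one below may help confirm that the packages are visible to the active environment. This is a sketch and not part of the repository. The helper name `missing_modules` is hypothetical, and the list uses import names, which differ from the pip names in two cases: `pillow` is imported as `PIL`, and PyTorch as `torch`.

```python
import importlib.util

# Import names for the dependencies listed above (pillow -> PIL, PyTorch -> torch).
REQUIRED_MODULES = ["torch", "matplotlib", "PIL", "tensorboardX", "tqdm"]


def missing_modules(names):
    """Return the module names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All dependencies are importable.")
```

The check only looks the modules up without importing them, so it does not verify versions or CUDA availability.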

Follow the steps below to train your model:

- Configure your dataset path in mypath.py.

- Input arguments (see the full list via python train.py --help):

      usage: train.py [-h] [--backbone {resnet,xception,drn,mobilenet}]
                      [--out-stride OUT_STRIDE] [--dataset {pascal,coco,cityscapes}]
                      [--use-sbd] [--workers N] [--base-size BASE_SIZE]
                      [--crop-size CROP_SIZE] [--sync-bn SYNC_BN]
                      [--freeze-bn FREEZE_BN] [--loss-type {ce,focal}] [--epochs N]
                      [--start_epoch N] [--batch-size N] [--test-batch-size N]
                      [--use-balanced-weights] [--lr LR]
                      [--lr-scheduler {poly,step,cos}] [--momentum M]
                      [--weight-decay M] [--nesterov] [--no-cuda]
                      [--gpu-ids GPU_IDS] [--seed S] [--resume RESUME]
                      [--checkname CHECKNAME] [--ft]
                      [--eval-interval EVAL_INTERVAL] [--no-val]

- To train deeplabv3+ using the Pascal VOC dataset with ResNet as the backbone:

      bash train_voc.sh

- To train deeplabv3+ using the COCO dataset with ResNet as the backbone:

      bash train_coco.sh
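To call train.py directly instead of through the shell scripts, the flags from the usage text can be combined on one command line. The sketch below assembles such a command from a dictionary. The helper `build_train_command` is hypothetical, and the option values are illustrative only; they are not the settings used by train_voc.sh or train_coco.sh.

```python
import shlex


def build_train_command(options, script="train.py"):
    """Turn a dict of options into an argv list for train.py.

    Keys are flag names without the leading dashes. A value of True emits a
    bare switch (e.g. --use-sbd); False or None omits the flag entirely.
    """
    argv = ["python", script]
    for flag, value in options.items():
        if value is True:
            argv.append(f"--{flag}")
        elif value is False or value is None:
            continue
        else:
            argv.extend([f"--{flag}", str(value)])
    return argv


# Illustrative values only (assumptions, not the repository's defaults).
options = {
    "backbone": "resnet",
    "dataset": "pascal",
    "use-sbd": True,
    "out-stride": 16,
    "lr-scheduler": "poly",
    "epochs": 50,
    "batch-size": 8,
    "workers": 4,
    "checkname": "deeplab-resnet",
}

# Quote each argument so the printed line can be pasted into a shell.
print(" ".join(shlex.quote(arg) for arg in build_train_command(options)))
```

Flags shown with a metavar in the usage text, such as `--sync-bn SYNC_BN`, expect a value, so pass them as strings rather than as `True`.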