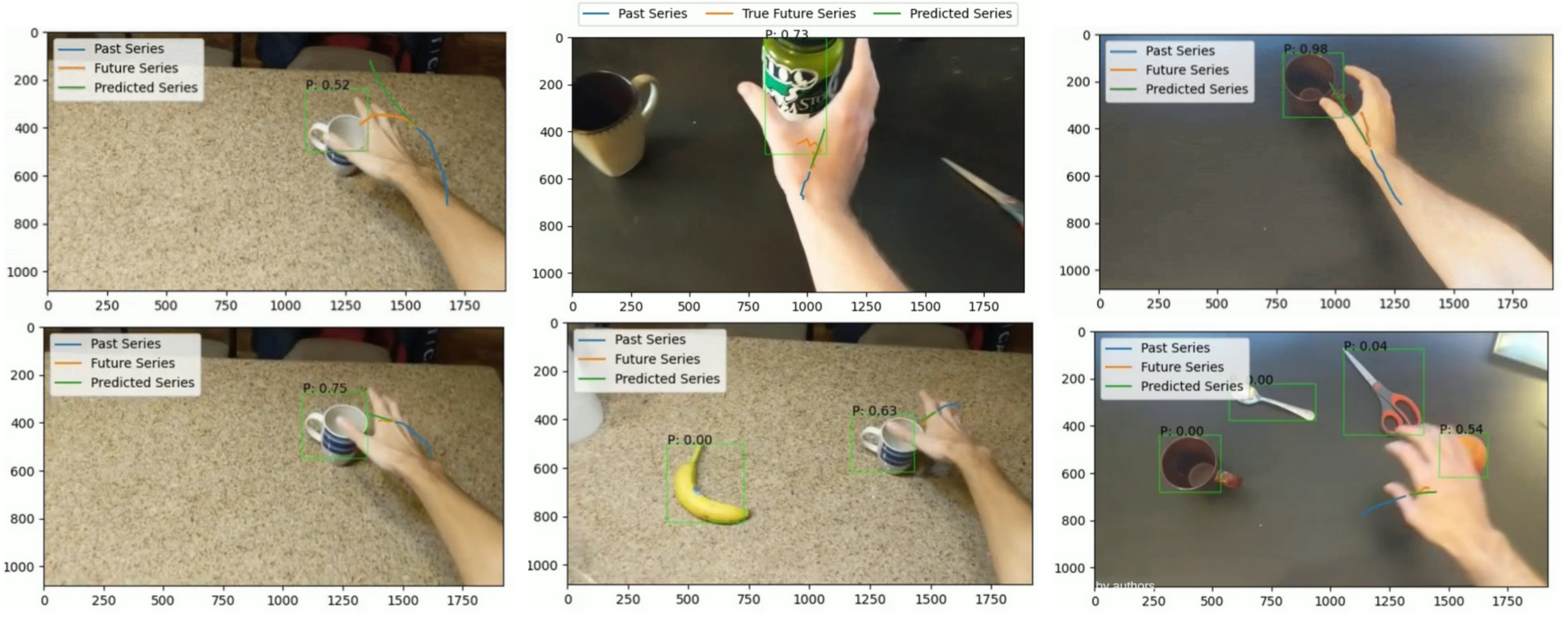

This project predicts hand-object contact from first-person video, using Mask R-CNN for object detection and an LSTM for trajectory prediction. See also the project report and project video.

The main functions can be run with `python run_on_video/run_on_video.py`.
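To give a rough feel for how the two stages fit together, here is a minimal, dependency-free sketch of the data flow: per-frame bounding boxes go in, a future hand position comes out, and contact is flagged if that position falls on the object. It is not the code in this repository. The function names (`center`, `extrapolate`, `predict_contact`), the `steps` and `margin` parameters, and the box format are all hypothetical, and a simple constant-velocity extrapolation stands in for the LSTM. The boxes are assumed to come from a detector such as Mask R-CNN.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # assumed format: (x1, y1, x2, y2) in pixels
Point = Tuple[float, float]


def center(box: Box) -> Point:
    """Return the center point of a bounding box."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2.0, (y1 + y2) / 2.0)


def extrapolate(track: List[Point], steps: int) -> Point:
    """Constant-velocity stand-in for a learned trajectory model (NOT an LSTM).

    Uses the displacement between the last two points as the velocity
    and projects it `steps` frames into the future.
    """
    if len(track) < 2:
        return track[-1]
    (x0, y0), (x1, y1) = track[-2], track[-1]
    return (x1 + steps * (x1 - x0), y1 + steps * (y1 - y0))


def predict_contact(hand_boxes: List[Box], object_box: Box,
                    steps: int = 5, margin: float = 10.0) -> bool:
    """Flag contact if the projected hand center lands inside the object box.

    `margin` (hypothetical parameter) grows the object box on every side
    to tolerate small prediction errors.
    """
    track = [center(b) for b in hand_boxes]
    fx, fy = extrapolate(track, steps)
    x1, y1, x2, y2 = object_box
    return (x1 - margin) <= fx <= (x2 + margin) and (y1 - margin) <= fy <= (y2 + margin)


if __name__ == "__main__":
    # Hand moving right by 10 px per frame toward an object further along.
    hand = [(0.0, 0.0, 10.0, 10.0), (10.0, 0.0, 20.0, 10.0)]
    cup = (60.0, 0.0, 80.0, 10.0)
    print(predict_contact(hand, cup))
```

In a real pipeline, `extrapolate` would be replaced by the trained sequence model, and the hand and object boxes would be read from the detector output for each video frame.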