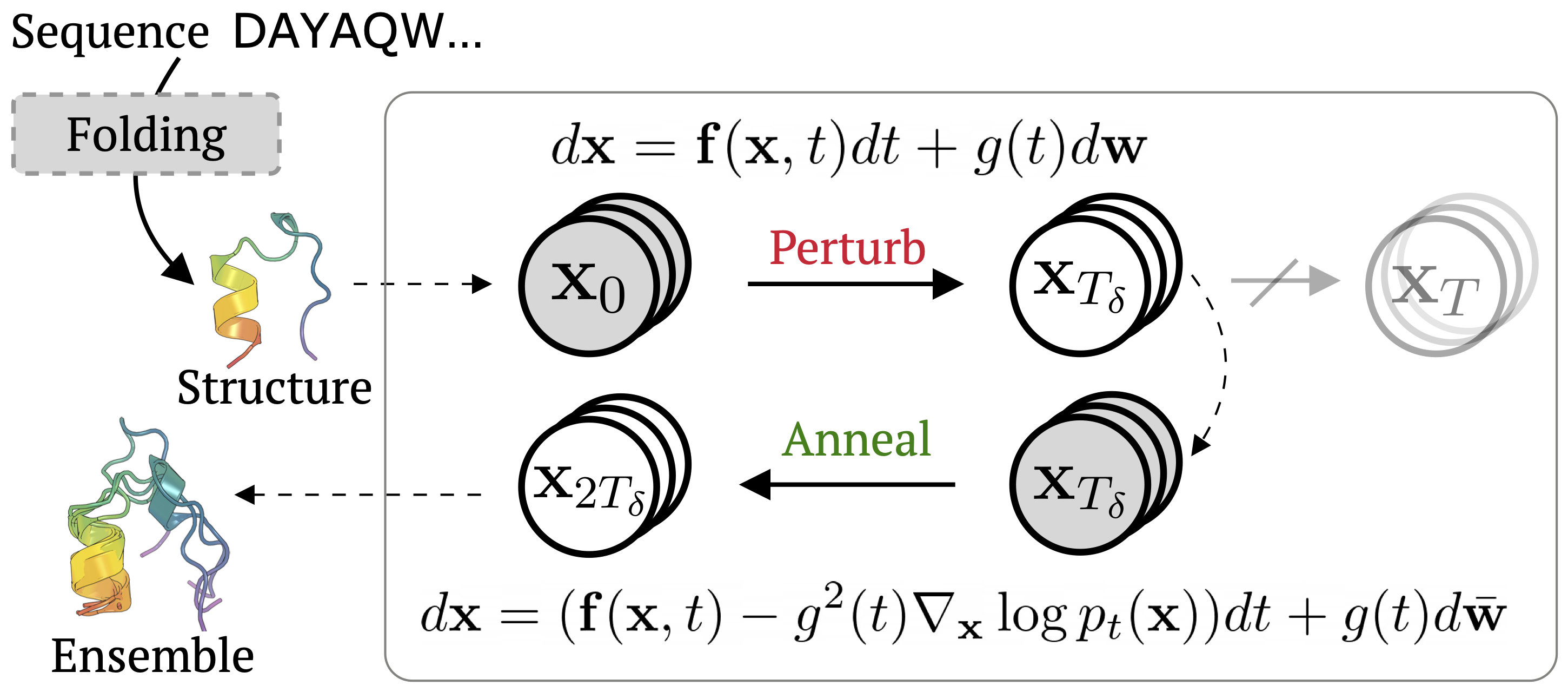

Str2Str is a score-based framework (so it can accommodate any diffusion or flow-matching architecture) for zero-shot protein conformation sampling. The idea is straightforward: given an input protein structure, which can be obtained from your favorite folding module such as AlphaFold2 or ESMFold, Str2Str perturbs the structure geometry in the corresponding conformation space and then applies a sequence of denoising steps as annealing.

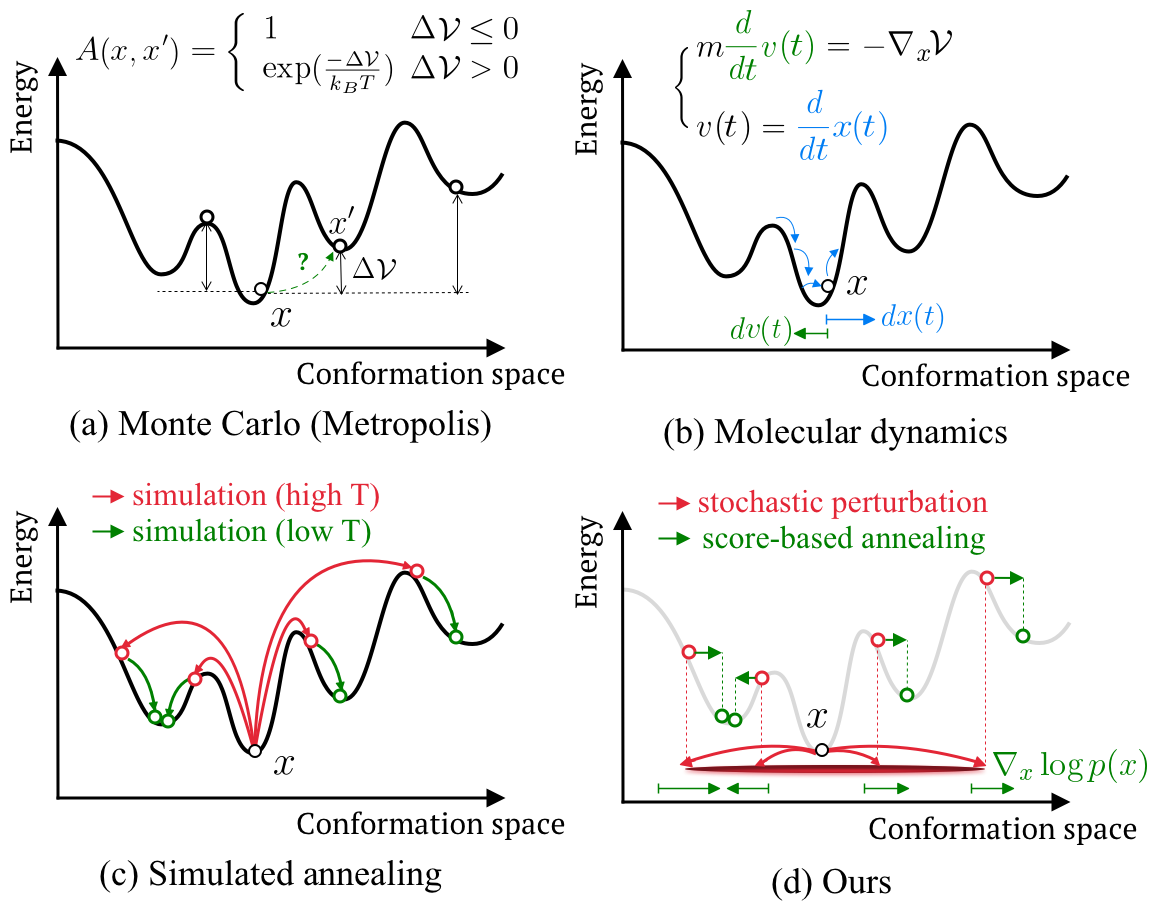

This simple yet effective sampling pipeline draws heavily on traditional conformation sampling methods. Unlike them, it is guided by a neural score network trained on the PDB database.
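The perturb-then-anneal idea can be illustrated with a toy sketch. Everything here is an assumption made for illustration: the coordinates are made up, the noise is plain Euclidean Gaussian noise, and `toy_score` is a hypothetical stand-in for the neural score network, not the model shipped in this repository.

```python
import math
import random


def perturb(coords, sigma, rng):
    """Forward step: add isotropic Gaussian noise of scale sigma to each coordinate."""
    return [[c + sigma * rng.gauss(0.0, 1.0) for c in atom] for atom in coords]


def toy_score(x, reference, sigma):
    """Hypothetical stand-in for the score network: the score of a Gaussian
    of scale sigma centred on the reference structure."""
    return [[(r - c) / sigma**2 for c, r in zip(atom, ref_atom)]
            for atom, ref_atom in zip(x, reference)]


def denoise(x, reference, sigmas, rng):
    """Reverse steps along a decreasing noise schedule (annealing)."""
    for s_hi, s_lo in zip(sigmas[:-1], sigmas[1:]):
        step = s_hi**2 - s_lo**2
        score = toy_score(x, reference, s_hi)
        # Drift along the score, then re-inject a smaller amount of noise.
        x = [[c + step * g + math.sqrt(step) * rng.gauss(0.0, 1.0)
              for c, g in zip(atom, grad)]
             for atom, grad in zip(x, score)]
    return x


def rmsd(a, b):
    """Root-mean-square deviation between two coordinate lists (no superposition)."""
    sq = sum((p - q) ** 2 for u, v in zip(a, b) for p, q in zip(u, v))
    return math.sqrt(sq / len(a))


rng = random.Random(0)
# Five made-up positions standing in for an input structure.
structure = [[float(i), 0.5 * i, 0.0] for i in range(5)]
# Geometric noise schedule from sigma = 1.0 down to 0.01 in 50 levels.
sigmas = [0.01 ** (k / 49) for k in range(50)]

noisy = perturb(structure, sigmas[0], rng)
sample = denoise(noisy, structure, sigmas, rng)
print(f"RMSD after perturbation: {rmsd(noisy, structure):.3f}")
print(f"RMSD after annealing:    {rmsd(sample, structure):.3f}")
```

With this stand-in score the chain simply relaxes back toward the input. In Str2Str the score comes from the trained network, which is what lets the denoising steps land on other plausible conformations.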

git clone https://github.com/lujiarui/Str2Str.git

cd Str2Str

# Create conda environment.

conda env create -f environment.yml

conda activate myenv

# Support import as a package.

pip install -e .

# Edit this accordingly.

DOWNLOAD_DIR=/path/to/download/directory

PROCESSED_DIR=/path/to/processing/directory

# Download.

bash scripts/pdb/download_pdb_mmcif.sh $DOWNLOAD_DIR

# Preprocess.

python scripts/pdb/preprocess.py --mmcif_dir $DOWNLOAD_DIR/pdb_mmcif/mmcif_files --output_dir $PROCESSED_DIR

The processed directory will look like:

/path/to/processing/directory

├── 00

│ └── 200l.pkl

├── 01

│ ├── 101m.pkl

│ └── 201l.pkl

├── 02

│ ├── 102l.pkl

│ └── 102m.pkl

├── 03

│ ├── 103l.pkl

│ └── 103m.pkl

└── <a metadata file>.csv
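As a quick sanity check after preprocessing, the layout above can be summarized with a few lines of Python. This is a minimal sketch that assumes only what the listing shows (one level of subdirectories holding `.pkl` files, plus a `.csv` at the top level); `summarize_processed_dir` and the file name `metadata.csv` are hypothetical and not part of the repository.

```python
import tempfile
from collections import Counter
from pathlib import Path


def summarize_processed_dir(processed_dir):
    """Return ({subdirectory: number of .pkl files}, [top-level .csv names])."""
    root = Path(processed_dir)
    per_shard = Counter(p.parent.name for p in root.glob("*/*.pkl"))
    csv_files = sorted(p.name for p in root.glob("*.csv"))
    return dict(sorted(per_shard.items())), csv_files


# Demo on a throwaway directory that mirrors part of the listing above.
with tempfile.TemporaryDirectory() as tmp:
    for shard, name in [("00", "200l"), ("01", "101m"), ("01", "201l")]:
        (Path(tmp) / shard).mkdir(exist_ok=True)
        (Path(tmp) / shard / f"{name}.pkl").touch()
    (Path(tmp) / "metadata.csv").touch()  # hypothetical metadata file name
    counts, csv_files = summarize_processed_dir(tmp)
    print(counts)
    print(csv_files)
```

Pointing `summarize_processed_dir` at `$PROCESSED_DIR` should then show whether any subdirectory came out empty or the metadata file is missing.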

(Optional) To tailor the training set, a subset of proteins can be passed to preprocess.py, such as the culled list from PISCES. To enable this, download the target list and add the --pisces flag:

PISCES_FILE=/path/to/pisces/file

python scripts/pdb/preprocess.py --mmcif_dir $DOWNLOAD_DIR/pdb_mmcif/mmcif_files --output_dir $PROCESSED_DIR --pisces $PISCES_FILE

First things first: the paths to the train / test data must be configured correctly. Rename .env.example to .env and set the top three environment variables.
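Before launching a run it can help to confirm that the paths in `.env` actually exist. The sketch below is a simplified parser, not the loader the project uses, and it assumes every entry is meant to be a directory path. `TEST_DATA` and `REFERENCE_DATA` are variable names mentioned in this README; the helper names are hypothetical.

```python
import os
import tempfile


def read_env_file(text):
    """Parse simple KEY=VALUE lines; blank lines and # comments are skipped."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip("\"'")
    return values


def unusable_paths(values):
    """Return the keys whose value is empty or is not an existing directory."""
    return sorted(k for k, v in values.items() if not v or not os.path.isdir(v))


# Demo: one variable points at a real directory, the other is left empty.
with tempfile.TemporaryDirectory() as existing_dir:
    example = f"# paths\nTEST_DATA={existing_dir}\nREFERENCE_DATA=\n"
    problems = unusable_paths(read_env_file(example))
    print(problems)
```

For a real check, read the file with `open(".env").read()` and pass the text to `read_env_file`.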

# Training w/ default configs.

python src/train.py

# w/ single GPU.

python src/train.py trainer=gpu

# w/ DDP on 2 GPUs.

python src/train.py trainer=ddp trainer.devices=2 data.batch_size=4

# w/ the chosen experiment configuration.

python src/train.py experiment=example trainer.devices=2 data.batch_size=4

# limit training epochs (100) or time (12hrs)

python src/train.py +trainer.max_epochs=100

python src/train.py +trainer.max_time="00:12:00:00"

To perform inference, a trained model checkpoint is required and can be specified using ckpt_path=/path/to/checkpoint. A pretrained PyTorch checkpoint can be accessed from Google Drive. Download it and put it into data/ckpt.

# inference (sampling & evaluation on metrics)

python src/eval.py task_name=inference

# inference (if you want to load checkpoint elsewhere)

python src/eval.py task_name=inference ckpt_path=/path/to/some/checkpoint

# sampling only (skip evaluation)

python src/eval.py task_name=inference target_dir=null

# evaluation only (skip sampling)

python src/eval.py task_name=eval_only pred_dir=/path/to/some/pdb/directory

To run evaluation, you may need to set the REFERENCE_DATA variable in the .env file. This path should point to a directory containing the reference trajectories/ensembles, one .pdb file per target, organized the same way as TEST_DATA. In the paper, the long MD trajectories from the study in this paper are used as reference.
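A small check can confirm that every test target has a matching reference file before evaluation starts. This sketch assumes that the test directory holds one file per target and that the file stem is the target name, which is a guess about the layout rather than something this README specifies; `targets_without_reference` is a hypothetical helper.

```python
import tempfile
from pathlib import Path


def targets_without_reference(test_dir, reference_dir):
    """List target names found in test_dir that lack <name>.pdb in reference_dir."""
    targets = {p.stem for p in Path(test_dir).iterdir() if p.is_file()}
    references = {p.stem for p in Path(reference_dir).glob("*.pdb")}
    return sorted(targets - references)


# Demo with two made-up targets, only one of which has a reference ensemble.
with tempfile.TemporaryDirectory() as test_dir, tempfile.TemporaryDirectory() as ref_dir:
    for name in ("targetA", "targetB"):
        (Path(test_dir) / f"{name}.pdb").touch()
    (Path(ref_dir) / "targetA.pdb").touch()
    missing = targets_without_reference(test_dir, ref_dir)
    print(missing)
```

An empty list means each target in the test set has a reference `.pdb` to be compared against.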

We also provide several useful scripts based on existing tools, which can be helpful along with Str2Str.

To run MD simulation using OpenMM:

python script/simulate.py --pdb_dir /path/to/some/pdb/directory --output_dir /path/to/output/directory

To fold (sequence -> structure) using ESMFold:

python script/fold.py -i /path/to/some/fasta/or/pdb/directory -o /path/to/output/directory

To pack (backbone atoms -> full heavy atoms) using FASPR:

python script/pack.py -i /path/to/some/pdb/directory -o /path/to/output/directory --n_cpu 4

- jiaruilu27[at]gmail[dot]com

If you find this codebase inspiring in your research, please cite the following paper. 🥰

@inproceedings{lu2024strstr,

title={Str2Str: A Score-based Framework for Zero-shot Protein Conformation Sampling},

author={Lu, Jiarui and Zhong, Bozitao and Zhang, Zuobai and Tang, Jian},

booktitle={The Twelfth International Conference on Learning Representations},

year={2024}

}