This is the code repository for a structured argument mining experiment, including the corpus and the service used for data collection.

This project is part of my MSc thesis in Computer Science at the University of Copenhagen. More details on participant recruiting, the data collection process, corpus statistics, and the experimental results obtained with a state-of-the-art ML model can be found here.

The corpus consists of 20 opinion articles from 3 publishers:

- (5) Plant Based News (PBN)

- (5) The Guardian

- (10) Altinget (original and translated to English)

The umbrella topic is sustainable diets, which covers anything related to moving towards more sustainable or plant-based food options. The most common sub-topics in the dataset are the following:

- Innovation in the food industry

- Meat alternatives

- Cooking education

- Social and cultural challenges of encouraging plant-based diets

The list of article titles and links can be found here. The extracted article contents can be found under articles, while the annotations from 3 crowdsourced workers are under annotations.

The data collection service is a web application based on the psiturk framework, which integrates with the Amazon Mechanical Turk (MTurk) platform. See psiturk for how to work with this kind of project.

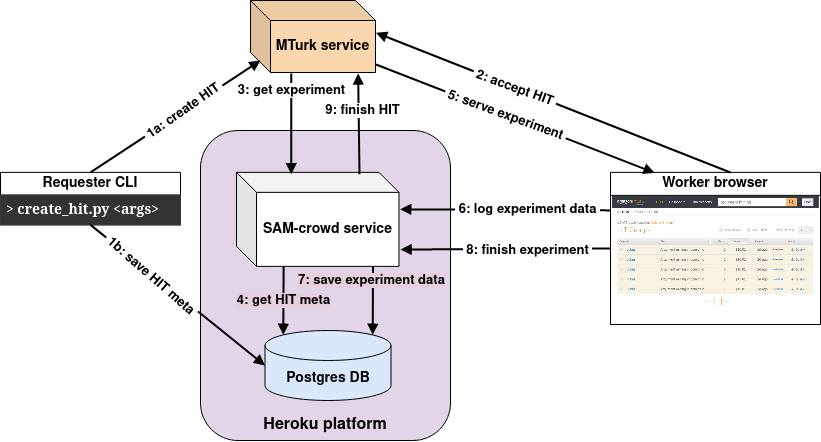

One difference is that HITs should be created via create_hit_wrapper.py as it additionally adds a feature for associating a HIT with a specific article content (it requires additional hit_configs table). The following figure illustrates the interactions between this service (SAM-crowd) and other systems.
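The association between a HIT and an article can be pictured with a small lookup table. The following is only a minimal sketch: the column names (`hit_id`, `article_id`), the helper functions, and the example identifiers are assumptions made for illustration, and the actual schema and logic in create_hit_wrapper.py may differ. It also skips the HIT creation step itself, which goes through psiturk and MTurk.

```python
import sqlite3


def init_hit_configs(conn):
    # Hypothetical schema: one row per HIT, pointing at the article it should show.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS hit_configs ("
        "hit_id TEXT PRIMARY KEY, article_id TEXT NOT NULL)"
    )


def register_hit(conn, hit_id, article_id):
    # Called after a HIT has been created, to remember which article it belongs to.
    conn.execute(
        "INSERT OR REPLACE INTO hit_configs (hit_id, article_id) VALUES (?, ?)",
        (hit_id, article_id),
    )
    conn.commit()


def article_for_hit(conn, hit_id):
    # Returns the article id for a HIT, or None if the HIT is unknown.
    row = conn.execute(
        "SELECT article_id FROM hit_configs WHERE hit_id = ?", (hit_id,)
    ).fetchone()
    return row[0] if row else None


conn = sqlite3.connect(":memory:")  # in-memory DB, for illustration only
init_hit_configs(conn)
register_hit(conn, "HIT_EXAMPLE_1", "guardian_01")  # made-up identifiers
print(article_for_hit(conn, "HIT_EXAMPLE_1"))
```

With a table like this, the web application can resolve the article to display from the HIT id it receives when a worker accepts the task.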

A slightly modified version of recogito-js is used in the UI to capture the task of structured argument mining. Four types of components are supported: MajorClaim, ClaimFor, ClaimAgainst and Premise, according to the framework proposed by Stab and Gurevych. The annotation interface demo is shown below:
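To make the four component types concrete, here is a minimal sketch of how an annotated span could be represented in Python. The `ComponentType` and `Component` names, the character-offset representation, and the example sentence are assumptions for illustration; they are not taken from the repository or from recogito-js.

```python
from dataclasses import dataclass
from enum import Enum


class ComponentType(Enum):
    # The four component types supported by the annotation interface.
    MAJOR_CLAIM = "MajorClaim"
    CLAIM_FOR = "ClaimFor"
    CLAIM_AGAINST = "ClaimAgainst"
    PREMISE = "Premise"


@dataclass(frozen=True)
class Component:
    type: ComponentType
    start: int  # character offset into the article, inclusive
    end: int    # character offset into the article, exclusive

    def text(self, article: str) -> str:
        # Recover the annotated span from the article text.
        return article[self.start:self.end]


# Made-up example text, not from the corpus.
article = "We should eat less meat. Livestock farming emits a lot of greenhouse gases."
claim = Component(ComponentType.MAJOR_CLAIM, 0, 24)
premise = Component(ComponentType.PREMISE, 25, len(article))

print(claim.type.value, "->", claim.text(article))
print(premise.type.value, "->", premise.text(article))
```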

The repository also contains some useful scripts for parsing HITs from DB dumps, converting brat format to mrp, scoring predictions, and other tasks.
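As a rough illustration of the input side of such a conversion, the sketch below reads text-bound annotations (the `T` lines) from brat standoff format. It is not the repository's script: the `TextBound` tuple, the `parse_text_bounds` function, and the sample annotation are hypothetical, and relation lines and discontinuous spans are simply skipped.

```python
from typing import List, NamedTuple


class TextBound(NamedTuple):
    id: str
    type: str
    start: int
    end: int
    text: str


def parse_text_bounds(ann: str) -> List[TextBound]:
    """Parse brat text-bound lines of the form: T1<TAB>Type start end<TAB>text."""
    spans = []
    for line in ann.splitlines():
        if not line.startswith("T"):
            continue  # relations (R...), notes (#...) etc. are ignored here
        ann_id, type_and_offsets, text = line.split("\t", 2)
        if ";" in type_and_offsets:
            continue  # discontinuous spans are out of scope for this sketch
        label, start, end = type_and_offsets.split(" ")
        spans.append(TextBound(ann_id, label, int(start), int(end), text))
    return spans


# Made-up sample annotation, not from the corpus.
sample = (
    "T1\tMajorClaim 0 24\tWe should eat less meat.\n"
    "T2\tPremise 25 75\tLivestock farming emits a lot of greenhouse gases.\n"
    "R1\tsupports Arg1:T2 Arg2:T1\n"
)
for span in parse_text_bounds(sample):
    print(span.id, span.type, span.start, span.end)
```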

There are also annotation guidelines for general use (any interface) and an interactive version specifically tailored for this project (under templates/guidelines).

Reference annotations and explanations used for participant screening/training are under prep.