Tsinghua University

This is the code for the paper: CFDBench: A Large-Scale Benchmark for Machine Learning Methods in Fluid Dynamics.

CFDBench is the first large-scale benchmark for evaluating machine learning methods in fluid dynamics with varied boundary conditions (BCs), physical properties, and domain geometries. It consists of four classic problems in computational fluid dynamics (CFD), with many varying operating parameters, making it perfect for testing the inference-time generalization ability of surrogate models. Such generalizability is essential for avoiding expensive re-training when applying surrogate models to new problems.

Main download links:

Interpolated data (~13.4GB):

Raw data (~460GB):

- 🤗HuggingFace

- Baidu Drive (百度网盘) (提取码: cfd4)

You likely do not need the raw data if you are just trying to evaluate and/or train neural networks on our data.

The directory generation-code contains the code for creating the mesh (ICEM code) and the schema code for batch generation in ANSYS Fluent.

This part takes a lot of time, and you are better off using our generated data instead.

After generating data with numerical algorithms, it is then interpolated to a grid of 64x64. The raw data before interpolation is very large; the link below is the interpolated data.

Main download link: [click here]

Contains 4 problems:

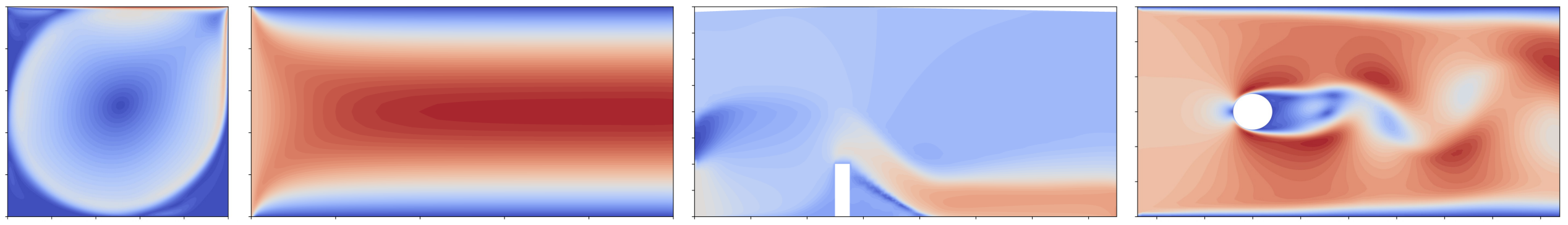

cavity: Lid-driven cavity flowtube: Flow through a circular tubedam: Flow over a damcylinder: Flow around a cylinder

The cylinder flow is separated into three files because the file size exceeds the upload limit.

Each dataset includes 3 subsets, corresponding to changing BCs, domain geometries, and physical properties.

The directory tree for the datasets:

▼ cavity/

▼ bc/

▼ case0000/

▼ u.npy

▼ v.npy

► case0001/

► geo/

► prop/

► tube/

► dam/

► cylinder/

The actual data for each velocity field is stored in u.npy and v.npy.

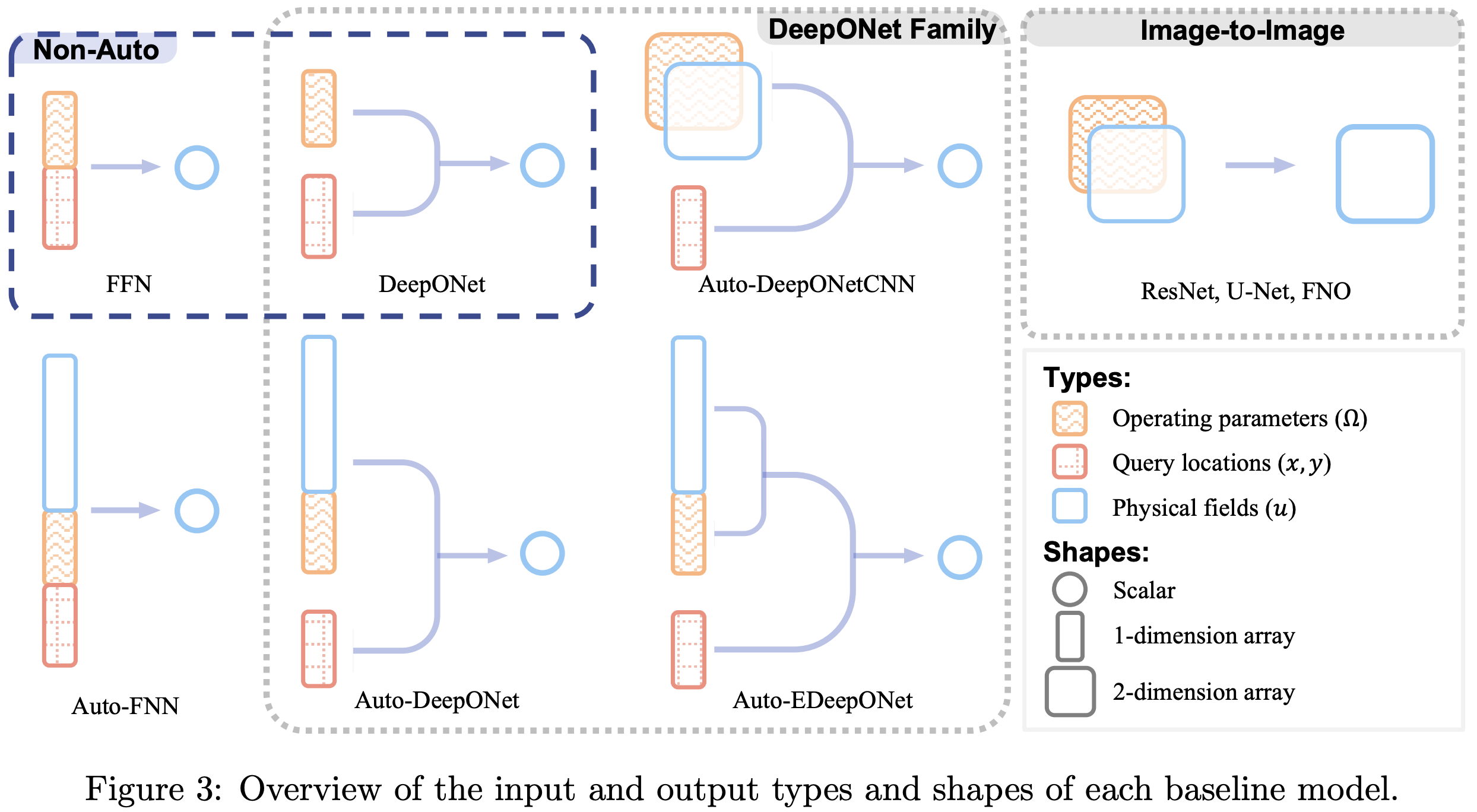

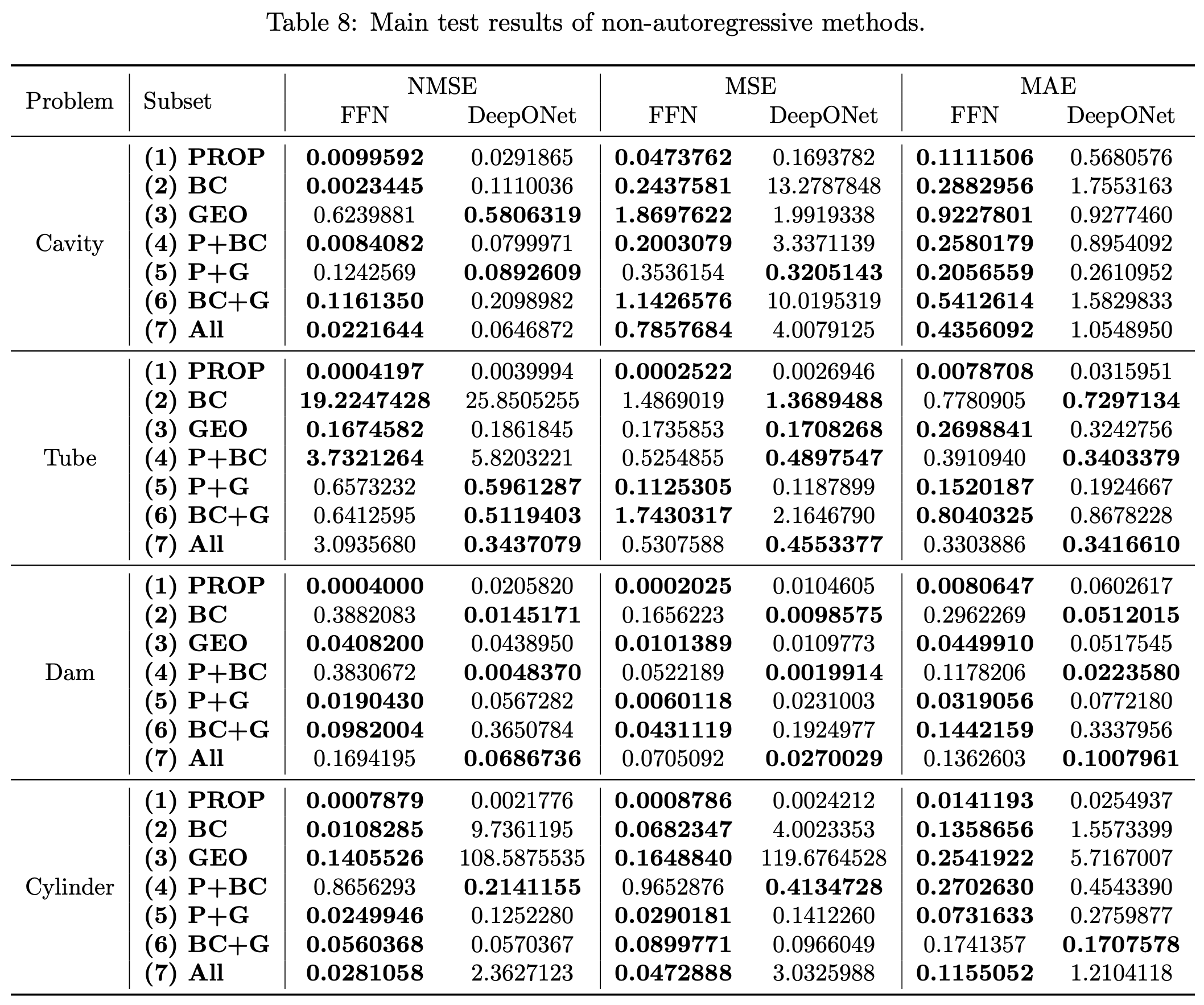

The basic types of models are autoregressive and non-autoregressive:

-

Autoregressive:

- Auto-FFN

- Auto-DeepONet

- Auto-EDeepONet

- Auto-DeepONetCNN

- ResNet

- U-Net

- FNO

-

Non-autoregressive

- FFN

- DeepONet

The implementation of the models is located in src/models

Tested on:

- PyTorch 1.13.3+cu117

- Python 3.9.0

Make sure you have access to CUDA GPU, then setup the environment using

pip install -r requirementsMove the downloaded data into a data directory next to src directory, such that the directory

looks like:

▼ data/

▼ cavity/

▼ bc/

▼ geo/

▼ prop/

► tube/

► dam/

► cylinder/

► generation-code/

► src/

.gitignore

README.md

In the src directory, run train.py or train_auto.py to train non-autoregressive or autoregressive models respectively. Specify the model with --model, it must be one of the following:

| Model | Value for --model |

Script |

|---|---|---|

| Non-autoregrssive FFN | ffn |

train.py |

| Non-autoregressive DeepONet | deeponet |

train.py |

| Autoregressive Auto-FFN | auto_ffn |

train_auto.py |

| Autoregressive Auto-DeepONet | auto_deeponet |

train_auto.py |

| Autoregressive Auto-EDeepONet | auto_edeeponet |

train_auto.py |

| Autoregressive Auto-DeepONetCNN | auto_deeponet_cnn |

train_auto.py |

| Autoregressive ResNet | resnet |

train_auto.py |

| Autoregressive U-Net | unet |

train_auto.py |

| Autoregressive FNO | fno |

train_auto.py |

For example, run FNO on the cavity flow subset with all cases:

python train_auto.py --model fno --data cavity_prop_bc_geoor, run DeepONet on the dam flow PROP + GEO subset:

python train.py --model deeponet --data dam_prop_geoResults will be saved to result/ directory by default, but can be customized with the --output_dir argument.

For more options, such as model hyperparameters, run python train.py -h or python train_auto.py -h.

Set --mode test when executing train.py or train_auto.py.

See the Results section in the paper. Reduce the batch size if you run out of VRAM.

Our code is highly extensible and modular, and it is very easy to add new datasets or models.

To add a new model, simply create a class that inherits one of the following base models:

CfdModel: If your model is nonautoregressiveAutoCfdModel: If your model is autoregressive

Then depending on which base model, you have to implement just 2 or 3 methods in addition to the model architecture itself.

- Nonautoregressive:

forward,generate_one. - Autoregressive:

forward,generate_one, andgenerate_many.

Then, if your model requires new hyperparameters, add them to the argument parsed in args.py.

Finally, you should create add a new elif in for the instantiation of your model. For autoregressive models, change init_model in utils_auto.py as follows.

def init_model(args: Args) -> AutoCfdModel:

# ...

elif args.model == "your_model_name":

model = YourModeClass(

in_chan=args.in_chan,

out_chan=args.out_chan,

n_case_params=n_case_params,

loss_fn=loss_fn,

some_arg=args.some_arg,

# ... more arguments for your model. (or you can just pass `args`)

).cuda()

return modelFor nonautoregressive models, change init_model in train.py in a similar manner.

You just have to implement a new subclass for CfdDataset or CfdAutoDataset in dataset/base.py. Like any PyTorch Dataset, it needs to implement __getitem__ and __len__. But for multi-step inference, it also has to load features into a member attribute named all_features which should be a list of Tensor or NumPy arrays.

Then, add a new elif in get_dataset in dataset/__init__.py for the instantiation of your data.

If you find this code useful, please cite our paper:

@article{CFDBench,

title={CFDBench: A Large-Scale Benchmark for Machine Learning Methods in Fluid Dynamics},

author={Yining, Luo and Yingfa, Chen and Zhen, Zhang},

url={https://arxiv.org/abs/2310.05963},

year={2023}

}