Luo, Yan and Wong, Yongkang and Kankanhalli, Mohan S and Zhao, Qi

This repository contains the code for the continual learning task. For the classification task, please refer to the congruency repository.

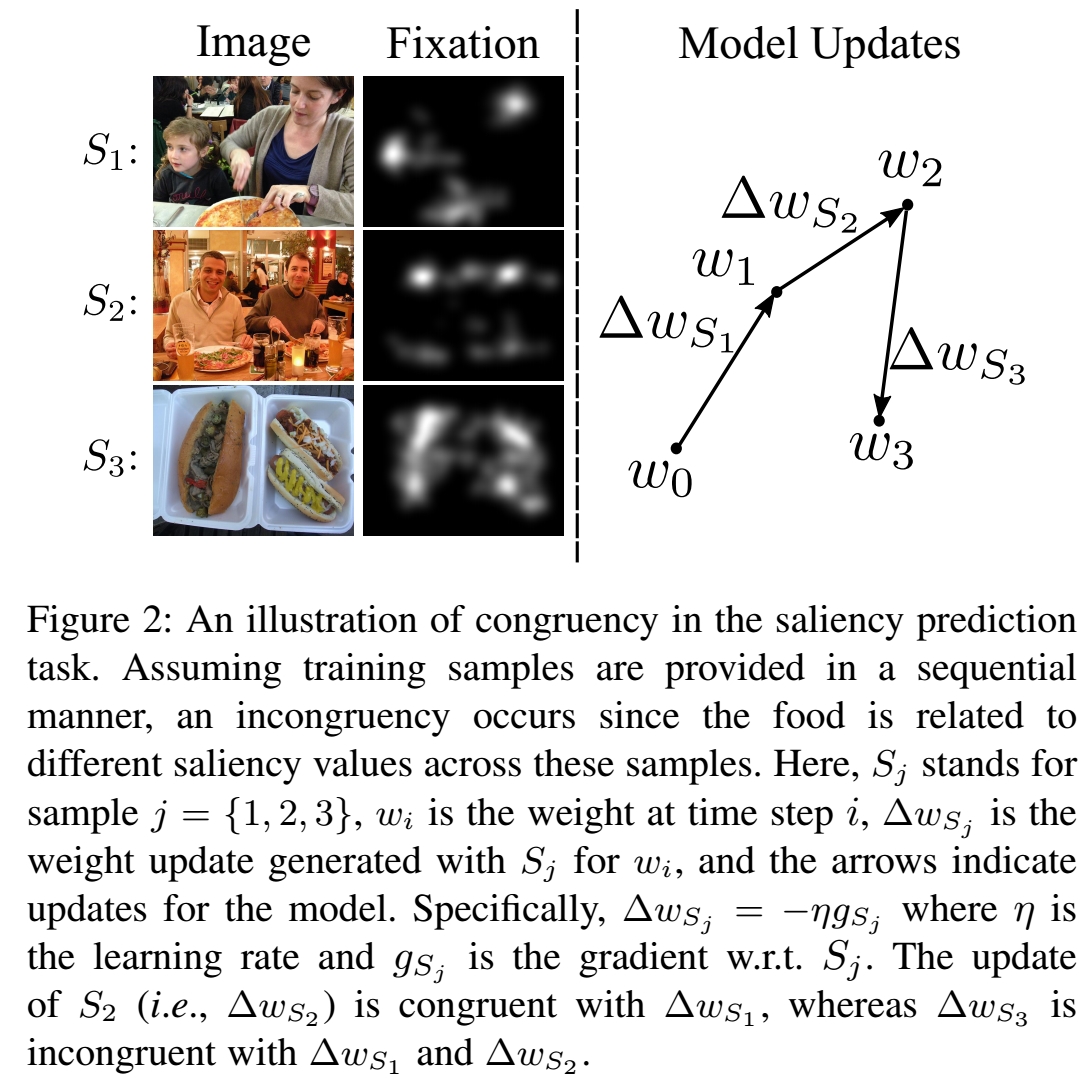

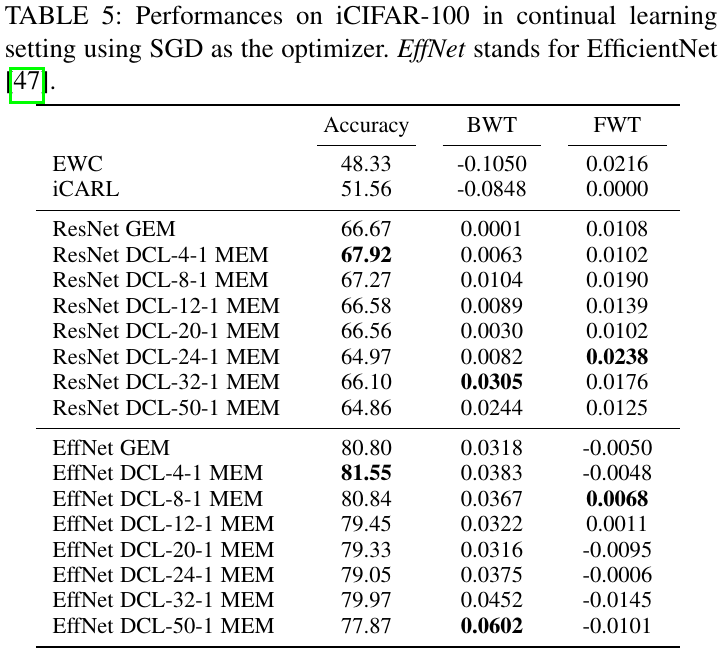

DCL (arXiv) studies the agreement between learned knowledge and new information during the learning process. The code is built upon PyTorch and GEM, and has been tested under Ubuntu 16.04 LTS with Python 3.6. EfficientNet baselines are also included.
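As a rough illustration of what "agreement" between two update directions can mean, the sketch below computes the cosine similarity of two flat vectors. This is an assumption made for illustration only, not necessarily the measure used in the paper or in this repository, and the function name `cosine_agreement` is hypothetical.

```python
import math


def cosine_agreement(u, v):
    """Cosine similarity of two equal-length vectors (hypothetical helper).

    Returns a value in [-1, 1]: 1 means the directions coincide,
    0 means they are orthogonal, -1 means they are opposite.
    A zero vector has no direction, so 0.0 is returned in that case.
    """
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)
```

In a training loop, `u` and `v` could be flattened gradients from two different batches or tasks. A negative value would indicate that the new update points away from the earlier one.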

- PyTorch 0.4.1, e.g.,

conda install pytorch=0.4.1 cuda80 -c pytorch # for CUDA 8.0

conda install pytorch=0.4.1 cuda90 -c pytorch # for CUDA 9.0

To use EfficientNet as the baseline model, PyTorch 1.1.0+ is required, e.g.,

conda install pytorch==1.1.0 torchvision==0.3.0 cudatoolkit=10.0 -c pytorch

- torchvision 0.2.1+, e.g.,

pip install torchvision==0.2.1

- quadprog, i.e.,

pip install msgpack

pip install Cython

pip install quadprog

- EfficientNet (optional), i.e.,

pip install efficientnet_pytorch

- TensorboardX (optional)

pip install tensorboardX==1.2

To run the experiments on MNIST-R, MNIST-P, and iCIFAR-100, execute the script run_experiments.sh:

./run_experiments.sh

The pre-trained models, i.e., EfficientNet-B1 with respect to various DCL effective windows, and the corresponding training logs and performance are available at the shared drive continual_pretrained.
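Before launching the experiments, it can help to confirm that the dependencies listed above are importable. The following is a minimal sketch using only the standard library. The helper names (`missing_modules`, `version_tuple`, `supports_efficientnet`) are hypothetical and not part of this repository, and the module names are assumed to be the usual import names of the packages installed above.

```python
import importlib.util

# Import names assumed for the packages listed in the requirements.
REQUIRED = ["torch", "torchvision", "quadprog"]
OPTIONAL = ["efficientnet_pytorch", "tensorboardX"]


def missing_modules(names):
    """Return the module names that cannot be found on the current Python path."""
    return [name for name in names if importlib.util.find_spec(name) is None]


def version_tuple(version):
    """Convert a version string such as '1.1.0+cu100' into (major, minor, patch)."""
    parts = []
    for piece in version.split("+")[0].split("."):
        if not piece.isdigit():
            break  # stop at suffixes such as 'post2' or '0a0'
        parts.append(int(piece))
    while len(parts) < 3:
        parts.append(0)  # pad short versions like '1.1'
    return tuple(parts[:3])


def supports_efficientnet(torch_version):
    """True if the given PyTorch version meets the 1.1.0+ requirement stated above."""
    return version_tuple(torch_version) >= (1, 1, 0)


if __name__ == "__main__":
    print("missing required modules:", missing_modules(REQUIRED))
    print("missing optional modules:", missing_modules(OPTIONAL))
```

Once PyTorch is installed, `supports_efficientnet(torch.__version__)` indicates whether the EfficientNet baseline can be used with the installed version.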

If you find this work or the code useful in your research, please consider citing:

@article{Luo_DCL_2019,

author={Y. {Luo} and Y. {Wong} and M. {Kankanhalli} and Q. {Zhao}},

journal={IEEE Transactions on Pattern Analysis and Machine Intelligence},

title={Direction Concentration Learning: Enhancing Congruency in Machine Learning},

year={2019},

pages={1-1},

doi={10.1109/TPAMI.2019.2963387},

ISSN={1939-3539}

}

luoxx648 at umn.edu

Any discussions, suggestions, and questions are welcome!