Featured in Robotics Weekly and Mithi's Robotics Coursework!

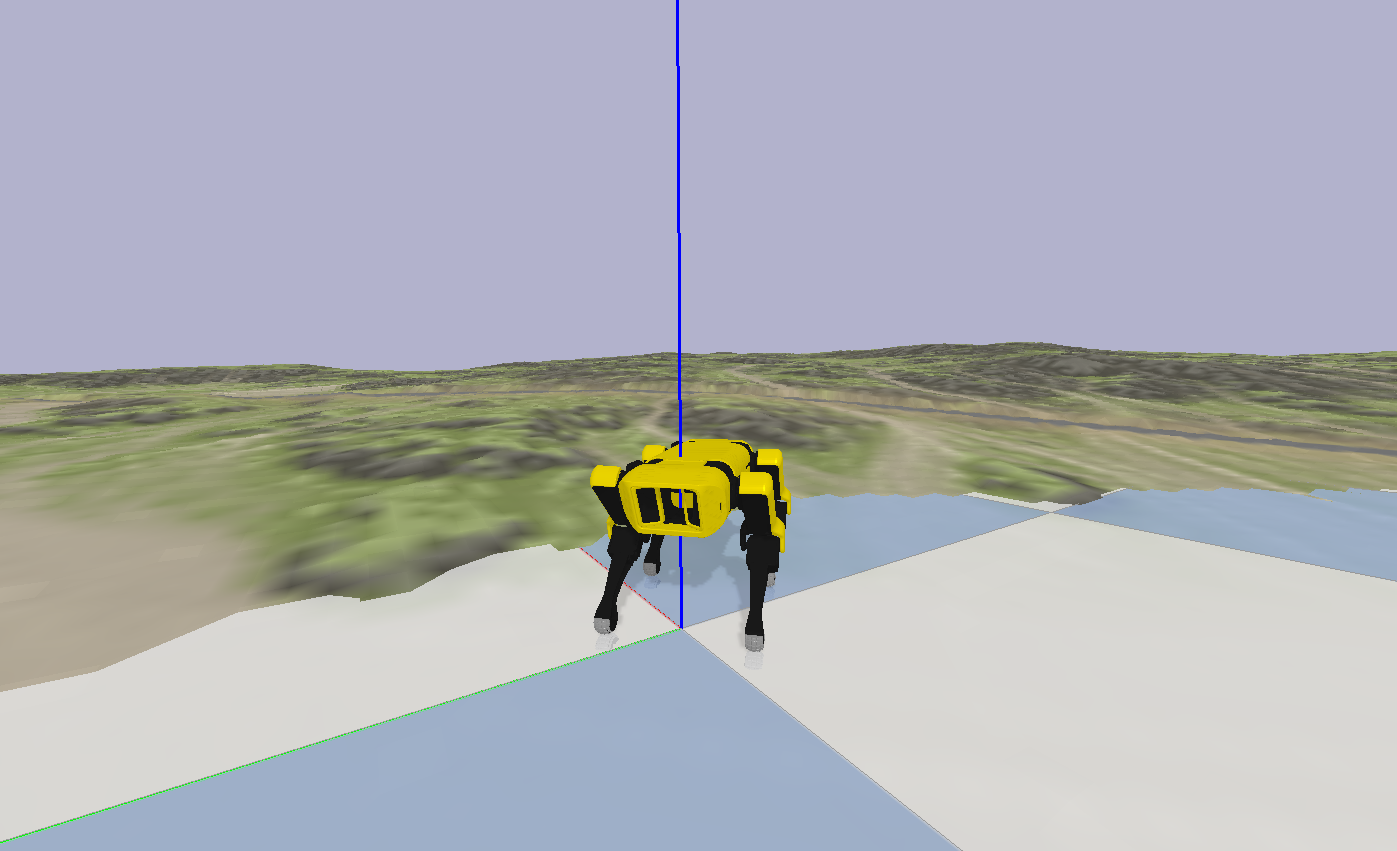

As part of the Spot Micro community, I saw the need for a reliable and versatile simulator for those who wanted to try things out without risking damage to their robots. To that end, I developed my own in Pybullet which can also be used as a Gym environment for Reinforcement Learning tasks.

You'll notice that there are gifs of the original SpotMicro as well a new version designed for added real world fidelity. The default branch simulates the new version, but you can work with SpotMicro in the spotmicroai branch of this repo. The new version also has a more reliable URDF, with more accurate inertial calculations.

If you don't need a Gym environment, that's okay too! env_tester.py works without RL or Gym, it is designed to accept any gait implementation, and provides a GUI for testing it out! In my case, I've implemented a 12-point Bezier gait.

Read the docs!

- Motivation

- Kinematics

- D^2 Gait Modulation with Bezier Curves

- Gait

- How To Run

- Hardware

- Citing Spot Mini Mini

- Credits

Body manipulation with leg IK and body IK descriptions.

I'm using this platform to validate a novel Reinforcement Learning method for locomotion by myself and my co-authors Matthew L. Elwin, Ian Abraham, and Todd D. Murphey. Instead of learning a gait from scratch, we propose using an existing scheme as a baseline over which we optimize via training. The method is called D^2 Gait Modulation with Bezier Curves. To learn more, visit our website

During training, simple Proportional controller was employed to deliver yaw correction as would be the case if the robot were teleoperated or able to localize itself. For increased policy robustness, the terrain, link masses and foot frictions are randomized on each environment reset.

Here, the action space is 14-dimensional, consisting of Clearance Height (1), Body Height (1), and Foot XYZ Residual modulations (12). Clearance Height is treated through an exponential filter (alpha = 0.7), but all other actions are processed directly. These results were trained with only 149 epochs.

Before training, the robot falls almost immediately:

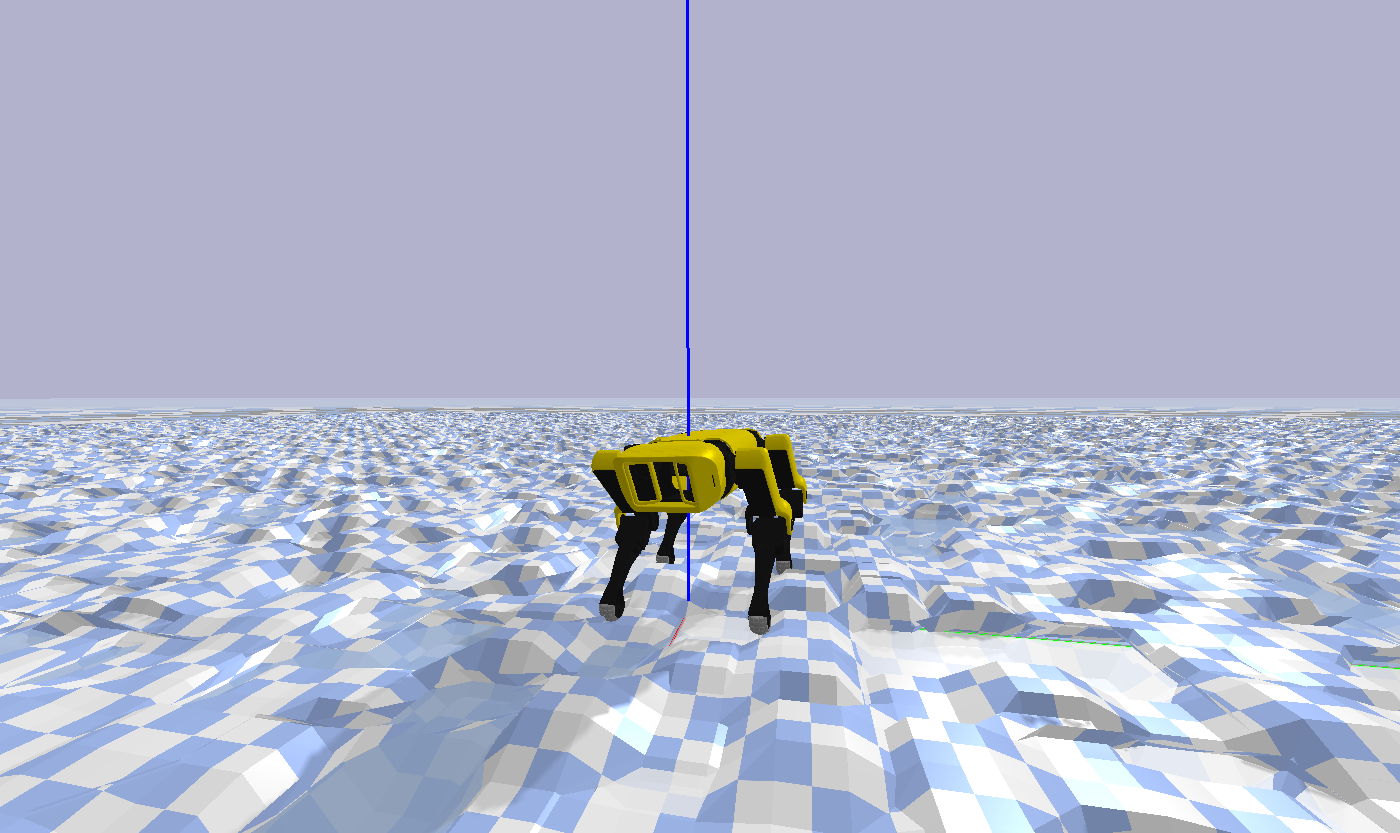

After training, the robot successfully navigates the terrain:

What's even better, is that the same agent #149 is able to adapt to unseen commands, making high-level system integration straightforward. Here it is being teleoperated using Forward, Lateral, and Yaw commands.

Here's an example of the new URDF being teleoperated with a trained agent on 2x higher terrain:

Here are some experimental results where the agent is on the right.

Open-Loop Gait using 12-Point Bezier Curves based on MIT Cheetah Paper with modifications for low step velocity discontinuity.

Forward and Lateral Motion:

Yaw logic based on 4-wheel steering car:

- ROS Melodic

- Gazebo

- Pytorch

- Pybullet

- Gym

- OpenCV

- Scipy

- Numpy

First, you're going to need a joystick (okay, not really, but it's more fun if you have one).

Setting Up The Joystick:

- Get Number (you will see something like jsX):

ls /dev/input/ - Make available to ROS:

sudo chmod a+rw /dev/input/jsX - Make sure

<param name="dev" type="string" value="/dev/input/jsX"/>matches your setup in the launchfile

Then simply: roslaunch mini_ros spot_move.launch

You can ignore this msg: [ERROR] [1591631380.406690714]: Couldn't open joystick force feedback! It just means your controller is missing some functionality, but this package doesn't use it.

Controls:

Assuming you have a Logitech Gamepad F310:

A: switch between stepping and RPY

X: E-STOP (engage and disengage)

Stepping Mode:

Right Stick Up/Down: Step LengthRight Stick Left/Right: Lateral FractionLeft Stick Up/Down: Robot HeightLeft Stick Left/Right: Yaw RateArrow Pad Up/Down(DISCRETE): Step HeightArrow Pad Left/Right(DISCRETE): Step DepthBottom Right/Left Bumpers: Step Velocity (modulate)Top Right/Left Bumpers: reset all to default

Viewing Mode:

Right Stick Up/Down: PitchRight Stick Left/Right: RollLeft Stick Up/Down: Robot HeightLeft Stick Left/Right: Yaw

Changing Step Velocity while moving forward:

Changing Step Length while moving forward:

Yaw In Place: Slightly push the Right Stick forward while pushing the Left Stick maximally in either direction:

If you don't have a joystick, go to spot_bullet/src and do ./env_tester.py. A Pybullet sim will open up for you with the same controls you would have on the joystick, except each is on its own scrollbar. You may also use the following optional arguments:

-h, --help show this help message and exit

-hf, --HeightField Use HeightField

-r, --DebugRack Put Spot on an Elevated Rack

-p, --DebugPath Draw Spot's Foot Path

-ay, --AutoYaw Automatically Adjust Spot's Yaw

-ar, --AutoReset Automatically Reset Environment When Spot Falls

Go to spot_bullet/src and do ./spot_ars.py. Models will be saved every 9th episode to spot_bullet/models/. I will add some more arguments in the future to give you finer control of the heightfield mesh from the command line.

Go to spot_bullet/src and do ./spot_ars_eval.py. You may also use the following optional arguments. Note that if you don't use the -a argument, no agent will be loaded, so you will be using the open-loop policy. For example, if you enter 149 after -a, you will see the first successful policy, but if you enter 2229, you will see a much more aggressive policy.

-h, --help show this help message and exit

-hf, --HeightField Use HeightField

-r, --DebugRack Put Spot on an Elevated Rack

-p, --DebugPath Draw Spot's Foot Path

-gui, --GUI Control The Robot Yourself With a GUI

-a, --AgentNum Agent Number To Load (followed by number)

Navigate to spotmicro/heightfield.py and take a look at useProgrammatic and useTerrainFromPNG (you can play around with the mesh scales for each) to experiment with different terrains. Make sure that the spotBezierEnv instance has height_field=True in env_tester.py and spot_pybullet_interface depending on whether you're using the joystick/ROS version. The same goes for the RL environments. Note: these were adapted from the pybullet source code.

useTerrainFromPNG

useProgrammatic

With this terrain type, I programmed in a randomizer that triggers upon reset. This, along with the body randomizer from Pybullet's Minitaur increases your RL Policy's robustness.

@software{spotminimini2020github,

author = {Maurice Rahme and Ian Abraham and Matthew Elwin and Todd Murphey},

title = {SpotMiniMini: Pybullet Gym Environment for Gait Modulation with Bezier Curves},

url = {https://github.com/moribots/spot_mini_mini},

version = {2.1.0},

year = {2020},

}

-

Original Spot Design and CAD files: Spot Micro AI Community

-

Collaborator on

OpenQuadrupeddesign, including mechanical parts, custom PCB, and Teensy interface: Adham Elarabawy -

OpenAI Gym and Heightfield Interface: Minitaur Environment

-

Deprecated URDF for earlier development: Rex Gym