Inspired by Net2Net and network distillation.

Contributors: @luzai, @miibotree

[TOC]

- keras 2.0

- backend: tensorflow 1.1

- image_data_format: 'channels_last'

@luzai

- A single model may be trained multiple times, so a good event logger is needed (CSV or tfevents)

- Logger for model mutations and training events

- Dataset switcher (mnist, cifar100 or others)

- write doc

- Name

- Vis
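The logger items above could start from something like the following minimal sketch. It uses only the standard library; the `EventLogger` class, its field names, and the sample values are hypothetical and not part of this repo. Per-epoch metrics could instead come from Keras' built-in `CSVLogger` or `TensorBoard` callbacks.

```python
import csv
import io
import time


class EventLogger:
    """Append mutation and training events to a CSV stream (hypothetical helper)."""

    FIELDS = ["time", "model", "kind", "detail", "val_acc"]

    def __init__(self, stream):
        self._writer = csv.DictWriter(stream, fieldnames=self.FIELDS)
        self._writer.writeheader()

    def log(self, model, kind, detail="", val_acc=None):
        # kind is "mutation" or "train"; val_acc stays empty for mutations.
        self._writer.writerow({
            "time": round(time.time(), 3),
            "model": model,
            "kind": kind,
            "detail": detail,
            "val_acc": "" if val_acc is None else val_acc,
        })


buf = io.StringIO()  # in real use, pass an open file instead
logger = EventLogger(buf)
# The accuracy values below are made-up placeholders, not measured results.
logger.log("vgg8", "train", detail="epochs=20", val_acc=0.5)
logger.log("vgg8", "mutation", detail="net2wider conv3")
logger.log("vgg8", "train", detail="epochs=20", val_acc=0.6)

# Read the log back to check that one model accumulated several events.
rows = list(csv.DictReader(io.StringIO(buf.getvalue())))
```

Keeping mutations and training runs in one table makes it easy to line up each accuracy change with the mutation that preceded it.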

@miibotree

- The widening ratio is proportional to depth

- Finish the add-group function, and randomly select the group number from [2, 3, 4, 5]

- Test different ways to initialize the group layer's weights (for example, use identity)

- Skip layers use the add operation, with a 1 x 1 conv to keep the channel number the same

- The probability of adding a MaxPooling layer is inversely proportional to depth (constrains the number of MaxPooling layers)

- Adding a conv with a MaxPooling layer drops accuracy by a large margin; how to fix this? (MaxPooling should be added early)

- Probabilities of the 5 mutation operations

- To improve val acc and avoid overfitting:

  - try regularizers (kernel regularizer, output regularizer) (Yes, effective)

  - dropout (Yes, effective)

  - BN

- Group layer's wider operation

- Distributed / parallel training

- Mayavi

- Summary at running time

- Use kd loss

  - Train (65770): hard label + transfer label; Test (10000): cifar-10 hard label

  - use the functional API rather than the Sequential model

  - hard label + soft-target (tune hyper-parameter T)

-

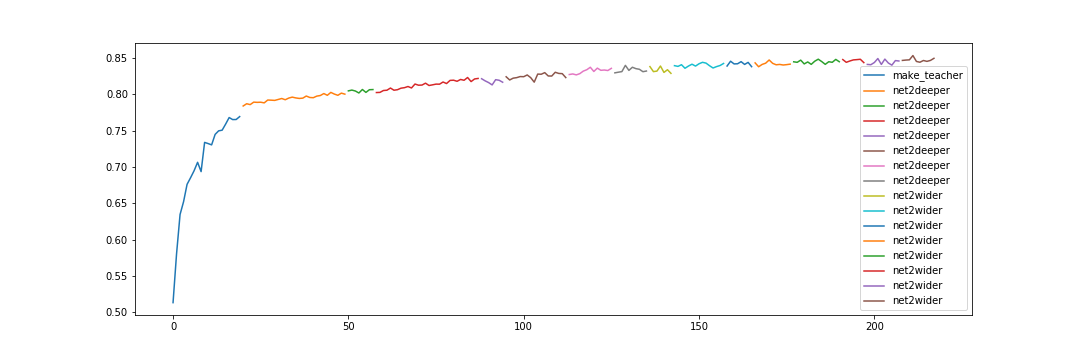

experiments on comparing two type models: Final Accuracies are similar.

- Deeper(Different orders) -> Wider: Accuracy grows stable; Train fast

- Wider -> Deeper

- write `net2branch` function, imitating the inception module

- net2deeper for pooling and dropout layers

- net2wider for conv layer on the kernel size dimension, i.e., 3x3 to 5x5

- Grow Architecture to VGG-like

- Exp: what accuracy can vgg-19 achieve

- Fixed: slight downgrade of net2wider conv8

- compare on accuracy and training time

| Vgg16 | Vgg8 | Vgg8+Dropout | Vgg8-net2net (no dropout) |
|---|---|---|---|
| 10.00% | 83.56% | 90.05% | 87.45% |
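The net2wider operation behind the Vgg8-net2net column can be illustrated on a pair of dense layers. This is a minimal NumPy sketch of the function-preserving widening idea from Net2Net, not this repo's implementation; `net2wider_dense` and `forward` are hypothetical names, and the conv case applies the same replication along the channel axis.

```python
import numpy as np


def net2wider_dense(w1, b1, w2, new_width, rng):
    """Widen a hidden layer from w1.shape[1] units to new_width units.

    w1: (n_in, n_hidden), b1: (n_hidden,), w2: (n_hidden, n_out).
    """
    n_hidden = w1.shape[1]
    assert new_width >= n_hidden
    # Keep every original unit, then replicate randomly chosen ones.
    extra = rng.randint(0, n_hidden, size=new_width - n_hidden)
    mapping = np.concatenate([np.arange(n_hidden), extra])
    # Number of copies of each original unit after widening.
    counts = np.bincount(mapping, minlength=n_hidden)
    new_w1 = w1[:, mapping]
    new_b1 = b1[mapping]
    # Divide outgoing weights by the copy count so the summed output is unchanged.
    new_w2 = w2[mapping, :] / counts[mapping][:, None]
    return new_w1, new_b1, new_w2


def forward(x, w1, b1, w2):
    # dense -> ReLU -> dense
    return np.maximum(x @ w1 + b1, 0.0) @ w2


rng = np.random.RandomState(0)
w1, b1, w2 = rng.randn(4, 3), rng.randn(3), rng.randn(3, 2)
x = rng.randn(5, 4)
nw1, nb1, nw2 = net2wider_dense(w1, b1, w2, new_width=6, rng=rng)

# The widened network computes the same function as the original one.
same = np.allclose(forward(x, w1, b1, w2), forward(x, nw1, nb1, nw2))
```

Because the widened model starts from the same function, any accuracy change right after the mutation comes from further training rather than from the mutation itself.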

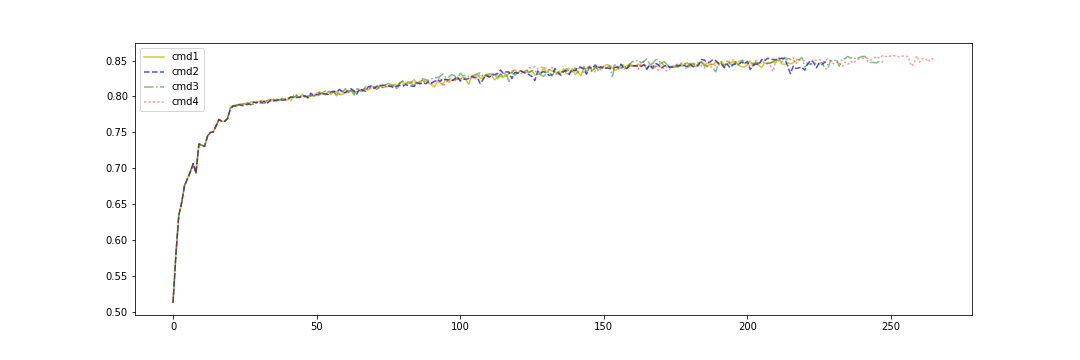

Figure 1 Vgg8-net2net(no dropout, epoch 0-250)


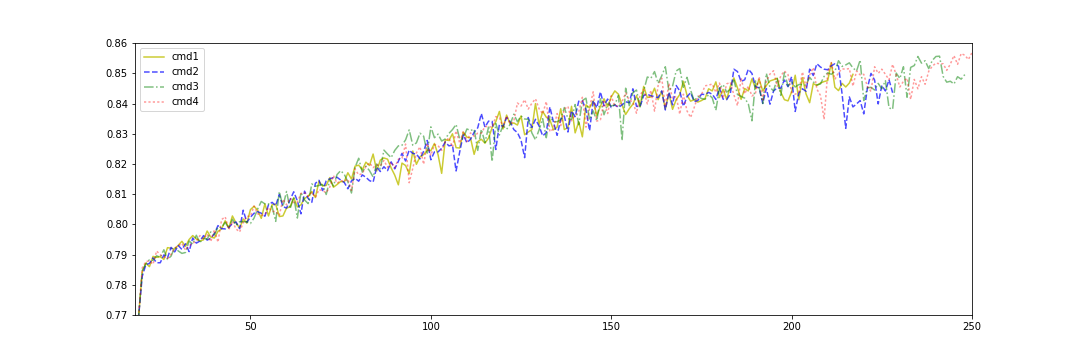

Figure 2 Vgg8-net2net(no dropout, epoch 20-250)



Figure 3 Vgg-net2net(cmd1, in different stage)
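For the planned net2wider on the kernel size dimension (3x3 to 5x5), one function-preserving option is to zero-pad the kernel symmetrically. The sketch below assumes stride 1, 'same' padding, and the channels-last kernel layout `(kh, kw, c_in, c_out)`; `widen_kernel` and `conv2d_same` are hypothetical helpers written only for this illustration.

```python
import numpy as np


def widen_kernel(kernel, new_size):
    """Zero-pad a (kh, kw, c_in, c_out) kernel to (new_size, new_size, c_in, c_out)."""
    kh, kw = kernel.shape[:2]
    assert (new_size - kh) % 2 == 0 and (new_size - kw) % 2 == 0
    ph, pw = (new_size - kh) // 2, (new_size - kw) // 2
    return np.pad(kernel, ((ph, ph), (pw, pw), (0, 0), (0, 0)), mode="constant")


def conv2d_same(x, kernel):
    """Naive stride-1 'same' cross-correlation; x is (h, w, c_in), channels last."""
    kh, kw, _, c_out = kernel.shape
    ph, pw = kh // 2, kw // 2
    xp = np.pad(x, ((ph, ph), (pw, pw), (0, 0)), mode="constant")
    out = np.zeros(x.shape[:2] + (c_out,))
    for i in range(x.shape[0]):
        for j in range(x.shape[1]):
            patch = xp[i:i + kh, j:j + kw, :]
            # Contract (kh, kw, c_in) of the patch against the kernel.
            out[i, j] = np.tensordot(patch, kernel, axes=3)
    return out


rng = np.random.RandomState(0)
x = rng.randn(6, 6, 2)
k3 = rng.randn(3, 3, 2, 4)
k5 = widen_kernel(k3, 5)

# The 5x5 kernel reproduces the 3x3 output because the new border is all zeros.
preserved = np.allclose(conv2d_same(x, k3), conv2d_same(x, k5))
```

The new border weights start at zero and only become useful after further training, so it may help to add a little noise to them if they learn too slowly.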

- kd loss

  - [x] soft-target

- transfer data

- experiments on randomly generated models

- generate random feasible commands

- check the completeness, run code in parallel

- find some rules: Gradient explosion happens when fc is too deep

- Data-augmentation is better than Dropout
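The kd loss with hard label + soft-target mentioned above can be sketched for a single example as follows. This follows the usual distillation formulation (temperature-softened softmax, soft term scaled by T^2); the weighting `alpha`, the default `T`, and all function names are assumptions for illustration, not values taken from this repo.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax over a list of logits, softened by the given temperature."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(target, pred, eps=1e-12):
    return -sum(t * math.log(p + eps) for t, p in zip(target, pred))


def kd_loss(student_logits, teacher_logits, hard_label, T=4.0, alpha=0.5):
    """alpha * CE(hard label, student) + (1 - alpha) * T^2 * CE(teacher_T, student_T)."""
    n = len(student_logits)
    one_hot = [1.0 if i == hard_label else 0.0 for i in range(n)]
    hard = cross_entropy(one_hot, softmax(student_logits))
    soft = cross_entropy(softmax(teacher_logits, T), softmax(student_logits, T))
    return alpha * hard + (1.0 - alpha) * T * T * soft


# Made-up logits for a 3-class toy example.
student = [2.0, 0.5, -1.0]
teacher = [3.0, 1.0, -2.0]
loss = kd_loss(student, teacher, hard_label=0)
```

A larger T flattens both distributions, so the student sees more of the teacher's relative preferences among the wrong classes; T and `alpha` are the hyper-parameters to tune.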