Yuan Wu1*, Zhiqiang Yan1*†, Zhengxue Wang1, Xiang Li2, Le Hui3, Jian Yang1†

*equal contribution

†corresponding author

1Nanjing University of Science and Technology

2Nankai University

3Northwestern Polytechnical University

[Paper] [Project Page]

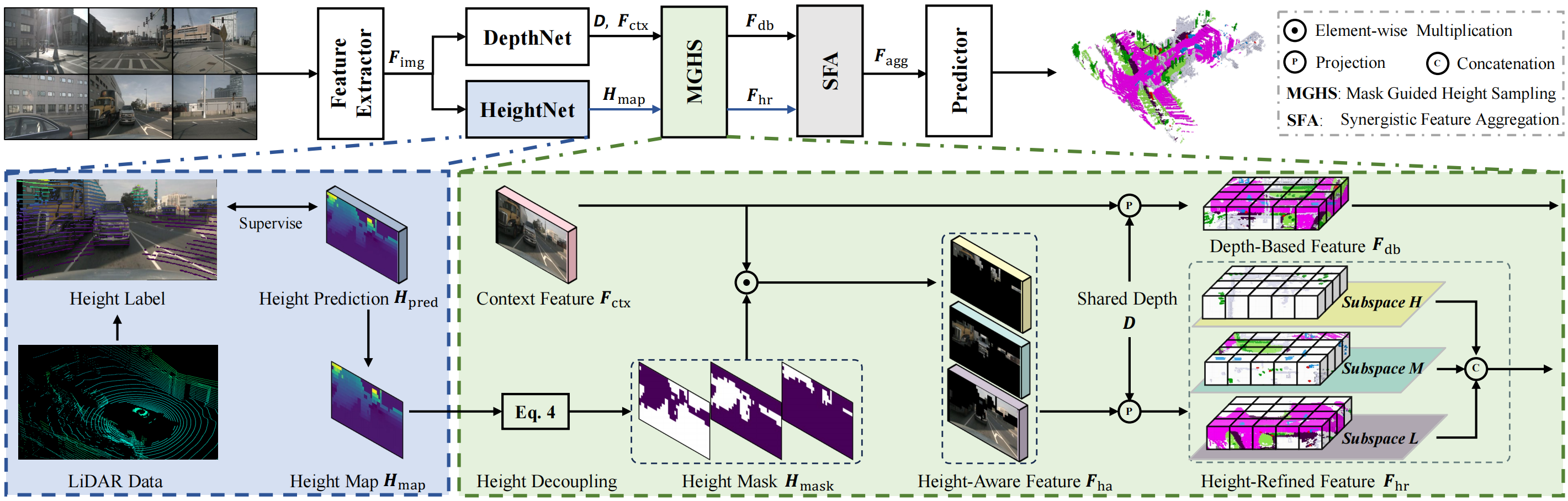

DHD comprises a feature extractor, HeightNet, DepthNet, MGHS, SFA, and a predictor. The feature extractor first extracts 2D image features. DepthNet then produces context features and a depth prediction, and HeightNet generates a height map that gives the height value at each pixel. Next, MGHS integrates the outputs of HeightNet and DepthNet to obtain a height-refined feature and a depth-based feature. Finally, these dual features are fed into SFA to produce the aggregated feature, which serves as the input to the predictor.
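The data flow above can be illustrated with a toy NumPy sketch that only tracks tensor shapes and the masking idea. It assumes a BEVDet-style "lift" (outer product of a per-pixel depth distribution with the context feature) and fixed height intervals for turning the height map into binary masks. The function names, the intervals, and the fixed blend standing in for SFA are all hypothetical and are not taken from the DHD code.

```python
import numpy as np


def softmax(x, axis=0):
    """Numerically stable softmax along `axis`."""
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


def height_masks(height_map, intervals):
    """Hypothetical: turn an (H, W) height map into K binary masks, one per [lo, hi) interval."""
    return np.stack(
        [(height_map >= lo) & (height_map < hi) for lo, hi in intervals]
    ).astype(np.float32)  # (K, H, W)


def lift(depth_prob, context):
    """Assumed BEVDet-style lift: outer product of depth distribution and context feature."""
    return depth_prob[:, None] * context[None]  # (D, C, H, W)


def toy_forward(context, depth_logits, height_map, intervals, alpha=0.5):
    """Toy stand-in for the DepthNet -> MGHS -> SFA path (not the real modules)."""
    depth_prob = softmax(depth_logits, axis=0)      # depth distribution per pixel, (D, H, W)
    depth_feat = lift(depth_prob, context)          # depth-based feature, (D, C, H, W)
    masks = height_masks(height_map, intervals)     # masks from the height map, (K, H, W)
    # Apply every mask to the depth-based feature, then merge the K slices:
    # pixels whose height falls outside all intervals end up zeroed.
    height_feat = (masks[:, None, None] * depth_feat[None]).sum(axis=0)
    # Hypothetical stand-in for SFA: a fixed convex blend of the two features.
    fused = alpha * height_feat + (1.0 - alpha) * depth_feat
    return depth_feat, height_feat, fused
```

In the actual model each stage is a learned network operating on multi-camera features; the sketch only shows how a per-pixel height map can gate a depth-lifted feature before the two branches are combined.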

Step 1: Prepare the environment as described in Install.

Step 2: Prepare the nuScenes dataset and generate the pkl files by running:

```shell
python tools/create_data_bevdet.py
```

The final directory structure of the 'data' folder should look like:

```
└── data
    └── nuscenes
        ├── v1.0-trainval
        ├── sweeps
        ├── samples
        ├── gts
        ├── bevdetv2-nuscenes_infos_train.pkl
        └── bevdetv2-nuscenes_infos_val.pkl
```

Then train and test the model:

```shell
# train:
tools/dist_train.sh ${config} ${num_gpu}

# train DHD-S:
tools/dist_train.sh projects/configs/DHD/DHD-S.py 4

# test:
tools/dist_test.sh ${config} ${ckpt} ${num_gpu} --eval mAP

# test DHD-S:
tools/dist_test.sh projects/configs/DHD/DHD-S.py model_weight/DHD-S.pth 4 --eval mAP
```

The pretrained weights for the 'ckpts' folder can be found here.
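Before launching training, it can help to confirm that `data/nuscenes` matches the directory tree from Step 2. The following is a minimal sketch, assuming it is run from the repository root; it only checks that each expected entry exists and does not validate the contents of the pkl files or the dataset itself.

```python
from pathlib import Path

# Entries listed in the expected data/nuscenes tree from Step 2.
EXPECTED = [
    "v1.0-trainval",
    "sweeps",
    "samples",
    "gts",
    "bevdetv2-nuscenes_infos_train.pkl",
    "bevdetv2-nuscenes_infos_val.pkl",
]


def missing_entries(root="data/nuscenes"):
    """Return the expected entries that do not exist under `root` (presence check only)."""
    base = Path(root)
    return [name for name in EXPECTED if not (base / name).exists()]


if __name__ == "__main__":
    missing = missing_entries()
    if missing:
        print("Missing under data/nuscenes:", ", ".join(missing))
    else:
        print("data/nuscenes layout looks complete.")
```

If the two pkl files are reported as missing, `tools/create_data_bevdet.py` has most likely not been run yet.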

All DHD model weights can be found here.
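Once a weight file has been downloaded, the test command shown earlier can also be assembled from Python, for example when evaluating several checkpoints in a loop. This is a minimal sketch: `dist_train_cmd`, `dist_test_cmd` and the `--run` switch are hypothetical helpers rather than part of the repository, and the script assumes it is launched from the repository root so that the `tools/*.sh` paths resolve.

```python
import shlex
import subprocess
import sys


def dist_train_cmd(config, num_gpu):
    """Hypothetical helper: argument list for tools/dist_train.sh ${config} ${num_gpu}."""
    return ["tools/dist_train.sh", config, str(num_gpu)]


def dist_test_cmd(config, ckpt, num_gpu, metric="mAP"):
    """Hypothetical helper: argument list for tools/dist_test.sh ${config} ${ckpt} ${num_gpu} --eval mAP."""
    return ["tools/dist_test.sh", config, ckpt, str(num_gpu), "--eval", metric]


if __name__ == "__main__":
    cmd = dist_test_cmd("projects/configs/DHD/DHD-S.py", "model_weight/DHD-S.pth", 4)
    print(shlex.join(cmd))  # show the shell command that would be executed
    if "--run" in sys.argv:  # hypothetical switch: only launch when explicitly requested
        subprocess.run(cmd, check=True)
```

Building an argument list instead of a single string avoids shell quoting issues when config or checkpoint paths contain spaces.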