This repository contains the source code for our ICASSP 2022 paper "Pseudo strong labels for large scale weakly supervised audio tagging".

Highlights:

- State-of-the-art on the balanced Audioset subset.

- Simple MobileNetV2 model that does not need an expensive GPU to run.

- Quick training, since only 60h of balanced Audioset is required.

- Achieves an mAP of roughly 35.48, usable for most real-world applications.

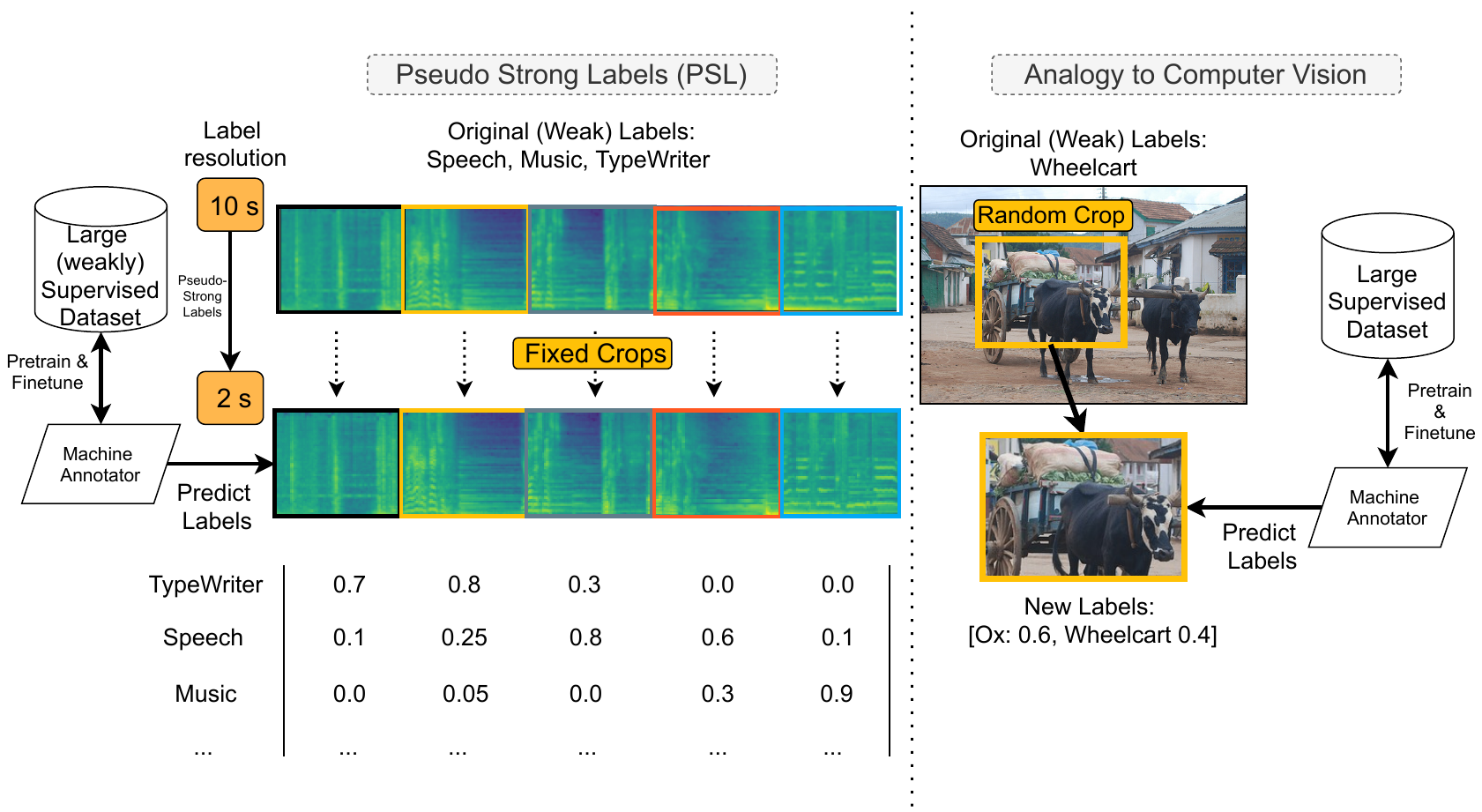

The aim of this work is to show that by adding automatic supervision on a fixed scale from a machine annotator (or teacher) to a student model, performance gains can be observed on Audioset.

Specifically, our method outperforms other approaches in the literature on the balanced subset of Audioset, while using a rather simple MobileNetV2 architecture.

| Method | Label | mAP | d' |
|---|---|---|---|
| Baseline (Weak) | Weak | 17.69 | 1.994 |
| PSL-10s (Proposed) | PSL-10s | 31.13 | 2.454 |
| PSL-5s (Proposed) | PSL-5s | 34.11 | 2.549 |
| PSL-2s (Proposed) | PSL-2s | 35.48 | 2.588 |
| CNN14 [@Kong2020d] | Weak | 27.80 | 1.850 |
| EfficientNet-B0 [@gong2021psla] | Weak | 33.50 | - |
| EfficientNet-B2 [@gong2021psla] | Weak | 34.06 | - |
| ResNet-50 [@gong2021psla] | Weak | 31.80 | - |
| AST [@gong21b_interspeech] | Weak | 34.70 | - |

gnu-parallel is needed for the preprocessing and can be installed using conda:

    conda install parallel

If you have root rights, you can install it with your package manager instead:

    # On Arch distros
    sudo pacman -S parallel

    # On Debian
    sudo apt install parallel

Further, the download script in scripts/1_download_audioset.sh uses Proxychains to download the data. You can disable Proxychains by removing the corresponding line from the script, or configure your own Proxychains proxy.

This code has been tested using Python 3.8 on CentOS 5 and Manjaro.
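Before going further, it can help to confirm that the tools mentioned above are actually on your `PATH`. The following is a minimal sketch, not part of the repository: `have_cmd` and `check_prereqs` are hypothetical helper names, and the tool list (`parallel`, `python3`) is taken only from the requirements described here.

```shell
#!/usr/bin/env bash
# Sketch: report whether the tools named in this README are on PATH.
# Helper names are hypothetical; they do not exist in the repository.

have_cmd() {
    # Succeeds if the given command can be resolved by the shell.
    command -v "$1" >/dev/null 2>&1
}

check_prereqs() {
    local missing=0
    local tool
    for tool in parallel python3; do
        if have_cmd "$tool"; then
            echo "found:   $tool"
        else
            echo "missing: $tool"
            missing=1
        fi
    done
    # Non-zero exit status if at least one tool is missing.
    return "$missing"
}

# Example: check_prereqs || echo "install the missing tools first"
```

The function only checks for presence; it does not verify the Python version, so running `python3 --version` by hand is still worthwhile.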

To install the Python dependencies just run:

    python3 -m pip install -r requirements.txt

The structure of this repo is as follows:

    .
    ├── configs
    ├── data
    │   ├── audio
    │   │   ├── balanced
    │   │   └── eval
    │   ├── csvs
    │   └── logs
    ├── figures
    ├── scripts
    │   └── utils

If you have already downloaded Audioset, please put the raw data of the balanced and eval subsets in data/audio/balanced and data/audio/eval, respectively.

Then put balanced_train_segments.csv, eval_segments.csv and class_labels_indices.csv into data/csvs.
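The placement described above can be sketched as a small shell helper. This is an illustration under assumptions: `place_audioset` is a hypothetical function name, and the layout of your existing download (a `balanced/` and an `eval/` folder next to the three CSV files) is assumed, so adjust the source paths to match your copy.

```shell
#!/usr/bin/env bash
# Sketch: copy an existing Audioset download into the layout this repo expects.
# The source layout (balanced/, eval/, and the CSVs side by side) is an assumption.

place_audioset() {
    local src="$1"   # hypothetical: directory holding your existing download
    local repo="$2"  # root of this repository

    mkdir -p "$repo/data/audio/balanced" "$repo/data/audio/eval" "$repo/data/csvs"

    # Raw audio of the two subsets.
    cp -r "$src/balanced/." "$repo/data/audio/balanced/"
    cp -r "$src/eval/." "$repo/data/audio/eval/"

    # The three segment and label files named in this README.
    local csv
    for csv in balanced_train_segments.csv eval_segments.csv class_labels_indices.csv; do
        cp "$src/$csv" "$repo/data/csvs/"
    done
}

# Example: place_audioset /path/to/audioset .
```

Copying is the simplest option; if disk space is tight, symlinking the audio folders may be preferable, provided the later preprocessing scripts follow symlinks.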

Firstly, you need the balanced and evaluation subsets of Audioset. These can be downloaded using the following script:

    ./scripts/1_download_audioset.sh

In order to speed up IO, we pack the data into hdf5 files. This can be done by:

    ./scripts/2_prepare_data.sh

For the experiments in Table 2, run:

    ## For the 10s PSL training
    ./train_psl.sh configs/psl_balanced_chunk_10sec.yaml

    ## For the 5s PSL training
    ./train_psl.sh configs/psl_balanced_chunk_5sec.yaml

    ## For the 2s PSL training
    ./train_psl.sh configs/psl_balanced_chunk_2sec.yaml

For the experiments in Table 3, run:

    ## For the 10s PSL training
    ./train_psl.sh configs/teacher_student_chunk_10sec.yaml

    ## For the 5s PSL training
    ./train_psl.sh configs/teacher_student_chunk_5sec.yaml

    ## For the 2s PSL training
    ./train_psl.sh configs/teacher_student_chunk_2sec.yaml

Note that this repo can be easily extended to run the experiments in Table 4, i.e., using the full Audioset dataset.
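Since the config names for Tables 2 and 3 differ only in their prefix and chunk length, the three runs of each table can be chained in a loop. The sketch below is a convenience wrapper and not part of the repository: `run_all_chunks` is a hypothetical name, and the optional second argument exists only so the commands can be previewed with `echo`.

```shell
#!/usr/bin/env bash
# Sketch: run one family of configs for all three chunk lengths (10s, 5s, 2s).
# run_all_chunks is a hypothetical helper, not a script shipped with the repo.

run_all_chunks() {
    local prefix="$1"                    # psl_balanced_chunk or teacher_student_chunk
    local runner="${2:-./train_psl.sh}"  # override with "echo" for a dry run
    local seconds
    for seconds in 10 5 2; do
        # Stop at the first failing run instead of continuing silently.
        "$runner" "configs/${prefix}_${seconds}sec.yaml" || return 1
    done
}

# Example (Table 2 configs): run_all_chunks psl_balanced_chunk
# Dry run (Table 3 configs): run_all_chunks teacher_student_chunk echo
```

The runs execute sequentially, so the total time is the sum of the three trainings.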