Panoptic-PartFormer ECCV-2022 [Introduction Video], [Poster]

Xiangtai Li, Shilin Xu, Yibo Yang, Guangliang Cheng, Yunhai Tong, Dacheng Tao.

Source code and models for our ECCV-2022 paper. Our re-implementation achieves slightly better results than those in the originally submitted paper.

We found ground-truth bugs in the copy of the PPP dataset on our local machine. After fixing them, the results are higher than in the previous version. Thanks to Daan de Geus, the original author of the PPS dataset, for the reminder.

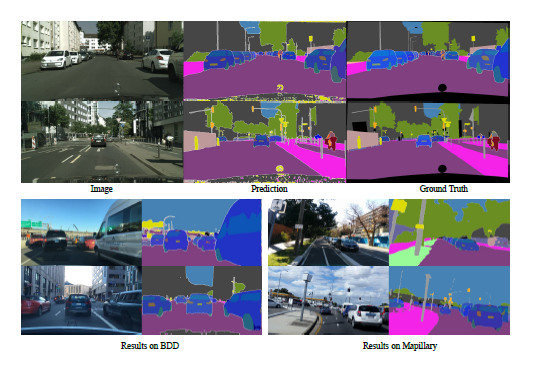

Panoptic Part Segmentation (PPS) aims to unify panoptic segmentation and part segmentation into one task. Previous work mainly uses separate approaches to handle thing, stuff, and part predictions individually, without any shared computation or task association. In this work, we aim to unify these tasks at the architectural level, designing the first end-to-end unified method, named Panoptic-PartFormer.

We model things, stuff, and parts as object queries and directly learn to optimize all three predictions as a unified mask prediction and classification problem. We design a decoupled decoder that generates the part features and the thing/stuff features separately.

It requires the following OpenMMLab packages:

- MMCV-full == v1.3.18

- MMDetection == v2.18.0

- panopticapi

The other required packages are listed in the requirement.txt file.
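Because the two OpenMMLab packages are pinned to exact versions, it can help to check the environment before training. The following is a minimal sketch, not part of the repository: it assumes the packages were installed under the usual PyPI distribution names `mmcv-full` and `mmdet`, and the helper name `check_versions` is hypothetical.

```python
from importlib import metadata

# Pinned versions taken from the list above. The distribution names
# "mmcv-full" and "mmdet" are assumed to match how the packages were installed.
REQUIRED = {"mmcv-full": "1.3.18", "mmdet": "2.18.0"}


def check_versions(required=REQUIRED, get_version=metadata.version):
    """Return {package: (expected, found)} for each mismatched or missing package."""
    problems = {}
    for name, expected in required.items():
        try:
            found = get_version(name)
        except metadata.PackageNotFoundError:
            found = None  # package is not installed at all
        if found != expected:
            problems[name] = (expected, found)
    return problems


if __name__ == "__main__":
    for name, (expected, found) in check_versions().items():
        print(f"{name}: expected {expected}, found {found or 'not installed'}")
```

An empty result means both pinned packages match; panopticapi is not checked here because no version is pinned for it.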

Prepare the data following the MMDetection conventions.

The extra annotations for the PPP dataset can be downloaded here.

For the Cityscapes Panoptic Part (CPP) and PascalContext Panoptic Part (PPP) datasets, the data structure is as follows:

data/

├── VOCdevkit # pascal context part dataset

│ ├── gt_panoptic

│ ├── labels_57part

│ ├── VOC2010

│ │ ├── JPEGImages

├── cityscapes # cityscapes panoptic part dataset

│ ├── annotations

│ │ ├── cityscapes_panoptic_{train,val}.json

│ │ ├── instancesonly_filtered_gtFine_{train,val}.json

│ │ ├── val_images.json

│ ├── gtFine

│ ├── gtFinePanopticParts

│ ├── leftImg8bit
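A quick way to confirm the layout before launching a job is to check that every entry of the tree exists. This is a minimal sketch, not part of the repository: the helper name `missing_paths` is hypothetical, the nesting is read from the tree as shown above, and `{train,val}` is expanded by hand.

```python
from pathlib import Path

# Relative paths copied from the tree above; {train,val} is expanded by hand.
EXPECTED = [
    "VOCdevkit/gt_panoptic",
    "VOCdevkit/labels_57part",
    "VOCdevkit/VOC2010/JPEGImages",
    "cityscapes/annotations/cityscapes_panoptic_train.json",
    "cityscapes/annotations/cityscapes_panoptic_val.json",
    "cityscapes/annotations/instancesonly_filtered_gtFine_train.json",
    "cityscapes/annotations/instancesonly_filtered_gtFine_val.json",
    "cityscapes/annotations/val_images.json",
    "cityscapes/gtFine",
    "cityscapes/gtFinePanopticParts",
    "cityscapes/leftImg8bit",
]


def missing_paths(root="data", expected=EXPECTED):
    """Return the expected entries that do not exist under `root`."""
    root = Path(root)
    return [rel for rel in expected if not (root / rel).exists()]


if __name__ == "__main__":
    for rel in missing_paths():
        print(f"missing: data/{rel}")
```

The check only tests for existence; it does not validate the contents of the annotation files.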

Note that the Cityscapes model results can be improved via large-crop fine-tuning; see the config city_r50_fam_ft.py.

R-50: link

Swin-base: link

R-50: link

Swin-base: link

R-50: link, PartPQ: 57.5

Swin-base: link, PartPQ: 61.9

R-50: link, PartPQ: 45.1

R-101: link, PartPQ: 45.6

Swin-base: link, PartPQ: 52.3

For a single machine with multiple GPUs, use the commands below. To reproduce the reported performance, make sure you have loaded the checkpoint correctly.

# train

sh ./tools/dist_train.sh $CONFIG $NUM_GPU

# test

sh ./tools/dist_test.sh $CONFIG $CHECKPOINT --eval panoptic part

Note: for PPP dataset training, it is better to add the --no-validate flag, because evaluation takes a long time and uses more CPU and memory. Then evaluate the model offline.
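The workflow in the note above (train without validation, then evaluate offline) can be sketched as two command lines assembled in Python. This is only an illustration: the helper names `train_cmd` and `eval_cmd` and the placeholder paths are hypothetical, and appending `--no-validate` after the GPU count assumes that `dist_train.sh` forwards extra arguments to the training script.

```python
import shlex


def train_cmd(config, num_gpu, validate=True):
    """Build the dist_train.sh command; append --no-validate when requested."""
    cmd = ["sh", "./tools/dist_train.sh", config, str(num_gpu)]
    if not validate:
        # Assumption: extra flags are passed through to the training script.
        cmd.append("--no-validate")
    return cmd


def eval_cmd(config, checkpoint, metrics=("panoptic", "part")):
    """Build the dist_test.sh command with the metrics used in this README."""
    return ["sh", "./tools/dist_test.sh", config, checkpoint, "--eval", *metrics]


if __name__ == "__main__":
    # Placeholder paths, not real files from the repository.
    print(shlex.join(train_cmd("path/to/config.py", 8, validate=False)))
    print(shlex.join(eval_cmd("path/to/config.py", "path/to/checkpoint.pth")))
```

The functions only build the argument lists; pass them to `subprocess.run` if you want to launch the jobs from Python.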

For multiple machines, we use slurm:

sh ./tools/slurm_train.sh $PARTITION $JOB_NAME $CONFIG $WORK_DIR

sh ./tools/slurm_test.sh $PARTITION $JOB_NAME $CONFIG $CHECKPOINT --eval panoptic part

- PARTITION: the slurm partition you are using

- CHECKPOINT: the path of the checkpoint downloaded from our model zoo or trained by yourself

- WORK_DIR: the working directory to save configs, logs, and checkpoints

- CONFIG: the config files under the directory configs/

- JOB_NAME: the name of the job, which is required by slurm

You can also run training and testing without slurm, either directly with mim for instance/semantic segmentation or with tools/train.py for panoptic segmentation.

For Cityscapes Panoptic Part:

python demo/image_demo.py $IMG_FILE $CONFIG $CHECKPOINT $OUT_FILE

For Pascal Panoptic Part:

python demo/image_demo.py $IMG_FILE $CONFIG $CHECKPOINT $OUT_FILE --datasetspec_path=$1 --evalspec_path=$2

We build our codebase on K-Net and mmdetection. Many thanks for their open-source code. We mainly refer to their implementation of the thing/stuff kernel (query) interaction part.

If you find this repo useful for your research, please consider citing our paper:

@article{li2022panoptic,

title={Panoptic-PartFormer: Learning a Unified Model for Panoptic Part Segmentation},

author={Li, Xiangtai and Xu, Shilin and Yang, Yibo and Cheng, Guangliang and Tong, Yunhai and Tao, Dacheng},

journal={ECCV},

year={2022}

}

MIT license