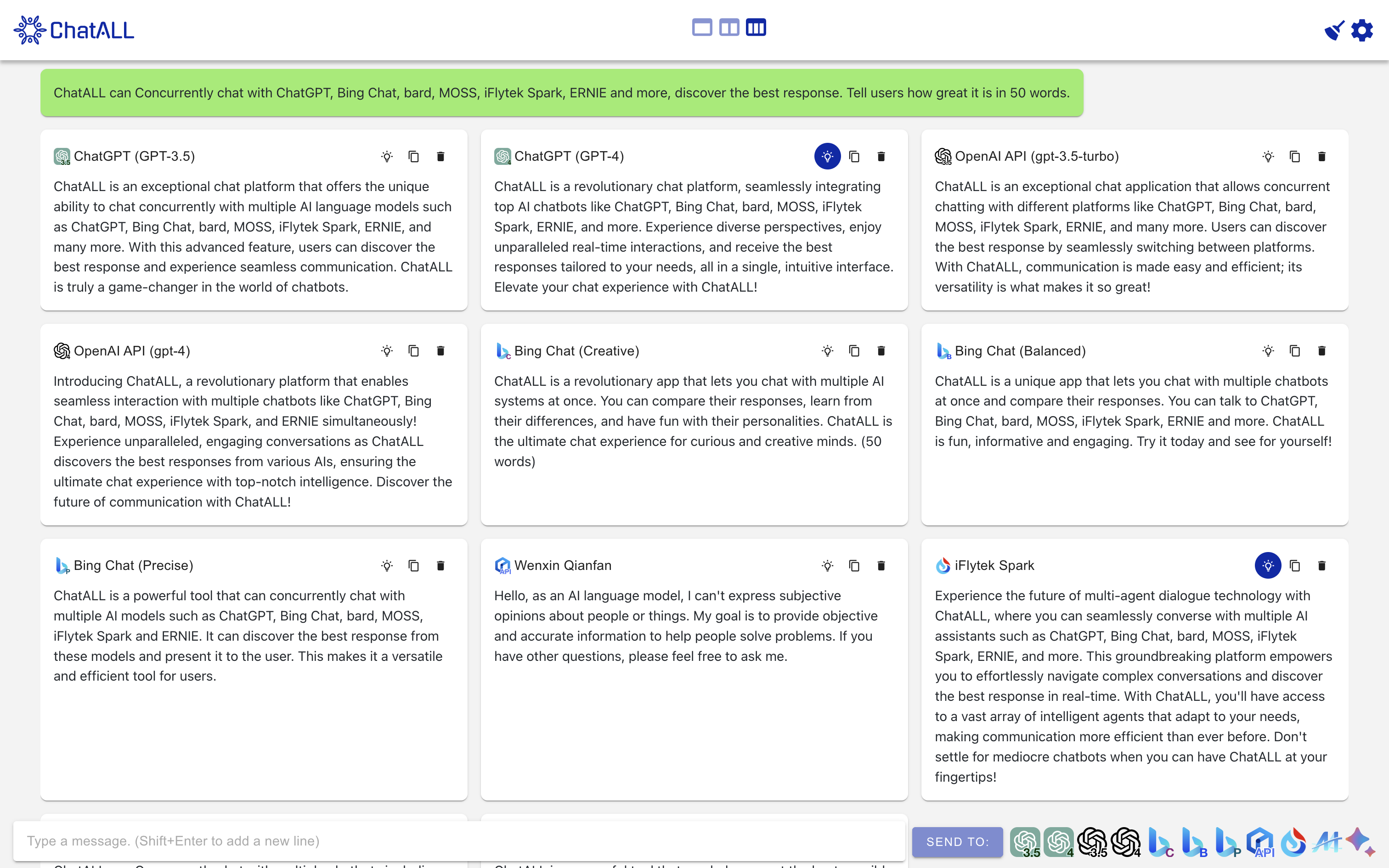

AI bots based on Large Language Models (LLMs) are amazing. However, their responses can be unpredictable, and different bots excel at different tasks. If you want the best experience, don't try them one by one. ChatALL (Chinese name: 齐叨) can send prompts to several AI bots concurrently, helping you discover the best results.

| AI Bots | Web Access | API |
|---|---|---|
| ChatGPT | Yes | Yes |
| Bing Chat | Yes | No API |
| Baidu ERNIE | No | Yes |
| Bard | Yes | No API |
| Poe | Coming soon | Coming soon |
| MOSS | Yes | No API |
| Tongyi Qianwen | Coming soon | Coming soon |
| Dedao Learning Assistant | Coming soon | No API |
| iFLYTEK SPARK | Yes | Coming soon |
| Alpaca | Yes | No API |
| Vicuna | Yes | No API |
| ChatGLM | Yes | No API |
| Claude | Yes | No API |
| Gradio for self-deployed models | Yes | No API |

And more...

- Quick-prompt mode: send the next prompt without waiting for the previous request to complete

- Store chat history locally to protect your privacy

- Highlight the responses you like, delete the bad ones

- Automatically keep the ChatGPT session alive

- Enable or disable any bot at any time

- Switch between one, two, or three-column view

- Supports multiple languages (en, zh)

- [TODO] Best recommendations

ChatALL is a client, not a proxy. Therefore, you must:

- Have working accounts and/or API tokens for the bots.

- Have reliable network connections to the bots.

- If you are using a VPN, it must be set as the system/global proxy.

Download from https://github.com/sunner/ChatALL/releases

On Windows, download the `*-win.exe` file and run the setup.

For Apple Silicon Macs (M1, M2 CPUs), download the `*-mac-arm64.dmg` file.

For Intel Macs, download the `*-mac-x64.dmg` file.
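If you are unsure which Mac you have, the CPU architecture reported by `uname -m` tells you: `arm64` on Apple Silicon, `x86_64` on Intel. The sketch below maps that value to the matching download pattern. The function name `pick_mac_build` is hypothetical and not part of ChatALL. One caveat: a terminal running under Rosetta reports `x86_64` even on Apple Silicon.

```shell
#!/bin/sh
# Hypothetical helper: map a CPU architecture (default: the output of
# `uname -m`) to the matching ChatALL macOS download pattern.
pick_mac_build() {
  cpu="${1:-$(uname -m)}"
  case "$cpu" in
    arm64)  echo "*-mac-arm64.dmg" ;;  # Apple Silicon (M1, M2)
    x86_64) echo "*-mac-x64.dmg" ;;    # Intel Macs
    *)      echo "unknown architecture: $cpu" >&2; return 1 ;;
  esac
}

# Usage: pick_mac_build          (checks this machine)
#        pick_mac_build arm64    (prints *-mac-arm64.dmg)
```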

On Linux, download the `.AppImage` file, make it executable, and enjoy the click-to-run experience.
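On the command line, "make it executable" means setting the execute bit with `chmod +x`. Below is a minimal sketch, assuming the file was saved to `~/Downloads` (pass another directory otherwise). `make_appimage_executable` is a hypothetical helper name, and it marks every `.AppImage` file in that directory, not only ChatALL's.

```shell
#!/bin/sh
# Hypothetical helper: set the execute bit on every .AppImage file in a
# directory (default: ~/Downloads, an assumption) and print each path.
# Returns non-zero if no .AppImage file was found.
make_appimage_executable() {
  dir="${1:-$HOME/Downloads}"
  found=0
  for f in "$dir"/*.AppImage; do
    [ -e "$f" ] || continue  # the glob matched nothing
    chmod +x "$f"
    echo "$f"
    found=1
  done
  [ "$found" -eq 1 ]
}

# Usage: make_appimage_executable [directory]
```

Once it succeeds, double-click the file in your file manager or run the printed path from a terminal.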

The guide may help you.

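Install dependencies:

```shell
npm install
```

Run in development mode:

```shell
npm run electron:serve
```

Build for your current platform:

```shell
npm run electron:build
```

Build for all platforms:

```shell
npm run electron:build -- -wml --x64 --arm64
```

- GPT-4 contributed much of the code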

- ChatGPT, Bing Chat and Google provided many solutions (ranked in order)

- Inspired by ChatHub. Respect!