This is PyTorch re-implementation of GALD-net and Dual-Seg. Both papers were accepted by BMVC-2019 and achieve state-of-the-art results on the Cityscapes and Pascal Context datasets.

There is also a co-current repo for Fast Road Scene Semantic Segmentation:Fast_Seg ⚡ and thanks for your attention 😃

pytorch >= 1.1.0 apex opencv-python

Note that we use apex to speed up training process.

At least 8 gpus with 12GB are needed since we need batch size at least 8 and crop size at least 800 on Cityscapes dataset.

Please see train_distribute.py for the details.

sh ./exp/train_dual_seg_r50_city_finetrain.shYou will get the model with 79.6~79.8 mIoU.

sh ./exp/train_dual_seg_r101_city_finetrain.shYou will get the model with 80.3~80.4 mIoU.

sh ./exp/tes_dualseg_r50_city_finetrain.shModel trained with the Cityscapes fine dataset:

Dual-Seg-net: ResNet 50, ResNet 101

Please see the Common.md for the details for using the coarse data training. Or you can refer to our GLAD paper(last part) for reference.

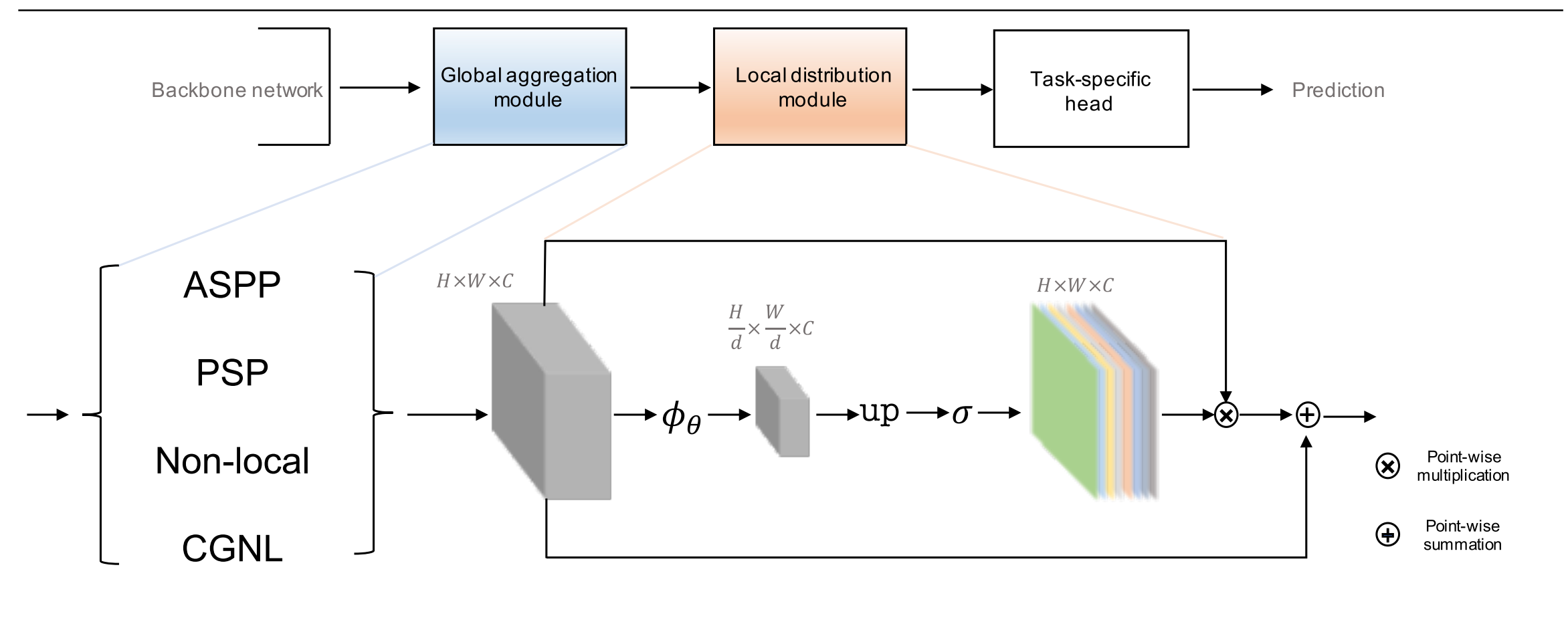

GALD-Net (BMVC 2019,arxiv)

We propose Global Aggregation then Local Distribution (GALD) scheme to distribute global information to each position adaptively according to the local information around the position. GALD net achieves top performance on Cityscapes dataset. Both source code and models will be available soon. The work was done at DeepMotion AI Research

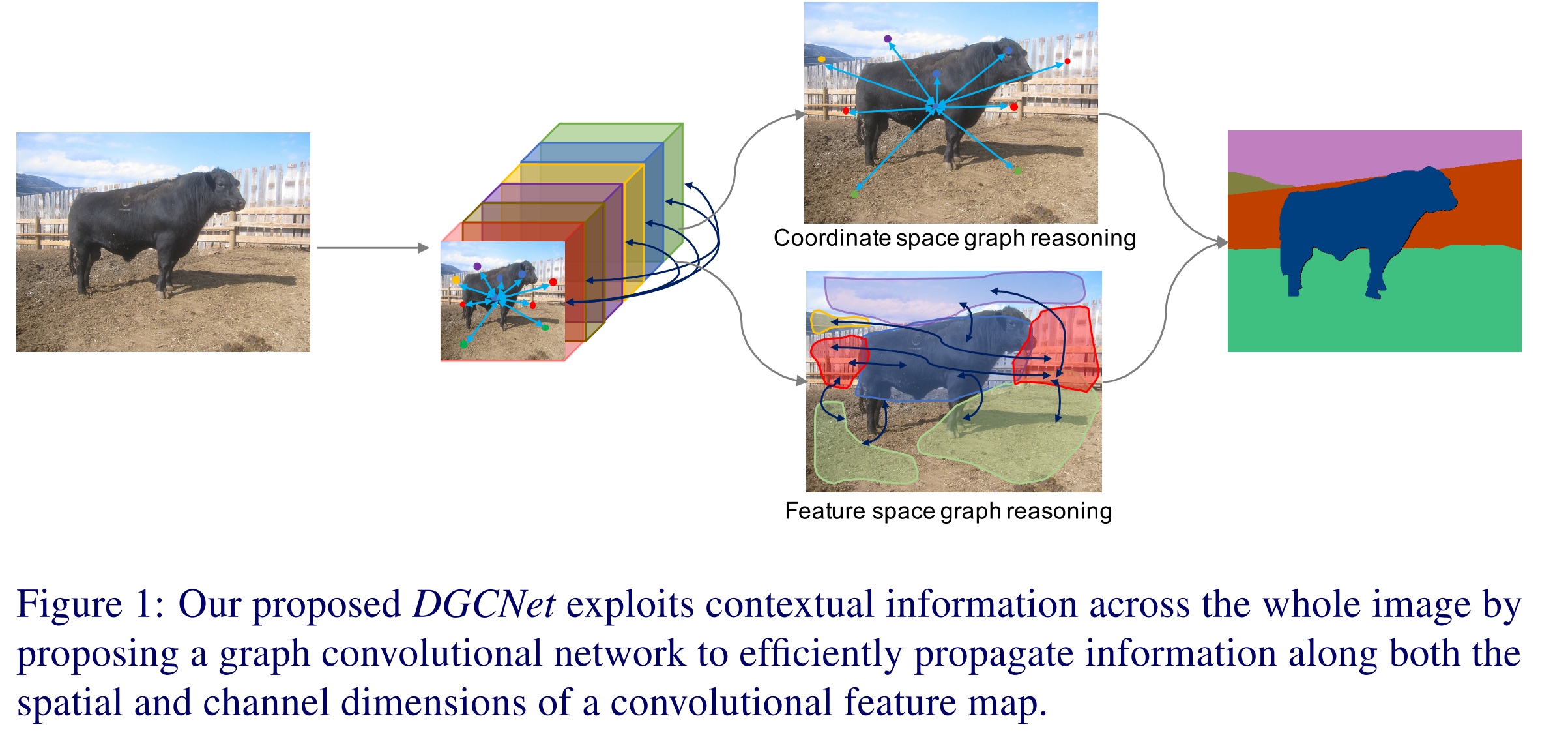

DGCNet (BMVC 2019,arxiv)

We propose Dual Graph Convolutional Network (DGCNet) to model the global context of the input feature by modelling two orthogonal graphs in a single framework. (Joint work: University of Oxford, Peking University and DeepMotion AI Research)

| Method | Conference | Backbone | mIoU(%) |

|---|---|---|---|

| RefineNet | CVPR2017 | ResNet-101 | 73.6 |

| SAC | ICCV2017 | ResNet-101 | 78.1 |

| PSPNet | CVPR2017 | ResNet-101 | 78.4 |

| DUC-HDC | WACV2018 | ResNet-101 | 77.6 |

| AAF | ECCV2018 | ResNet-101 | 77.1 |

| BiSeNet | ECCV2018 | ResNet-101 | 78.9 |

| PSANet | ECCV2018 | ResNet-101 | 80.1 |

| DFN | CVPR2018 | ResNet-101 | 79.3 |

| DSSPN | CVPR2018 | ResNet-101 | 77.8 |

| DenseASPP | CVPR2018 | DenseNet-161 | 80.6 |

| OCNet | - | ResNet-101 | 81.7 |

| CCNet | ICCV2019 | ResNet-101 | 81.4 |

| GALD-Net | BMVC2019 | ResNet50 | 80.8 |

| GALD-Net | BMVC2019 | ResNet101 | 81.8 |

| DGCN-Net | BMVC2019 | ResNet101 | 82.0 |

| GALD-Net(use coarse data) | BMVC2019 | ResNet101 | 82.9 |

| GALD-Net(use Mapillary) | BMVC2019 | ResNet101 | 83.3 |

GALD-Net:

here

GFF-Net:here

Both are (Single Model Result)

Please refer our paper for more detail. If you find the codebase useful, please consider citing our paper.

@inproceedings{xiangtl_gald

title={Global Aggregation then Local Distribution in Fully Convolutional Networks},

author={Li, Xiangtai and Zhang, Li and You, Ansheng and Yang, Maoke and Yang, Kuiyuan and Tong, Yunhai},

booktitle={BMVC2019},

}

@inproceedings{zhangli_dgcn

title={Dual Graph Convolutional Network for Semantic Segmentation},

author={Zhang, Li(*) and Li, Xiangtai(*) and Arnab, Anurag and Yang, Kuiyuan and Tong, Yunhai and Torr, Philip HS},

booktitle={BMVC2019},

}

MIT License

Thanks to previous open-sourced repo:

Encoding

CCNet

TorchSeg

pytorchseg