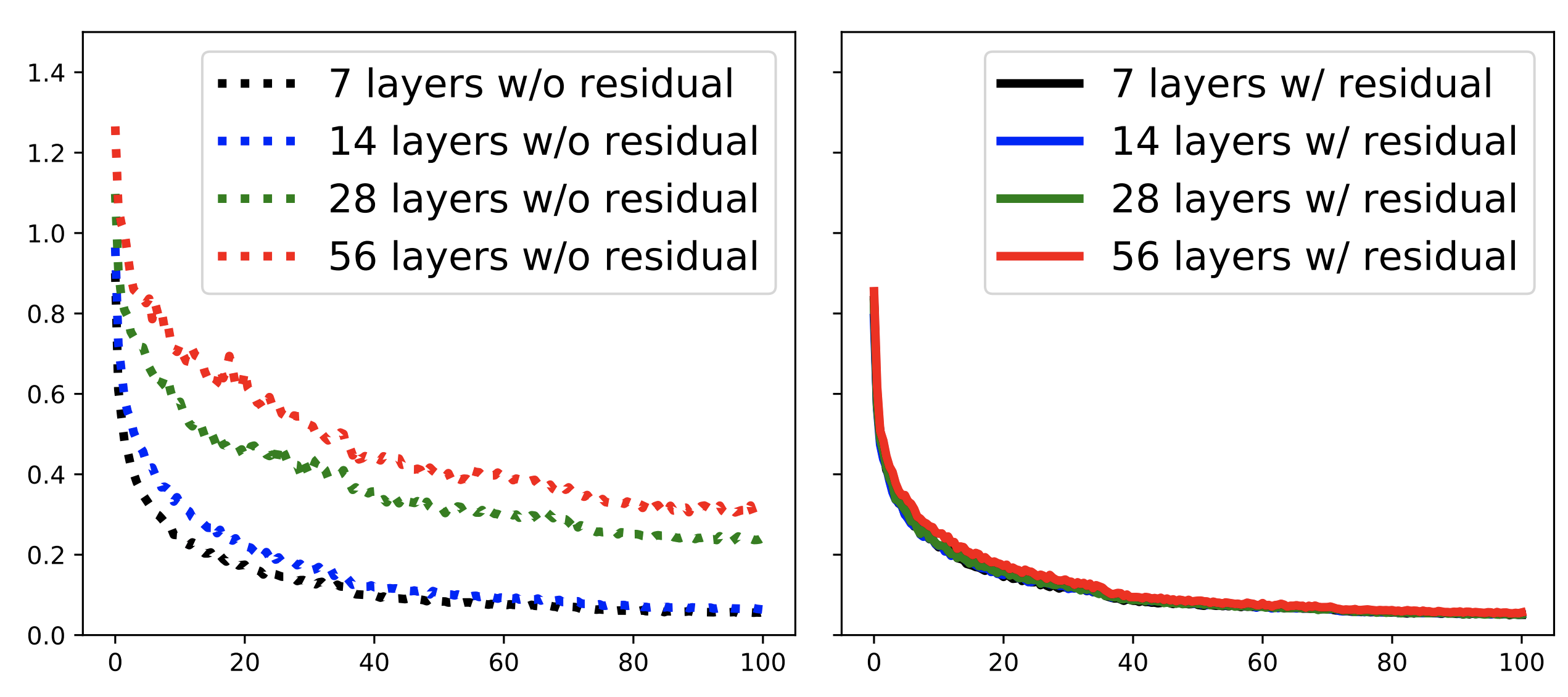

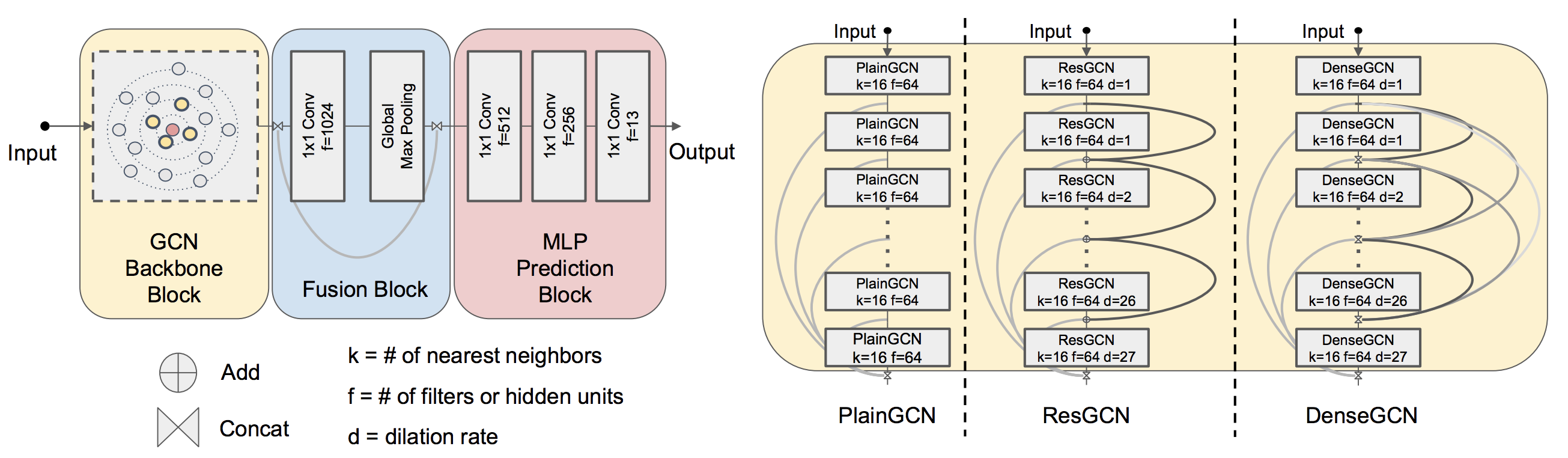

In this work, we present new ways to successfully train very deep Graph Convolutional Networks (GCNs). We borrow concepts from CNNs, mainly residual/dense connections and dilated convolutions, and adapt them to GCN architectures. Through extensive experiments, we show the positive effect of these deep GCN frameworks.
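The three CNN ideas named above can be illustrated on a toy point set. What follows is a minimal pure-Python sketch, not the repository's implementation.

- The names `knn`, `dilated_knn`, `mean_neighbor_layer`, `res_block` and `dense_block` are hypothetical.
- The graph layer F is a weight-free neighbor average, chosen only for illustration.
- Dilation follows the paper's dilated k-NN: collect the k*d nearest neighbors and keep every d-th one. This widens the receptive field without adding neighbors.

```python
import math


def knn(points, k):
    """Indices of the k nearest neighbors of each point (self excluded), closest first."""
    result = []
    for i, p in enumerate(points):
        # Sort all other points by Euclidean distance to p (stable sort breaks ties by index).
        others = sorted(
            (j for j in range(len(points)) if j != i),
            key=lambda j: math.dist(p, points[j]),
        )
        result.append(others[:k])
    return result


def dilated_knn(points, k, d):
    """Dilated k-NN: take the k*d nearest neighbors, then keep every d-th one."""
    return [nbrs[::d][:k] for nbrs in knn(points, k * d)]


def mean_neighbor_layer(x, neighbors):
    """Hypothetical toy layer F with no weights: mean of each vertex's neighbor features."""
    return [
        [sum(x[u][c] for u in nbrs) / len(nbrs) for c in range(len(x[v]))]
        for v, nbrs in enumerate(neighbors)
    ]


def res_block(x, neighbors):
    """Residual connection: x_{l+1} = F(x_l) + x_l (feature width unchanged)."""
    fx = mean_neighbor_layer(x, neighbors)
    return [[a + b for a, b in zip(f, xv)] for f, xv in zip(fx, x)]


def dense_block(x, neighbors):
    """Dense connection: x_{l+1} = concat(x_l, F(x_l)) along the feature axis."""
    fx = mean_neighbor_layer(x, neighbors)
    return [xv + f for xv, f in zip(x, fx)]


if __name__ == "__main__":
    pts = [(float(i), 0.0) for i in range(6)]  # six points on a line
    print(dilated_knn(pts, k=2, d=2)[0])  # → [1, 3]
```

With d=1 this reduces to ordinary k-NN. The residual block keeps the feature width fixed. In this toy version the dense block doubles the width, because F preserves width, so stacking dense blocks widens the features layer by layer.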

[Project] [Paper] [Slides] [TensorFlow Code] [PyTorch Code]

We run extensive experiments to show how different components (#Layers, #Filters, #Nearest Neighbors, Dilation, etc.) affect DeepGCNs. We also provide ablation studies on different types of deep GCNs (MRGCN, EdgeConv, GraphSAGE and GIN).
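The four GCN variants in the ablation differ mainly in how each vertex aggregates its neighbors. The sketch below shows only those aggregation steps, in pure Python, and is not taken from this repository's code.

- Learnable weights and MLPs are omitted.
- `edge_fn` is a hypothetical, caller-supplied stand-in for EdgeConv's per-edge MLP.
- The formulas follow the commonly published definitions of each operator as we understand them.

```python
def max_relative(x, neighbors):
    """MRGCN aggregation: concat(x_v, max_u (x_u - x_v)); a learnable linear map would follow."""
    out = []
    for v, nbrs in enumerate(neighbors):
        # Element-wise max of the relative features x_u - x_v over all neighbors u.
        rel = [max(x[u][c] - x[v][c] for u in nbrs) for c in range(len(x[v]))]
        out.append(x[v] + rel)  # list concatenation = feature concatenation
    return out


def edgeconv(x, neighbors, edge_fn):
    """EdgeConv: element-wise max over u of edge_fn(x_v, x_u - x_v).

    edge_fn is a hypothetical stand-in for the per-edge MLP applied before the max.
    """
    out = []
    for v, nbrs in enumerate(neighbors):
        msgs = [edge_fn(x[v], [a - b for a, b in zip(x[u], x[v])]) for u in nbrs]
        out.append([max(col) for col in zip(*msgs)])
    return out


def sage_mean(x, neighbors):
    """GraphSAGE, mean aggregator: concat(x_v, mean_u x_u); a learnable map would follow."""
    return [
        x[v] + [sum(x[u][c] for u in nbrs) / len(nbrs) for c in range(len(x[v]))]
        for v, nbrs in enumerate(neighbors)
    ]


def gin(x, neighbors, eps=0.0):
    """GIN: (1 + eps) * x_v + sum_u x_u; an MLP would follow."""
    return [
        [(1 + eps) * x[v][c] + sum(x[u][c] for u in nbrs) for c in range(len(x[v]))]
        for v, nbrs in enumerate(neighbors)
    ]


if __name__ == "__main__":
    feats = [[1.0, 0.0], [3.0, 2.0], [0.0, 5.0]]
    nbrs = [[1, 2], [0], [0, 1]]
    print(gin(feats, nbrs)[0])  # → [4.0, 7.0]
```

Suppose `edge_fn` is plain concatenation (`lambda xv, d: xv + d`). Then `edgeconv` returns the same vectors as `max_relative`. The practical difference is where the learnable part sits:

- EdgeConv runs its MLP on every edge before the max.
- MRGCN applies a single linear map once per vertex after the max.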

For further information and details, please contact Guohao Li and Matthias Müller.

To set up the environment, run:

```bash
source deepgcn_env_install.sh
```

```
.
├── misc                # Misc images
├── utils               # Common useful modules
├── gcn_lib             # GCN library
│   ├── dense           # GCN library for dense data (B x C x N x 1)
│   └── sparse          # GCN library for sparse data (N x C)
├── examples
│   ├── sem_seg         # code for point cloud semantic segmentation on S3DIS
│   ├── part_sem_seg    # code for part segmentation on PartNet
│   └── ppi             # code for node classification on the PPI dataset
└── ...
```
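The two libraries in gcn_lib store the same vertex features in different layouts. This hedged illustration assumes the sparse layout stacks the vertices of every graph in a batch into one N x C matrix, plus a per-vertex graph index. That is the PyTorch Geometric convention; we have not verified how gcn_lib/sparse tracks batches.

- `dense_to_sparse` and `sparse_to_dense` are hypothetical helpers working on nested lists.
- The inverse assumes every graph has the same number of vertices, with rows grouped per graph.

```python
def dense_to_sparse(x):
    """Hypothetical helper: B x C x N x 1 nested list -> (rows of length C, per-row graph index)."""
    rows, batch = [], []
    for b, sample in enumerate(x):  # sample has shape C x N x 1
        num_channels = len(sample)
        num_nodes = len(sample[0])
        for n in range(num_nodes):
            # Gather the C channel values of vertex n into one row.
            rows.append([sample[c][n][0] for c in range(num_channels)])
            batch.append(b)
    return rows, batch


def sparse_to_dense(rows, batch):
    """Hypothetical inverse: regroup rows by graph index into B x C x N x 1.

    Assumes graph indices are 0..B-1 and every graph has the same number of vertices.
    """
    num_graphs = max(batch) + 1
    out = []
    for b in range(num_graphs):
        nodes = [r for r, g in zip(rows, batch) if g == b]
        # Transpose N x C back to C x N, wrapping each value in a trailing size-1 axis.
        out.append([[[node[c]] for node in nodes] for c in range(len(nodes[0]))])
    return out


if __name__ == "__main__":
    dense = [[[[1.0], [2.0], [3.0]], [[4.0], [5.0], [6.0]]]]  # B=1, C=2, N=3
    print(dense_to_sparse(dense))  # → ([[1.0, 4.0], [2.0, 5.0], [3.0, 6.0]], [0, 0, 0])
```

The dense layout treats a point cloud like a B x C x N x 1 image tensor. The sparse layout keeps one row per vertex, which suits graphs of varying size.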

Please see the Readme.md of each task inside the examples folder for details. All information about the code, data, and pretrained models can be found there.

Please cite our paper if you find anything helpful:

```
@InProceedings{li2019deepgcns,
    title={DeepGCNs: Can GCNs Go as Deep as CNNs?},
    author={Guohao Li and Matthias Müller and Ali Thabet and Bernard Ghanem},
    booktitle={The IEEE International Conference on Computer Vision (ICCV)},
    year={2019}
}
```

MIT License

Thanks to Guocheng Qian for implementing the PyTorch version.