ITACLIP: Boosting Training-Free Semantic Segmentation with Image, Text, and Architectural Enhancements

[paper]

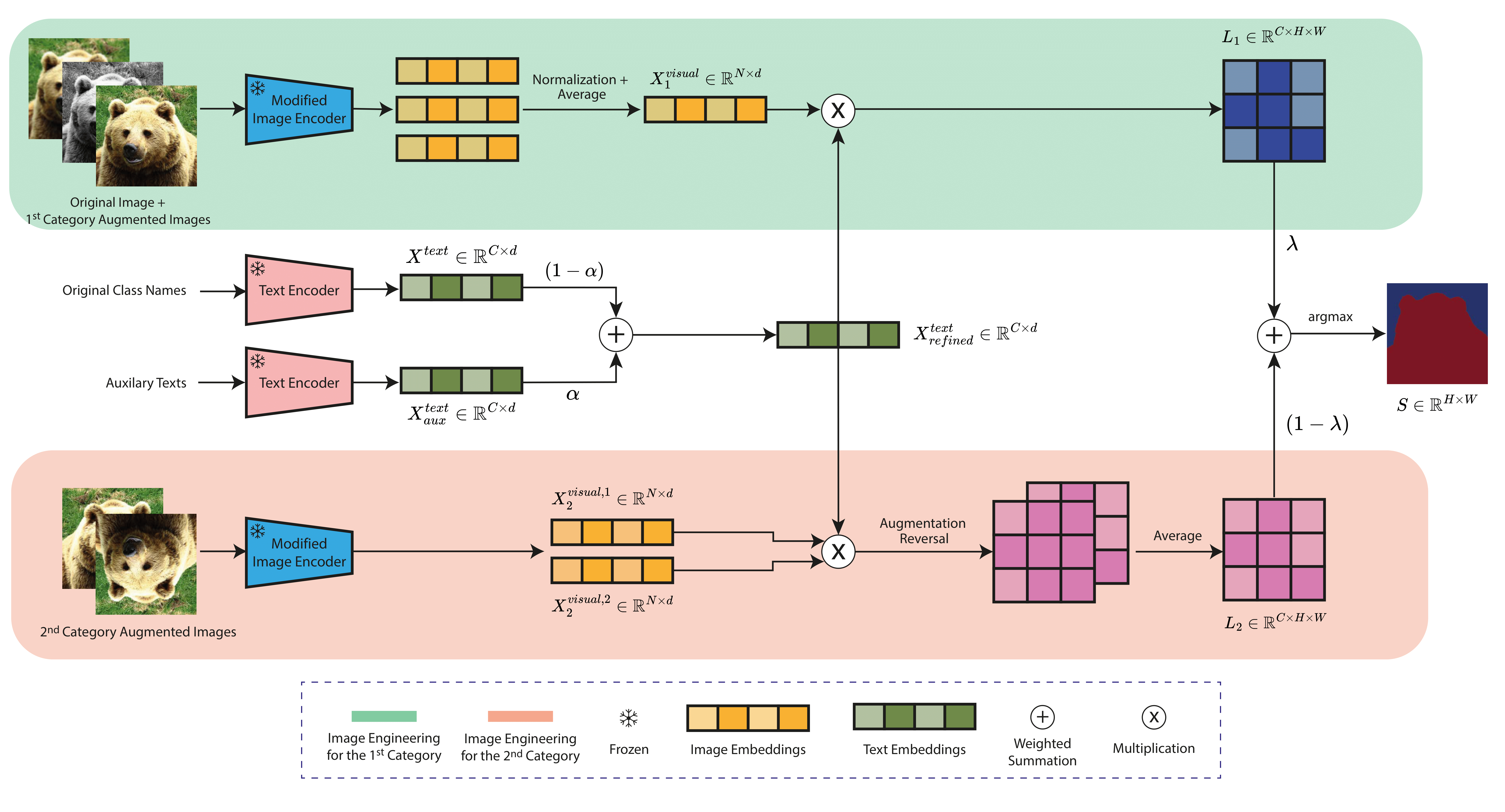

Abstract: Recent advances in foundational Vision Language Models (VLMs) have reshaped the evaluation paradigm in computer vision tasks. These foundational models, especially CLIP, have accelerated research in open-vocabulary computer vision tasks, including Open-Vocabulary Semantic Segmentation (OVSS). Although the initial results are promising, the dense prediction capabilities of VLMs still require further improvement. In this study, we enhance the semantic segmentation performance of CLIP by introducing new modules and modifications: 1) architectural changes to the last layer of the ViT, combined with incorporating attention maps from the middle layers into the last layer; 2) Image Engineering: applying data augmentations to enrich the input image representations; and 3) using Large Language Models (LLMs) to generate definitions and synonyms for each class name, leveraging CLIP's open-vocabulary capabilities. Our training-free method, ITACLIP, outperforms current state-of-the-art approaches on segmentation benchmarks such as COCO-Stuff, COCO-Object, Pascal Context, and Pascal VOC.
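To make the architectural idea in item 1 more concrete, here is a minimal NumPy sketch of fusing attention maps from several middle layers with the last layer's attention map. It assumes plain scaled dot-product attention for a single head and an unweighted mean as the fusion rule; the function names, toy sizes, and averaging rule are illustrative assumptions, not ITACLIP's actual implementation (the paper describes the exact layer choices and attention variant).

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention_map(q, k):
    # Scaled dot-product attention weights for one head, shape (N, N).
    return softmax(q @ k.T / np.sqrt(q.shape[-1]))

def fused_attention(last_qk, middle_qks):
    # Hypothetical fusion rule: unweighted mean of the last-layer map and
    # the maps from the selected middle layers (each given as a (q, k) pair).
    maps = [attention_map(q, k) for q, k in [last_qk, *middle_qks]]
    return np.mean(maps, axis=0)

# Toy usage: 4 patch tokens, head dimension 8, three "middle" layers.
rng = np.random.default_rng(0)
N, d = 4, 8
last = (rng.normal(size=(N, d)), rng.normal(size=(N, d)))
middle = [(rng.normal(size=(N, d)), rng.normal(size=(N, d))) for _ in range(3)]
v = rng.normal(size=(N, d))           # last-layer value vectors
attn = fused_attention(last, middle)  # (N, N); each row still sums to 1
out = attn @ v                        # (N, d) fused patch features
```

Because every attention map is row-stochastic, their mean is too, so the fused map can replace the last-layer map without renormalization.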

2024/11/18 Our paper and code are publicly available.

Our code is built on top of MMSegmentation. Please follow the MMSegmentation installation instructions to set up the environment. Our experiments used Python 3.9.17, torch 2.0.1, mmcv 2.1.0, and mmseg 1.2.2.

We support four segmentation benchmarks: COCO-Stuff, COCO-Object, Pascal Context, and Pascal VOC. For dataset preparation, please follow the MMSeg Dataset Preparation document. The COCO-Object dataset can be derived from COCO-Stuff by running the following command:

```shell
python datasets/cvt_coco_object.py PATH_TO_COCO_STUFF164K -o PATH_TO_COCO164K
```

Additional datasets can be integrated by following the same dataset preparation document. Then, set the dataset path (data_root) and the class-name file path (name_path) in the corresponding config files.
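As an illustration, the edited entries might look like the excerpt below. This is a hypothetical sketch: the placeholder paths mirror the PATH_TO_... convention used above, and the nesting (name_path inside model, data_root passed to the dataset of test_dataloader) is an assumption based on common MMSegmentation 1.x configs, so edit the existing keys in the provided config files rather than pasting this verbatim.

```python
# Hypothetical excerpt of a ./configs/cfg_{dataset_name}.py file (MMSegmentation
# 1.x configs are plain Python). The key nesting is an assumption; edit the
# existing entries in the provided configs instead of copying this verbatim.
data_root = 'PATH_TO_DATASET'  # root directory of the prepared dataset

model = dict(
    name_path='PATH_TO_CLASS_NAMES.txt',  # text file listing the class names
)

test_dataloader = dict(
    dataset=dict(data_root=data_root),  # the test dataset reads from data_root
)
```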

For reproducibility, we provide the LLM-generated auxiliary texts; please update the auxiliary text path (auxiliary_text_path) in the config files. We also provide the definition and synonym generation scripts (llama3_definition_generation.py and llama3_synonym_generation.py). For the supported datasets, running these scripts is unnecessary, since the LLaMA-generated texts are already included.
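One simple way to use such auxiliary texts is to blend each class-name text embedding with the mean embedding of its definitions and synonyms before matching image patches against the classes. The NumPy sketch below assumes precomputed CLIP text and patch embeddings (random stand-ins here) and a hypothetical mixing coefficient `weight`; it illustrates the idea and is not necessarily the exact combination rule ITACLIP uses.

```python
import numpy as np

def l2_normalize(x, axis=-1):
    # Scale vectors to unit length, as is usual for CLIP embeddings.
    return x / np.linalg.norm(x, axis=axis, keepdims=True)

def fuse_text_embeddings(name_emb, aux_emb, weight=0.5):
    """name_emb: (C, D) class-name embeddings; aux_emb: (C, M, D) embeddings of
    M auxiliary texts (definitions/synonyms) per class. `weight` is a
    hypothetical mixing coefficient in [0, 1]."""
    aux_mean = l2_normalize(l2_normalize(aux_emb).mean(axis=1))  # (C, D)
    fused = (1 - weight) * l2_normalize(name_emb) + weight * aux_mean
    return l2_normalize(fused)

def segment_patches(patch_feats, text_emb):
    # Cosine similarity of each patch (P, D) to each class (C, D); argmax -> label.
    return (l2_normalize(patch_feats) @ text_emb.T).argmax(axis=-1)

# Toy usage with random stand-ins for CLIP embeddings.
rng = np.random.default_rng(0)
C, M, D, P = 3, 2, 16, 10
text_emb = fuse_text_embeddings(rng.normal(size=(C, D)), rng.normal(size=(C, M, D)))
labels = segment_patches(rng.normal(size=(P, D)), text_emb)  # (P,) class indices
```

Setting `weight=0.0` recovers plain class-name prompts, which makes it easy to measure how much the auxiliary texts contribute.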

To evaluate ITACLIP on a dataset, run the following command, replacing {dataset_name} with the name of the target dataset's config:

```shell
python eval.py --config ./configs/cfg_{dataset_name}.py
```
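To evaluate every benchmark in one go, a small Python helper could loop over the config files. This sketch assumes it is run from the repository root and that every benchmark config matches the cfg_*.py pattern; `find_configs`, `eval_command`, and `run_all` are hypothetical helper names, not part of the repository.

```python
import subprocess
import sys
from pathlib import Path

def find_configs(config_dir="./configs"):
    # Assumes every benchmark config follows the cfg_{dataset_name}.py pattern.
    return sorted(Path(config_dir).glob("cfg_*.py"))

def eval_command(config_path):
    # Same evaluation command as above, using the current Python interpreter.
    return [sys.executable, "eval.py", "--config", str(config_path)]

def run_all(config_dir="./configs"):
    # Evaluate each config in turn; check=True stops at the first failing run.
    for cfg in find_configs(config_dir):
        print(f"Evaluating {cfg}")
        subprocess.run(eval_command(cfg), check=True)
```

Calling `run_all()` launches one `eval.py` run per config in sorted order; discovering configs with a glob avoids hard-coding dataset names.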

To evaluate ITACLIP on a single image, run the demo.ipynb Jupyter notebook.

With the default configurations, you should obtain the following mIoU results:

| Dataset | mIoU (%) |
|---|---|
| COCO-Stuff | 27.0 |
| COCO-Object | 37.7 |
| PASCAL VOC | 67.9 |
| PASCAL Context | 37.5 |
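The scores above are mean Intersection-over-Union (mIoU): for each class, IoU = TP / (TP + FP + FN) over all evaluated pixels, averaged over classes and reported in percent. The NumPy sketch below computes it from a pixel-level confusion matrix; it follows the standard definition, treats `ignore_index=255` as an assumed ignore label, and is not MMSegmentation's own evaluation code.

```python
import numpy as np

def confusion_matrix(pred, gt, num_classes, ignore_index=255):
    """Rows index ground-truth classes, columns index predicted classes."""
    valid = gt != ignore_index  # drop pixels carrying the (assumed) ignore label
    idx = num_classes * gt[valid].astype(np.int64) + pred[valid].astype(np.int64)
    counts = np.bincount(idx, minlength=num_classes ** 2)
    return counts.reshape(num_classes, num_classes)

def mean_iou(conf):
    """Return (mIoU in percent, per-class IoU); classes absent from both
    prediction and ground truth get NaN and are excluded from the mean."""
    tp = np.diag(conf).astype(np.float64)
    fp = conf.sum(axis=0) - tp  # predicted as c, but ground truth differs
    fn = conf.sum(axis=1) - tp  # ground truth c, but predicted differently
    union = tp + fp + fn
    iou = np.where(union > 0, tp / np.maximum(union, 1), np.nan)
    return float(np.nanmean(iou) * 100), iou

# Toy 2x2 example with two classes.
gt = np.array([[0, 0], [1, 1]])
pred = np.array([[0, 1], [1, 1]])
miou, per_class = mean_iou(confusion_matrix(pred, gt, num_classes=2))
print(f"mIoU = {miou:.1f}")  # per-class IoUs 0.5 and 2/3 -> 58.3
```

In practice the confusion matrix is accumulated over the whole validation set before computing IoU, so large and small images contribute in proportion to their pixel counts.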

If you find our project helpful, please consider citing our work.

```bibtex
@article{aydin2024itaclip,
  title={ITACLIP: Boosting Training-Free Semantic Segmentation with Image, Text, and Architectural Enhancements},
  author={Ayd{\i}n, M Arda and {\c{C}}{\i}rpar, Efe Mert and Abdinli, Elvin and Unal, Gozde and Sahin, Yusuf H},
  journal={arXiv preprint arXiv:2411.12044},
  year={2024}
}
```

This implementation builds upon CLIP, SCLIP, and MMSegmentation. We gratefully acknowledge their valuable contributions.