This is the project repo for the final project of the Udacity Self-Driving Car Nanodegree: Programming a Real Self-Driving Car. For more information about the project, see the project introduction here.

Please use one of the two installation options, either native or docker installation.

Be sure that your workstation is running Ubuntu 16.04 Xenial Xerus or Ubuntu 14.04 Trusty Tahir. Ubuntu downloads can be found here.

If using a Virtual Machine to install Ubuntu, use the following configuration as a minimum:

- 2 CPU

- 2 GB system memory

- 25 GB of free hard drive space

The Udacity-provided virtual machine has ROS and Dataspeed DBW already installed, so you can skip the next two steps if you are using it.

Follow these instructions to install ROS

- ROS Kinetic if you have Ubuntu 16.04.

- ROS Indigo if you have Ubuntu 14.04.

- Install Dataspeed DBW

- Use this option to install the SDK on a workstation that already has ROS installed: One Line SDK Install (binary)

Download the Udacity Simulator.

Build the docker container

docker build . -t capstone

Run the docker file

docker run -p 4567:4567 -v $PWD:/capstone -v /tmp/log:/root/.ros/ --rm -it capstone

To set up port forwarding, please refer to the instructions from term 2

- Clone the project repository

git clone https://github.com/udacity/CarND-Capstone.git

- Install python dependencies

cd CarND-Capstone

pip install -r requirements.txt

- Make and run styx

cd ros

catkin_make

source devel/setup.sh

roslaunch launch/styx.launch

- Run the simulator

- Download training bag that was recorded on the Udacity self-driving car.

- Unzip the file

unzip traffic_light_bag_file.zip

- Play the bag file

rosbag play -l traffic_light_bag_file/traffic_light_training.bag

- Launch your project in site mode

cd CarND-Capstone/ros

roslaunch launch/site.launch

- Confirm that traffic light detection works on real life images

Outside of requirements.txt, here is information on other driver/library versions used in the simulator and Carla:

Specific to these libraries, the simulator grader and Carla use the following:

| | Simulator | Carla |
|---|---|---|
| Nvidia driver | 384.130 | 384.130 |
| CUDA | 8.0.61 | 8.0.61 |
| cuDNN | 6.0.21 | 6.0.21 |
| TensorRT | N/A | N/A |
| OpenCV | 3.2.0-dev | 2.4.8 |
| OpenMP | N/A | N/A |

We are working on a fix to line up the OpenCV versions between the two.

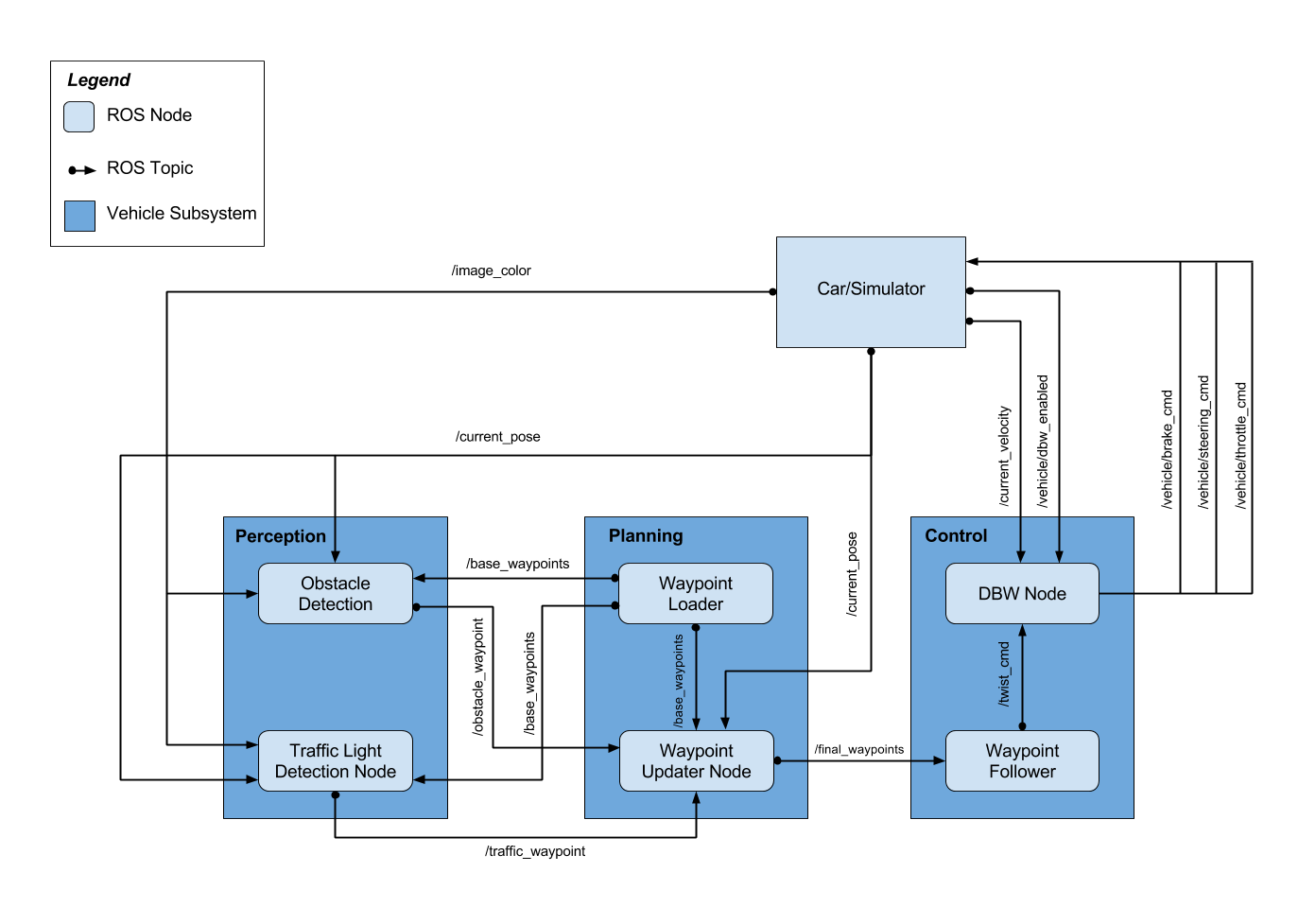

This project is composed of three parts. The first is object detection, which uses an inference model retrained from a pre-trained model. The second is communication through ROS: every node publishes and subscribes to topics, and the nodes exchange data through the ROS communication system. The last is driving the car with PID control.
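The controller code itself is not shown here, so the following is only a minimal sketch of the kind of PID loop the third part refers to. The class name, gains, and output limits are hypothetical and are not taken from the project.

```python
class PID:
    """Minimal PID controller with output clamping (illustrative sketch only)."""

    def __init__(self, kp, ki, kd, out_min=float("-inf"), out_max=float("inf")):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_min, self.out_max = out_min, out_max
        self.integral = 0.0
        self.prev_error = 0.0

    def reset(self):
        """Clear accumulated state, e.g. when control is handed back to a driver."""
        self.integral = 0.0
        self.prev_error = 0.0

    def step(self, error, dt):
        """Return the control output for the current error and time step dt (seconds)."""
        if dt <= 0:
            raise ValueError("dt must be positive")
        integral = self.integral + error * dt
        derivative = (error - self.prev_error) / dt
        output = self.kp * error + self.ki * integral + self.kd * derivative
        if output > self.out_max:
            output = self.out_max
        elif output < self.out_min:
            output = self.out_min
        else:
            # Commit the integral only when not saturated (simple anti-windup).
            self.integral = integral
        self.prev_error = error
        return output


# Hypothetical gains: map a velocity error (m/s) to a throttle in [0, 1].
throttle_pid = PID(kp=0.5, ki=0.0, kd=0.0, out_min=0.0, out_max=1.0)
throttle = throttle_pid.step(error=4.0, dt=0.1)  # saturates at out_max
```

In a ROS node, `step` would typically be called once per loop iteration with the difference between the target and current velocity as the error.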

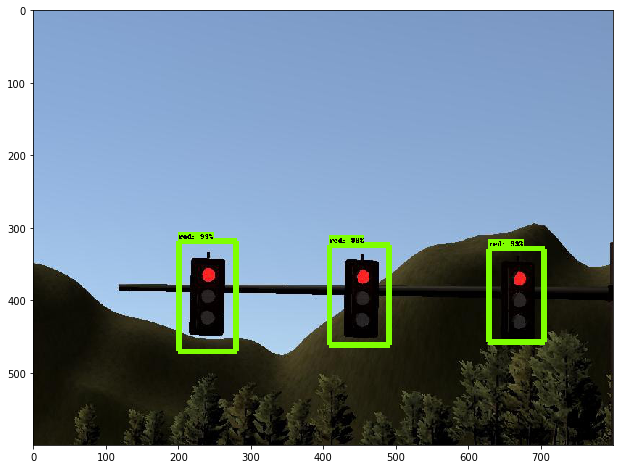

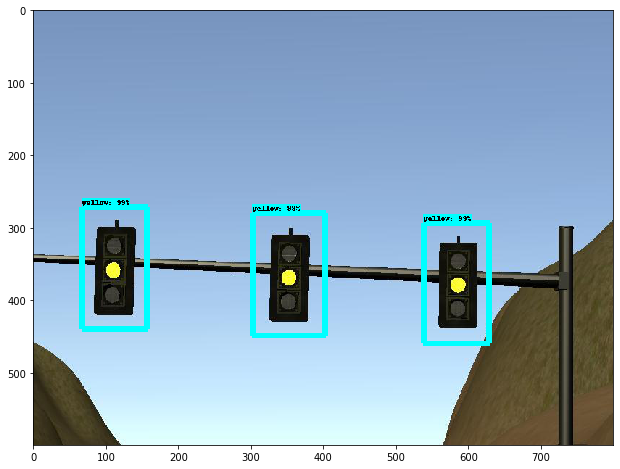

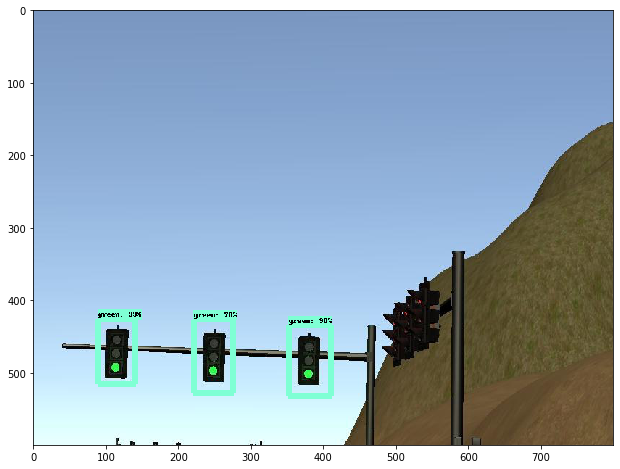

First, we collect and select the images to use for detection. Next, we label the traffic lights in each picture with a class such as red, yellow, or green. The labelImg tool makes it easy to create labels in XML format: https://github.com/tzutalin/labelImg

The XML label files must be converted to CSV in order to build the TFRecords files used for training. To do this:

- Clone https://github.com/tensorflow/models/ and go to tree/master/research/object_detection

- Run "python xmltocsv.py" from the root of that checkout

To create the training data: python generate_tfrecord.py --csv_input=data/train_labels.csv --output_path=data/train.record

To create the test data: python generate_tfrecord.py --csv_input=data/test_labels.csv --output_path=data/test.record
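The contents of xmltocsv.py are not shown here. As a rough sketch of the XML-to-CSV step, the following reads a Pascal VOC style annotation (the format labelImg writes) and flattens each labelled box into one CSV row. The column order and the sample file name are assumptions, not taken from the project.

```python
import csv
import io
import xml.etree.ElementTree as ET

# Assumed column layout; the real script may use a different order.
COLUMNS = ["filename", "width", "height", "class", "xmin", "ymin", "xmax", "ymax"]

# Hypothetical annotation in Pascal VOC format, as produced by labelImg.
SAMPLE_XML = """
<annotation>
  <filename>sim_0001.jpg</filename>
  <size><width>800</width><height>600</height><depth>3</depth></size>
  <object>
    <name>red</name>
    <bndbox><xmin>10</xmin><ymin>20</ymin><xmax>50</xmax><ymax>100</ymax></bndbox>
  </object>
</annotation>
"""


def voc_xml_to_rows(xml_text):
    """Return one row per labelled object in a single annotation file."""
    root = ET.fromstring(xml_text)
    filename = root.findtext("filename")
    width = int(root.findtext("size/width"))
    height = int(root.findtext("size/height"))
    rows = []
    for obj in root.findall("object"):
        box = obj.find("bndbox")
        rows.append([
            filename, width, height, obj.findtext("name"),
            int(box.findtext("xmin")), int(box.findtext("ymin")),
            int(box.findtext("xmax")), int(box.findtext("ymax")),
        ])
    return rows


def rows_to_csv(rows):
    """Serialize rows to CSV text with a header line."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(COLUMNS)
    writer.writerows(rows)
    return buf.getvalue()


csv_text = rows_to_csv(voc_xml_to_rows(SAMPLE_XML))
```

For a whole dataset, the same function would be applied to every XML file in the label directory and the rows concatenated before writing train_labels.csv and test_labels.csv.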

I chose 'ssd_mobilenet_v1_coco_2018_01_28.config' for training.

Training (10,000 steps):

Simulator: python legacy/train.py --logtostderr --pipeline_config_path=train_carnd_sim/ssd_mobilenet_v1_coco_2018_01_28.config --train_dir=train_carnd_sim/

Real: python legacy/train.py --logtostderr --pipeline_config_path=train_carnd_real/ssd_mobilenet_v1_coco_2018_01_28.config --train_dir=train_carnd_real/

Exporting the graph (100,000 steps):

Simulator: python export_inference_graph.py --input_type image_tensor --pipeline_config_path train_carnd_sim/ssd_mobilenet_v1_coco_2018_01_28.config --trained_checkpoint_prefix train_carnd_sim/model.ckpt-10000 --output_directory carnd_capstone_sim_graph

Real: python export_inference_graph.py --input_type image_tensor --pipeline_config_path train_carnd_real/ssd_mobilenet_v1_coco_2018_01_28.config --trained_checkpoint_prefix train_carnd_real/model.ckpt-100000 --output_directory carnd_capstone_realgraph

[Figures: Simulator | Real]

In simulation, it is really important to synchronize messages between modules. Camera image processing, object detection, message processing, network performance, and system performance all add communication delay, so I had to modify the following parameters:

- bridge.py: publish_camera => publish 1 of every 10 frames

- dbw_node.py: loop => 10 Hz

- waypoint_updater.py: loop => 10 Hz
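The actual change to bridge.py is not shown, so the following is only a sketch of one way to publish 1 of every 10 camera frames. The class and method names are hypothetical.

```python
class FrameSkipper:
    """Let through one frame out of every `keep_every` (illustrative sketch)."""

    def __init__(self, keep_every=10):
        if keep_every < 1:
            raise ValueError("keep_every must be >= 1")
        self.keep_every = keep_every
        self.count = 0

    def should_publish(self):
        """Return True for frames 0, keep_every, 2 * keep_every, and so on."""
        publish = self.count % self.keep_every == 0
        self.count += 1
        return publish


skipper = FrameSkipper(keep_every=10)
# Simulate 30 incoming camera frames and record which ones would be published.
published = [i for i in range(30) if skipper.should_publish()]
```

A camera callback would call `should_publish()` once per incoming frame and return early when it is False. The 10 Hz loops in the other two nodes are usually set with `rospy.Rate(10)` and a `rate.sleep()` call at the end of each iteration.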

The DBW node sends throttle, brake, and steering commands to the simulator. If the throttle value exceeds 0.12, the connection to the server is lost.
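One possible way to stay below that limit is to clamp the throttle before it is published. This is a sketch under that assumption, not the project's actual code; the function name is hypothetical and 0.12 is the threshold reported above.

```python
MAX_THROTTLE = 0.12  # threshold reported in the text; above it the connection dropped


def limit_throttle(throttle, max_throttle=MAX_THROTTLE):
    """Clamp a throttle command to the range [0, max_throttle]."""
    return max(0.0, min(throttle, max_throttle))


safe_throttle = limit_throttle(0.5)  # clamped down to MAX_THROTTLE
```

The same effect can be had by setting the upper output limit of the throttle controller to this value, so that the controller never requests more in the first place.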