Re-implementation of our paper Voice Conversion from Non-parallel Corpora Using Variational Auto-encoder.

(for VAWGAN, please switch to vawgan branch)

Linux Ubuntu 16.04

Python 3.5

- Tensorflow-gpu 1.2.1

- Numpy

- Soundfile

- PyWorld

- Cython

For example,

conda create -n py35tf121 -y python=3.5

source activate py35tf121

pip install -U pip

pip install -r requirements.txt

- soundfile might require sudo apt-get install.

- You can use any virtual environment package (e.g. virtualenv).

- If your Tensorflow is the CPU version, you might have to replace all the NCHW ops in my code, because Tensorflow-CPU only supports the NHWC op and will report an error: InvalidArgumentError (see above for traceback): Conv2DCustomBackpropInputOp only supports NHWC.

- I recommend installing Tensorflow from the link on their Github repo:

pip install -U [*.whl link on the Github page]

1. Run bash download.sh to prepare the VCC2016 dataset.

2. Run analyzer.py to extract features and write features into binary files. (This takes a few minutes.)

3. Run build.py to record some stats, such as spectral extrema and pitch.

4. To train a VAE, for example, run

    python main.py \
      --model ConvVAE \
      --trainer VAETrainer \
      --architecture architecture-vae-vcc2016.json

5. You can find your models in ./logdir/train/[timestamp]

6. To convert the voice, run

    python convert.py \
      --src SF1 \
      --trg TM3 \
      --model ConvVAE \
      --checkpoint logdir/train/[timestamp]/[model.ckpt-[id]] \
      --file_pattern "./dataset/vcc2016/bin/Testing Set/{}/*.bin"

   *Please fill in the timestamp and model id.

7. You can find the converted wav files in ./logdir/output/[timestamp]

Voice Conversion Challenge 2016 (VCC2016): download page

- Conditional VAE

    dataset
      vcc2016
        bin
        wav
          Training Set
          Testing Set
            SF1
            SF2
            ...
            TM3
    etc
      speakers.tsv (one speaker per line)
      (xmax.npf)
      (xmin.npf)
    util (submodule)
    model
    logdir
    architecture*.json
    analyzer.py (feature extraction)
    build.py (stats collecting)
    trainer*.py
    main.py (main script)
    (validate.py) (output converted spectrogram)
    convert.py (conversion)

The WORLD vocoder features and the speaker label are stored in binary format.

Format:

[[s1, s2, ..., s513, a1, ..., a513, f0, en, spk],

[s1, s2, ..., s513, a1, ..., a513, f0, en, spk],

...,

[s1, s2, ..., s513, a1, ..., a513, f0, en, spk]]

where

s_i is the spectral envelope magnitude (in log10) of the ith frequency bin,

a_i is the corresponding "aperiodicity" feature,

f0 is the pitch (0 for unvoiced frames),

en is the energy,

spk is the speaker index (0 - 9), and s is the sp (spectral envelope).

Note:

- The speaker identity spk was stored in np.float32 but will be converted into tf.int64 by the reader in analyzer.py.

- I shouldn't have stored the speaker identity per frame; it was just for implementation simplicity.
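
As a minimal sketch of the layout described above, the snippet below reads one feature file with NumPy and splits each frame into its fields. It assumes that every value in the file is stored as float32 (the note above states this only for spk) and that frames are stored contiguously, one after another. The function name read_features and the constants are illustrative and not part of this repo.

```python
import numpy as np

SP_DIM = 513                   # spectral-envelope bins per frame
FEAT_DIM = SP_DIM * 2 + 3      # sp + ap + f0 + en + spk = 1029 values per frame


def read_features(path):
    """Split a feature file into per-frame fields (hypothetical helper)."""
    # Assumption: the file is a flat float32 array of shape [n_frames, 1029].
    frames = np.fromfile(path, dtype=np.float32).reshape(-1, FEAT_DIM)
    return {
        'sp': frames[:, :SP_DIM],                 # log10 spectral envelope
        'ap': frames[:, SP_DIM:2 * SP_DIM],       # aperiodicity
        'f0': frames[:, 2 * SP_DIM],              # pitch, 0 for unvoiced frames
        'en': frames[:, 2 * SP_DIM + 1],          # energy
        'spk': frames[:, 2 * SP_DIM + 2].astype(np.int64),  # speaker index
    }
```

Casting spk to an integer type here mirrors the conversion that the note attributes to the reader.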

- Define a new model (and an accompanying trainer) and then specify the --model and --trainer of main.py.

- Tip: when creating a new trainer, override _optimize() and the main loop in train().

Code organization

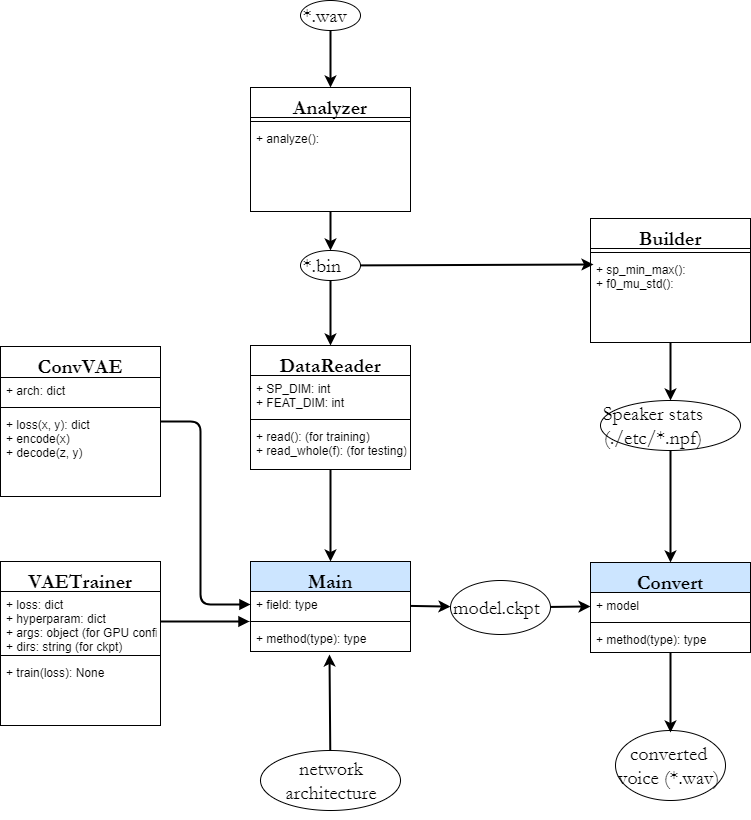

This isn't a UML diagram; rather, the arrows indicate input-output relations only.


- WORLD vocoder is chosen in this repo instead of STRAIGHT because the former is open-sourced whereas the latter isn't. I use pyworld, a Python wrapper of WORLD, in this repo.

- Global variance post-filtering was not included in this repo.

- In our VAE-NPVC paper, we didn't apply the [-1, 1] normalization; we did in our VAWGAN-NPVC paper.
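
For reference, a [-1, 1] normalization of the kind mentioned above could be sketched as follows. This is an assumption about its form (a per-bin min-max scaling using extrema such as those recorded by build.py), not the code used in either paper. The function names and the clipping step are illustrative.

```python
import numpy as np


def normalize(x, xmin, xmax):
    """Scale x to [-1, 1] per frequency bin using the given extrema."""
    # Assumption: xmin and xmax are per-bin minima and maxima with xmax > xmin.
    unit = (x - xmin) / (xmax - xmin)          # map to [0, 1]
    return np.clip(unit, 0.0, 1.0) * 2.0 - 1.0


def denormalize(y, xmin, xmax):
    """Invert normalize() for values that were not clipped."""
    return (y + 1.0) / 2.0 * (xmax - xmin) + xmin
```

Values outside the recorded extrema are clipped, so denormalize() only recovers inputs that were inside [xmin, xmax].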

The original code base was built in March 2016.

Tensorflow was in version 0.10 or earlier at the time, so I decided to refactor my code and put it in this repo.

- util submodule (add to README)

- GV

- build.py should accept subsets of speakers

In this paper, we propose a flexible framework for spectral conversion (SC) that facilitates training with unaligned corpora. Many SC frameworks require parallel corpora, phonetic alignments, or explicit frame-wise correspondence for learning conversion functions or for synthesizing a target spectrum with the aid of alignments. However, these requirements gravely limit the scope of practical applications of SC due to scarcity or even unavailability of parallel corpora. We propose an SC framework based on variational auto-encoder which enables us to exploit non-parallel corpora. The framework comprises an encoder that learns speaker-independent phonetic representations and a decoder that learns to reconstruct the designated speaker. It removes the requirement of parallel corpora or phonetic alignments to train a spectral conversion system. We report objective and subjective evaluations to validate our proposed method and compare it to SC methods that have access to aligned corpora.

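To improve the converted result, the pitch can be transformed as well. The following function maps the source speaker's F0 to the target speaker's range by matching the mean and standard deviation of log-F0; frames with an F0 of 1 or less (unvoiced) are left unchanged.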

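    import os

    import numpy as np
    import tensorflow as tf

    def convert_f0(f0, src, trg):
        # Each {speaker}.npf is expected to hold the mean and std of that speaker's log-F0.
        mu_s, std_s = np.fromfile(os.path.join('./etc', '{}.npf'.format(src)), np.float32)
        mu_t, std_t = np.fromfile(os.path.join('./etc', '{}.npf'.format(trg)), np.float32)
        # Values of 1 or less (unvoiced frames) pass through every step unchanged.
        lf0 = tf.where(f0 > 1., tf.log(f0), f0)
        lf0 = tf.where(lf0 > 1., (lf0 - mu_s)/std_s * std_t + mu_t, lf0)
        lf0 = tf.where(lf0 > 1., tf.exp(lf0), lf0)
        return lf0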

With these transformations applied, the converted output remained perceptibly close to the original sound in quality, so the encoding can be considered valid for this audio sample.
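
The same log-domain mean and variance matching can be sketched in plain NumPy, which may be easier to check outside a TensorFlow graph. This is an illustrative variant rather than the code used in this repo: it takes the statistics as arguments instead of reading the .npf files, and it selects voiced frames once with f0 > 0 instead of re-checking a threshold after each step. The name convert_f0_np is hypothetical.

```python
import numpy as np


def convert_f0_np(f0, mu_s, std_s, mu_t, std_t):
    """Map source F0 (Hz) onto the target speaker's log-F0 statistics."""
    f0 = np.asarray(f0, dtype=np.float64)
    voiced = f0 > 0                        # unvoiced frames are stored as 0
    out = np.zeros_like(f0)                # unvoiced frames stay at 0
    lf0 = np.log(f0[voiced])
    # Standardize with the source stats, then rescale with the target stats.
    out[voiced] = np.exp((lf0 - mu_s) / std_s * std_t + mu_t)
    return out
```

Here mu_s, std_s, mu_t and std_t are assumed to be the mean and standard deviation of log-F0 for the source and target speakers, matching how they are used in the function above.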

Chin-Cheng Hsu, Hsin-Te Hwang, Yi-Chiao Wu, Yu Tsao, and Hsin-Min Wang. Voice Conversion from Non-parallel Corpora Using Variational Auto-encoder. (Hsu, Hwang, Wu, and Wang: Institute of Information Science, Academia Sinica, Taipei, Taiwan.)

My name is Nima Azimi, and I am an M.Sc. student in biomedical engineering. I chose this type of project because of the importance of signal data security and the need to keep such data safe.

In the end, I would like to thank Dr. Islami, who introduced me to this field and guided me.