Extract main article, main image and metadata from URL.


Node.js

```bash
npm i article-parser

# pnpm
pnpm i article-parser

# yarn
yarn add article-parser
```

```js
import { extract } from 'article-parser'

// with CommonJS environments
// const { extract } = require('article-parser/dist/cjs/article-parser.js')

const url = 'https://www.binance.com/en/blog/markets/15-new-years-resolutions-that-will-make-2022-your-best-year-yet-421499824684903249'

extract(url).then((article) => {
  console.log(article)
}).catch((err) => {
  console.trace(err)
})
```

Since Node.js v14, ECMAScript modules have become the official standard format. Just make sure your project uses ES modules (for example, `"type": "module"` in `package.json`) and enjoy the ES6 import/export syntax.
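Because `extract()` returns a Promise, several URLs can be processed concurrently. The sketch below is a hypothetical `extractAll` helper (not part of article-parser) built on `Promise.allSettled`, so one failing URL does not reject the whole batch. The extractor is passed in as a parameter, which keeps the sketch runnable with a stub instead of real network calls.

```js
// Hypothetical helper (not part of article-parser): run an extractor over many URLs.
// `extractFn` is expected to behave like `extract`: take a URL, return a Promise.
// A failed URL resolves to null instead of rejecting the whole batch.
const extractAll = async (urls, extractFn) => {
  const settled = await Promise.allSettled(urls.map((url) => extractFn(url)))
  return settled.map((result, i) => {
    if (result.status === 'fulfilled') {
      return result.value
    }
    console.error(`Failed to extract ${urls[i]}:`, result.reason)
    return null
  })
}

// Usage (assumes article-parser is installed):
// import { extract } from 'article-parser'
// extractAll(['https://domain.com/a', 'https://domain.com/b'], extract).then(console.log)
```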

`extract(url)` loads and extracts article data from the given URL. It returns a Promise.

Example:

```js
import { extract } from 'article-parser'

const getArticle = async (url) => {
  try {
    const article = await extract(url)
    return article
  } catch (err) {
    console.trace(err)
    return null
  }
}

getArticle('https://domain.com/path/to/article')
```

If extraction succeeds, you should get an article object with the following structure:

```
{
  "url": URI String,
  "title": String,
  "description": String,
  "image": URI String,
  "author": String,
  "content": HTML String,
  "published": Date String,
  "source": String, // original publisher
  "links": Array, // list of alternative links
  "ttr": Number, // time to read in seconds, 0 = unknown
}
```

Click here to see an actual result.

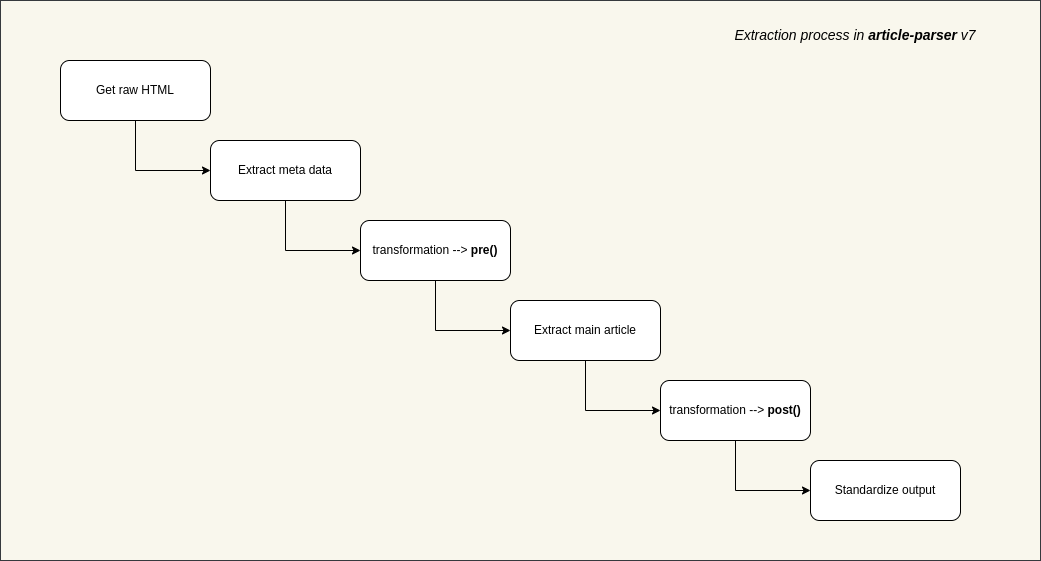
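The fields above can be consumed directly. As a minimal sketch, the hypothetical `summarize` helper below (not part of article-parser) builds a one-line summary from an article object, treating `ttr` as seconds (0 = unknown) and `published` as a date string that may fail to parse.

```js
// Hypothetical helper: one-line summary of an extracted article.
// Uses only documented fields: title, source, published, ttr (seconds, 0 = unknown).
const summarize = (article) => {
  // Round to whole minutes, but never show "0 min" for a non-zero ttr
  const minutes = article.ttr > 0 ? Math.max(1, Math.round(article.ttr / 60)) : null
  const readingTime = minutes === null ? 'unknown reading time' : `${minutes} min read`

  // `published` is a date string; fall back when it is missing or unparsable
  const date = new Date(article.published)
  const published = Number.isNaN(date.getTime()) ? 'unknown date' : date.toISOString().slice(0, 10)

  return `${article.title} (${article.source}, ${published}, ${readingTime})`
}
```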

Sometimes the default extraction algorithm does not work well for a particular site. That is when we need transformations.

By adding functions that run before and after the main extraction step, we can get a better result for such sites.

Transformations are available since article-parser@7.0.0, as an improvement over `queryRule` in older versions.

To work with transformations, article-parser provides 2 public methods:

- addTransformations(Object transformation | Array transformations)
- removeTransformations(Array patterns)

First, let's look at the transformation object.

In article-parser, a transformation is an object with the following properties:

- patterns: required, a list of URLPattern objects
- pre: optional, a function to process the raw HTML
- post: optional, a function to process the extracted article

Basically, a transformation can be read like this:

- for URLs that match these `patterns`,
- run the `pre` function to normalize the raw HTML content,
- then extract the main article content from the normalized HTML, and if that succeeds,
- run the `post` function to normalize the extracted article content.
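To get a feel for which URLs a pattern list selects, here is a simplified stand-in for the matching step. It is an assumption for illustration only: article-parser uses the URL Pattern API, while this sketch just turns each `*` into a regular-expression wildcard, which is looser than real URL patterns. `toRegExp` and `matchesAny` are hypothetical names.

```js
// Simplified stand-in for URL pattern matching: '*' matches any run of characters.
// NOTE: this is NOT the URL Pattern API used by article-parser; it only
// approximates wildcard patterns like the ones in this README.
const toRegExp = (pattern) => {
  // Escape regex metacharacters except '*', then turn '*' into '.*'
  const escaped = pattern.replace(/[.+?^${}()|[\]\\]/g, '\\$&')
  return new RegExp(`^${escaped.replace(/\*/g, '.*')}$`)
}

// True when the URL matches at least one pattern in the list
const matchesAny = (url, patterns) => patterns.some((pattern) => toRegExp(pattern).test(url))
```

With the patterns `'*://*.domain.tld/*'` and `'*://domain.tld/articles/*'`, a URL such as `https://www.domain.tld/news/1` or `https://domain.tld/articles/42` is selected, while `https://domain.tld/about` is not.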

Here is an example transformation:

```js
{
  patterns: [
    '*://*.domain.tld/*',
    '*://domain.tld/articles/*'
  ],
  pre: (document) => {
    // remove every div.advertise-area and all of its following siblings from the raw HTML content
    document.querySelectorAll('.advertise-area').forEach((element) => {
      // skip elements already detached by an earlier iteration
      if (element.nodeName === 'DIV' && element.parentNode) {
        while (element.nextSibling) {
          element.parentNode.removeChild(element.nextSibling)
        }
        element.parentNode.removeChild(element)
      }
    })
    return document
  },
  post: (document) => {
    // with the extracted article, replace all h4 tags with h2
    document.querySelectorAll('h4').forEach((element) => {
      const h2Element = document.createElement('h2')
      h2Element.innerHTML = element.innerHTML
      element.parentNode.replaceChild(h2Element, element)
    })
    // change small-sized images to their original version
    document.querySelectorAll('img').forEach((element) => {
      const src = element.getAttribute('src')
      // images without a src attribute return null, so guard before calling includes()
      if (src && src.includes('domain.tld/pics/150x120/')) {
        const fullSrc = src.replace('/pics/150x120/', '/pics/original/')
        element.setAttribute('src', fullSrc)
      }
    })
    return document
  }
}
```

- For the pattern syntax, see the URL Pattern API.

- To write better transformation logic, refer to the Document Object.

`addTransformations()` adds a single transformation or a list of transformations. For example:

```js
import { addTransformations } from 'article-parser'

addTransformations({
  patterns: [
    '*://*.abc.tld/*'
  ],
  pre: (document) => {
    // do something with document
    return document
  },
  post: (document) => {
    // do something with document
    return document
  }
})

addTransformations([
  {
    patterns: [
      '*://*.def.tld/*'
    ],
    pre: (document) => {
      // do something with document
      return document
    },
    post: (document) => {
      // do something with document
      return document
    }
  },
  {
    patterns: [
      '*://*.xyz.tld/*'
    ],
    pre: (document) => {
      // do something with document
      return document
    },
    post: (document) => {
      // do something with document
      return document
    }
  }
])
```

Transformations without `patterns` are ignored.

`removeTransformations()` removes the transformations that match the given patterns.

For example, to remove all the transformations added above:

```js
import { removeTransformations } from 'article-parser'

removeTransformations([
  '*://*.abc.tld/*',
  '*://*.def.tld/*',
  '*://*.xyz.tld/*'
])
```

Calling `removeTransformations()` without a parameter removes all current transformations.
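The add/remove behavior described above can be modeled with a small in-memory registry. This is a hypothetical sketch, not article-parser's source: `createRegistry` and `getTransformations` are made-up names, the returned counts are only an illustration, and the sketch assumes a transformation is removed when any of its patterns equals one of the patterns passed in.

```js
// Hypothetical in-memory registry modeling addTransformations/removeTransformations.
// Assumption: a transformation is removed if ANY of its patterns equals a given pattern.
const createRegistry = () => {
  let transformations = []

  // Accepts a single transformation or an array; returns how many were added
  const addTransformations = (input) => {
    const list = Array.isArray(input) ? input : [input]
    // Transformations without patterns are ignored
    const valid = list.filter((t) => t && Array.isArray(t.patterns) && t.patterns.length > 0)
    transformations.push(...valid)
    return valid.length
  }

  // Removes by pattern, or everything when called without an argument; returns how many were removed
  const removeTransformations = (patterns) => {
    const before = transformations.length
    if (!patterns) {
      transformations = []
    } else {
      transformations = transformations.filter((t) => !t.patterns.some((p) => patterns.includes(p)))
    }
    return before - transformations.length
  }

  // Returns a copy so callers cannot mutate the registry directly
  const getTransformations = () => [...transformations]

  return { addTransformations, removeTransformations, getTransformations }
}
```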

While processing an article, more than one transformation can be applied.

Suppose that we have the following transformations:

```js
[
  {
    patterns: [
      '*://google.com/*',
      '*://goo.gl/*'
    ],
    pre: function_one,
    post: function_two
  },
  {
    patterns: [
      '*://goo.gl/*',
      '*://google.inc/*'
    ],
    pre: function_three,
    post: function_four
  }
]
```

As you can see, an article from goo.gl matches both of them.

In this scenario, article-parser will execute both transformations, one by one:

function_one -> function_three -> extraction -> function_two -> function_four
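The execution order above can be sketched as a function that collects the matching transformations, chains their `pre` functions, runs extraction, and then chains their `post` functions. This is a hypothetical, synchronous sketch rather than article-parser's implementation; `runTransformations` is a made-up name, and `matches` and `extractFn` are injected stand-ins for URL matching and the real (asynchronous) extraction step.

```js
// Hypothetical sketch of the pre -> extraction -> post order; not article-parser's code.
// `matches(url, patterns)` and `extractFn(input)` are injected stand-ins.
const runTransformations = (url, input, transformations, matches, extractFn) => {
  // Keep registration order: earlier transformations run first
  const applied = transformations.filter((t) => matches(url, t.patterns))

  // 1. every matching `pre`, one by one
  const normalized = applied.reduce((doc, t) => (t.pre ? t.pre(doc) : doc), input)

  // 2. the main extraction step
  const extracted = extractFn(normalized)
  if (!extracted) {
    // `post` only runs when extraction succeeds
    return null
  }

  // 3. every matching `post`, one by one
  return applied.reduce((doc, t) => (t.post ? t.post(doc) : doc), extracted)
}
```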

In addition, this library provides some methods to customize the default settings. Don't change them unless you have a reason to.

- getParserOptions()

- setParserOptions(Object parserOptions)

- getRequestOptions()

- setRequestOptions(Object requestOptions)

- getSanitizeHtmlOptions()

- setSanitizeHtmlOptions(Object sanitizeHtmlOptions)

- getHtmlCrushOptions()

- setHtmlCrushOptions(Object htmlCrushOptions)

Here are the default properties/values.

Parser options:

```js
{
  wordsPerMinute: 300, // to estimate "time to read"
  urlsCompareAlgorithm: 'levenshtein', // to find the best URL from a list
  descriptionLengthThreshold: 40, // min number of chars required for the description
  descriptionTruncateLen: 156, // max number of chars generated for the description
  contentLengthThreshold: 200 // content must have at least 200 chars
}
```

Read the string-comparison docs for more info about `urlsCompareAlgorithm`.
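To make these options concrete, here is a minimal sketch of how `wordsPerMinute`, `descriptionLengthThreshold` and `descriptionTruncateLen` could drive a time-to-read estimate and a fallback description. The helper names (`estimateTimeToRead`, `pickDescription`) and the formulas are assumptions for illustration, not article-parser's exact code.

```js
// Hypothetical helpers; formulas are illustrative assumptions, not article-parser's code.
const parserOptions = {
  wordsPerMinute: 300,
  descriptionLengthThreshold: 40,
  descriptionTruncateLen: 156
}

// Time to read in seconds from a plain-text word count (0 for empty text)
const estimateTimeToRead = (text, { wordsPerMinute } = parserOptions) => {
  const words = text.trim().split(/\s+/).filter(Boolean).length
  return Math.round((words / wordsPerMinute) * 60)
}

// Keep a long-enough description; otherwise build one from the content text,
// truncated so the result never exceeds descriptionTruncateLen chars
const pickDescription = (description, text, opts = parserOptions) => {
  if (description && description.length >= opts.descriptionLengthThreshold) {
    return description
  }
  const clean = text.replace(/\s+/g, ' ').trim()
  return clean.length > opts.descriptionTruncateLen
    ? clean.slice(0, opts.descriptionTruncateLen - 3) + '...'
    : clean
}
```

Under these assumptions, a 600-word article at 300 words per minute gives a `ttr` of 120 seconds.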

Request options:

```js
{
  headers: {
    'user-agent': 'Mozilla/5.0 (X11; Linux x86_64; rv:102.0) Gecko/20100101 Firefox/102.0',
    accept: 'text/html; charset=utf-8'
  },
  responseType: 'text',
  responseEncoding: 'utf8',
  timeout: 6e4,
  maxRedirects: 3
}
```

Read axios' request config docs for more info.

sanitize-html options:

```js
{
  allowedTags: [
    'h1', 'h2', 'h3', 'h4', 'h5',
    'u', 'b', 'i', 'em', 'strong', 'small', 'sup', 'sub',
    'div', 'span', 'p', 'article', 'blockquote', 'section',
    'details', 'summary',
    'pre', 'code',
    'ul', 'ol', 'li', 'dd', 'dl',
    'table', 'th', 'tr', 'td', 'thead', 'tbody', 'tfoot',
    'fieldset', 'legend',
    'figure', 'figcaption', 'img', 'picture',
    'video', 'audio', 'source',
    'iframe',
    'progress',
    'br', 'p', 'hr',
    'label',
    'abbr',
    'a',
    'svg'
  ],
  allowedAttributes: {
    a: ['href', 'target', 'title'],
    abbr: ['title'],
    progress: ['value', 'max'],
    img: ['src', 'srcset', 'alt', 'width', 'height', 'style', 'title'],
    picture: ['media', 'srcset'],
    video: ['controls', 'width', 'height', 'autoplay', 'muted'],
    audio: ['controls'],
    source: ['src', 'srcset', 'data-srcset', 'type', 'media', 'sizes'],
    iframe: ['src', 'frameborder', 'height', 'width', 'scrolling'],
    svg: ['width', 'height']
  },
  allowedIframeDomains: ['youtube.com', 'vimeo.com']
}
```

Read the sanitize-html docs for more info.
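`allowedIframeDomains` restricts where embedded iframes may point. The sketch below approximates that check, assuming a host is allowed when it equals a listed domain or is a subdomain of it. `isAllowedIframe` is a hypothetical helper, not sanitize-html's implementation, and it simply rejects relative URLs.

```js
// Illustrative approximation of a domain allowlist check; not sanitize-html's code.
const allowedIframeDomains = ['youtube.com', 'vimeo.com']

const isAllowedIframe = (src, domains = allowedIframeDomains) => {
  let hostname
  try {
    hostname = new URL(src).hostname
  } catch {
    return false // not an absolute URL, rejected in this sketch
  }
  // Exact domain or any subdomain of it; 'notyoutube.com' does not match 'youtube.com'
  return domains.some((domain) => hostname === domain || hostname.endsWith(`.${domain}`))
}
```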

html-crush options:

```js
{
  removeLineBreaks: true,
  removeHTMLComments: 2
}
```

For more options, please refer to the html-crush docs.

To run the tests locally:

```bash
git clone https://github.com/ndaidong/article-parser.git
cd article-parser
npm install
npm test

# quick evaluation
npm run eval {URL_TO_PARSE_ARTICLE}
```

The MIT License (MIT)