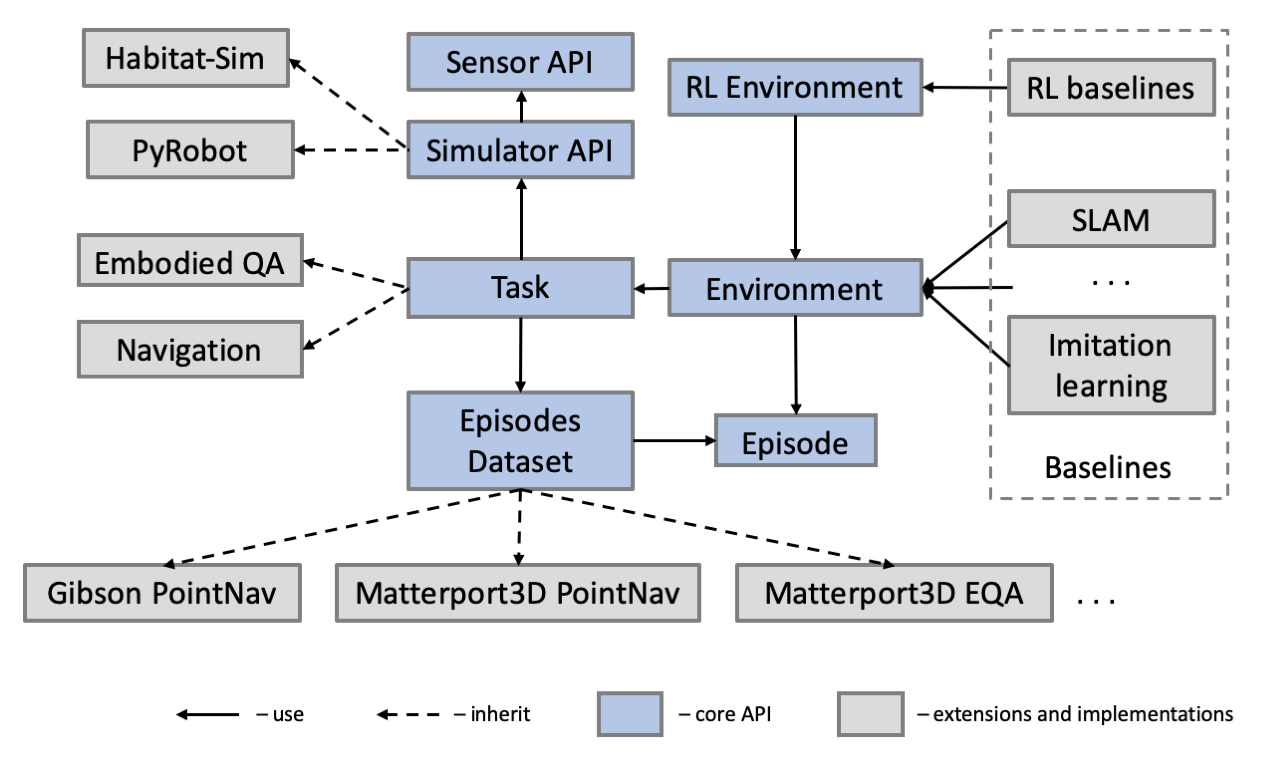

Habitat Lab is a modular high-level library for end-to-end development in embodied AI – defining embodied AI tasks (e.g. navigation, instruction following, question answering), configuring embodied agents (physical form, sensors, capabilities), training these agents (via imitation or reinforcement learning, or no learning at all as in classical SLAM), and benchmarking their performance on the defined tasks using standard metrics.

Habitat Lab currently uses Habitat-Sim as the core simulator, but is designed with a modular abstraction for the simulator backend to maintain compatibility across multiple simulators. For documentation, refer here.

We also have a dev Slack channel; please follow this link to get added to it. If you want to contribute PRs or run into issues with Habitat, please reach out to us through GitHub issues or the Slack channel.

- Motivation

- Citing Habitat

- Installation

- Example

- Documentation

- Docker Setup

- Details

- Data

- Baselines

- License

- Acknowledgments

- References

While there has been significant progress in the vision and language communities thanks to recent advances in deep representations, we believe there is a growing disconnect between ‘internet AI’ and embodied AI. The focus of the former is pattern recognition in images, videos, and text on datasets typically curated from the internet. The focus of the latter is to enable action by an embodied agent in an environment (e.g. a robot). This brings to the forefront issues of active perception, long-term planning, learning from interaction, and holding a dialog grounded in an environment.

To this end, we aim to standardize the entire ‘software stack’ for training embodied agents – scanning the world and creating highly photorealistic 3D assets, developing the next generation of highly efficient and parallelizable simulators, specifying embodied AI tasks that enable us to benchmark scientific progress, and releasing modular high-level libraries to train and deploy embodied agents.

If you use the Habitat platform in your research, please cite the Habitat and Habitat 2.0 papers:

```bibtex
@inproceedings{szot2021habitat,
  title     = {Habitat 2.0: Training Home Assistants to Rearrange their Habitat},
  author    = {Andrew Szot and Alex Clegg and Eric Undersander and Erik Wijmans and Yili Zhao and John Turner and Noah Maestre and Mustafa Mukadam and Devendra Chaplot and Oleksandr Maksymets and Aaron Gokaslan and Vladimir Vondrus and Sameer Dharur and Franziska Meier and Wojciech Galuba and Angel Chang and Zsolt Kira and Vladlen Koltun and Jitendra Malik and Manolis Savva and Dhruv Batra},
  booktitle = {Advances in Neural Information Processing Systems (NeurIPS)},
  year      = {2021}
}

@inproceedings{habitat19iccv,
  title     = {Habitat: {A} {P}latform for {E}mbodied {AI} {R}esearch},
  author    = {Manolis Savva and Abhishek Kadian and Oleksandr Maksymets and Yili Zhao and Erik Wijmans and Bhavana Jain and Julian Straub and Jia Liu and Vladlen Koltun and Jitendra Malik and Devi Parikh and Dhruv Batra},
  booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},
  year      = {2019}
}
```

- Clone a stable version of habitat-lab from the GitHub repository and install it using the commands below. Note that python>=3.7 is required; all development and testing was done with Python 3.7, so please use 3.7 to avoid possible issues.

```bash
git clone --branch stable https://github.com/facebookresearch/habitat-lab.git
cd habitat-lab
pip install -e .
```

The commands above install only the core of Habitat Lab. To include habitat_baselines along with all additional requirements, use the commands below instead:

```bash
git clone --branch stable https://github.com/facebookresearch/habitat-lab.git
cd habitat-lab
pip install -r requirements.txt
python setup.py develop --all # install habitat and habitat_baselines
```
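Because the notes above set a floor of python>=3.7 and recommend 3.7 specifically, a setup script could check the interpreter before installing. The following is a minimal sketch; `check_python_version` is a hypothetical helper, not part of habitat-lab.

```python
import sys
import warnings

# Minimum and tested versions, taken from the installation notes above.
MIN_VERSION = (3, 7)
TESTED_VERSION = (3, 7)


def check_python_version(version_info=None):
    """Return False if below the minimum; warn (but return True) if untested."""
    # sys.version_info and plain tuples both support slicing to (major, minor).
    major_minor = tuple((version_info or sys.version_info)[:2])
    if major_minor < MIN_VERSION:
        return False
    if major_minor != TESTED_VERSION:
        warnings.warn(
            f"Python {major_minor[0]}.{major_minor[1]} is untested; "
            "habitat-lab was developed and tested on Python 3.7."
        )
    return True
```

Calling `check_python_version()` with no argument checks the running interpreter; passing a tuple such as `(3, 6, 9)` is handy for testing.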

- Install habitat-sim. For a machine with an attached display:

```bash
conda install habitat-sim withbullet -c conda-forge -c aihabitat
```

For a machine with multiple GPUs or without an attached display (i.e. a cluster):

```bash
conda install habitat-sim withbullet headless -c conda-forge -c aihabitat
```

See habitat-sim's installation instructions for more details. macOS does not work with `headless`, so exclude that argument on macOS.

- Run the example script:

```bash
python examples/example.py
```

It should finish by printing the number of steps the agent took inside the environment (e.g. `Episode finished after 18 steps.`).

- Download the habitat-test-scenes dataset for the PointNav task:

```bash
python -m habitat_sim.utils.datasets_download --uids habitat_test_scenes --data-path /path/to/data/
python -m habitat_sim.utils.datasets_download --uids habitat_example_objects --data-path /path/to/data/
python -m habitat_sim.utils.datasets_download --uids habitat_test_pointnav_dataset --data-path /path/to/data/
```
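If you prefer to script this step, the sketch below builds the same three commands (same module, `--uids`, and `--data-path` flags as above) and optionally runs them. It assumes habitat-sim is installed in the interpreter that runs the script; `download_command` and `download_all` are hypothetical helpers, not part of habitat-sim.

```python
import subprocess
import sys

# The three dataset uids used in the download step above.
TEST_UIDS = (
    "habitat_test_scenes",
    "habitat_example_objects",
    "habitat_test_pointnav_dataset",
)


def download_command(uid, data_path):
    """Build the argv for habitat-sim's dataset download module."""
    return [
        sys.executable, "-m", "habitat_sim.utils.datasets_download",
        "--uids", uid,
        "--data-path", data_path,
    ]


def download_all(data_path, uids=TEST_UIDS, dry_run=True):
    """Return one command per uid; actually run them only if dry_run is False."""
    commands = [download_command(uid, data_path) for uid in uids]
    if not dry_run:
        for cmd in commands:
            # check=True raises CalledProcessError on the first failed download.
            subprocess.run(cmd, check=True)
    return commands
```

Using `sys.executable` rather than a bare `python` ensures the download runs in the same environment where habitat-sim was installed.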

- Set `PYTHONPATH`:

```bash
export PYTHONPATH=<path to habitat-sim>:$PYTHONPATH
```

- Using the shortest path follower:

```bash
python examples/shortest_path_follower_example.py --out_dir <directory to store data> --num_episodes <# episodes to collect>
```

This script collects data according to the episode structure specified by the dataset loaded for the particular task.

A separate notebook (`data_collection_test_script.ipynb`) can collect data automatically/randomly in a specified `.glb` file or environment.

- Using RL: first ensure that habitat-lab is installed with habitat-baselines, then run:

```bash
python -u habitat_baselines/run.py --exp-config <path-to-training-config-file> --run-type train
```

Currently tested with:

```bash
python -u habitat_baselines/run.py --exp-config habitat_baselines/config/pointnav/ppo_pointnav_example.yaml --run-type train
```

This currently collects data only according to the episode structure specified in the task dataset. More work is needed to generalize it to a specified scene/`.glb` file.

🆕 Example code snippet that uses `configs/tasks/rearrange/pick.yaml` to configure the task and agent:

```python
import habitat

# Load embodied AI task (RearrangePick) and a pre-specified virtual robot
env = habitat.Env(
    config=habitat.get_config("configs/tasks/rearrange/pick.yaml")
)

observations = env.reset()

# Step through environment with random actions
while not env.episode_over:
    observations = env.step(env.action_space.sample())
```

See `examples/register_new_sensors_and_measures.py` for an example of how to extend habitat-lab from outside the source code.
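The snippet above needs habitat-sim and the rearrange data to run. To exercise the same reset/step control flow without them, here is a toy stand-in that mimics only the names the snippet uses (`reset()`, `step()`, `episode_over`, `action_space.sample()`). `ToyEnv`, `ToyActionSpace`, and the string actions are hypothetical and are not Habitat's implementation; real Habitat action spaces and observations look different.

```python
import random


class ToyActionSpace:
    """Hypothetical stand-in for env.action_space: samples a discrete action."""

    def __init__(self, actions, seed=0):
        self.actions = list(actions)
        self._rng = random.Random(seed)  # seeded for reproducible runs

    def sample(self):
        return self._rng.choice(self.actions)


class ToyEnv:
    """Hypothetical env: the episode ends on "stop" or after max_steps steps."""

    def __init__(self, max_steps=50, seed=0):
        self.max_steps = max_steps
        self.action_space = ToyActionSpace(
            ["move_forward", "turn_left", "turn_right", "stop"], seed
        )
        self.episode_over = True  # no episode until reset() is called
        self.steps = 0

    def reset(self):
        self.steps = 0
        self.episode_over = False
        return {"step": self.steps}

    def step(self, action):
        if self.episode_over:
            raise RuntimeError("Episode is over; call reset() first")
        self.steps += 1
        if action == "stop" or self.steps >= self.max_steps:
            self.episode_over = True
        return {"step": self.steps, "action": action}


def run_random_episode(env):
    """Same loop as the snippet above: reset, then act randomly until done."""
    observations = env.reset()
    while not env.episode_over:
        observations = env.step(env.action_space.sample())
    return env.steps


if __name__ == "__main__":
    print(f"Episode finished after {run_random_episode(ToyEnv())} steps.")
```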

Habitat Lab documentation is available here.

For example, see this page for a quickstart example.

We also provide a Docker setup for Habitat. It works on machines with an NVIDIA GPU and requires users to install nvidia-docker. The following Dockerfile was used to build the Habitat Docker image. To set up the Habitat stack using Docker, follow the steps below:

- Pull the habitat docker image:

```bash
docker pull fairembodied/habitat:latest
```

- Start an interactive bash session inside the habitat docker:

```bash
docker run --runtime=nvidia -it fairhabitat/habitat:v1
```

- Activate the habitat conda environment:

```bash
source activate habitat
```

- Benchmark a forward-only agent on the test scenes data:

```bash
cd habitat-api; python examples/benchmark.py
```

This should print output like:

```
2019-02-25 02:39:48,680 initializing sim Sim-v0
2019-02-25 02:39:49,655 initializing task Nav-v0
spl: 0.000
```

An important objective of Habitat Lab is to make it easy for users to set up a variety of embodied agent tasks in 3D environments. The process of setting up a task involves using environment information provided by the simulator, connecting the information with a dataset (e.g. PointGoal targets, or question and answer pairs for Embodied QA) and providing observations which can be used by the agents. Keeping this primary objective in mind, the core API defines the following key concepts as abstractions that can be extended:

- Env: the fundamental environment concept for Habitat. All the information needed for working on embodied tasks with a simulator is abstracted inside an Env. This class acts as a base for other derived environment classes. Env consists of three major components (a Simulator, a Dataset containing Episodes, and a Task) and serves to connect all three together.

- Dataset: contains a list of task-specific episodes from a particular data split and additional dataset-wide information. Handles loading and saving of a dataset to disk, getting a list of scenes, and getting a list of episodes for a particular scene.

- Episode: a class for episode specification that includes the initial position and orientation of an Agent, a scene id, a goal position and optionally shortest paths to the goal. An episode is a description of one task instance for the agent.

- Task: this class builds on top of the simulator and dataset. The criteria of episode termination and measures of success are provided by the Task.

- Sensor: a generalization of the physical Sensor concept provided by a Simulator, with the capability to provide Task-specific Observation data in a specified format.

- Observation: data representing an observation from a Sensor. This can correspond to physical sensors on an Agent (e.g. RGB, depth, semantic segmentation masks, collision sensors) or more abstract sensors such as the current agent state.

Architecture of Habitat Lab
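To make the relationships among these concepts concrete, here is a toy, self-contained sketch: an Env that ties stub simulator state (just an agent position) to a Dataset of Episodes and a Task that decides termination and success, with a "sensor" producing a PointGoal-style observation. All class, field, and method names here are illustrative assumptions and may not match habitat-lab's actual classes or signatures.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Episode:
    """One task instance: scene, start pose, goal (illustrative fields)."""
    episode_id: str
    scene_id: str
    start_position: Vec3
    start_rotation: Tuple[float, float, float, float]  # quaternion
    goal_position: Vec3
    shortest_path: Optional[List[Vec3]] = None


@dataclass
class Dataset:
    """Episodes from one split, with scene-level lookup."""
    episodes: List[Episode] = field(default_factory=list)

    def get_scenes(self) -> List[str]:
        return sorted({ep.scene_id for ep in self.episodes})

    def get_scene_episodes(self, scene_id: str) -> List[Episode]:
        return [ep for ep in self.episodes if ep.scene_id == scene_id]


class Task:
    """Supplies the termination criterion / success measure."""

    def __init__(self, success_distance: float = 0.2):
        self.success_distance = success_distance

    def is_success(self, position: Vec3, episode: Episode) -> bool:
        dist = sum((p - g) ** 2 for p, g in zip(position, episode.goal_position)) ** 0.5
        return dist <= self.success_distance


class MiniEnv:
    """Connects stub simulator state, a Dataset, and a Task."""

    def __init__(self, dataset: Dataset, task: Task):
        self.dataset = dataset
        self.task = task
        self._episodes = iter(dataset.episodes)
        self.current_episode: Optional[Episode] = None
        self.agent_position: Vec3 = (0.0, 0.0, 0.0)  # stands in for the simulator

    def reset(self) -> Dict[str, Vec3]:
        self.current_episode = next(self._episodes)
        self.agent_position = self.current_episode.start_position
        return self.observe()

    def observe(self) -> Dict[str, Vec3]:
        # "Sensor": turn simulator state into a task-specific observation,
        # here the goal position relative to the agent.
        goal = self.current_episode.goal_position
        return {"pointgoal": tuple(g - p for g, p in zip(goal, self.agent_position))}

    def step(self, displacement: Vec3) -> Dict[str, Vec3]:
        self.agent_position = tuple(
            p + d for p, d in zip(self.agent_position, displacement)
        )
        return self.observe()

    @property
    def episode_over(self) -> bool:
        return self.task.is_success(self.agent_position, self.current_episode)
```

Keeping the Task separate from the Env means the same episodes and simulator state can be scored under different success criteria just by swapping the Task.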

Note that the core functionality defines fundamental building blocks such as the API for interacting with the simulator backend, and receiving observations through Sensors. Concrete simulation backends, 3D datasets, and embodied agent baselines are implemented on top of the core API.

To make things easier, we expect a `data` folder (or a symlink to one) with a particular structure to be present in the habitat-lab working directory.

| Scene models | Extract path | Archive size |
|---|---|---|
| Habitat test scenes | `data/scene_datasets/habitat-test-scenes/{scene}.glb` | 89 MB |
| 🆕ReplicaCAD | `data/scene_datasets/replica_cad/configs/scenes/{scene}.scene_instance.json` | 123 MB |
| 🆕HM3D | `data/scene_datasets/hm3d/{split}/00\d\d\d-{scene}/{scene}.basis.glb` | 130 GB |
| Gibson | `data/scene_datasets/gibson/{scene}.glb` | 1.5 GB |
| MatterPort3D | `data/scene_datasets/mp3d/{scene}/{scene}.glb` | 15 GB |
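The expected layout in the table above can also be checked programmatically before launching a run. The sketch below fills in the table's path templates and reports which expected scene files are missing. `scene_path`, `missing_scenes`, and the dataset keys are hypothetical names chosen for illustration; the HM3D directory prefix `00\d\d\d-` is interpreted as a zero-padded index from 0 to 999.

```python
from pathlib import Path
from typing import Optional

# Path templates copied from the table above (relative to the working directory).
SCENE_PATHS = {
    "habitat-test-scenes": "data/scene_datasets/habitat-test-scenes/{scene}.glb",
    "replica_cad": "data/scene_datasets/replica_cad/configs/scenes/{scene}.scene_instance.json",
    "gibson": "data/scene_datasets/gibson/{scene}.glb",
    "mp3d": "data/scene_datasets/mp3d/{scene}/{scene}.glb",
}


def scene_path(dataset: str, scene: str, split: Optional[str] = None,
               index: Optional[int] = None, root: str = ".") -> Path:
    """Return the expected path of a scene file under `root`."""
    if dataset == "hm3d":
        # The table's "00\d\d\d-" prefix is five digits starting with "00",
        # i.e. an index in 0..999 zero-padded to width 5.
        if split is None or index is None or not 0 <= index <= 999:
            raise ValueError("hm3d needs a split and an index in 0..999")
        rel = f"data/scene_datasets/hm3d/{split}/{index:05d}-{scene}/{scene}.basis.glb"
    else:
        rel = SCENE_PATHS[dataset].format(scene=scene)
    return Path(root) / rel


def missing_scenes(requests, root="."):
    """List expected scene files (given as kwargs dicts) not present under `root`."""
    paths = (scene_path(root=root, **req) for req in requests)
    return [p for p in paths if not p.exists()]
```

For example, `missing_scenes([{"dataset": "gibson", "scene": "my_scene"}])` returns an empty list only if `data/scene_datasets/gibson/my_scene.glb` exists in the working directory.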

These datasets can be downloaded by following the instructions here.

To download the point goal navigation episodes for the habitat test scenes, use the download api from habitat-sim.

```bash
python -m habitat_sim.utils.datasets_download --uids habitat_test_pointnav_dataset --data-path data/
```

To use an episode dataset, provide the related config to the Env in the example, or use the config for RL agent training.

Habitat Lab includes reinforcement learning (via PPO) and classical SLAM-based baselines. For running PPO training on sample data and for more details, refer to habitat_baselines/README.md.

Habitat Lab supports deployment of models on a physical robot through PyRobot (https://github.com/facebookresearch/pyrobot). Please install the python3 version of PyRobot and refer to habitat.sims.pyrobot.pyrobot for instructions. This functionality allows deployment of models across simulation and reality.

ROS-X-Habitat (https://github.com/ericchen321/ros_x_habitat) is a framework that bridges the AI Habitat platform (Habitat Lab + Habitat Sim) with other robotics resources via ROS. Compared with Habitat-PyRobot, ROS-X-Habitat places emphasis on 1) leveraging Habitat Sim v2's physics-based simulation capability and 2) allowing roboticists to access simulation assets from ROS. The work has also been made public as a paper.

Note that ROS-X-Habitat was developed and is maintained by the Lab for Computational Intelligence at UBC; it is not yet officially supported by the Habitat Lab team. Please refer to the framework's repository for docs and discussions.

The Habitat project would not have been possible without the support and contributions of many individuals. We would like to thank Dmytro Mishkin, Xinlei Chen, Georgia Gkioxari, Daniel Gordon, Leonidas Guibas, Saurabh Gupta, Or Litany, Marcus Rohrbach, Amanpreet Singh, Devendra Singh Chaplot, Yuandong Tian, and Yuxin Wu for many helpful conversations and guidance on the design and development of the Habitat platform.

Habitat Lab is MIT licensed. See the LICENSE file for details.

The trained models and the task datasets are considered data derived from the corresponding scene datasets.

- Matterport3D based task datasets and trained models are distributed with Matterport3D Terms of Use and under CC BY-NC-SA 3.0 US license.

- Gibson based task datasets, the code for generating such datasets, and trained models are distributed with Gibson Terms of Use and under CC BY-NC-SA 3.0 US license.

- 🆕Habitat 2.0: Training Home Assistants to Rearrange their Habitat. Andrew Szot, Alex Clegg, Eric Undersander, Erik Wijmans, Yili Zhao, John Turner, Noah Maestre, Mustafa Mukadam, Devendra Chaplot, Oleksandr Maksymets, Aaron Gokaslan, Vladimir Vondrus, Sameer Dharur, Franziska Meier, Wojciech Galuba, Angel Chang, Zsolt Kira, Vladlen Koltun, Jitendra Malik, Manolis Savva, Dhruv Batra. Advances in Neural Information Processing Systems (NeurIPS), 2021.

- Habitat: A Platform for Embodied AI Research. Manolis Savva, Abhishek Kadian, Oleksandr Maksymets, Yili Zhao, Erik Wijmans, Bhavana Jain, Julian Straub, Jia Liu, Vladlen Koltun, Jitendra Malik, Devi Parikh, Dhruv Batra. IEEE/CVF International Conference on Computer Vision (ICCV), 2019.