The official PyTorch implementation for the following paper:

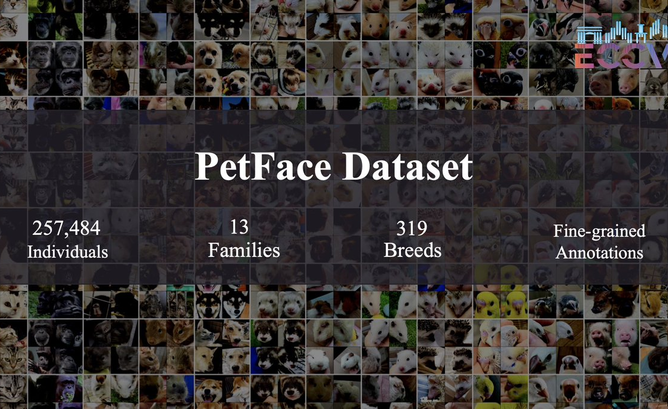

PetFace: A Large-Scale Dataset and Benchmark for Animal Identification,

Risa Shinoda* and Kaede Shiohara* (*Equal contribution),

ECCV 2024 Oral (with three Strong Accepts!!!)

TL;DR: We established a large-scale animal identification dataset with more than 250k IDs across 13 families.

Our PetFace dataset, the code in this repository, and the pretrained models are for non-commercial research purposes only.

[2024/10/07] The code for face alignment on your own images was released.

[2024/09/02] The installation instructions were updated.

[2024/08/14] PetFace was selected as an ORAL presentation at ECCV2024🎉

[2024/07/27] Pretrained models, training code, and evaluation code were released. The "split" folder was also updated.

[2024/07/19] This repository was released.

Fill in the Google form to request access to the dataset.

- We review the submitted forms manually, so it may take some time. Please be patient until the dataset link arrives.

- If you do not receive the link within 3 days, we may have missed your form or the form may not have been filled in correctly. In that case, please submit a correctly completed form again. (Please do not open an Issue about it.)

Place the dataset as follows:

. (Root of this repository)
└── data
    └── PetFace
        ├── images
        │   └── cat
        │       └── 000000
        │           └── 00.png
        ├── split
        │   └── cat
        │       ├── train.csv
        │       ├── val.txt
        │       ├── test.txt
        │       ├── reidentification.csv
        │       └── verification.csv
        └── annotations
            └── cat.csv

- train.csv: file names and ID labels for training

- val.txt: file names for validation (not used in this codebase)

- test.txt: file names for verification (not used in this codebase)

- verification.csv: pairs of file names to verify, with labels indicating whether each pair has the same ID

- reidentification.csv: file names and ID labels for re-identification
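Before running any script, it can help to confirm that the dataset sits where the layout above expects it. The following is a minimal sketch, not part of this repository: the function name `missing_petface_paths` is hypothetical, and it only checks that the paths from the layout exist, without reading or validating file contents.

```python
from pathlib import Path

# Files the layout above lists under split/<species>/.
SPLIT_FILES = (
    "train.csv",
    "val.txt",
    "test.txt",
    "reidentification.csv",
    "verification.csv",
)


def missing_petface_paths(root, species="cat"):
    """Return the expected paths that do not exist under root (e.g. data/PetFace)."""
    root = Path(root)
    expected = [
        root / "images" / species,
        root / "annotations" / f"{species}.csv",
    ]
    expected += [root / "split" / species / name for name in SPLIT_FILES]
    return [path for path in expected if not path.exists()]


if __name__ == "__main__":
    # Hypothetical usage: report anything missing for the cat subset.
    for path in missing_petface_paths("data/PetFace", "cat"):
        print(f"missing: {path}")
```

An empty result means every path from the layout is present for that species.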

- Pull a docker image:

docker pull pytorch/pytorch:1.12.0-cuda11.3-cudnn8-runtime

- Replace the path in exec.sh.

- Execute the Docker image:

bash exec.sh

- Install packages:

bash install.sh

Alternatively, without Docker, install the packages with pip:

pip install -r requirements.txt

Pretrained weights are provided on Google Drive.

For example, you can run the evaluation of re-identification for cat as follows:

CUDA_VISIBLE_DEVICES=0 python3 src/reidentification.py -m arcface -w pretrained/arcface/cat.pt -i data/PetFace/split/cat/reidentification.csv -o results/reidentification/arcface/cat.csv

Then, you can compute the top-k (k={1,2,3,4,5}) accuracy:

python3 src/compute_topk_acc.py --topk 5 -i results/reidentification/arcface/cat.csv
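For readers unfamiliar with the metric, top-k accuracy is the fraction of queries whose correct ID appears among the k best-ranked candidates. The sketch below illustrates that definition only. It is not the code in src/compute_topk_acc.py, and the input format (one ranked list of candidate IDs per query) is an assumption made for illustration, not the format of the results CSV.

```python
def topk_accuracy(ranked_ids, true_ids, max_k=5):
    """Return {k: accuracy} for k = 1..max_k.

    ranked_ids[i] lists the candidate IDs for query i, best match first
    (assumed input format); true_ids[i] is the correct ID for that query.
    """
    if len(ranked_ids) != len(true_ids) or not true_ids:
        raise ValueError("need exactly one ranking per query and at least one query")
    hits = [0] * max_k
    for ranking, truth in zip(ranked_ids, true_ids):
        for k in range(1, max_k + 1):
            # A query counts as a hit at k if the truth is in the first k candidates.
            if truth in ranking[:k]:
                hits[k - 1] += 1
    return {k: hits[k - 1] / len(true_ids) for k in range(1, max_k + 1)}


if __name__ == "__main__":
    # Toy data: the correct ID "a" is ranked 1st, 2nd, and 3rd respectively.
    ranked = [["a", "b", "c"], ["b", "a", "c"], ["c", "b", "a"]]
    print(topk_accuracy(ranked, ["a", "a", "a"], max_k=3))
```

By construction the accuracy never decreases as k grows, since a hit at k is also a hit at every larger k.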

For example, you can run the evaluation of verification for cat as follows:

CUDA_VISIBLE_DEVICES=0 python3 src/verification.py -w pretrained/arcface/cat.pt -i data/PetFace/split/cat/verification.csv -o results/verification/arcface/cat.csv

Then, you can compute AUC:

python3 src/compute_auc.py -i results/verification/arcface/cat.csv
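As background on the metric, the AUC for verification can be read as the probability that a randomly chosen same-ID pair receives a higher similarity score than a randomly chosen different-ID pair. The sketch below computes it directly from that definition. It is an illustration only, not the code in src/compute_auc.py, and the inputs (a 0/1 label and a similarity score per pair) are assumed rather than taken from the results CSV.

```python
def roc_auc(labels, scores):
    """AUC as the probability that a same-ID pair outscores a different-ID pair.

    labels: 1 for same-ID pairs, 0 for different-ID pairs (assumed encoding).
    scores: similarity per pair, higher meaning more similar.
    Ties between a positive and a negative count as half a win.
    """
    pos = [s for label, s in zip(labels, scores) if label == 1]
    neg = [s for label, s in zip(labels, scores) if label == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative pair")
    # Compare every positive score against every negative score.
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    # Toy data: one same-ID pair (0.4) scores below one different-ID pair (0.5).
    print(roc_auc([1, 1, 0, 0], [0.9, 0.4, 0.5, 0.1]))
```

This pairwise form takes time proportional to the number of positives times the number of negatives, so it suits small checks; a rank-based implementation is preferable for a full verification set.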

We provide the source keypoints in the keypoints folder to align images.

First, you need to detect 5 keypoints in your own image with AnyFace and save them as a .npy file.

Then, you can align the images by:

python3 src/face_align.py --tgt /path/to/your/keypoints.npy --img /path/to/your/image.jpeg --src /path/to/src/keypoints.npy --out /path/to/output/image.jpg

For example, you can run the training for cat as follows:

CUDA_VISIBLE_DEVICES=0 python3 src/train_arcface.py src/configs/cat.py --output outputs/cat/arcface

You can also train a single ArcFace model on all the species (families):

CUDA_VISIBLE_DEVICES=0 python3 src/train_unified.py src/configs/unified.py --output outputs/unified

We borrow some code from insightface, pytorch-center-loss, and triplet-loss-with-pytorch.

If you find our work useful for your research, please consider citing our paper:

@inproceedings{shinoda2025petface,
  title={PetFace: A large-scale dataset and benchmark for animal identification},
  author={Shinoda, Risa and Shiohara, Kaede},
  booktitle={European Conference on Computer Vision},
  pages={19--36},
  year={2025},
  organization={Springer}
}