A synthetic data generator for text recognition

Generate text image samples to train OCR software. Now supporting non-Latin text! For a more thorough tutorial, see the official documentation.

Install the PyPI package:

```
pip install trdg
```

Afterwards, you can use trdg from the CLI. I recommend using a virtualenv instead of installing with sudo.

If you want to add another language, you can clone the repository instead and install its dependencies with:

```
pip install -r requirements.txt
```

If you would rather not install anything to use TextRecognitionDataGenerator, you can pull the Docker image:

```
docker pull belval/trdg:latest
```

Then run it, mounting a folder for the output:

```
docker run -v /output/path/:/app/out/ -t belval/trdg:latest trdg [args]
```

The path (/output/path/) must be absolute.

- Add Python module

- Move run.py to an executable Python file (trdg/bin/trdg)

- Add --font to use only one font for all the generated images (Thank you @JulienCoutault!)

- Add --fit and --margins for finer layout control

- Change the text orientation using the -or parameter

- Specify a text color range using -tc '#000000,#FFFFFF'; the quotes are necessary because the shell treats an unquoted # specially

- Add support for Simplified and Traditional Chinese
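The -tc color range can be pictured with a small sketch. It assumes, without checking trdg's source, that a single color is used as-is and that a range picks each RGB channel independently between the two bounds; parse_hex and random_text_color are hypothetical names, not part of trdg's API.

```python
import random


def parse_hex(color):
    """Convert '#RRGGBB' into an (r, g, b) tuple of integers in 0..255."""
    color = color.strip().lstrip("#")
    if len(color) != 6:
        raise ValueError(f"expected #RRGGBB, got {color!r}")
    return tuple(int(color[i:i + 2], 16) for i in (0, 2, 4))


def random_text_color(spec, rng=None):
    """Pick a color from a '-tc' style spec: one color, or 'low,high'.

    Assumption: each channel is drawn uniformly between the matching
    channels of the two bounds (a single color therefore returns itself).
    """
    rng = rng or random.Random()
    parts = [parse_hex(p) for p in spec.split(",")]
    low, high = parts[0], parts[-1]
    return tuple(rng.randint(min(a, b), max(a, b)) for a, b in zip(low, high))


if __name__ == "__main__":
    print(random_text_color("#000000,#FFFFFF", random.Random(0)))
```

Drawing channels independently is the simplest reading of "range"; interpolating along the line between the two colors would be an equally plausible design that keeps hues closer to the endpoints.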

Words are chosen at random from a dictionary for the selected language. An image of those words is then generated using the specified font, background, and modifications (skewing, blurring, etc.).
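A minimal sketch of the word-selection step in plain Python. The one-word-per-line file layout, sampling with replacement, and the helper names load_dictionary and sample_words are assumptions for illustration; the count argument plays roughly the role of the -w option.

```python
import random


def load_dictionary(path):
    """Read a word list with one entry per line, skipping blank lines (assumed layout)."""
    with open(path, encoding="utf-8") as fh:
        return [line.strip() for line in fh if line.strip()]


def sample_words(words, count, rng=None):
    """Join `count` words drawn at random (with replacement) from `words`."""
    rng = rng or random.Random()
    return " ".join(rng.choice(words) for _ in range(count))


if __name__ == "__main__":
    words = ["apple", "river", "stone", "cloud", "lamp"]
    print(sample_words(words, 5, random.Random(42)))
```

Passing a seeded random.Random makes a run reproducible, which helps when regenerating the same dataset later.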

The usage as a Python module is very similar to the CLI, but it is more flexible if you want to include it directly in your training pipeline, and will consume less space and memory. There are 4 generators that can be used.

```python
from trdg.generators import (
    GeneratorFromDict,
    GeneratorFromRandom,
    GeneratorFromStrings,
    GeneratorFromWikipedia,
)

# The generators use the same arguments as the CLI, only as parameters
generator = GeneratorFromStrings(
    ['Test1', 'Test2', 'Test3'],
    blur=2,
    random_blur=True
)

for img, lbl in generator:
    # Do something with the pillow images here.
    pass
```

The full class definitions can be found in the trdg.generators module.

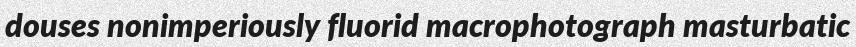

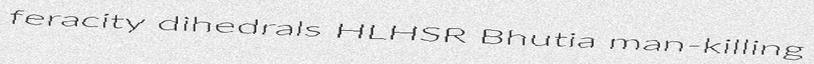

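From the command line:

```
trdg -c 1000 -w 5 -f 64
```

You get 1,000 randomly generated images with random text on them (-c sets the number of images, -w the number of words per image, and -f the image height in pixels), like the ones below. By default, they are saved to out/ in the current working directory.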

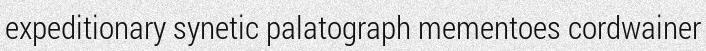

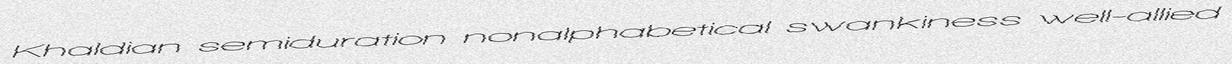

What if you want random skewing? Add -k to set the skew angle in degrees and -rk to randomize it between that value and its opposite (trdg -c 1000 -w 5 -f 64 -k 5 -rk).
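How -k and -rk combine can be sketched as a tiny function. It assumes the random angle is an integer drawn uniformly from [-k, k] and that k is an integer; skew_angle is a hypothetical name, not trdg's internal code.

```python
import random


def skew_angle(k, random_skew, rng=None):
    """Rotation, in degrees, to apply to one generated image.

    Assumption: with random skew enabled the angle is an integer drawn
    uniformly from [-k, k]; otherwise every image is rotated by exactly k.
    """
    if not random_skew:
        return k
    rng = rng or random.Random()
    return rng.randint(-abs(k), abs(k))


if __name__ == "__main__":
    rng = random.Random(7)
    print([skew_angle(5, True, rng) for _ in range(8)])
```

Applying the angle to an image would then be a job for an imaging library, for example Pillow's Image.rotate(angle, expand=True), where expand=True enlarges the canvas so the corners are not cropped.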

You can also add distortion to the generated text with -d (distortion type) and -do (distortion orientation).

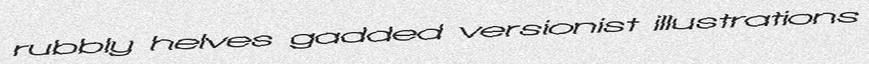

But scanned documents usually aren't that clear, are they? Add -bl to apply a Gaussian blur with a user-defined radius (for instance 0, 1, 2, or 4) to the generated image, and -rbl to randomize that radius.
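Gaussian blur replaces each pixel with a weighted average of its neighbors, with weights following a bell curve. The sketch below shows the idea on a single row of grayscale values. It is a generic illustration, not trdg's implementation, and it assumes the radius acts as the standard deviation with the kernel cut off at three standard deviations; gaussian_kernel and blur_row are hypothetical helpers.

```python
import math


def gaussian_kernel(radius):
    """Normalized 1-D Gaussian weights; radius 0 means "no blur".

    Assumption: radius is used as the standard deviation (sigma) and the
    kernel is truncated at 3 * sigma on each side.
    """
    if radius <= 0:
        return [1.0]
    half = math.ceil(3 * radius)
    weights = [math.exp(-(x * x) / (2 * radius * radius)) for x in range(-half, half + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur_row(row, radius):
    """Blur one row of grayscale pixels, repeating the edge pixels at the borders."""
    kernel = gaussian_kernel(radius)
    half = len(kernel) // 2
    out = []
    for i in range(len(row)):
        acc = 0.0
        for j, w in enumerate(kernel):
            k = min(max(i + j - half, 0), len(row) - 1)  # clamp to valid indices
            acc += w * row[k]
        out.append(acc)
    return out


if __name__ == "__main__":
    print([round(v, 1) for v in blur_row([0, 0, 255, 0, 0], 1)])
```

A 2-D blur can apply blur_row to every row and then to every column, because the Gaussian kernel is separable. In practice an imaging library does this far faster; Pillow, for example, offers ImageFilter.GaussianBlur(radius).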

Maybe you want another background? Add -b to pick one of the four available backgrounds: Gaussian noise (0), plain white (1), quasicrystal (2), or picture (3).

When using the picture background (3), a picture from the pictures/ folder is randomly selected and the text is written on it.
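Three of the background types can be sketched with the standard library alone, with images represented as lists of grayscale rows. The noise mean and standard deviation, the accepted picture extensions, and all function names are assumptions for illustration rather than trdg's actual values; the quasicrystal background (2) is left out because it needs a more involved pattern generator.

```python
import os
import random
import tempfile


def gaussian_noise_background(width, height, mean=235, sigma=10, rng=None):
    """Background 0 (sketch): light-gray Gaussian noise, clipped to 0..255.

    The mean and sigma defaults are assumed values for a near-white page.
    """
    rng = rng or random.Random()
    return [
        [min(255, max(0, round(rng.gauss(mean, sigma)))) for _ in range(width)]
        for _ in range(height)
    ]


def plain_white_background(width, height):
    """Background 1: every pixel is white (255)."""
    return [[255] * width for _ in range(height)]


def pick_picture(folder, rng=None):
    """Background 3 (sketch): choose a random image file from `folder`."""
    rng = rng or random.Random()
    exts = (".jpg", ".jpeg", ".png")  # assumed set of accepted extensions
    candidates = sorted(f for f in os.listdir(folder) if f.lower().endswith(exts))
    if not candidates:
        raise FileNotFoundError(f"no pictures found in {folder}")
    return os.path.join(folder, rng.choice(candidates))


if __name__ == "__main__":
    print(gaussian_noise_background(8, 2, rng=random.Random(0)))
    with tempfile.TemporaryDirectory() as pictures:
        for name in ("paper.jpg", "wood.png", "notes.txt"):
            open(os.path.join(pictures, name), "w").close()
        print(pick_picture(pictures, random.Random(0)))
```

Filtering by extension keeps stray files (such as notes.txt in the demo) from being picked as backgrounds.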

Or maybe you are working on OCR for handwritten text? Add -hw! (Experimental)

It uses a TensorFlow model trained using this excellent project by Grzego.

The project does not require TensorFlow to run if you aren't using this feature.

The text is chosen at random from a dictionary file (found in the dicts folder) and drawn on a white background made with Gaussian noise. The resulting image is saved as [text]_[index].jpg.
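The [text]_[index].jpg naming scheme can be sketched as follows, together with its inverse for reading labels back when loading a dataset. Replacing characters that are unsafe in file names is an assumption of this sketch, not documented trdg behavior, and output_path and label_from_filename are hypothetical helpers; the out_dir default mirrors the out/ folder mentioned earlier.

```python
import os
import re


def output_path(text, index, out_dir="out", ext="jpg"):
    """Build the path of one sample as [text]_[index].[ext].

    Assumption: path separators and other characters that are unsafe in
    file names are replaced with "_".
    """
    safe = re.sub(r'[\\/:*?"<>|]', "_", text)
    return os.path.join(out_dir, f"{safe}_{index}.{ext}")


def label_from_filename(filename):
    """Recover the text from a name like 'out/hello world_12.jpg'."""
    stem = os.path.splitext(os.path.basename(filename))[0]
    text, _, _index = stem.rpartition("_")  # the index is always the last part
    return text


if __name__ == "__main__":
    path = output_path("hello world", 12)
    print(path, "->", label_from_filename(path))
```

Splitting on the last underscore keeps labels that themselves contain underscores intact, but any characters replaced by output_path cannot be recovered, so keep labels in a separate file if exact text matters.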

There are a lot of parameters that you can tune to get the results you want, so I recommend checking out trdg -h for more information.

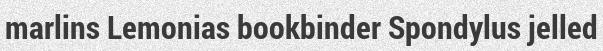

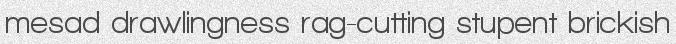

Generating Chinese text is simple! Just run trdg -l cn -c 1000 -w 5.

Generated texts come in both Simplified and Traditional Chinese scripts.

Traditional:

Simplified:

The script picks a font at random from the fonts directory.

| Directory | Languages |
|---|---|
| fonts/latin | English, French, Spanish, German |
| fonts/cn | Chinese |

Simply add/remove fonts until you get the desired output.
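Random font selection per language, following the directory table, might look like this sketch. The language codes other than cn and the names FONT_DIRS and pick_font are hypothetical; only .ttf files are considered, matching the current font support.

```python
import os
import random
import tempfile

# Hypothetical language codes mapped to the font folders from the table.
# Only "cn" appears in this README; the Latin codes are assumptions.
FONT_DIRS = {
    "en": ("fonts", "latin"),
    "fr": ("fonts", "latin"),
    "es": ("fonts", "latin"),
    "de": ("fonts", "latin"),
    "cn": ("fonts", "cn"),
}


def pick_font(language, root=".", rng=None):
    """Return the path of a random .ttf font for `language` under `root`."""
    if language not in FONT_DIRS:
        raise ValueError(f"unknown language code: {language!r}")
    rng = rng or random.Random()
    folder = os.path.join(root, *FONT_DIRS[language])
    fonts = sorted(f for f in os.listdir(folder) if f.lower().endswith(".ttf"))
    if not fonts:
        raise FileNotFoundError(f"no .ttf fonts in {folder}")
    return os.path.join(folder, rng.choice(fonts))


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        latin = os.path.join(root, "fonts", "latin")
        os.makedirs(latin)
        for name in ("Roboto-Regular.ttf", "OpenSans-Bold.ttf", "README.md"):
            open(os.path.join(latin, name), "w").close()
        print(pick_font("en", root, random.Random(3)))
```

Because the pick is uniform over the files present, adding or removing fonts in a folder directly changes how often each typeface appears in the output.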

If you want to add a new non-Latin language, the amount of work is minimal:

- Create a new folder under fonts/ named with your language's two-letter code

- Add a .ttf font to it

- Edit bin/trdg to add an if statement in load_fonts()

- Add a text file in dicts with the same two-letter code

- Run the tool as you normally would, but add -l with your two-letter code

Only .ttf fonts are supported for now.
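Before running the tool with a new -l code, a quick check of the expected files can save a confusing error. This sketch assumes the layout fonts/&lt;code&gt;/ with at least one .ttf file and a dictionary at dicts/&lt;code&gt;.txt (the .txt extension is an assumption); check_language_setup is a hypothetical helper and does not cover the load_fonts() edit.

```python
import os
import tempfile


def check_language_setup(root, code):
    """Return a list of problems with the files a new language needs.

    Assumed layout: fonts/<code>/ holding at least one .ttf file, and a
    dictionary file dicts/<code>.txt.
    """
    problems = []
    font_dir = os.path.join(root, "fonts", code)
    if not os.path.isdir(font_dir):
        problems.append(f"missing folder {font_dir}")
    elif not any(f.lower().endswith(".ttf") for f in os.listdir(font_dir)):
        problems.append(f"no .ttf font in {font_dir}")
    dict_file = os.path.join(root, "dicts", f"{code}.txt")
    if not os.path.isfile(dict_file):
        problems.append(f"missing dictionary {dict_file}")
    return problems


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        print(check_language_setup(root, "ko"))  # two problems reported
        os.makedirs(os.path.join(root, "fonts", "ko"))
        open(os.path.join(root, "fonts", "ko", "font.ttf"), "w").close()
        os.makedirs(os.path.join(root, "dicts"))
        open(os.path.join(root, "dicts", "ko.txt"), "w").close()
        print(check_language_setup(root, "ko"))  # []
```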

Number of images generated per second (img/s), measured with -c 1000 -w 1 at different thread counts (-t):

| Machine | -t 1 | -t 2 | -t 4 | -t 8 |
|---|---|---|---|---|
| Intel Core i7-4710HQ @ 2.50GHz + SSD | 363 | 694 | 1300 | 1500 |
| AMD Ryzen 7 1700 @ 4.0GHz + SSD | 558 | 1045 | 2107 | 3297 |
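The benchmark numbers translate into speedup (throughput relative to one thread) and parallel efficiency (speedup divided by thread count). The short calculation below uses only the figures reported in this section; BENCHMARKS and speedups are illustrative names.

```python
# Throughput reported above, in images per second, keyed by thread count.
BENCHMARKS = {
    "Intel Core i7-4710HQ": {1: 363, 2: 694, 4: 1300, 8: 1500},
    "AMD Ryzen 7 1700": {1: 558, 2: 1045, 4: 2107, 8: 3297},
}


def speedups(throughput):
    """Map thread count -> (speedup, efficiency) relative to one thread."""
    base = throughput[1]
    return {
        threads: (rate / base, rate / base / threads)
        for threads, rate in throughput.items()
    }


if __name__ == "__main__":
    for cpu, results in BENCHMARKS.items():
        for threads, (speedup, efficiency) in speedups(results).items():
            print(f"{cpu}, -t {threads}: {speedup:.2f}x, {efficiency:.0%} efficiency")
```

On these figures the i7 reaches about 4.13x at eight threads (52% efficiency) and the Ryzen about 5.91x (74%). One plausible reading, which the benchmark itself does not establish, is core count: the i7-4710HQ has four physical cores and the Ryzen 7 1700 has eight.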

- Create an issue describing the feature you'll be working on

- Implement the feature

- Create a pull request

If anything is missing, unclear, or simply not working, open an issue on the repository.

- Better background generation

- Better handwritten text generation

- More customization parameters (mostly regarding background)