Project 2 of the Data Streaming Nanodegree Program.

- Install requirements using `./start.sh` if you use conda for Python. If you use pip rather than conda, then use `pip install -r requirements.txt`.

- Navigate to the folder where you unzipped your Kafka download:

- Begin by starting Zookeeper

bin/zookeeper-server-start.sh config/zookeeper.properties

- Then start the Kafka server

bin/kafka-server-start.sh config/server.properties

- Start the bootstrap Kafka producer:

python kafka_server.py

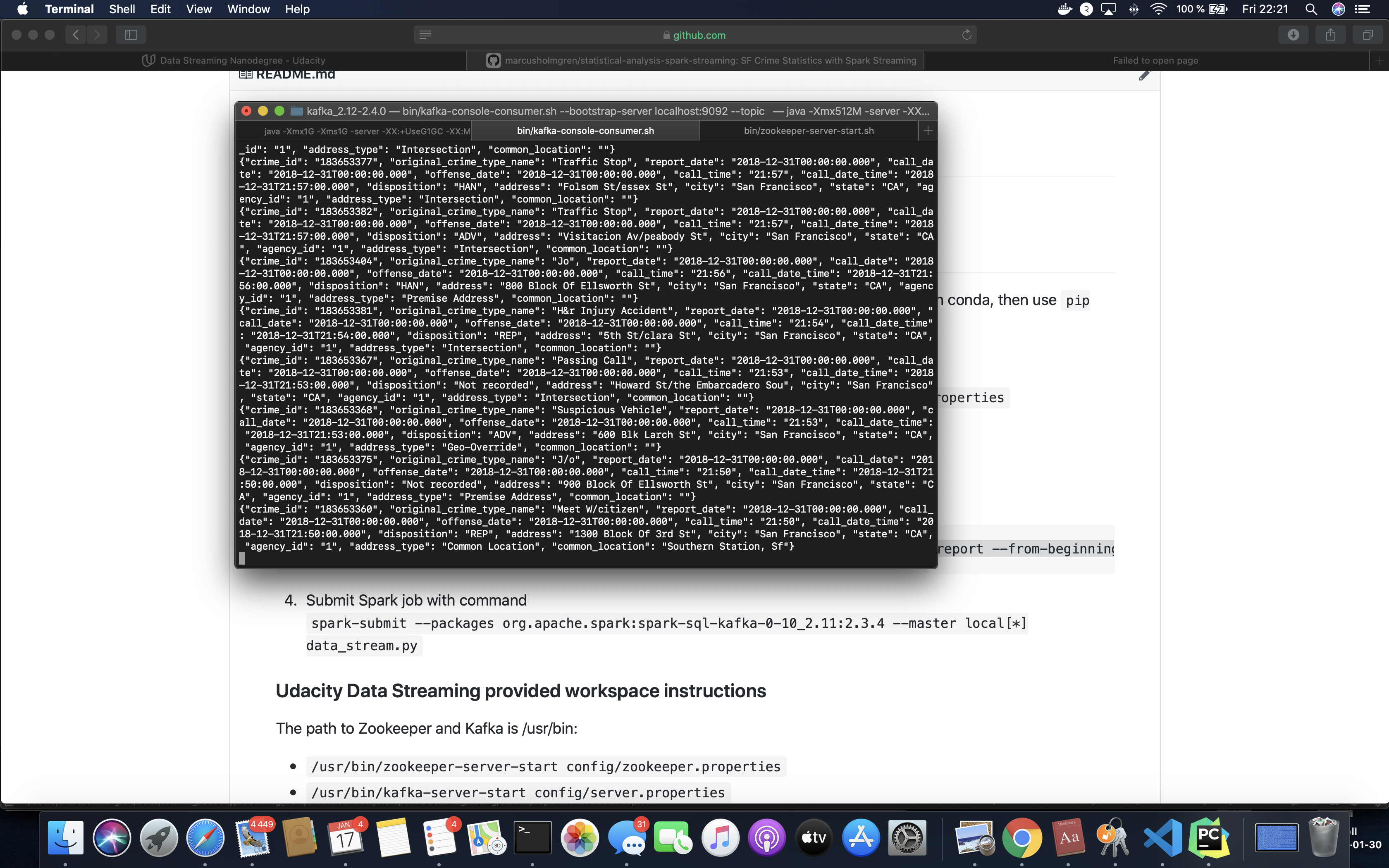

You can test the Kafka producer with the Kafka console consumer:

bin/kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic mh.crime.report --from-beginning

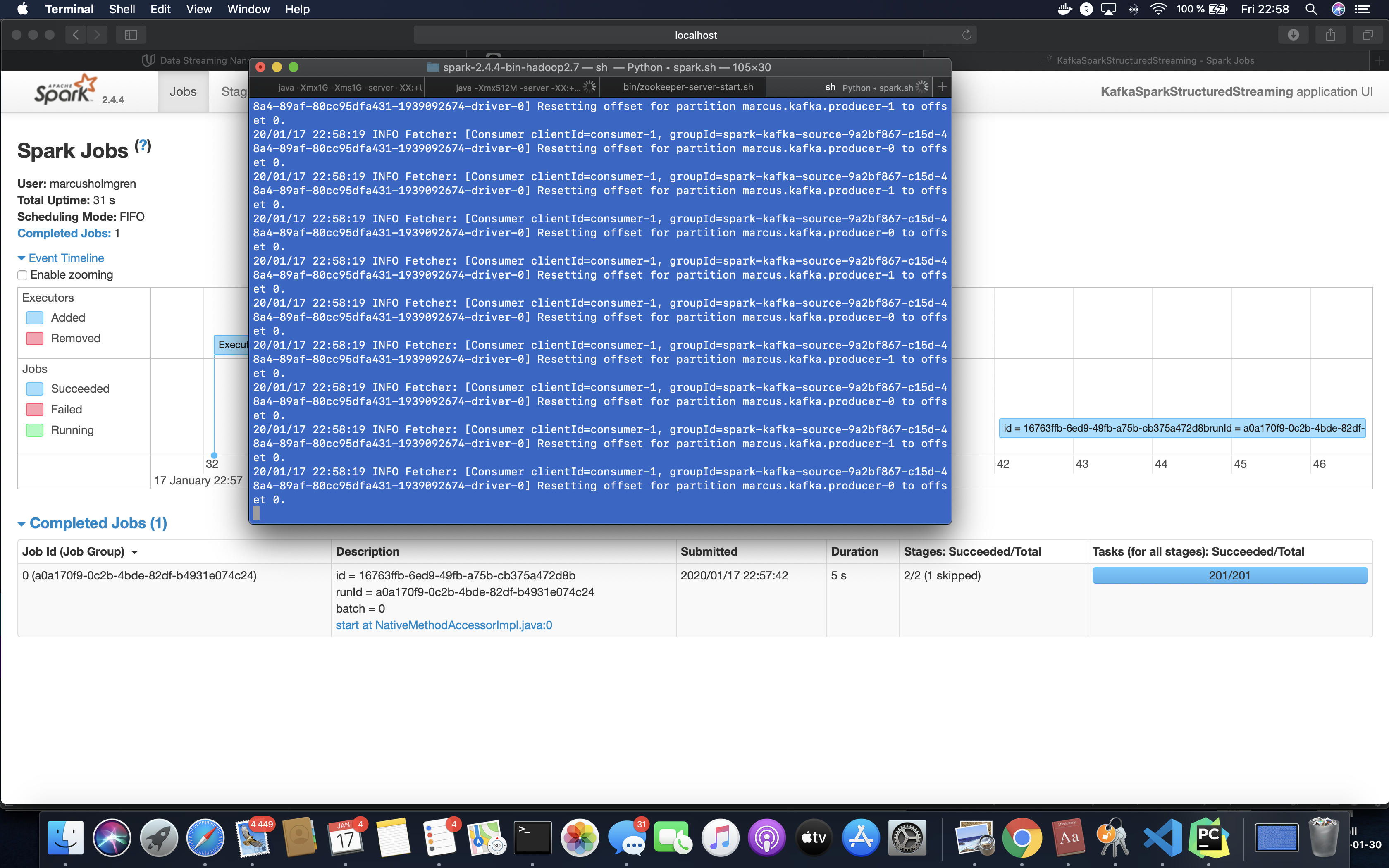

- Submit the Spark job. It is important to get the package version correct: the version of `spark-sql-kafka-0-10` must match your Spark installation. For spark-2.4.4-bin-hadoop2.7, the command to submit the job is:

spark-submit --packages org.apache.spark:spark-sql-kafka-0-10_2.11:2.4.4 --master local[*] data_stream.py
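The version-matching rule can be sketched in Python. The helper names `kafka_package` and `spark_submit_args` are hypothetical and not part of this project; they only assemble the Maven coordinate and the argument list, assuming the Scala build suffix is 2.11 as in the command above.

```python
import shlex


def kafka_package(spark_version: str, scala_version: str = "2.11") -> str:
    """Build the Maven coordinate of the Kafka source (hypothetical helper).

    The Scala suffix defaults to 2.11, which is an assumption taken from
    the command in this README; adjust it if your Spark build differs.
    """
    return f"org.apache.spark:spark-sql-kafka-0-10_{scala_version}:{spark_version}"


def spark_submit_args(spark_version: str, script: str = "data_stream.py") -> list:
    """Assemble the spark-submit argument list used in this README."""
    return [
        "spark-submit",
        "--packages", kafka_package(spark_version),
        "--master", "local[*]",
        script,
    ]


if __name__ == "__main__":
    # Print a copy-pasteable command; shlex.quote protects local[*] from the shell.
    print(" ".join(shlex.quote(arg) for arg in spark_submit_args("2.4.4")))
```

Passing the list to `subprocess.run` instead of printing it would avoid shell quoting altogether.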

In the provided workspace, Zookeeper and Kafka are located in `/usr/bin`:

/usr/bin/zookeeper-server-start config/zookeeper.properties

/usr/bin/kafka-server-start config/server.properties

Run the Kafka console consumer in the provided Data Streaming workspace:

/usr/bin/kafka-console-consumer --bootstrap-server localhost:9092 --topic mh.crime.report --from-beginning

Submit the Spark job in the provided environment:

spark-submit --packages org.apache.spark:spark-sql-kafka-0-10_2.11:2.3.4 --master local[*] data_stream.py

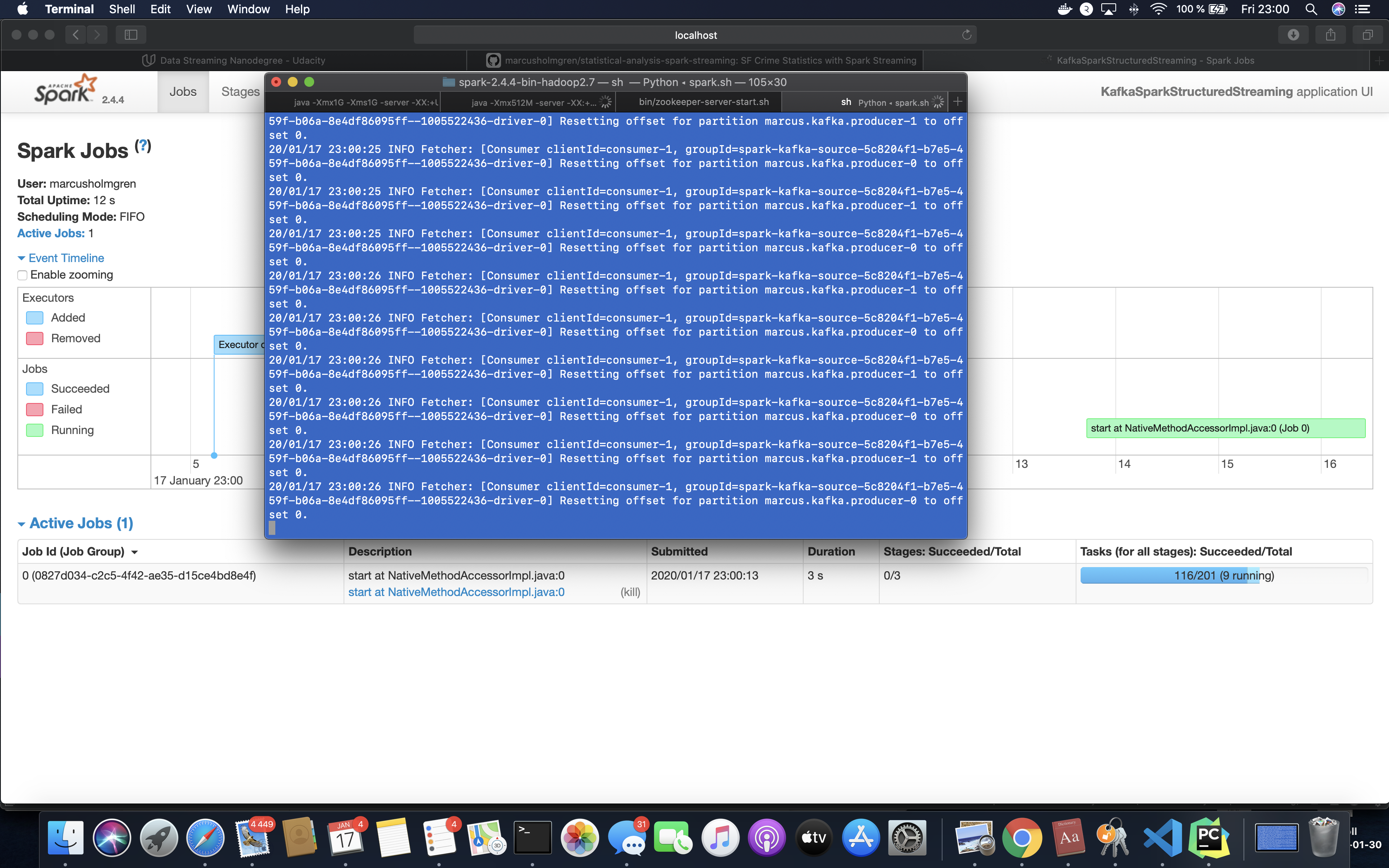

1. How did changing values on the SparkSession property parameters affect the throughput and latency of the data?

When setting `maxOffsetsPerTrigger` to 1000, larger batches of events were processed per trigger.

However, there were longer delays during which nothing was printed to the console.
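The trade-off can be illustrated with a simplified model, not a measurement from this project. `maxOffsetsPerTrigger` caps how many offsets one micro-batch reads, so a larger cap gives fewer, bigger batches. The function `plan_batches` and the backlog of 2,500 events are hypothetical, and the model ignores processing time and how Spark splits the cap across partitions.

```python
def plan_batches(backlog: int, max_offsets_per_trigger: int) -> list:
    """Split a backlog of unread offsets into per-trigger batch sizes.

    Simplified model: every trigger reads up to the cap until the
    backlog is drained.
    """
    if max_offsets_per_trigger <= 0:
        raise ValueError("max_offsets_per_trigger must be positive")
    batches = []
    remaining = backlog
    while remaining > 0:
        size = min(remaining, max_offsets_per_trigger)
        batches.append(size)
        remaining -= size
    return batches


if __name__ == "__main__":
    # Hypothetical backlog of 2,500 events waiting in the topic.
    for cap in (200, 1000):
        sizes = plan_batches(2500, cap)
        print(f"cap={cap}: {len(sizes)} batches, largest={max(sizes)}")
```

In the Spark job, the cap itself would be set on the Kafka source with `.option("maxOffsetsPerTrigger", 1000)`.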

2. What were the 2-3 most efficient SparkSession property key/value pairs? Through testing multiple variations on values, how can you tell these were the most optimal?

- Without the `kafka.bootstrap.servers` property, Kafka streaming won't run, so it is important!

- Either `subscribe` for a specific Kafka topic or `subscribePattern` for subscribing to a wildcard pattern is needed.

- Leaving `endingOffsets` at its default value `latest` also worked out great (the integration guide lists this option for batch queries).

When these properties were configured correctly with the Kafka IP address, port, and topic, the Spark job could connect and process data.
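A minimal sketch of how these options fit together follows. The helpers `kafka_source_options` and `read_kafka_stream` are hypothetical names, not taken from `data_stream.py`; the option keys are the ones named above, and the broker address and topic are the ones used in the console consumer command.

```python
def kafka_source_options(bootstrap_servers, topic=None, pattern=None):
    """Build the Kafka source options, requiring exactly one subscription mode."""
    if (topic is None) == (pattern is None):
        raise ValueError("set exactly one of topic or pattern")
    options = {"kafka.bootstrap.servers": bootstrap_servers}
    if topic is not None:
        options["subscribe"] = topic  # a specific topic
    else:
        options["subscribePattern"] = pattern  # a pattern matching topic names
    return options


def read_kafka_stream(spark, options):
    """Open a streaming DataFrame; `spark` is an existing SparkSession."""
    return spark.readStream.format("kafka").options(**options).load()


if __name__ == "__main__":
    # Only builds and prints the options; no Spark or Kafka is needed for this.
    print(kafka_source_options("localhost:9092", topic="mh.crime.report"))
```

Validating the options before creating the stream surfaces a missing topic or broker address as an immediate error rather than a failure inside the Spark job.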

See the Apache Spark Structured Streaming + Kafka Integration Guide documentation for details on these options.