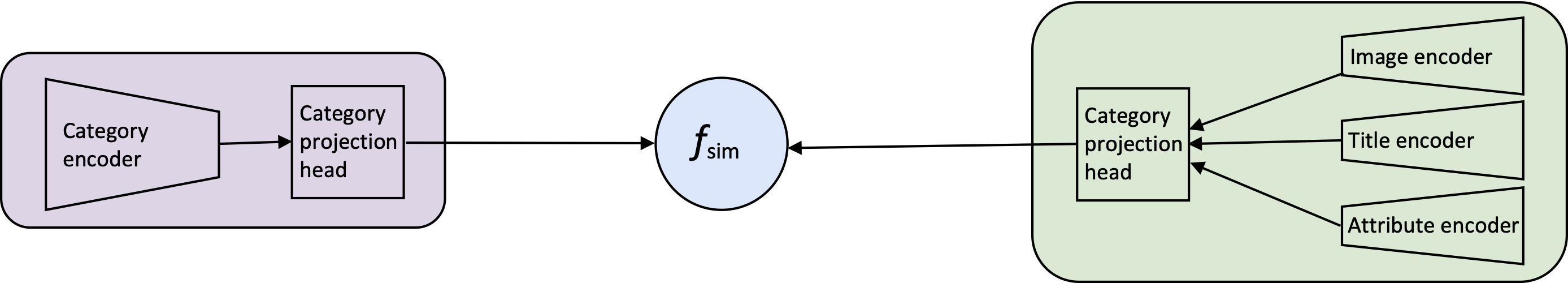

This repository contains the code used for the experiments in "Extending CLIP for Category-to-image Retrieval in E-commerce" published at ECIR 2022.

The contents of this repository are licensed under the MIT license. If you modify its contents in any way, please link back to this repository.

First off, install the dependencies:

```shell
pip install -r requirements.txt
```

Download `CLIP_data.zip` from this repository.

After unzipping `CLIP_data.zip`, put the resulting `data` folder in the root of the repository:

```
data/
    datasets/
    results/
```
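Here is a minimal sketch of the unzip-and-place step. It assumes `CLIP_data.zip` has a top-level `data/` folder that contains `datasets/` and `results/`, and that it is extracted into the repository root. The helpers `extract_clip_data` and `missing_dirs` are hypothetical and are not part of this repository.

```python
import zipfile
from pathlib import Path

# Folders expected after extraction (assumption: datasets/ and results/ sit inside data/).
EXPECTED_DIRS = ("data", "data/datasets", "data/results")


def missing_dirs(repo_root="."):
    """Return the expected folders that do not exist under repo_root."""
    root = Path(repo_root)
    return [d for d in EXPECTED_DIRS if not (root / d).is_dir()]


def extract_clip_data(zip_path, repo_root="."):
    """Unpack the archive into repo_root and return any expected folders still missing."""
    root = Path(repo_root)
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(root)
    return missing_dirs(root)
```

When you call `extract_clip_data("CLIP_data.zip")` from the repository root, it returns an empty list if the layout matches. A non-empty result means the archive unpacked somewhere unexpected.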

To evaluate CLIP on each dataset, run:

```shell
sh jobs/evaluation/evaluate_cub.job
sh jobs/evaluation/evaluate_abo.job
sh jobs/evaluation/evaluate_fashion200k.job
sh jobs/evaluation/evaluate_mscoco.job
sh jobs/evaluation/evaluate_flickr30k.job
```
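To run all five evaluation jobs in one go, you can use a small driver script. The sketch below assumes it is run from the repository root and that each job can be launched with plain `sh`, as in the commands above. `run_jobs` and `EVAL_JOBS` are hypothetical names. The script keeps going after a failure and returns the jobs that exited with a non-zero status.

```python
import subprocess
from typing import Callable, List, Sequence

# Evaluation job files listed in this README, run in the same order.
EVAL_JOBS = [
    "jobs/evaluation/evaluate_cub.job",
    "jobs/evaluation/evaluate_abo.job",
    "jobs/evaluation/evaluate_fashion200k.job",
    "jobs/evaluation/evaluate_mscoco.job",
    "jobs/evaluation/evaluate_flickr30k.job",
]


def run_jobs(jobs: Sequence[str], runner: Callable = subprocess.run) -> List[str]:
    """Run each job with `sh`, continue after failures, and return the jobs that failed."""
    failed = []
    for job in jobs:
        result = runner(["sh", job])
        if result.returncode != 0:
            failed.append(job)
    return failed
```

Calling `run_jobs(EVAL_JOBS)` runs the jobs one after another. The `runner` parameter is there so you can swap in a dry-run stub without launching the actual evaluations.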

```shell
# print the results for CLIP in one file
sh jobs/postprocessing/results_printer.job
```

If you find this repository helpful, feel free to cite our paper "Extending CLIP for Category-to-image Retrieval in E-commerce":

```bibtex
@inproceedings{hendriksen-2022-extending-clip,
  author    = {Hendriksen, Mariya and Bleeker, Maurits and Vakulenko, Svitlana and van Noord, Nanne and Kuiper, Ernst and de Rijke, Maarten},
  booktitle = {ECIR 2022: 44th European Conference on Information Retrieval},
  month     = {April},
  publisher = {Springer},
  title     = {Extending CLIP for Category-to-image Retrieval in E-commerce},
  year      = {2022}
}
```