- Create a GitHub Project:

  - Create a new repository on GitHub.

  - Clone the repository to your local machine.

- Install GitHub Desktop:

  - Download and install GitHub Desktop.

- Download and Set Up VS Code:

  - Download and install Visual Studio Code.

  - Open your project folder in VS Code.

  - Set up a virtual environment in VS Code.

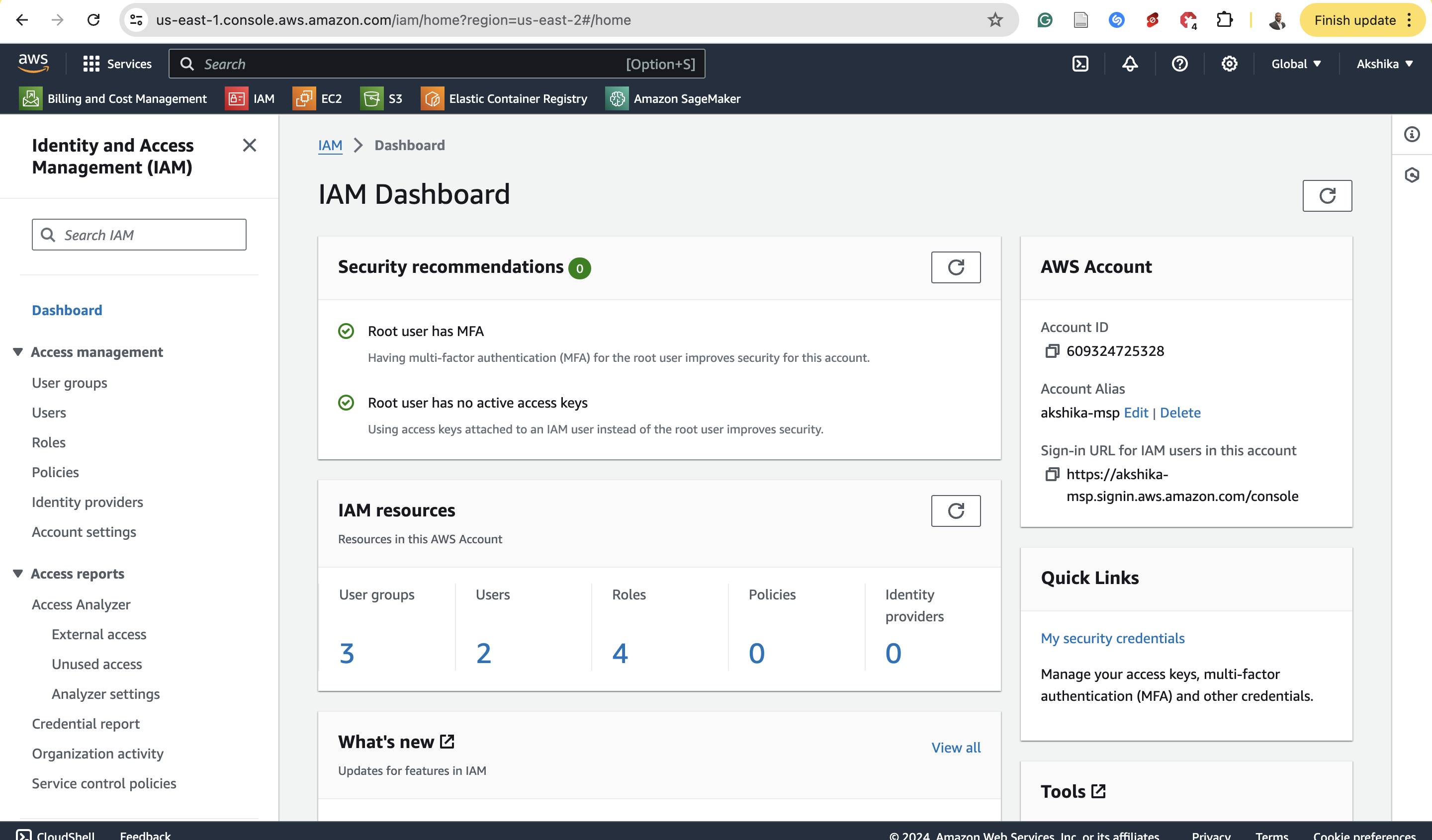

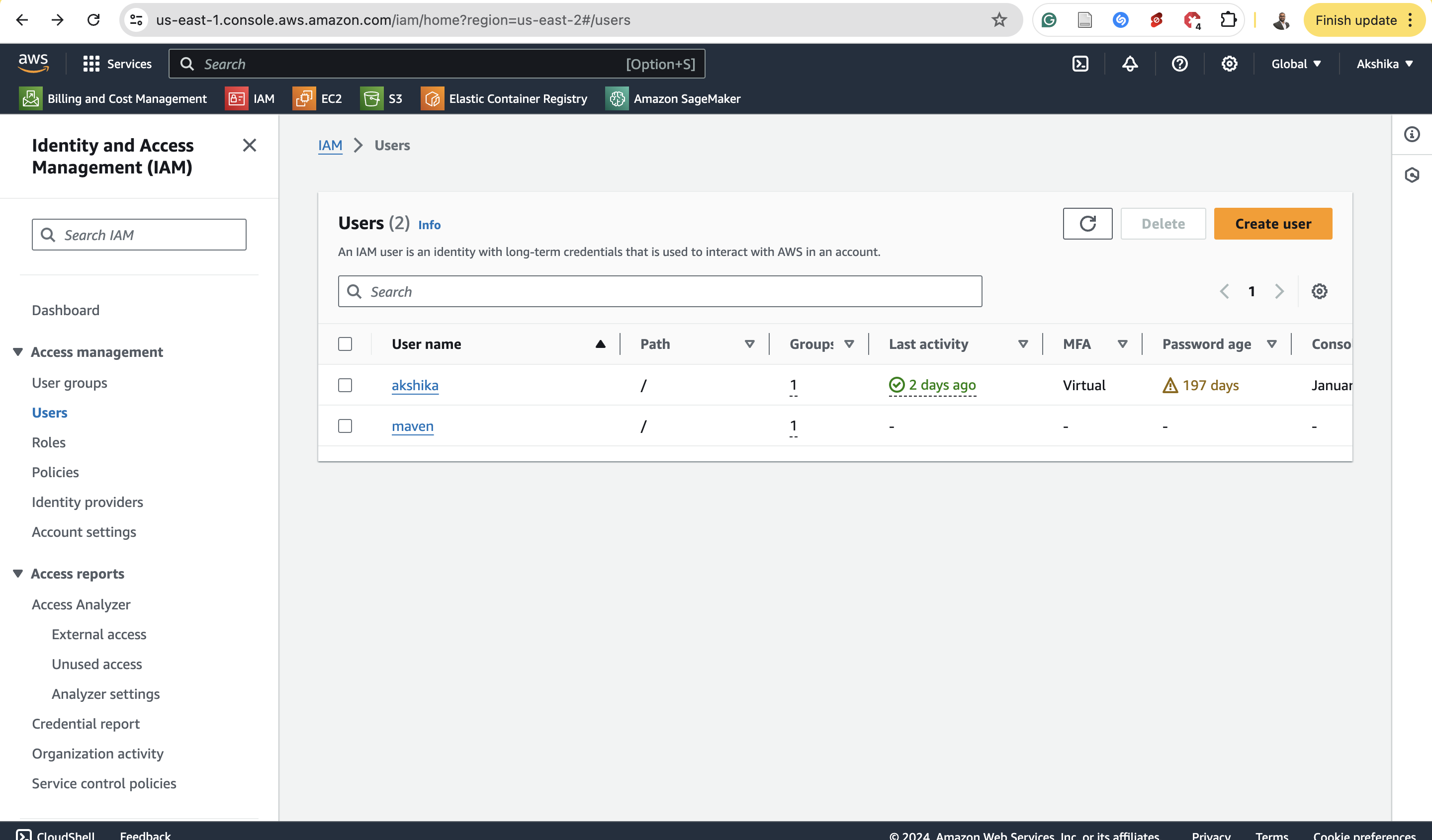

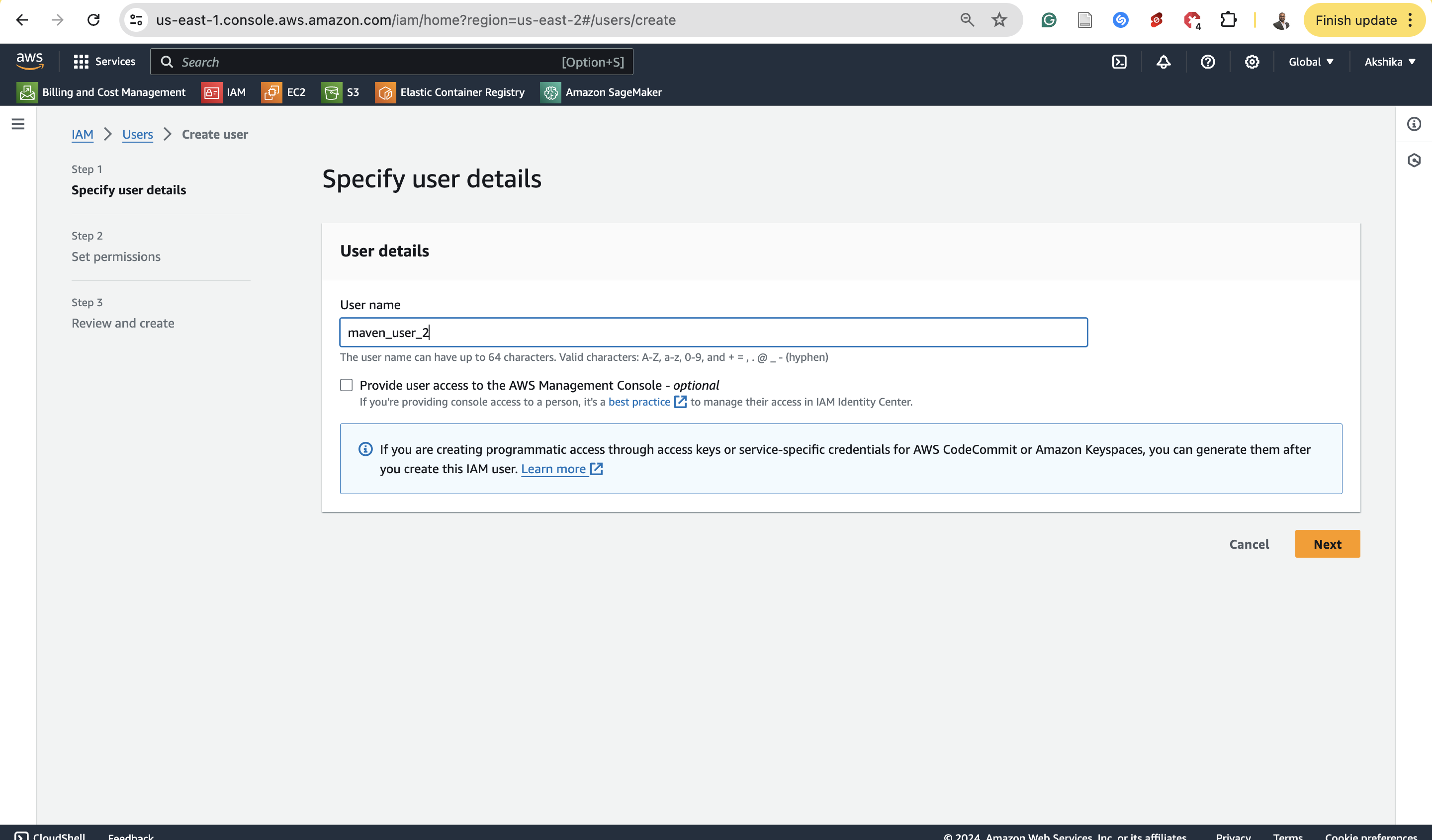

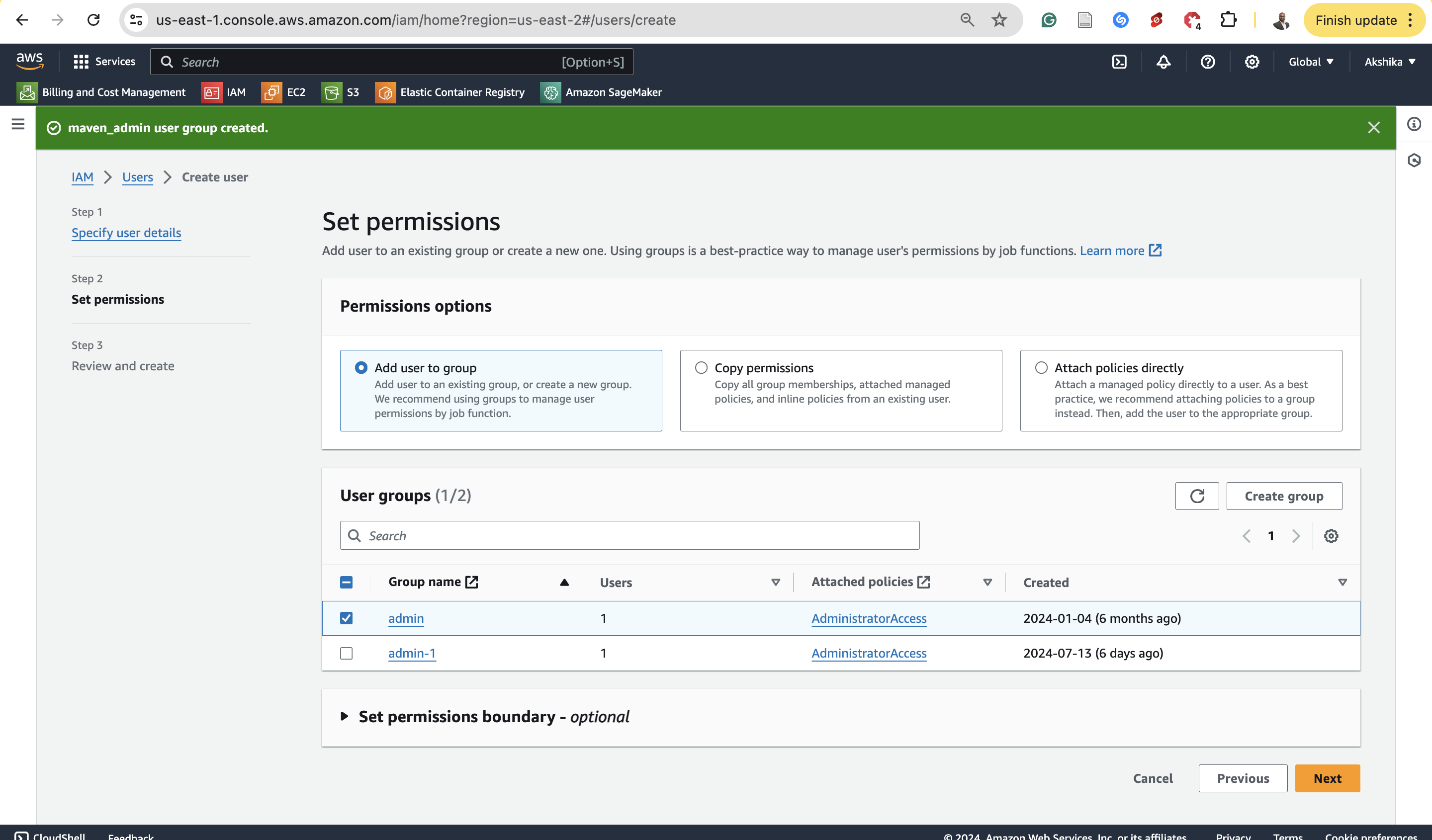

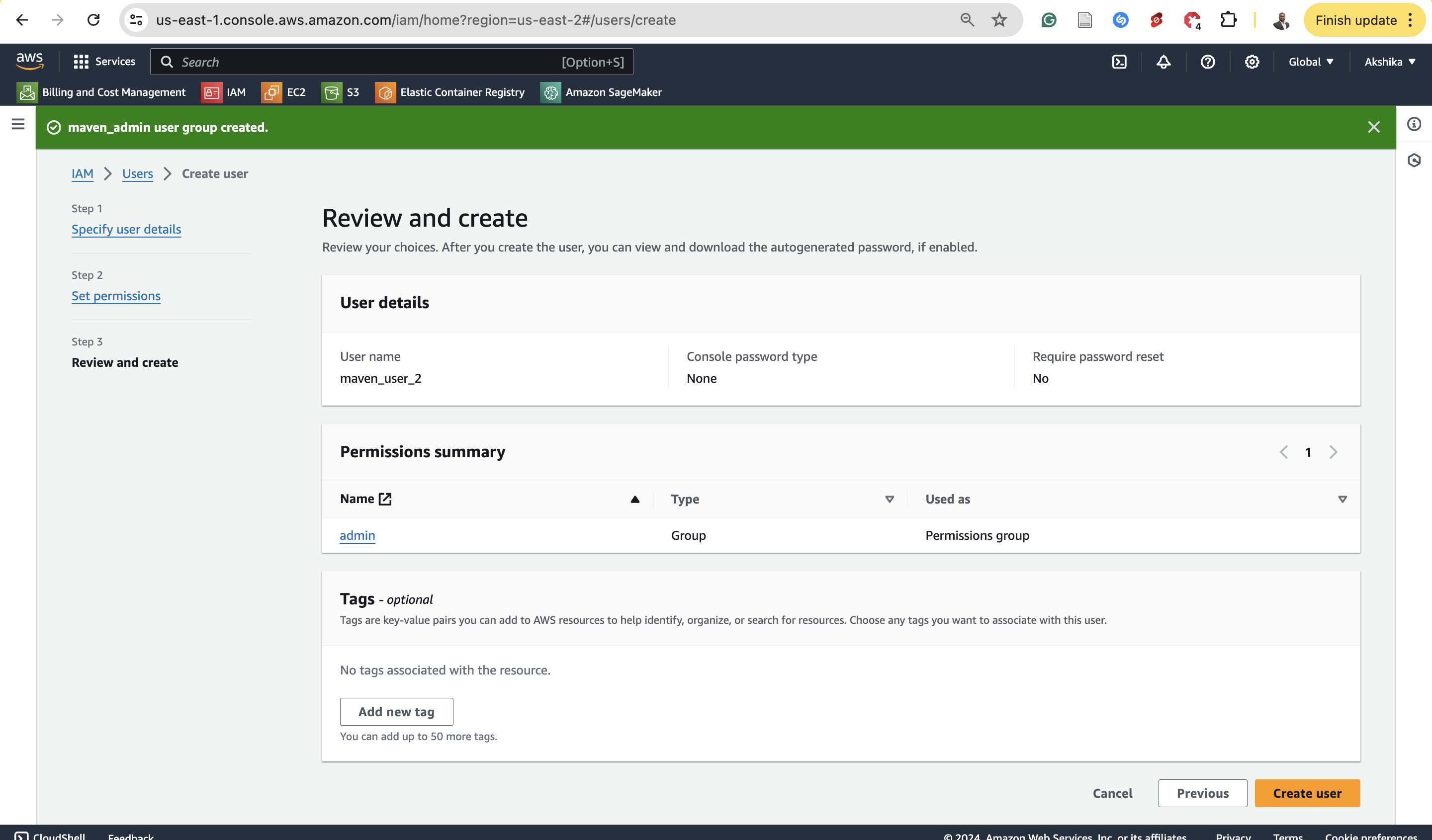

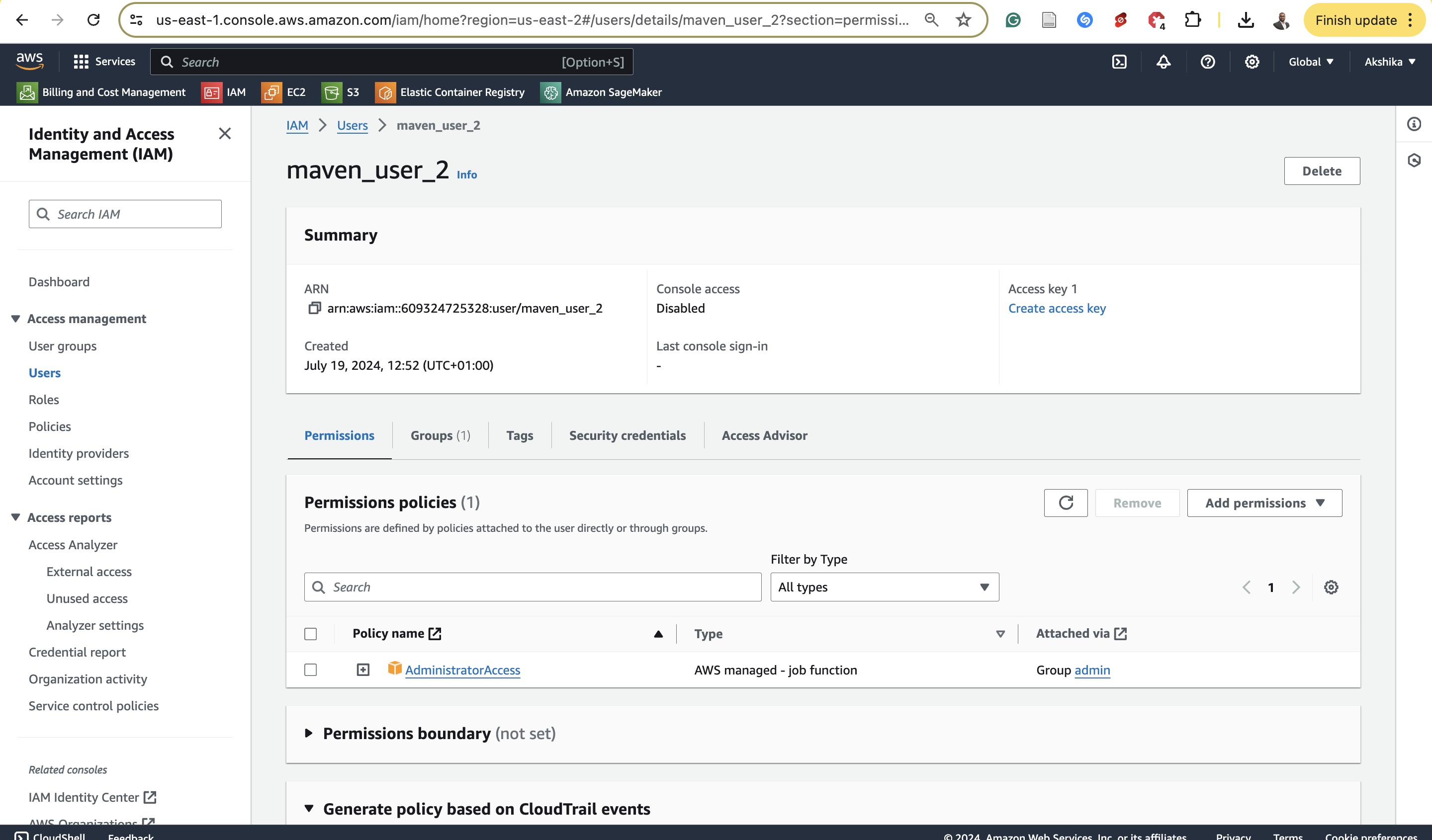
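The same environment can also be created from a terminal with `python -m venv .venv` (the `.venv` folder name is a common convention, not a requirement). The sketch below does this from Python with the standard-library `venv` module and returns the path of the new interpreter, which is the one to pick with VS Code's "Python: Select Interpreter" command. The function name `create_env` is illustrative only.

```python
"""Create a project-local virtual environment (sketch; `.venv` is an assumed name)."""
import sys
import venv
from pathlib import Path


def create_env(env_dir=".venv", with_pip=True) -> Path:
    """Create a virtual environment and return the path to its Python interpreter."""
    env_path = Path(env_dir)
    # EnvBuilder mirrors `python -m venv`; with_pip=True also bootstraps pip via ensurepip.
    venv.EnvBuilder(with_pip=with_pip).create(env_path)
    # Windows keeps executables in Scripts/, POSIX systems use bin/.
    if sys.platform == "win32":
        return env_path / "Scripts" / "python.exe"
    return env_path / "bin" / "python"
```

Calling `create_env()` from the project root creates `.venv/` next to your code; remember to add that folder to `.gitignore` so it is not committed.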

- Create an IAM User with Proper Permissions (S3, ECR, and SageMaker access for the steps below):

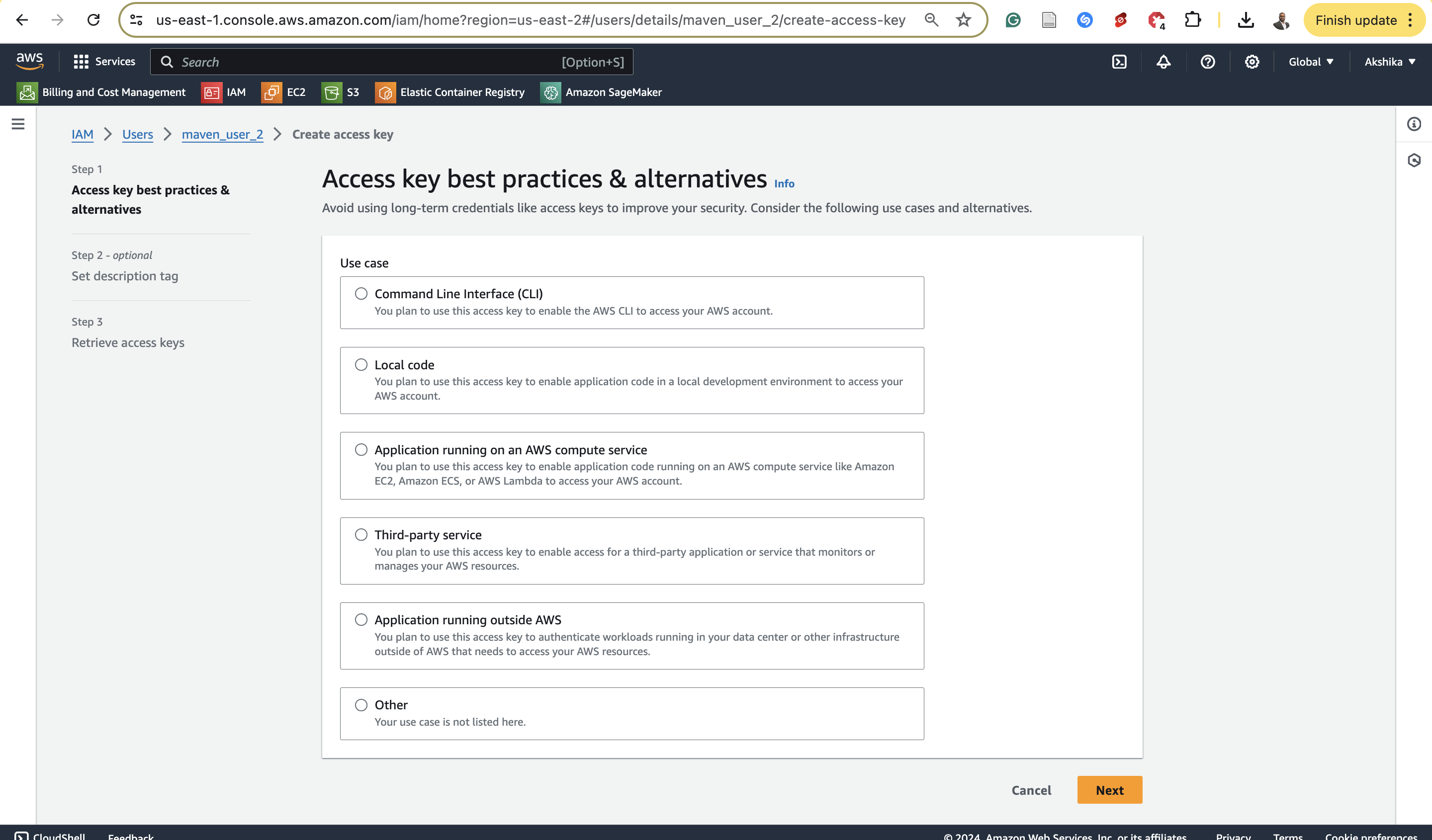

- Configure AWS CLI with IAM User Credentials:

  - Install the AWS CLI.

  - Run `aws configure` and enter the IAM user's access key ID, secret access key, default region, and output format:

    ```
    AWS Access Key ID:
    AWS Secret Access Key:
    Default region name: us-east-2
    Default output format: json
    ```
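`aws configure` saves these answers in two INI-style files under a `[default]` section: the keys go to `~/.aws/credentials` and the region and output format go to `~/.aws/config`. The sketch below reads them back with `configparser` as a quick sanity check; it only handles the default profile and masks the secret key. The function name `read_default_profile` is hypothetical. To confirm that the keys are actually valid, `aws sts get-caller-identity` prints the account and user ARN they belong to.

```python
"""Read back the default profile written by `aws configure` (sketch)."""
import configparser
from pathlib import Path


def read_default_profile(aws_dir=None) -> dict:
    """Return the [default] settings from ~/.aws, with the secret key masked."""
    aws_dir = Path(aws_dir) if aws_dir else Path.home() / ".aws"
    credentials = configparser.ConfigParser()
    credentials.read(aws_dir / "credentials")  # a missing file is silently skipped
    config = configparser.ConfigParser()
    config.read(aws_dir / "config")

    creds = credentials["default"] if credentials.has_section("default") else {}
    conf = config["default"] if config.has_section("default") else {}
    secret = creds.get("aws_secret_access_key", "")
    return {
        "aws_access_key_id": creds.get("aws_access_key_id"),
        # Show only the last four characters of the secret key.
        "aws_secret_access_key": "****" + secret[-4:] if secret else None,
        "region": conf.get("region"),
        "output": conf.get("output"),
    }
```

With the answers above, `read_default_profile()["region"]` should be `"us-east-2"`.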

- Create Project Files and Folders:

  - `main.py`: Your main code, which controls entry into the training and inference scripts.

  - `train.py`: Your main training script.

  - `requirements.txt`: A list of dependencies.

  - `inference.py`: Your main inference script.

  - `data/`: A directory to store your dataset.
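One possible shape for `main.py` is a small `argparse` dispatcher. This sketch assumes, hypothetically, that `train.py` and `inference.py` each define a `main()` function; adjust the module and function names to whatever your scripts actually expose.

```python
"""main.py: dispatch to the training or inference script (sketch).

Assumes (hypothetically) that train.py and inference.py each define main().
"""
import argparse
import importlib

# Maps each command-line choice to the module that implements it.
COMMANDS = {"train": "train", "inference": "inference"}


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(description="Run training or inference.")
    parser.add_argument("command", choices=sorted(COMMANDS), help="which script to run")
    return parser


def main(argv=None):
    args = build_parser().parse_args(argv)
    # Import lazily so running inference does not load training-only dependencies.
    module = importlib.import_module(COMMANDS[args.command])
    return module.main()
```

Ending the file with `if __name__ == "__main__": main()` makes `python main.py train` and `python main.py inference` work from the project root.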

- Download Data from Kaggle:

  - Download the dataset from Kaggle.

  - Place the dataset in the `data/` folder.

- Install Dependencies:

  - Install the dependencies listed in `requirements.txt` within your virtual environment: `pip install -r requirements.txt`

- Run the Training Script:

  - Ensure `train.py` runs successfully in your environment.
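If `train.py` does not exist yet, a deliberately trivial placeholder is enough to check that the environment, the `data/` folder, and the file paths work before a real model is plugged in. Everything model-specific below is hypothetical: the `target` column, the "model" (just the mean of that column), and the `model/model.json` output path. It defines a `main()` function so that `main.py` can call it.

```python
"""train.py: placeholder training script (sketch, not a real model).

Hypothetical assumptions: the CSV has a numeric "target" column, and the
"model" is just that column's mean, saved as JSON for inference.py to load.
"""
import csv
import json
from pathlib import Path
from statistics import mean


def train(data_path="data/your-dataset.csv", model_dir="model") -> Path:
    with open(data_path, newline="") as f:
        targets = [float(row["target"]) for row in csv.DictReader(f)]
    if not targets:
        raise ValueError(f"no rows found in {data_path}")
    out_dir = Path(model_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    model_path = out_dir / "model.json"
    # Persist the "trained" parameters so a separate inference step can reload them.
    model_path.write_text(json.dumps({"prediction": mean(targets)}))
    return model_path


def main():
    print(f"Saved model to {train()}")
```

Adding `if __name__ == "__main__": main()` at the bottom lets you run it directly with `python train.py`.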

- Install Docker:

  - Download and install Docker.

- Create a Dockerfile:

  - Write a Dockerfile to containerize your application. A more detailed explanation of the Dockerfile can be found here.

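- Build and Run the Docker Image:

  - Build the Docker image, for example with `docker build -t your-image .` from the folder that contains the Dockerfile.

  - Run the Docker container (for example `docker run --rm your-image`) and verify the results.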

- Upload Data to S3:

  - Upload your dataset to an S3 bucket.

  - Ensure your IAM user has the `s3:PutObject` and `s3:GetObject` permissions.
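A minimal sketch for the two S3 steps above, assuming the placeholder bucket name `your-bucket-name`. `build_s3_policy` (a hypothetical helper) produces an IAM policy document that allows only `s3:PutObject` and `s3:GetObject` on objects in that bucket, which you can attach to the IAM user as an inline or customer-managed policy. `upload_dataset` is a thin wrapper around Boto3's `upload_file(Filename, Bucket, Key)`; Boto3 is imported inside it so the policy helper runs without Boto3 installed.

```python
"""Grant and use S3 access for the dataset (sketch; bucket/key names are placeholders)."""
import json


def build_s3_policy(bucket: str) -> dict:
    """IAM policy allowing s3:PutObject and s3:GetObject on every object in `bucket`."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:PutObject", "s3:GetObject"],
                # Object-level actions apply to object ARNs, hence the trailing /*.
                "Resource": f"arn:aws:s3:::{bucket}/*",
            }
        ],
    }


def upload_dataset(local_path: str, bucket: str, key: str) -> None:
    import boto3  # imported here so build_s3_policy works without boto3 installed

    boto3.client("s3").upload_file(local_path, bucket, key)


# Print the policy JSON so it can be pasted into the IAM console.
print(json.dumps(build_s3_policy("your-bucket-name"), indent=2))
```

For example, `upload_dataset("data/your-dataset.csv", "your-bucket-name", "your-dataset.csv")` uploads the local file under the same key that the download code expects.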

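- Modify Code for S3 Integration:

  - Update your code to download the data from S3 at runtime using Boto3:

    ```python
    import os
    import boto3

    s3 = boto3.client("s3")  # uses the default credential chain (e.g. `aws configure` locally)
    os.makedirs("data", exist_ok=True)  # download_file does not create missing folders
    s3.download_file("your-bucket-name", "your-dataset.csv", "data/your-dataset.csv")
    ```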

- Set Up an ECR Repository:

  - Create a repository on Amazon Elastic Container Registry (ECR).
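The repository can be created in the ECR console or with `aws ecr create-repository --repository-name your-repo`. The Boto3 sketch below does the same and is safe to rerun, because it falls back to `describe_repositories` when the repository already exists. The function name `ensure_repository` is hypothetical, and the client is passed in (for example `boto3.client("ecr", region_name="your-region")`) so the logic can be exercised without AWS access. The returned `repositoryUri` has the form `your-account-id.dkr.ecr.your-region.amazonaws.com/your-repo`, the same name the image is tagged with before pushing.

```python
"""Create the ECR repository if it does not exist yet (sketch)."""


def ensure_repository(ecr_client, name: str) -> str:
    """Return the repository URI, creating the repository on first use."""
    try:
        response = ecr_client.create_repository(repositoryName=name)
        return response["repository"]["repositoryUri"]
    except ecr_client.exceptions.RepositoryAlreadyExistsException:
        # The repository is already there: look it up instead of failing.
        response = ecr_client.describe_repositories(repositoryNames=[name])
        return response["repositories"][0]["repositoryUri"]
```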

- Push the Docker Image to ECR:

  - Authenticate Docker to your ECR registry using your IAM credentials.

  - Tag and push your Docker image to ECR:

    ```shell
    aws ecr get-login-password --region your-region | docker login --username AWS --password-stdin your-account-id.dkr.ecr.your-region.amazonaws.com
    docker tag your-image:latest your-account-id.dkr.ecr.your-region.amazonaws.com/your-repo:latest
    docker push your-account-id.dkr.ecr.your-region.amazonaws.com/your-repo:latest
    ```

- Download the Image from ECR:

  - Pull the Docker image from ECR (`docker pull your-account-id.dkr.ecr.your-region.amazonaws.com/your-repo:latest`) and run it locally to verify it.

- Deploy the Model on SageMaker:

  - Write the deployment code and deploy your model to a SageMaker endpoint.
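With a custom image, deployment comes down to three SageMaker API calls: register a model that points at the ECR image, create an endpoint configuration, and create the endpoint. The sketch below makes those calls through a Boto3 SageMaker client that you pass in (`boto3.client("sagemaker")`); the function name, the endpoint name, the `ml.m5.large` instance type, and the role ARN are all placeholders. Two requirements are easy to miss. First, SageMaker needs an IAM *role* it can assume (not the IAM user from earlier), and your user needs `iam:PassRole` on it. Second, the image must answer `GET /ping` and `POST /invocations` on port 8080, and SageMaker starts it with the argument `serve`. The sketch assumes the trained model is baked into the image; if it lives in S3 as a `model.tar.gz`, add `ModelDataUrl` to `PrimaryContainer`.

```python
"""Deploy an ECR image to a SageMaker real-time endpoint (sketch).

All names, the instance type, and the role ARN are placeholders.
"""


def deploy_endpoint(sm_client, name: str, image_uri: str, role_arn: str,
                    instance_type: str = "ml.m5.large") -> str:
    # 1. Register a model that points at the container image in ECR.
    sm_client.create_model(
        ModelName=name,
        PrimaryContainer={"Image": image_uri},
        ExecutionRoleArn=role_arn,
    )
    # 2. Describe how the model is hosted: one instance receiving all traffic.
    sm_client.create_endpoint_config(
        EndpointConfigName=name,
        ProductionVariants=[{
            "VariantName": "AllTraffic",
            "ModelName": name,
            "InitialInstanceCount": 1,
            "InstanceType": instance_type,
        }],
    )
    # 3. Start creating the endpoint; this call returns before provisioning finishes.
    sm_client.create_endpoint(EndpointName=name, EndpointConfigName=name)
    # Block until the endpoint reaches InService (the waiter raises if creation fails).
    sm_client.get_waiter("endpoint_in_service").wait(EndpointName=name)
    return name
```

Endpoints are billed while they run, so call `delete_endpoint(EndpointName=name)` on the same client when you are done testing.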

- Call the SageMaker Endpoint Locally:

  - Write code to call the SageMaker endpoint from your local environment.
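Calling the endpoint goes through the separate `sagemaker-runtime` client and its `invoke_endpoint` method. The sketch below assumes, hypothetically, that your container accepts and returns JSON; the content type and payload shape must match whatever your `/invocations` handler actually expects. The function name `predict` is illustrative.

```python
"""Call a SageMaker endpoint from a local machine (sketch)."""
import json


def predict(runtime_client, endpoint_name: str, payload: dict) -> dict:
    # JSON in and JSON out is an assumption; match your container's handler.
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Body=json.dumps(payload),
    )
    # "Body" is a streaming object; read() returns the raw bytes the container sent.
    return json.loads(response["Body"].read())
```

For example, `predict(boto3.client("sagemaker-runtime", region_name="us-east-2"), "your-endpoint", {"features": [1, 2, 3]})`, where the endpoint name and the `features` key are placeholders.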

- Test the End-to-End Pipeline:

  - Test the entire workflow, from local code execution to SageMaker inference.