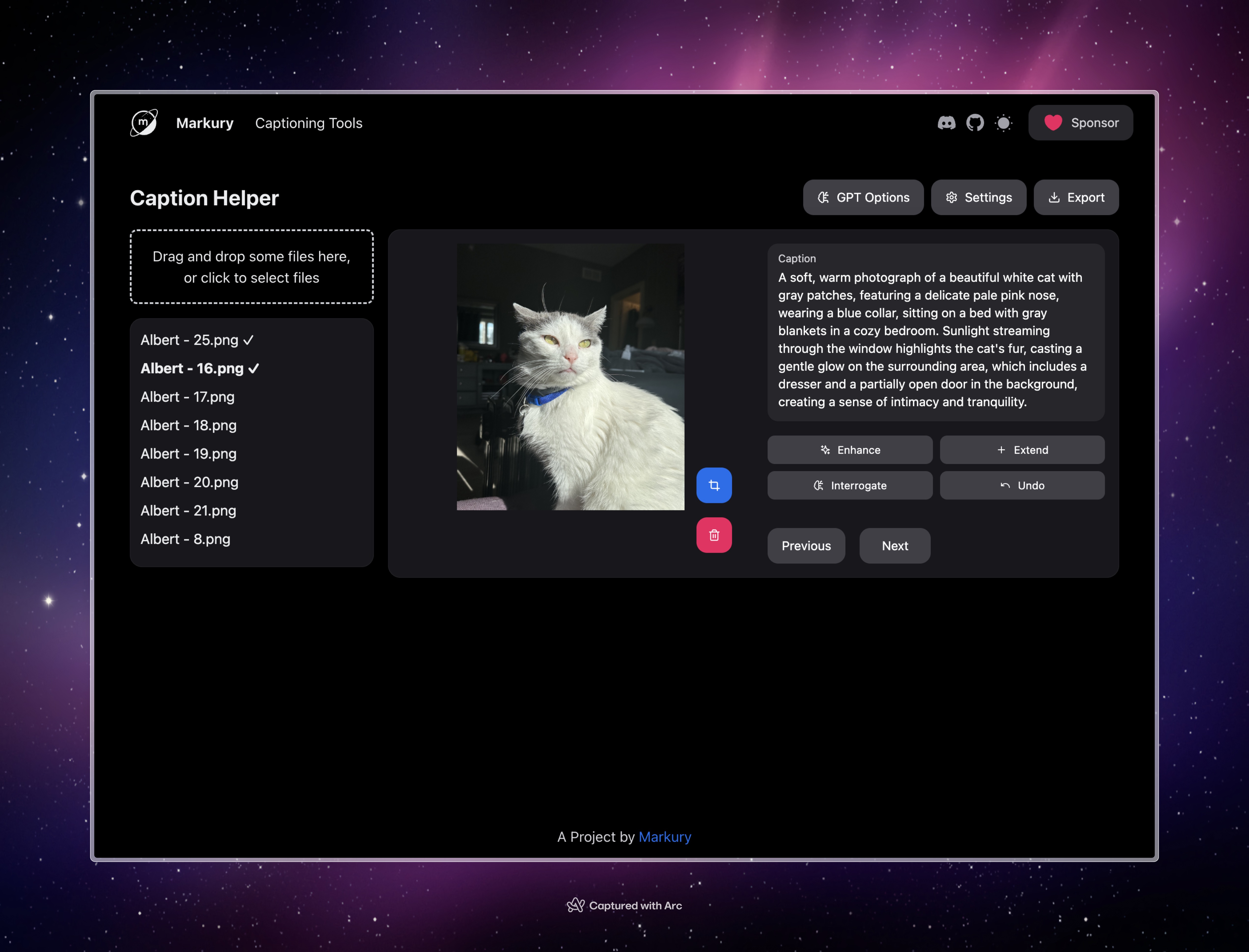

Caption Helper is a simple tool designed to assist in creating, managing, and enhancing captions for images, particularly for use in Stable Diffusion training.

- Image upload: Drag and drop or select multiple images

- Caption editing: Manually edit captions for each image

- AI-powered caption enhancement:
  - Enhance: Improve existing captions
  - Extend: Add more details to captions
  - Interrogate: Generate new captions based on image content

- Image management: Delete unwanted images

- Image cropping: Adjust image framing

- Export: Save captions (and optionally images) as a ZIP file

- Clone the project or use the live version at https://sd-caption-helper.vercel.app/.

- Click the "Settings" button to add your API keys:
  - Groq API key (for the Enhance and Extend features)
  - OpenAI API key (for the Interrogate feature)

- Drag and drop images onto the upload area, or click to select files from your device.

- Uploaded images will appear in the sidebar on the left.

- Click on an image in the sidebar to select it.

- The selected image will appear in the main view along with its caption.

- Edit the caption directly in the text box provided.

For each image, the following actions are available (Enhance, Extend, and Interrogate are AI-powered):

- Enhance: Click to improve the existing caption.

- Extend: Click to add more details to the current caption.

- Interrogate: Click to generate a new caption based on the image content.

- Delete: Remove the current image from your collection.

- Crop: Adjust the framing of the current image.

Use the navigation buttons to move between images in your collection.

Click the "GPT Options" button to set:

- Custom Token: A specific token to include in generated captions.

- Custom Instruction: Additional instructions for the AI when generating captions.

- Inherent Attributes: Attributes to avoid in generated captions.
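One way the three GPT Options could be folded into the system prompt sent to the model is sketched below. The `GptOptions` shape, the `buildSystemPrompt` name, and the prompt wording are all hypothetical; the app's actual prompt may be assembled differently.

```typescript
// Hypothetical shape for the values entered in the "GPT Options" modal.
interface GptOptions {
  customToken: string;          // e.g. a trigger word to include in every caption
  customInstruction: string;    // extra guidance appended to the prompt
  inherentAttributes: string[]; // attributes the captions should NOT mention
}

// Builds a system prompt from a base instruction plus the options,
// skipping any option that is empty or whitespace-only.
function buildSystemPrompt(base: string, opts: GptOptions): string {
  const parts: string[] = [base.trim()];

  const token = opts.customToken.trim();
  if (token) {
    parts.push(`Always include the token "${token}" in the caption.`);
  }

  const attrs = opts.inherentAttributes
    .map((a) => a.trim())
    .filter((a) => a.length > 0);
  if (attrs.length > 0) {
    parts.push(`Do not describe these attributes: ${attrs.join(", ")}.`);
  }

  const instruction = opts.customInstruction.trim();
  if (instruction) {
    parts.push(instruction);
  }

  return parts.join("\n");
}
```

Leaving inherent attributes out of captions is a common LoRA-training convention, on the idea that the trained token then absorbs traits that are always present in the subject.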

- Click the "Export" button.

- Choose whether to include images in the export.

- Optionally, choose to rename images sequentially and set a prefix.

- Click "Export" to download a ZIP file containing your captions (and images if selected).
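The sequential-rename option can be pictured as a pure mapping from original file names to numbered ones, with each caption written to a `.txt` file that shares its image's base name (the layout commonly used for Stable Diffusion training folders). The `planExportNames` helper, the three-digit zero padding, and the underscore separator are assumptions for illustration, not the app's confirmed naming scheme.

```typescript
interface ExportName {
  image: string;   // file name for the image inside the ZIP
  caption: string; // matching caption file name
}

// Splits "photo.final.JPG" into ["photo.final", ".JPG"]; names without an extension get "".
function splitExtension(name: string): [string, string] {
  const dot = name.lastIndexOf(".");
  return dot > 0 ? [name.slice(0, dot), name.slice(dot)] : [name, ""];
}

// Hypothetical helper: when `rename` is true, images become
// `${prefix}_001.ext`, `${prefix}_002.ext`, ...; otherwise original base names are kept.
// Each caption file reuses the image's base name with a .txt extension.
function planExportNames(fileNames: string[], rename: boolean, prefix = "image"): ExportName[] {
  // Pad to at least 3 digits, more if there are 1000+ files.
  const width = Math.max(3, String(fileNames.length).length);
  return fileNames.map((name, i) => {
    const [base, ext] = splitExtension(name);
    const stem = rename ? `${prefix}_${String(i + 1).padStart(width, "0")}` : base;
    return { image: `${stem}${ext}`, caption: `${stem}.txt` };
  });
}
```

Keeping original names can produce clashing caption files (for example, `cat.png` and `cat.jpg` both map to `cat.txt`), which is one practical reason to enable sequential renaming.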

This document provides technical details about the Caption Helper project, including setup instructions, architecture overview, and implementation details of key features.

The project is built with:

- Next.js 13+ (App Router)

- React

- TypeScript

- NextUI for UI components

- Tailwind CSS for styling

- react-dropzone for file uploads

- react-easy-crop for image cropping

- JSZip for creating ZIP files

- Groq API for caption enhancement and extension

- OpenAI API (GPT-4o) for image interrogation

The project is organized as follows:

```
caption-helper/
📦app
 ┣ 📂api
 ┃ ┣ 📂gpt-interrogate
 ┃ ┃ ┗ 📜route.ts
 ┃ ┣ 📂groq-enhance
 ┃ ┃ ┗ 📜route.ts
 ┃ ┗ 📂groq-extend
 ┃ ┃ ┗ 📜route.ts
 ┣ 📂blank-page
 ┃ ┣ 📜layout.tsx
 ┃ ┗ 📜page.tsx
 ┣ 📜error.tsx
 ┣ 📜layout.tsx
 ┣ 📜page.tsx
 ┗ 📜providers.tsx
📦components
 ┣ 📜CaptionEditor.tsx
 ┣ 📜ExportOptionsModal.tsx
 ┣ 📜GptOptionsModal.tsx
 ┣ 📜ImageViewer.tsx
 ┣ 📜Navigation.tsx
 ┣ 📜Settings.tsx
 ┣ 📜Sidebar.tsx
 ┣ 📜icons.tsx
 ┣ 📜navbar.tsx
 ┣ 📜primitives.ts
 ┗ 📜theme-switch.tsx
📦config
 ┣ 📜fonts.ts
 ┗ 📜site.ts
📦lib
 ┣ 📜types.ts
 ┗ 📜utils.ts
```

To run the project locally:

- Clone the repository:

  ```bash
  git clone https://github.com/markuryy/caption-helper.git
  cd caption-helper
  ```

- Install dependencies:

  ```bash
  bun i
  ```

- Create a `.env.local` file in the root directory and add your API keys:

  ```
  GROQ_API_KEY=your_groq_api_key
  OPENAI_API_KEY=your_openai_api_key
  ```

- Run the development server:

  ```bash
  bun dev
  ```

- File upload:
  - Uses `react-dropzone` for handling file uploads.
  - Implemented in `app/page.tsx` within the `onDrop` function.
  - Processes uploaded files using the `processUploadedFiles` utility function.
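The source does not show what `processUploadedFiles` does internally. As a hypothetical sketch, a helper like it might keep only image files and give each one an id and an empty caption. The `ImageItem` shape and the `toImageItems` name are assumptions; the sketch takes plain `{ name, type }` objects so it runs outside the browser, whereas the dropzone callback receives `File` objects, which carry the same two properties.

```typescript
// Minimal subset of the browser File interface used here.
interface UploadedFile {
  name: string;
  type: string; // MIME type, e.g. "image/png"
}

// Hypothetical record kept in state for each uploaded image.
interface ImageItem<F extends UploadedFile = UploadedFile> {
  id: string;
  file: F;
  caption: string;
}

// Keeps image files only and assigns ids starting at `nextId`.
// The caller is assumed to keep a counter that only ever increases,
// so ids stay unique even after images are deleted.
function toImageItems<F extends UploadedFile>(files: F[], nextId: number): ImageItem<F>[] {
  return files
    .filter((f) => f.type.startsWith("image/"))
    .map((file, i) => ({ id: `img-${nextId + i}`, file, caption: "" }));
}
```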

- Caption editing:
  - Implemented in the `CaptionEditor` component.
  - Uses a controlled input for real-time updates.
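With a controlled input, the text box's `value` comes from React state and every keystroke goes through an `onChange` handler that writes the new text back. The state update itself can be an immutable replacement of a single item, as in the hypothetical `updateCaption` helper below; the `ImageItem` shape is assumed, not taken from `lib/types.ts`.

```typescript
interface ImageItem {
  id: string;
  caption: string;
}

// Returns a new array in which only the matching item gets the new caption.
// Untouched items are reused as-is; the edited item is a new object,
// so React sees a changed array and re-renders.
function updateCaption(images: ImageItem[], id: string, caption: string): ImageItem[] {
  return images.map((img) => (img.id === id ? { ...img, caption } : img));
}

// In a component this would typically be wired up as (not runnable here):
//   <textarea
//     value={current.caption}
//     onChange={(e) => setImages((prev) => updateCaption(prev, current.id, e.target.value))}
//   />
```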

- AI caption actions (Enhance, Extend, Interrogate):
  - Implemented in `app/page.tsx` within the `handleCaptionAction` function.
  - Uses a separate API route for each action:
    - `app/api/groq-enhance/route.ts` for enhancing captions
    - `app/api/groq-extend/route.ts` for extending captions
    - `app/api/gpt-interrogate/route.ts` for generating captions from images
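In the App Router, a handler in `app/api/groq-enhance/route.ts` is served at `/api/groq-enhance`, so a dispatcher like `handleCaptionAction` can map each action to its URL. The sketch below shows one way to do that; the request fields (`caption`, `image`) and the `{ caption }` response shape are assumptions about the routes' contract, not confirmed details.

```typescript
type CaptionAction = "enhance" | "extend" | "interrogate";

// Route paths follow from the files under app/api/.
const ACTION_ROUTES: Record<CaptionAction, string> = {
  enhance: "/api/groq-enhance",
  extend: "/api/groq-extend",
  interrogate: "/api/gpt-interrogate",
};

// Hypothetical request payload.
interface CaptionRequest {
  caption: string;
  image?: string; // base64 data URL, only needed for "interrogate"
}

interface JsonRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

// Picks the route for the action and serializes the payload as JSON.
function buildCaptionRequest(action: CaptionAction, payload: CaptionRequest): JsonRequest {
  if (action === "interrogate" && !payload.image) {
    throw new Error("interrogate requires an image");
  }
  return {
    url: ACTION_ROUTES[action],
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(payload),
  };
}

// Sends the request and returns the new caption
// (assumes the route replies with { caption }).
async function runCaptionAction(action: CaptionAction, payload: CaptionRequest): Promise<string> {
  const { url, ...init } = buildCaptionRequest(action, payload);
  const res = await fetch(url, init);
  if (!res.ok) throw new Error(`${action} failed with status ${res.status}`);
  const data = (await res.json()) as { caption: string };
  return data.caption;
}
```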

- Image cropping:
  - Uses the `react-easy-crop` library.
  - Implemented in the `ImageViewer` component.

- Export:
  - Implemented in `app/page.tsx` within the `handleExport` function.
  - Uses `JSZip` to create ZIP files containing captions and, optionally, images.
  - Export options are managed through the `ExportOptionsModal` component.
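Inside an export function, each caption becomes a text entry in the archive and, if the user opted in, each image is added alongside it. The sketch below writes against a minimal `ZipWriter` interface so it can be tested without JSZip; JSZip's `file(name, data)` method has this call shape, so a JSZip instance should satisfy it, and `generateAsync({ type: "blob" })` would then produce the download. The `ExportEntry` shape and `addExportEntries` name are hypothetical.

```typescript
// The one JSZip operation used here: zip.file(name, data) adds an entry.
interface ZipWriter {
  file(name: string, data: string | Uint8Array): unknown;
}

interface ExportEntry {
  imageName: string;
  captionName: string;
  caption: string;
  imageData: Uint8Array; // raw image bytes
}

// Adds one caption file per entry, plus the image bytes when includeImages is true.
// Returns the number of files written.
function addExportEntries(zip: ZipWriter, entries: ExportEntry[], includeImages: boolean): number {
  let count = 0;
  for (const e of entries) {
    zip.file(e.captionName, e.caption);
    count++;
    if (includeImages) {
      zip.file(e.imageName, e.imageData);
      count++;
    }
  }
  return count;
}

// With JSZip (not run here):
//   const zip = new JSZip();
//   addExportEntries(zip, entries, includeImages);
//   const blob = await zip.generateAsync({ type: "blob" });
```

Typing against a small interface rather than the library class keeps the export logic testable with a fake writer.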

- State management:
  - Uses React's `useState` hook for local state.
  - Global state (such as API keys) is stored in localStorage and managed through the `Settings` component.
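Persisting the API keys might look like the sketch below. The storage key name and the `Settings` fields are assumptions; the functions accept any object with `getItem`/`setItem` (the Web Storage methods that `window.localStorage` provides) so they can be exercised outside a browser.

```typescript
// The two Web Storage methods used here; window.localStorage provides both.
interface KeyValueStore {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
}

interface Settings {
  groqApiKey: string;
  openaiApiKey: string;
}

const STORAGE_KEY = "caption-helper-settings"; // hypothetical key name
const EMPTY: Settings = { groqApiKey: "", openaiApiKey: "" };

// Reads saved settings, falling back to empty values when nothing is stored
// or the stored JSON is unreadable.
function loadSettings(store: KeyValueStore): Settings {
  const raw = store.getItem(STORAGE_KEY);
  if (!raw) return { ...EMPTY };
  try {
    // Merge with defaults so fields missing from older saves still get a value.
    return { ...EMPTY, ...(JSON.parse(raw) as Partial<Settings>) };
  } catch {
    return { ...EMPTY };
  }
}

function saveSettings(store: KeyValueStore, settings: Settings): void {
  store.setItem(STORAGE_KEY, JSON.stringify(settings));
}
```

In the browser this would be called as `loadSettings(window.localStorage)`. Values in localStorage are readable by any script running on the page, so this is convenient storage rather than secret storage.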

- Groq API integration:
  - Used for caption enhancement and extension.
  - API calls are made from the server-side API routes to protect API keys.
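A minimal sketch of what `app/api/groq-enhance/route.ts` could look like is below. It assumes the key is read from `GROQ_API_KEY` (as set in `.env.local`) and calls Groq's OpenAI-compatible chat completions endpoint with `fetch`. The model id, the prompt wording, and the `{ caption }` request and response shape are placeholders, not the project's actual values. Only `POST` is exported, since Next.js route files are expected to export HTTP method handlers.

```typescript
// Sketch of app/api/groq-enhance/route.ts (Next.js App Router).
const GROQ_URL = "https://api.groq.com/openai/v1/chat/completions";
const MODEL = "llama-3.1-8b-instant"; // placeholder model id; check Groq's current model list

// Small helper for JSON responses.
function json(body: unknown, status = 200): Response {
  return new Response(JSON.stringify(body), {
    status,
    headers: { "Content-Type": "application/json" },
  });
}

// Builds an OpenAI-style chat completion body for the "enhance" action.
function buildEnhanceBody(caption: string): string {
  return JSON.stringify({
    model: MODEL,
    messages: [
      {
        role: "system",
        content: "Improve this image caption for Stable Diffusion training. Reply with the caption only.",
      },
      { role: "user", content: caption },
    ],
  });
}

// Handles POST /api/groq-enhance with a JSON body of { caption }.
// The key is read on the server, so it is not sent to the browser.
export async function POST(req: Request): Promise<Response> {
  const apiKey = process.env.GROQ_API_KEY;
  if (!apiKey) return json({ error: "GROQ_API_KEY is not set" }, 500);

  const { caption } = (await req.json()) as { caption?: string };
  if (!caption) return json({ error: "caption is required" }, 400);

  const upstream = await fetch(GROQ_URL, {
    method: "POST",
    headers: { Authorization: `Bearer ${apiKey}`, "Content-Type": "application/json" },
    body: buildEnhanceBody(caption),
  });
  if (!upstream.ok) return json({ error: `Groq request failed (${upstream.status})` }, 502);

  const data = (await upstream.json()) as { choices: { message: { content: string } }[] };
  return json({ caption: data.choices[0]?.message.content.trim() ?? "" });
}
```

An extend route built this way would differ mainly in its system prompt.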

- OpenAI API integration:
  - Used for image interrogation (generating captions from images).
  - Implemented in `app/api/gpt-interrogate/route.ts`.
  - Uses the GPT-4o model, which accepts image input.
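For interrogation, the image has to travel inside the request. OpenAI's Chat Completions API accepts an `image_url` content part whose URL can be a base64 data URL, so a route handler might build its request body as below. The `buildInterrogateBody` name and the prompt text are hypothetical; the message structure and the `gpt-4o` model id follow OpenAI's documented API.

```typescript
type ContentPart =
  | { type: "text"; text: string }
  | { type: "image_url"; image_url: { url: string } };

interface ChatMessage {
  role: "system" | "user";
  content: string | ContentPart[];
}

// Builds a Chat Completions request body that asks GPT-4o to caption one image.
// This sketch only accepts data URLs ("data:image/jpeg;base64,..."),
// since uploaded images live in the browser rather than at a public URL.
function buildInterrogateBody(imageDataUrl: string, systemPrompt: string): { model: string; messages: ChatMessage[] } {
  if (!imageDataUrl.startsWith("data:image/")) {
    throw new Error("expected a base64 image data URL");
  }
  return {
    model: "gpt-4o",
    messages: [
      { role: "system", content: systemPrompt },
      {
        role: "user",
        content: [
          { type: "text", text: "Write a caption for this image." },
          { type: "image_url", image_url: { url: imageDataUrl } },
        ],
      },
    ],
  };
}

// The route would POST JSON.stringify(body) to https://api.openai.com/v1/chat/completions
// with the header `Authorization: Bearer <OPENAI_API_KEY>` and read
// choices[0].message.content from the response.
```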

- Image downscaling:
  - Client-side image downscaling is implemented in `lib/utils.ts` by the `downscaleImage` function.
  - This reduces large images in size before they are sent to the GPT-4o API.
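The body of `downscaleImage` is not shown in the source. The core of any such function is computing a target size that fits within a maximum dimension while keeping the aspect ratio; the canvas drawing step is browser-only and omitted here. The `fitWithin` name and the 1024 px limit in the test are assumptions.

```typescript
interface Size {
  width: number;
  height: number;
}

// Scales (width, height) down so the longer side is at most maxSide,
// preserving aspect ratio. Images that already fit are returned unchanged
// (never upscaled).
function fitWithin(width: number, height: number, maxSide: number): Size {
  if (width <= 0 || height <= 0 || maxSide <= 0) {
    throw new Error("dimensions must be positive");
  }
  const scale = Math.min(1, maxSide / Math.max(width, height));
  return {
    width: Math.max(1, Math.round(width * scale)),
    height: Math.max(1, Math.round(height * scale)),
  };
}
```

In the browser, the resulting size would be used for a canvas of that size and passed to `ctx.drawImage(img, 0, 0, width, height)` before encoding the canvas as a data URL.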

- Styling:
  - Uses a combination of NextUI components and Tailwind CSS.
  - Global styles are defined in `styles/globals.css`.

- Consider implementing server-side session storage for better state management across page reloads.

- Explore options for batch processing of images for more efficient handling of large collections.

- Implement user authentication to allow for saved projects and user-specific settings.

- Fork the repository.

- Create a new branch for your feature or bug fix.

- Make your changes and commit them with descriptive commit messages.

- Push your changes to your fork.

- Submit a pull request to the main repository.

Please ensure that your code follows the existing style conventions and includes appropriate tests.

- Use clear, descriptive captions that accurately represent the image content.

- Include relevant details but avoid overly specific or unique identifiers.

- Experiment with the AI enhancement features to generate diverse captions.

- Use the custom token and instruction features to tailor captions to your specific training needs.

For issues, feature requests, or contributions, please visit the GitHub repository.