This project uses FastAPI to create an endpoint that returns an image generated from a text prompt using Stability AI's Stable Diffusion model. If you run it on your local machine, it will use your NVIDIA GPU with CUDA if one is available and fall back to your CPU otherwise (which takes a lot longer). Alternatively, you can run it on an AWS EC2 GPU instance using the instructions below. If you would rather upload images to an S3 bucket and return the image URL instead of the raw image bytes, check out the s3 branch.

This code has been tested with Python 3.10 and diffusers 0.21.4.
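If you want to compare your environment against the tested versions, a small check like the one below may help. It is only a sketch: the helper name `environment_report` is made up for this example and is not part of the repo.

```python
import sys
from importlib import metadata


def environment_report(package: str = "diffusers") -> dict:
    """Report the running Python version and the installed version of `package`.

    The package version is None when the package is not installed.
    """
    try:
        package_version = metadata.version(package)
    except metadata.PackageNotFoundError:
        package_version = None
    python_version = f"{sys.version_info.major}.{sys.version_info.minor}"
    return {"python": python_version, package: package_version}


if __name__ == "__main__":
    # Compare the output with the tested versions (Python 3.10, diffusers 0.21.4).
    print(environment_report())
```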

-

Install requirements.txt

pip install -r requirements.txt

-

Start up the uvicorn server using

uvicorn main:app

-

Run the request example using

python request-example.py
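For a rough idea of what such a request involves, here is a minimal sketch that uses only the standard library. The `/generate` path and the `prompt` query parameter are assumptions made for illustration; check `request-example.py` and `main.py` for the route and parameters the project actually uses.

```python
from urllib.parse import urlencode
from urllib.request import urlopen


def build_url(base_url: str, prompt: str, path: str = "/generate") -> str:
    """Build the request URL. The path and the 'prompt' parameter are hypothetical."""
    return f"{base_url.rstrip('/')}{path}?{urlencode({'prompt': prompt})}"


def fetch_image(url: str, out_path: str, timeout: float = 300.0) -> int:
    """Download the raw image bytes, write them to out_path, return the byte count."""
    with urlopen(url, timeout=timeout) as response:
        data = response.read()
    with open(out_path, "wb") as f:
        f.write(data)
    return len(data)


if __name__ == "__main__":
    url = build_url("http://127.0.0.1:8000", "a watercolor painting of a lighthouse")
    print(url)
    # Uncomment once the uvicorn server is running:
    # fetch_image(url, "output.png")
```

The generous timeout is deliberate, since generation on a CPU can take a long time.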

-

Launch an AWS EC2 instance. I used a g4dn.xlarge instance with Ubuntu 22.04 since it is the most basic GPU instance available. Create a key pair and keep track of the .pem file that is downloaded when you launch the instance. Under network settings, allow “HTTP traffic from the internet” so we can use the instance as a public endpoint. Lastly, make sure to increase the EBS volume size to 30 GiB (the maximum free amount), since we will need more than the default 8 GiB.

-

Once the instance has been created, press Connect to your instance. From there you can copy the example ssh command and paste it into your terminal to ssh into your instance. (You may have to change the .pem file path to match yours.)

-

Once you are in the instance, refresh Ubuntu’s package lists

sudo apt-get update

-

Next, we will set up Miniconda3 for Python.

Download and install

mkdir -p ~/miniconda3
wget https://repo.anaconda.com/miniconda/Miniconda3-latest-Linux-x86_64.sh -O ~/miniconda3/miniconda.sh
bash ~/miniconda3/miniconda.sh -b -u -p ~/miniconda3
rm -rf ~/miniconda3/miniconda.sh

Initialize

~/miniconda3/bin/conda init bash

Restart bash to activate the base conda environment

bash

Stop conda from activating the base environment by default on bash start-up

conda config --set auto_activate_base false

Restart bash again without conda

bash

-

Clone the repo

git clone https://github.com/martin-bartolo/stablediffusion-fastapi.git
cd stablediffusion-fastapi/

-

Create a new conda environment with Python 3.10

conda create --name stable-diffusion python==3.10
conda activate stable-diffusion

-

(Optional) Set our TMPDIR to a directory on the AWS instance store volume. Pointing TMPDIR there helps our installs run smoothly without running out of storage or RAM. This storage is lost when we stop and restart the instance, which is fine for temporary files.

First we must mount the instance volume. List the available block devices.

sudo fdisk -l

Check what your instance volume is called. For me it was /dev/nvme1n1. Make sure the directory that will hold the mounted volume exists (-p avoids an error if /mnt is already there).

sudo mkdir -p /mnt

Create a filesystem on the volume (remember to use the right volume name)

sudo mkfs.ext4 /dev/nvme1n1

Mount the volume

sudo mount -t ext4 /dev/nvme1n1 /mnt

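Create a directory called tmp inside the mount, make it writable, and export TMPDIR so that pip and other child processes pick it up

sudo mkdir -p /mnt/tmp
sudo chmod 1777 /mnt/tmp
export TMPDIR=/mnt/tmp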
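To check that the setting took effect, you can ask Python which temporary directory it will use. This is a sketch under the assumption that the tools you run go through Python's `tempfile` module; `temp_dir_report` is a made-up helper name. Note that `tempfile` silently falls back to another location if the TMPDIR directory does not exist or is not writable, so the reported path is worth a look.

```python
import os
import shutil
import tempfile


def temp_dir_report() -> dict:
    """Return the directory Python uses for temp files and its free space in GiB."""
    tempfile.tempdir = None  # drop the cached value so TMPDIR is read again
    path = tempfile.gettempdir()
    free_gib = shutil.disk_usage(path).free / 2**30
    return {"path": path, "free_gib": round(free_gib, 1)}


if __name__ == "__main__":
    print("TMPDIR is set to:", os.environ.get("TMPDIR"))
    print(temp_dir_report())
```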

-

Install the package requirements

pip install --upgrade pip
pip install -r requirements.txt

-

Now that our repo is set up we can test the endpoint locally. This will also download the model checkpoints.

uvicorn main:app

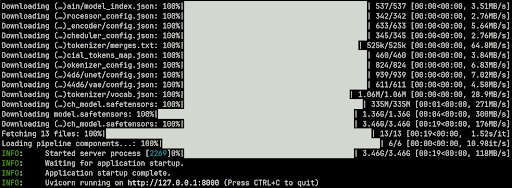

You should see something like this

Stop the server with Ctrl + C

-

Install CUDA drivers so that we can use the GPU

sudo apt-get install linux-headers-$(uname -r)
distribution=$(. /etc/os-release;echo $ID$VERSION_ID | sed -e 's/\.//g')
wget https://developer.download.nvidia.com/compute/cuda/repos/$distribution/x86_64/cuda-keyring_1.0-1_all.deb
sudo dpkg -i cuda-keyring_1.0-1_all.deb
sudo apt-get update
sudo apt-get -y install cuda-drivers

Check that the install was successful

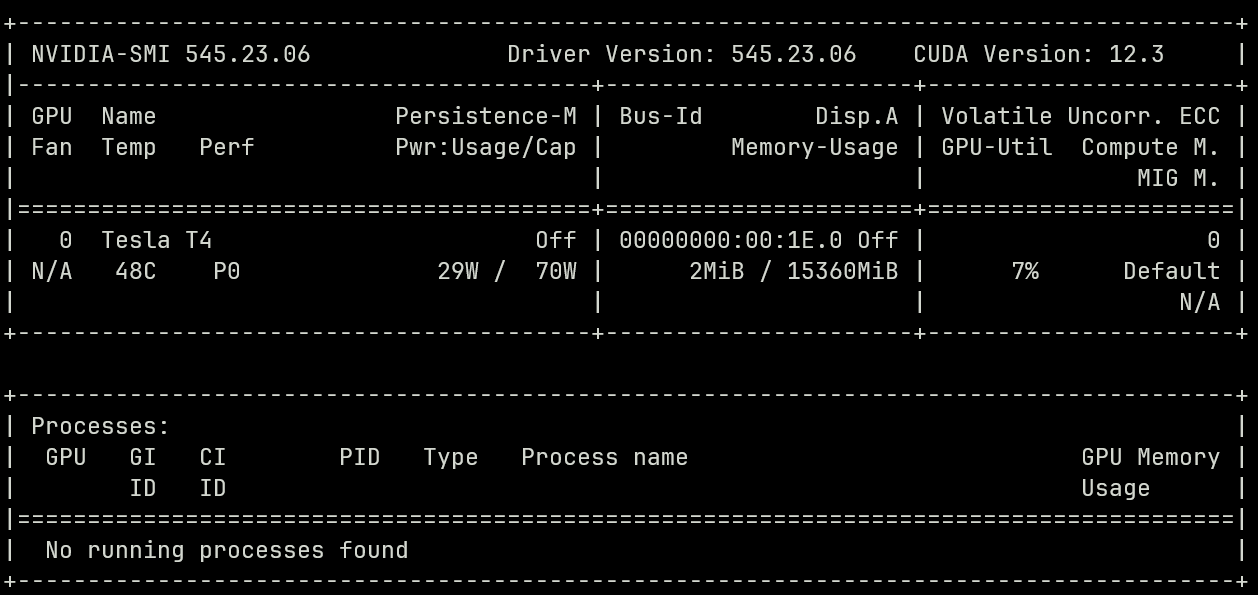

nvidia-smi

You should see something like this

Set the environment variables so that PyTorch knows where to look (change the CUDA version in both paths to match what you see in nvidia-smi)

export PATH=/usr/local/cuda-12.3/bin${PATH:+:${PATH}}
export LD_LIBRARY_PATH=/usr/local/cuda-12.3/lib64/${LD_LIBRARY_PATH:+:${LD_LIBRARY_PATH}}

Make sure it worked by checking available GPUs using PyTorch

python
import torch
print(torch.cuda.is_available())

If the output is True then we can now use the GPU. Exit the python shell with Ctrl + D
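The same check can be wrapped in a small helper that picks a device and falls back to the CPU. This is a sketch only: `pick_device` is a hypothetical name, and the device selection in `main.py` may be written differently.

```python
import importlib.util


def pick_device() -> str:
    """Return 'cuda' if PyTorch is installed and sees a GPU, otherwise 'cpu'."""
    if importlib.util.find_spec("torch") is None:
        return "cpu"  # PyTorch is not installed in this environment
    import torch

    return "cuda" if torch.cuda.is_available() else "cpu"


if __name__ == "__main__":
    print(f"Inference would run on: {pick_device()}")
```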

-

Now let’s make the API publicly accessible. First, install nginx

sudo apt install nginx

Create a new file for the server block using

cd /etc/nginx/sites-enabled/
sudo nano stablediffusion

Inside the file, paste the following (replace the server_name value with the Public IP of your AWS instance)

server {
    listen 80;
    server_name 18.116.199.161;
    location / {
        proxy_pass http://127.0.0.1:8000;
    }
}

This will serve whatever we have running on port 8000 of our AWS instance's localhost at its public IP.

Save the file with Ctrl + X, press Y to confirm the changes, and then Enter to confirm the name.

Restart nginx

sudo service nginx restart
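Since the server block has to be edited whenever the public IP changes, it can be convenient to generate it. The sketch below renders the same block for a given IP and rejects values that are not valid IP addresses. `render_server_block` is a made-up helper, and 203.0.113.10 is a documentation-only placeholder address.

```python
import ipaddress


def render_server_block(public_ip: str, upstream_port: int = 8000) -> str:
    """Render an nginx server block that proxies port 80 to a local port."""
    ipaddress.ip_address(public_ip)  # raises ValueError if this is not an IP address
    return (
        "server {\n"
        "    listen 80;\n"
        f"    server_name {public_ip};\n"
        "    location / {\n"
        f"        proxy_pass http://127.0.0.1:{upstream_port};\n"
        "    }\n"
        "}\n"
    )


if __name__ == "__main__":
    # Placeholder IP; use the Public IP of your own instance.
    print(render_server_block("203.0.113.10"))
```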

-

We can now start the server again. Go back to the repository directory

cd ~/stablediffusion-fastapi

Start the server

uvicorn main:app

-

To confirm that everything has worked, go to http://publicip (where publicip is the Public IP of your AWS instance) in your browser and make sure that you see {"message": "Hello World"}. nginx listens on port 80 and forwards to uvicorn on port 8000, so no port number is needed in the URL.
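The same check can be scripted instead of done in the browser. Below is a minimal sketch using only the standard library; the helper names are made up for this example, and the expected body is the Hello World message mentioned above.

```python
import json
from urllib.request import urlopen


def is_hello_world(body: bytes) -> bool:
    """True if body is exactly the JSON object {"message": "Hello World"}."""
    try:
        return json.loads(body) == {"message": "Hello World"}
    except ValueError:  # not valid JSON, e.g. an nginx error page
        return False


def check_root(base_url: str, timeout: float = 10.0) -> bool:
    """Fetch the root URL and check that the API answers as expected."""
    with urlopen(base_url, timeout=timeout) as response:
        return response.status == 200 and is_hello_world(response.read())


if __name__ == "__main__":
    print(is_hello_world(b'{"message": "Hello World"}'))
    # Replace the placeholder with your instance's Public IP, then uncomment:
    # print(check_root("http://203.0.113.10"))
```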

Congrats! Everything is set up. You can open request-example.py on your local machine, change the IP in the URL and the prompt, and run the script to generate images using Stable Diffusion!

To avoid having to ssh into the instance and start the uvicorn server manually, let's create a service that will start it whenever we start up the instance.

-

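Allocate an Elastic IP and associate it with your instance, so that the public IP no longer changes each time the instance is stopped and started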

-

Change the nginx server IP

Start your instance and ssh in

Open the nginx server file

cd /etc/nginx/sites-enabled/
sudo nano stablediffusion

Inside the file, change the server_name IP to your new Elastic IP

Save the file with Ctrl + X, press Y to confirm the changes, and then Enter to confirm the name.

Restart nginx

sudo service nginx restart

-

Create a new start-up service to run the uvicorn server

Create the service file

cd /etc/systemd/system/
sudo nano run_server.service

Paste the following inside the file (change any paths which are different for you)

[Unit]
Description=Run Uvicorn Server
After=network.target

[Service]
User=ubuntu
WorkingDirectory=/home/ubuntu/stablediffusion-fastapi
ExecStart=/home/ubuntu/miniconda3/envs/stable-diffusion/bin/uvicorn main:app
Restart=always

[Install]
WantedBy=multi-user.target

Save the file with Ctrl + X, press Y to confirm the changes, and then Enter to confirm the name.

Reload the systemd manager configuration

sudo systemctl daemon-reload

Enable the service on start-up

sudo systemctl enable run_server.service

-
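If your paths differ from the ones in the service file, one way to find them is to run a short script from the repository directory with the conda environment activated. This is a sketch: `render_unit` is a hypothetical helper, and the fallback values are the paths used in this guide.

```python
import shutil


def render_unit(user: str, workdir: str, uvicorn_path: str) -> str:
    """Render the run_server.service unit file for the given user and paths."""
    return "\n".join([
        "[Unit]",
        "Description=Run Uvicorn Server",
        "After=network.target",
        "",
        "[Service]",
        f"User={user}",
        f"WorkingDirectory={workdir}",
        f"ExecStart={uvicorn_path} main:app",
        "Restart=always",
        "",
        "[Install]",
        "WantedBy=multi-user.target",
        "",
    ])


if __name__ == "__main__":
    # shutil.which finds uvicorn on PATH, i.e. inside the active conda environment.
    uvicorn_path = shutil.which("uvicorn") or (
        "/home/ubuntu/miniconda3/envs/stable-diffusion/bin/uvicorn"
    )
    print(render_unit("ubuntu", "/home/ubuntu/stablediffusion-fastapi", uvicorn_path))
```

systemd needs an absolute path in ExecStart because the service does not run inside an activated conda shell.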

The service will now run every time you start the instance. Remember to give it a minute or two to set up the server and load the model pipeline before it will work.
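Rather than guessing how long to wait, a client can poll until the server answers. The sketch below is one way to do that with the standard library; the function names, the 180 second timeout, and the 5 second interval are all assumptions chosen for illustration.

```python
import time
from typing import Callable
from urllib.error import URLError
from urllib.request import urlopen


def wait_until_ready(
    probe: Callable[[], bool],
    timeout: float = 180.0,
    interval: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> bool:
    """Call probe() until it returns True or `timeout` seconds have passed."""
    deadline = clock() + timeout
    while True:
        if probe():
            return True
        if clock() >= deadline:
            return False
        sleep(interval)


def http_probe(url: str) -> Callable[[], bool]:
    """Build a probe that returns True once `url` answers with HTTP 200."""
    def probe() -> bool:
        try:
            with urlopen(url, timeout=5) as response:
                return response.status == 200
        except (URLError, OSError):
            # Connection refused, timeout, or an error status such as 502.
            return False
    return probe
```

For example, `wait_until_ready(http_probe("http://203.0.113.10"))` (with the placeholder replaced by your Elastic IP) returns True as soon as the API responds and False if it is still unreachable after the timeout.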