```bibtex
@INPROCEEDINGS{9674611,
  author={Sun, Fanglei and Tao, Qian and Hu, Jianqiao and Liu, Jieqiong},
  booktitle={2021 7th International Conference on Computer and Communications (ICCC)},
  title={Composite Evolutionary GAN for Natural Language Generation with Temper Control},
  year={2021},
  volume={},
  number={},
  pages={1710-1714},
  doi={10.1109/ICCC54389.2021.9674611}}
```

- PyTorch >= 1.1.0

- Python 3.6

- Numpy 1.14.5

- CUDA 7.5+ (For GPU)

- nltk 3.4

- tqdm 4.32.1

- KenLM (https://github.com/kpu/kenlm)

To install, run `pip install -r requirements.txt`. In case of CUDA problems, consult the official PyTorch Get Started guide.

- KenLM installation:
  - Download the stable release and unzip it: http://kheafield.com/code/kenlm.tar.gz
  - Requires Boost >= 1.42.0 and bjam:
    - Ubuntu: `sudo apt-get install libboost-all-dev`
    - Mac: `brew install boost; brew install bjam`
  - Run within the kenlm directory:

    ```bash
    mkdir -p build
    cd build
    cmake ..
    make -j 4
    ```

  - `pip install https://github.com/kpu/kenlm/archive/master.zip`
  - For more information on KenLM see: https://github.com/kpu/kenlm and http://kheafield.com/code/kenlm/

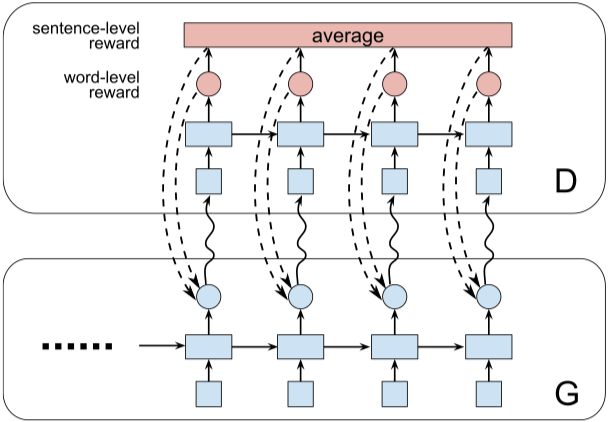

- SeqGAN - SeqGAN: Sequence Generative Adversarial Nets with Policy Gradient

- LeakGAN - Long Text Generation via Adversarial Training with Leaked Information

- MaliGAN - Maximum-Likelihood Augmented Discrete Generative Adversarial Networks

- JSDGAN - Adversarial Discrete Sequence Generation without Explicit Neural Networks as Discriminators

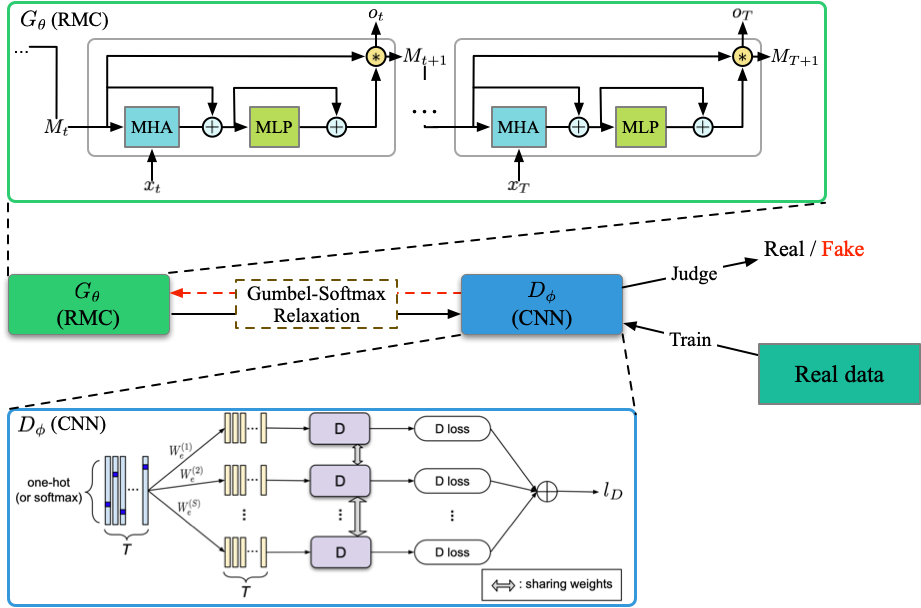

- RelGAN - RelGAN: Relational Generative Adversarial Networks for Text Generation

- DPGAN - DP-GAN: Diversity-Promoting Generative Adversarial Network for Generating Informative and Diversified Text

- DGSAN - DGSAN: Discrete Generative Self-Adversarial Network

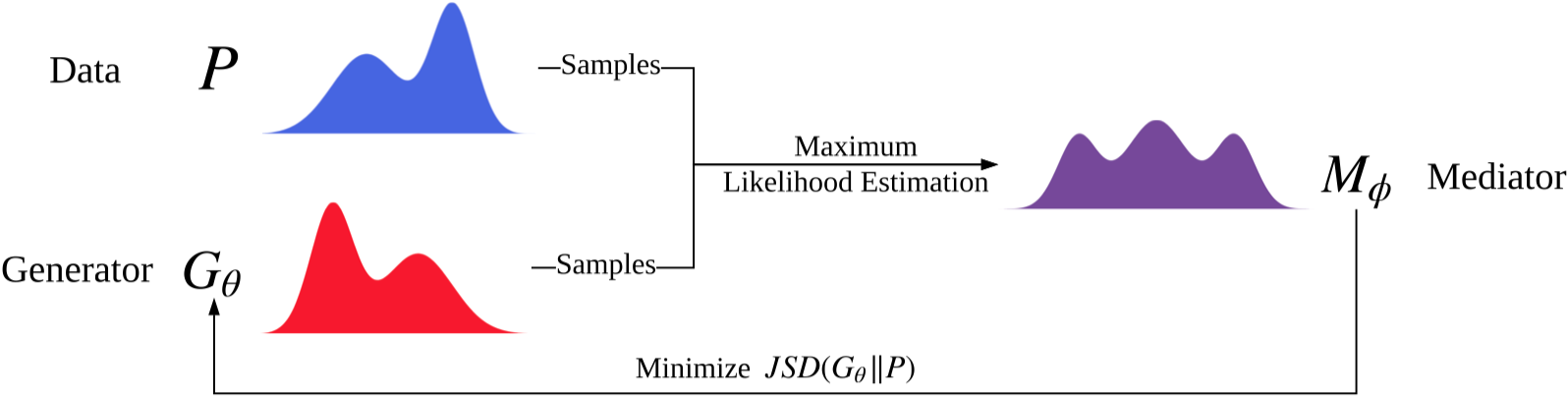

- CoT - CoT: Cooperative Training for Generative Modeling of Discrete Data

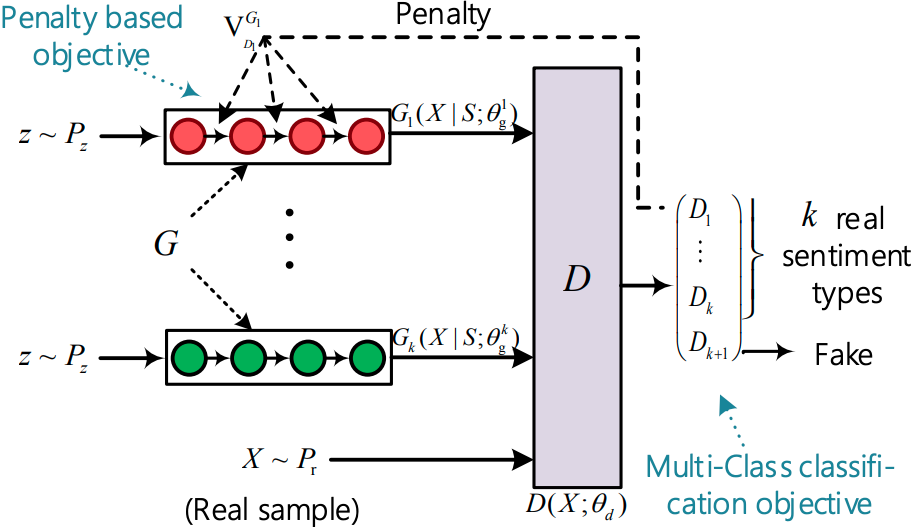

- SentiGAN - SentiGAN: Generating Sentimental Texts via Mixture Adversarial Networks

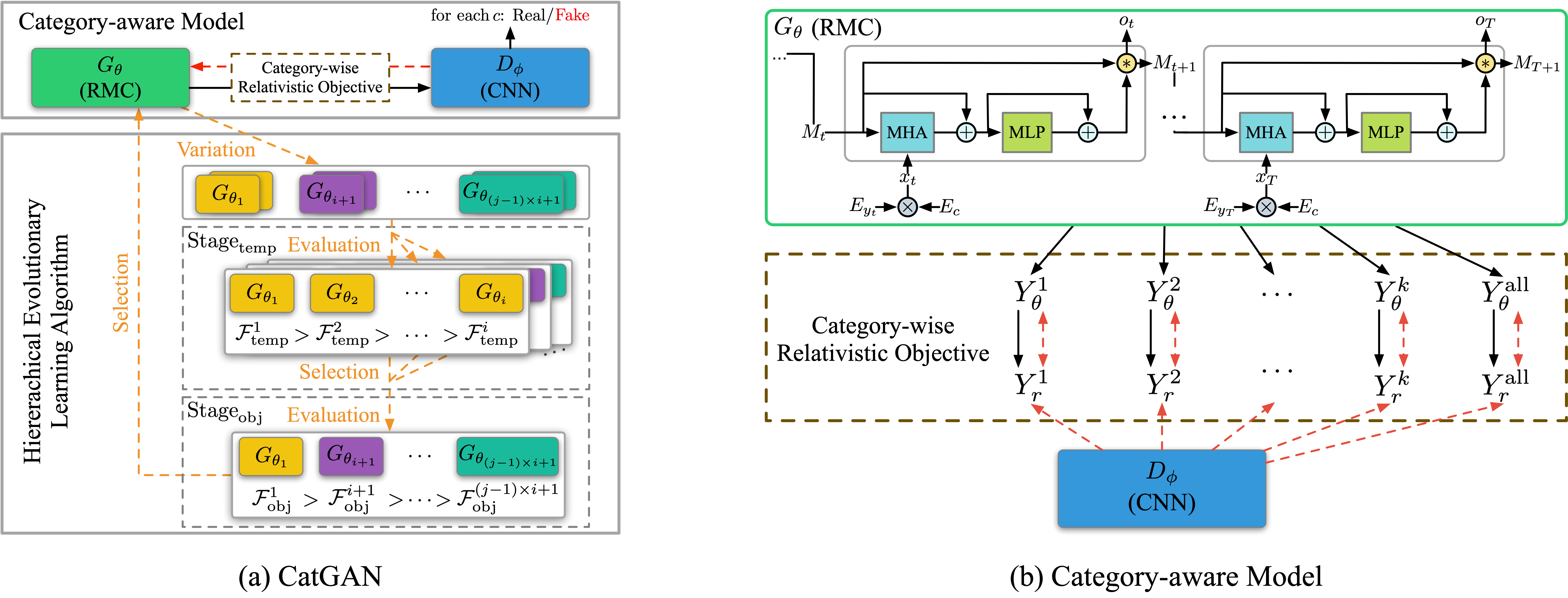

- CatGAN (ours) - CatGAN: Category-aware Generative Adversarial Networks with Hierarchical Evolutionary Learning for Category Text Generation

- Get Started

  ```bash
  git clone https://github.com/williamSYSU/TextGAN-PyTorch.git
  cd TextGAN-PyTorch
  ```

- For real data experiments, all datasets (Image COCO, EMNLP News, Movie Review, Amazon Review) can be downloaded from here.

- Run with a specific model

  ```bash
  cd run
  python3 run_[model_name].py 0 0  # The first 0 is job_id, the second 0 is gpu_id

  # For example
  python3 run_seqgan.py 0 0
  ```
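The two positional arguments in the run command above could be read inside a run script roughly as follows. This is a minimal sketch assuming plain `sys.argv`-style parsing with fallback defaults; the helper name `parse_job_and_gpu` and the default values are hypothetical, and the actual `run_[model_name].py` files may handle arguments differently.

```python
def parse_job_and_gpu(argv, default_job_id=0, default_gpu_id=0):
    """Return (job_id, gpu_id) from an argv list such as ['run_seqgan.py', '0', '0'].

    Hypothetical helper: the first positional argument is the job id and the
    second is the GPU id; both fall back to defaults when not supplied.
    """
    if len(argv) > 2:
        return int(argv[1]), int(argv[2])
    return default_job_id, default_gpu_id
```

In a script this would be called as `job_id, gpu_id = parse_job_and_gpu(sys.argv)`; keeping the parsing in a function that takes the list explicitly makes it easy to test without launching the script.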

- Instructor

  For each model, the entire running process is defined in `instructor/oracle_data/seqgan_instructor.py` (taking SeqGAN in the synthetic data experiment as an example). Some basic functions like `init_model()` and `optimize()` are defined in the base class `BasicInstructor` in `instructor.py`. If you want to add a new GAN-based text generation model, create a new instructor under `instructor/oracle_data` and define the training process for the model.
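The instructor layout follows a template-method shape: shared helpers live in the base class and each model's instructor supplies its own training process. The sketch below shows that shape in plain Python so it runs without PyTorch. `BasicInstructorSketch`, `MyGANInstructor`, `_run`, `run`, and the step names are hypothetical stand-ins, not the repository's actual classes or signatures; a real `optimize()` would zero gradients, backpropagate, and step an optimizer.

```python
class BasicInstructorSketch:
    """Hypothetical stand-in for a shared instructor base class."""

    def __init__(self):
        self.log = []  # records which steps ran, in order
        self.init_model()

    def init_model(self):
        # Shared setup (e.g. loading pretrained weights) would go here.
        self.log.append("init_model")

    def optimize(self, step_name):
        # Shared update step; here it only records that the step happened.
        self.log.append(f"optimize:{step_name}")

    def _run(self):
        raise NotImplementedError("each model's instructor defines its own training process")

    def run(self):
        self._run()
        return self.log


class MyGANInstructor(BasicInstructorSketch):
    """Hypothetical instructor for a new model: only the training process differs."""

    def _run(self):
        self.optimize("pretrain_generator")
        for epoch in range(2):  # illustrative number of adversarial epochs
            self.optimize(f"adv_generator_{epoch}")
            self.optimize(f"adv_discriminator_{epoch}")
```

Calling `MyGANInstructor().run()` returns the ordered list of steps, starting with `init_model` from the base class and followed by the subclass's own schedule.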

- Visualization

  Use `utils/visualization.py` to visualize the log files, including model loss and metric scores. Customize your log files in `log_file_list`, which should contain no more than `len(color_list)` entries. Log filenames should exclude the `.txt` extension.
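The two constraints on `log_file_list` are consistent with each log file being paired with one plot color and the `.txt` extension being appended when the file is opened. A minimal sketch of that pairing, assuming the logs live in a `log/` directory; `resolve_log_files` and the example names are hypothetical and not taken from `utils/visualization.py`.

```python
import os


def resolve_log_files(log_dir, log_file_list, color_list):
    """Pair each log name with a plot color and build its full .txt path.

    Hypothetical helper: names are given without the .txt extension,
    and there can be at most one log file per available color.
    """
    if len(log_file_list) > len(color_list):
        raise ValueError(
            f"{len(log_file_list)} log files but only {len(color_list)} colors"
        )
    pairs = []
    for name, color in zip(log_file_list, color_list):
        if name.endswith(".txt"):
            raise ValueError(f"log name {name!r} should exclude .txt")
        pairs.append((os.path.join(log_dir, name + ".txt"), color))
    return pairs
```

Failing fast on too many files avoids silently dropping logs that `zip` would otherwise truncate.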

- Logging

  TextGAN-PyTorch uses Python's `logging` module to record the running process, such as the generator's loss and metric scores. For convenient visualization, two identical log files are saved, in `log/log_****_****.txt` and `save/**/log.txt` respectively. In addition, at each log step the code automatically saves the models' state dicts and a batch of generator samples to `./save/**/models` and `./save/**/samples`, where `**` depends on your hyper-parameters.
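Writing the same records to two files can be done with the standard `logging` module by attaching two `FileHandler`s to one logger. This is a minimal sketch of that idea, not the repository's logging setup; `create_dual_logger` and the paths passed to it are hypothetical.

```python
import logging
import os


def create_dual_logger(name, log_path, save_path):
    """Return a logger that writes every record to two files at once.

    Hypothetical helper: the same messages land in both paths,
    e.g. one under log/ and one under save/<run>/.
    """
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.propagate = False  # avoid duplicate output via the root logger
    for old in list(logger.handlers):  # make repeated calls idempotent
        logger.removeHandler(old)
        old.close()
    formatter = logging.Formatter("%(message)s")
    for path in (log_path, save_path):
        os.makedirs(os.path.dirname(path) or ".", exist_ok=True)
        handler = logging.FileHandler(path, mode="w")
        handler.setFormatter(formatter)
        logger.addHandler(handler)
    return logger
```

Each `logger.info(...)` call is then flushed to both files, so one copy can be kept with the saved models while the other is read by the visualization script.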

- Running Signal

  You can easily control the training process with the class `Signal` (see `utils/helpers.py`), which is driven by the dictionary file `run_signal.txt`. To use `Signal`, edit the local file `run_signal.txt`: for example, setting `pre_sig` to `False` makes the program stop the pre-training process and move on to the next training phase. This is convenient for stopping training early once you consider the current training sufficient.
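The signal mechanism amounts to re-reading a small dictionary file between training steps. A minimal sketch, assuming `run_signal.txt` holds a Python dict literal such as `{'pre_sig': True, 'adv_sig': True}`; the `SignalSketch` class, the `adv_sig` key, the `pretrain` loop, and the file layout are assumptions, so check `utils/helpers.py` for the real format.

```python
import ast


class SignalSketch:
    """Hypothetical file-driven training switch."""

    def __init__(self, signal_file):
        self.signal_file = signal_file
        self.pre_sig = True
        self.adv_sig = True
        self.update()

    def update(self):
        # Re-read the file; literal_eval accepts only Python literals, not arbitrary code.
        with open(self.signal_file) as f:
            signal_dict = ast.literal_eval(f.read())
        self.pre_sig = signal_dict.get("pre_sig", True)
        self.adv_sig = signal_dict.get("adv_sig", True)


def pretrain(signal, max_epochs):
    """Run pre-training epochs until done or until pre_sig is switched off."""
    epochs_run = 0
    for _ in range(max_epochs):
        signal.update()
        if not signal.pre_sig:
            break  # pre_sig set to False: move on to the next phase
        epochs_run += 1
    return epochs_run
```

Because the file is re-read every epoch, editing it while training runs takes effect at the next check without restarting the process.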

- Automatically select GPU

  In `config.py`, the program automatically selects the GPU device with the lowest `GPU-Util` reported by `nvidia-smi`. This feature is enabled by default. To select a GPU device manually, uncomment the `--device` argument in `run_[model_name].py` and specify a GPU device on the command line.
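Selecting the least-busy GPU boils down to reading each device's utilization and taking the minimum. A minimal sketch, assuming utilization is read with `nvidia-smi`'s CSV query mode; this is not the code in `config.py`, and the function names are hypothetical. Parsing is kept separate from the subprocess call so it can be checked without a GPU.

```python
import subprocess


def parse_gpu_utilization(smi_output):
    """Parse `nvidia-smi --query-gpu=utilization.gpu --format=csv,noheader,nounits` output.

    Each non-empty line holds one GPU's utilization percentage, in device order.
    """
    return [int(line.strip()) for line in smi_output.strip().splitlines() if line.strip()]


def least_utilized_gpu(smi_output):
    """Return the index of the GPU with the lowest utilization (first one on ties)."""
    utils = parse_gpu_utilization(smi_output)
    if not utils:
        raise ValueError("no GPUs reported")
    return min(range(len(utils)), key=utils.__getitem__)


def query_nvidia_smi():
    """Call nvidia-smi (requires an NVIDIA driver); Python 3.6-compatible arguments."""
    return subprocess.run(
        ["nvidia-smi", "--query-gpu=utilization.gpu", "--format=csv,noheader,nounits"],
        stdout=subprocess.PIPE,
        universal_newlines=True,
        check=True,
    ).stdout
```

On a machine with GPUs, `least_utilized_gpu(query_nvidia_smi())` gives a device index; utilization is only a snapshot, so a GPU that looks idle may become busy right after it is chosen.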

-

run file: run_seqgan.py

-

Instructors: oracle_data, real_data

-

Models: generator, discriminator

-

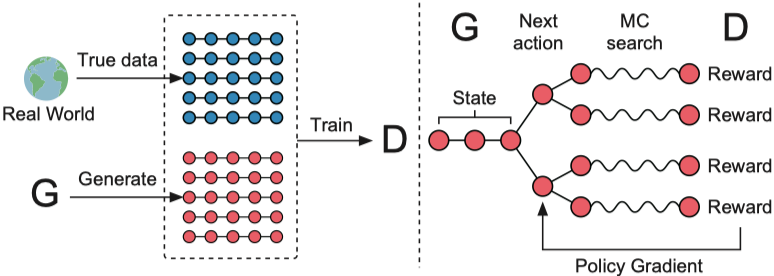

Structure (from SeqGAN)

-

run file: run_leakgan.py

-

Instructors: oracle_data, real_data

-

Models: generator, discriminator

-

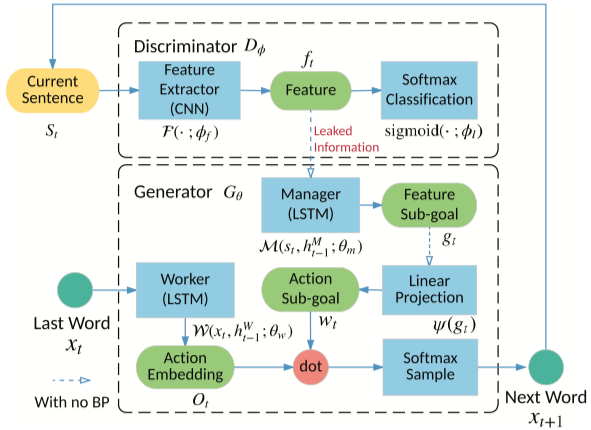

Structure (from LeakGAN)

-

run file: run_maligan.py

-

Instructors: oracle_data, real_data

-

Models: generator, discriminator

-

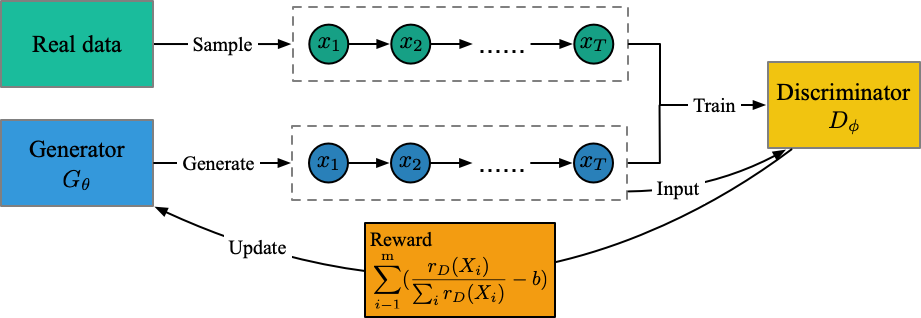

Structure (from my understanding)

-

run file: run_jsdgan.py

-

Instructors: oracle_data, real_data

-

Models: generator (No discriminator)

-

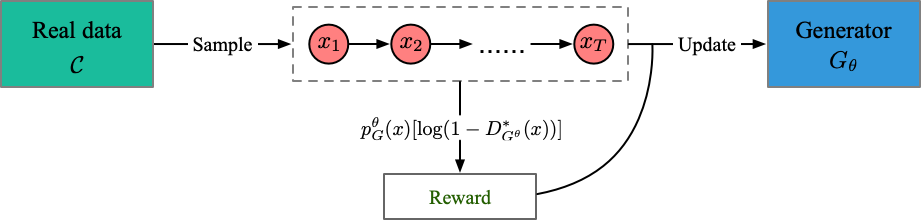

Structure (from my understanding)

- RelGAN
  - run file: `run_relgan.py`
  - Instructors: `oracle_data`, `real_data`
  - Models: `generator`, `discriminator`
  - Structure (from my understanding)

- DPGAN
  - run file: `run_dpgan.py`
  - Instructors: `oracle_data`, `real_data`
  - Models: `generator`, `discriminator`
  - Structure (from DPGAN)

- DGSAN
  - run file: `run_dgsan.py`
  - Instructors: `oracle_data`, `real_data`
  - Models: `generator`, `discriminator`

- CoT
  - run file: `run_cot.py`
  - Instructors: `oracle_data`, `real_data`
  - Models: `generator`, `discriminator`
  - Structure (from CoT)

- SentiGAN
  - run file: `run_sentigan.py`
  - Instructors: `oracle_data`, `real_data`
  - Models: `generator`, `discriminator`
  - Structure (from SentiGAN)

- CatGAN
  - run file: `run_catgan.py`
  - Instructors: `oracle_data`, `real_data`
  - Models: `generator`, `discriminator`
  - Structure (from CatGAN)